Incremental knowledge base construction using DeepDive Shin et al., VLDB 2015

When I think about the most important CS foundations for the computer systems we build today and will build over the next decade, I think about

- Distributed systems

- Database systems / data stores (dealing with data at rest)

- Stream processing (dealing with data in motion)

- Machine learning (extracting information from data)

(What did I miss? Anything you’d add to the list?)

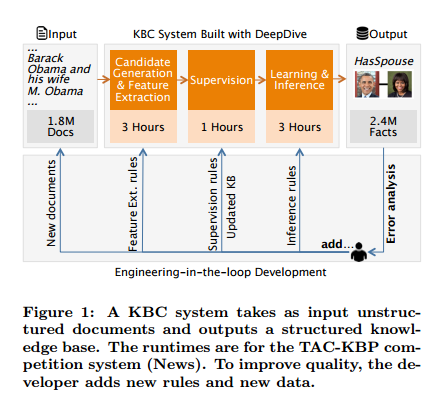

Regular readers will no doubt have noticed that these are the subject areas I most often cover on The Morning Paper. I’ve chosen today’s paper as representative of a large body of work at Stanford on a system called DeepDive. DeepDive sits at a very interesting intersection of the above topics, and its goal is to build a knowledge base – stored in a relational database – from information in large volumes of semi-structured and unstructured data. Such data is sometimes called dark data, and creating a knowledge base from it is the task of knowledge base construction (KBC).

The ultimate goal of KBC is to obtain high-quality structured data from unstructured information. These databases are richly structured with tens of different entity types in complex relationships… they can ingest massive numbers of documents – far outstripping the document counts of even well-funded human curation efforts.

The most important measures of KBC systems are precision (how often a claimed tuple is correct), and recall (of all the possible tuples to extract, how many are actually extracted). DeepDive itself has been used to build dozens of high-quality KBC systems (see descriptions of some of them at http://deepdive.stanford.edu/showcase/apps), and won KBC competitions.

In all cases, we have seen the process of developing KBC systems is iterative; quality requirements change, new data sources arrive, and new concepts are needed in the application. This led us to develop techniques to make the entire pipeline incremental in the face of changes both to the data and to the DeepDive program. Our primary technical contributions are to make the grounding and inference phases more incremental.

The incremental techniques improved system performance by two-orders of magnitude in five real KBC scenarios, while keeping the quality high enough to aid in the development process. Before we can understand those techniques, we need to cover some background on the overall KBC process itself.

KBC, the big picture

The process begins with a corpus of documents, and ends with a relational database containing facts extracted from those documents. KBC systems are typically developed incrementally with developers reviewing the quality of fact extraction (precision and recall) and adding new rules and data as needed. The resulting database will contain entities, relations, mentions, and relation mentions.

- An entity is a real-world person, place, or thing

- A relation associates two or more entities

- A mention is a span of text that refers to an entity or relationship

- A relation mention is a phrase that connects two mentions participating in a relation (e.g. London is the capital of England).

Ingestion and extraction of candidate mappings and features

As documents are ingested they are split into sentences. Each sentence is marked up using standard NLP pre-processing tools, including HTML stripping, part-of-speech tagging, and linguistic parsing, and then stored as a row in the database.

Once the document sentences are loaded and pre-processed, DeepDive looks for candidate mappings and features.

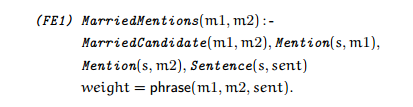

Candidate mappings are extracted using SQL queries. These extract possible mentions, relations, and entities. At this stage we go for high recall (get anything that might be interesting) and don’t worry too much about precision. For example, if we’re building a knowledge base including information about married partners, than we might extract a MarriedCandidate mapping every time two people are mentioned in the same sentence.

Features are supplementary information that the subsequent learning phases can use to learn which of the candidate mappings should indeed be considered true relations with high probability. In the marriage example, we might extract as a feature the phrase that appears between the two mentions. The system will learn a weight indicating how much confidence to place in a given phrase as an indication of a true relation.

In general, phrase could be an arbitrary UDF that operates in a per-tuple fashion. This allows DeepDive to support common examples of features such as “bag-of-words” to context-aware NLP features, to highly domain-specific dictionaries and ontologies. In addition to specifying sets of classifiers, DeepDive inherits Markov Logic’s ability to specify rich correlations between entities via weighted rules.

Supervised learning phase

There are two techniques for providing labelled training data. One is simply to provide true or false labels for evidence relations. The other is a technique called distant supervision. Distant supervision uses a supplementary dataset of facts to create larger sets of labelled data. Continuing the marriage example, suppose we have an external database of trusted facts indicating spouse relationships, and we choose a particular relationship P1 is married to P2. We then label as true every candidate marriage mapping the system has extracted that mentions P1 and P2. This will of course label as true indications of marriage some phrases that really aren’t, for example, “Crypto Bob (P1) exchanged messages with Crypto Alice (P2)”.

At first blush, this rule seems incorrect. However, it generates noisy, imperfect examples of sentences that indicate two people are married. Machine learning techniques are able to exploit redundancy to cope with the noise and learn the relevant phrases.

Inference and learning using a factor graph

Now the fun begins…

A DeepDive program defines a standard structure called a factor graph through inference rules specified by the developer. From the DeepDive website:

A rule expresses concepts like “If John smokes then he is likely to have cancer” and, in other words, describes the factor function of a factor and which variables are connected to this factor. Each rule has a weight (either computed by DeepDive or assigned by the user), which represents the confidence in the correctness of the rule. If a rule has a high positive weight, then the variables appearing in the rule are likely to take on values that would make the rule evaluate to true.

The factor graph contains two types of nodes: variables and factors. Variables may be either evidence variables (we know their true value, as a result of the supervision phase) or query variables whose value we want to predict. Factors define the relationships between the variables in the graph, “Each factor can be connected to many variables and comes with a factor function to define the relationship between these variables.”

Inference now takes place over the factor graph, using Gibbs sampling. Again, the website explains this well:

Exact inference is an intractable problem on factor graphs, but a commonly used method in this domain is Gibbs sampling. The process starts from a random possible world and iterates over each variable v, updating its value by taking into account the factor functions of the factors that v is connected to and the values of the variables connected to those factors (this is known as the Markov blanket of v). After enough iterations over the random variables, we can compute the number of iterations during which each variable had a specific value and use the ratio between this quantity and the total number of iterations as an estimate of the probability of the variable taking that value.

Thus DeepDive uses joint inference, determining the values of all events at the same time. This allows events to influence each other if they are directly or indirectly connected through influence rules.

Closing the loop

At the end of the learning and inference phrase, DeepDive has computed a marginal probability p for each candidate fact. Typically a user then selects facts with high confidence (e.g. p > 0.95) to produce the final knowledge base. It’s unlikely the system will be perfect first time around:

Typically, the user needs to inspect errors and repeat, a process that we call error analysis. Error analysis is the process of understanding the most common mistakes (incorrect extractions, too-specific features, candidate mistakes, etc.) and deciding how to correct them. To facilitate error analysis, users write standard SQL queries.

Incremental development

I only have space to cover this very briefly, see §3 in the paper for full details. DeepDive supports incremental grounding and_incremental inference_. Incremental grounding is the process of updating an existing factor graph based on new information, and incremental inference is the process of updating the learned probabilities given the change in the graph.

For incremental grounding, DeepDive uses standard incremental view maintenance techniques from the database community. Specifically, it uses the DRED algorithm of Gupta et al. (1993).

For incremental inference two different approaches are implemented – neither dominates the other and so the system chooses which to use at runtime using a simple set of rules based on the kinds of changes made to the graph.

- The sampling approach stores a set of possible worlds sampled from the original distribution, rather than storing all possible worlds, and then performs inference over these samples. “We use a (standard) Metropolis-Hastings scheme to ensure convergence to the updated distribution.”

- The variational approach stores a factor graph with fewer factors that approximates the original distribution. On the smaller graph, running inference and learning is often faster.