POTS: Protective optimization technologies, Kulynych, Overdorf et al., arXiv 2019

With thanks to @TedOnPrivacy for recommending this paper via Twitter.

Last time out we looked at fairness in the context of machine learning systems, coming to the realisation that you can’t define ‘fair’ solely from the perspective of an algorithm and the data it is trained on. Start pulling on that thread and you end up with papers such as ‘Delayed impact of fair machine learning’ that consider the longer-term implications for the groups a system was intended to protect, once it is deployed and interacts with the real world, creating feedback loops in a causal graph. Today’s paper looks even wider, encompassing the total impact of an algorithm, as part of a system, embedded in an environment: not only the impact on the groups explicitly considered by that algorithm, but also on groups outside the consideration (the ‘utility function’) of the service provider. For example, navigational systems such as Waze can have negative impacts on communities near highways through which they route much more traffic, and Airbnb may have perfectly fair algorithms from the perspective of participants in the Airbnb ecosystem while also damaging the rental market and housing supply for locals and others outside ‘the system.’

Kulynych, Overdorf, et al., are firmly on the side of ‘the little person’ raging against the algorithmically optimised machines in the hands of powerful service providers. They want to empower those disadvantaged by such systems to fight back, using protective optimisation technologies (POTs). In short, this involves manipulating the inputs to the system so as to tip things into a better balance (at least as seen by those deploying the POT).

We argue that optimisation-based systems are developed to capture and manipulate behavior and environments for the extraction of value. As such, they introduce broader risks and harms for users and environments beyond the outcome of a single algorithm within that system. These impacts go far beyond the bias and discrimination stemming from algorithmic outputs fairness frameworks consider.

Taking a wider perspective

Borrowing from Michael Jackson’s theory of requirements engineering, the authors consider four distinct aspects of a system and the environment it operates in:

- The domain assumptions, K, capture what is known about the application domain (the environment the system operates in), before deployment of the system

- The requirements, R, capture the desired state of the application domain once the system has been deployed

- The specification, S, describes how a system satisfies requirements R, in sufficient detail that the system could be implemented.

- The program, P, is an implementation of the machine derived from the specification.

We can introduce problems at all of these levels: with faulty domain assumptions, all bets are off. Badly derived requirements lead to an incorrect description of the problem. Badly derived specifications lead to an incorrect description of the solution. And of course there can be badly implemented programs.
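In Jackson and Zave's requirements-engineering work the four pieces are tied together by a single entailment, usually written as below (this is the standard notation from that literature; the paper's own presentation may differ). It says the domain assumptions, together with a machine that meets its specification, must be enough to guarantee the requirements:

$$K, S \vdash R$$

Read this way, a system can satisfy $S$ perfectly and still fail $R$ whenever $K$ is wrong, and nothing inside $S$ can reveal that. The program $P$ only has to be shown to implement $S$.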

Most fairness frameworks focus on describing $S$ independent of $K$ and $R$… focusing on $S_{fair}$ has a number of repercussions. First, it does not reflect how harms manifest themselves in the environment… Second, focusing on achieving fairness for users might leave out the impact of the system on phenomena in the application domain that is not shared with the machine… Third, the focus on algorithms abstracts away potential harms of phenomena in the machine.

Negative externalities

Economists use the term negative externalities to describe the negative effects of a system, such as when its consumption, production, or investment decisions cause significant repercussions for users, non-users, or the environment. Traffic routing apps such as Waze, for example, can end up worsening congestion for all.
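The congestion point can be made concrete with Pigou's two-road network, a standard textbook illustration of a congestion externality (not an example from the paper). One unit of traffic chooses between road A, which always takes 1 hour, and road B, whose travel time equals the fraction x of traffic using it. A minimal sketch comparing what happens when every driver picks the road that is fastest for them with the split that minimises total travel time:

```python
# Pigou's two-road network: a textbook illustration of a congestion
# externality (not taken from the POTs paper).
# One unit of traffic travels from s to t over two parallel roads:
#   road A: travel time is always 1.0, regardless of load
#   road B: travel time equals x, the fraction of traffic using it


def total_travel_time(x: float) -> float:
    """Total (summed) travel time when a fraction x of drivers use road B."""
    return (1.0 - x) * 1.0 + x * x


def selfish_equilibrium() -> float:
    """Each driver picks the faster road. Road B (time x) is never slower
    than road A (time 1) for x <= 1, so every driver ends up on road B."""
    return 1.0


def social_optimum(steps: int = 1000) -> float:
    """Grid-search the split of traffic that minimises total travel time."""
    return min((i / steps for i in range(steps + 1)), key=total_travel_time)


x_eq = selfish_equilibrium()
x_opt = social_optimum()
print(f"selfish: x={x_eq:.2f}, total time={total_travel_time(x_eq):.3f}")   # x=1.00, 1.000
print(f"optimal: x={x_opt:.2f}, total time={total_travel_time(x_opt):.3f}")  # x=0.50, 0.750
```

Individually rational routing costs 1.0 in total against an optimum of 0.75: each driver who moves onto road B slows down everyone already on it, a cost the switching driver never pays. An app that optimises each user's trip in isolation tends to push traffic towards the selfish outcome.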

Optimization is a technique long established in, for example, resource allocation. However, its increasing supremacy in systems that reconfigure every aspect of life (education, care, health, love, work, etc.) for extraction of value is new and refashions all social, political, and governance questions into economic ones. This shift allows companies to commodify aspects of life in a way that conflates hard questions around resource allocation with maximization of profit and management of risk. The impact is subtle but fundamental…

Even a well-intentioned (idealized fair-by-design) service provider can cause negative externalities where their view of social utility and the application domain is incomplete or inaccurate.

Mitigating externalities becomes a greater issue when incentives, capacities, and power structures are not aligned.

Fighting back with POTs

If existing fairness frameworks offer an incomplete view on the potential negative externalities of a system, and can only be applied at the discretion of the service provider, is there anything that can be done externally to mitigate negative system impact?

Protective Optimisation Technologies are designed to be deployed by actors affected by an optimisation system. "Their goal is to shape the application domain in order to reconfigure the phenomena shared with the machine to address harms in the environment."

POTs are intended to eliminate the harms induced by the optimization system, or at least mitigate them. In other cases, POTs may shift the harms to another part of the problem space where the harm can be less damaging for the environment or can be easier to deal with.

It’s a bit like playing a game of adversarial machine learning, but out in the real world. There are three different kinds of inputs that can potentially be influenced: (i) inputs users generate when interacting with the system, (ii) inputs about individuals and environments the service provider receives from third parties (e.g., buying in data), and (iii) inputs from markets and regulators that define the political and economic context in which the system operates.

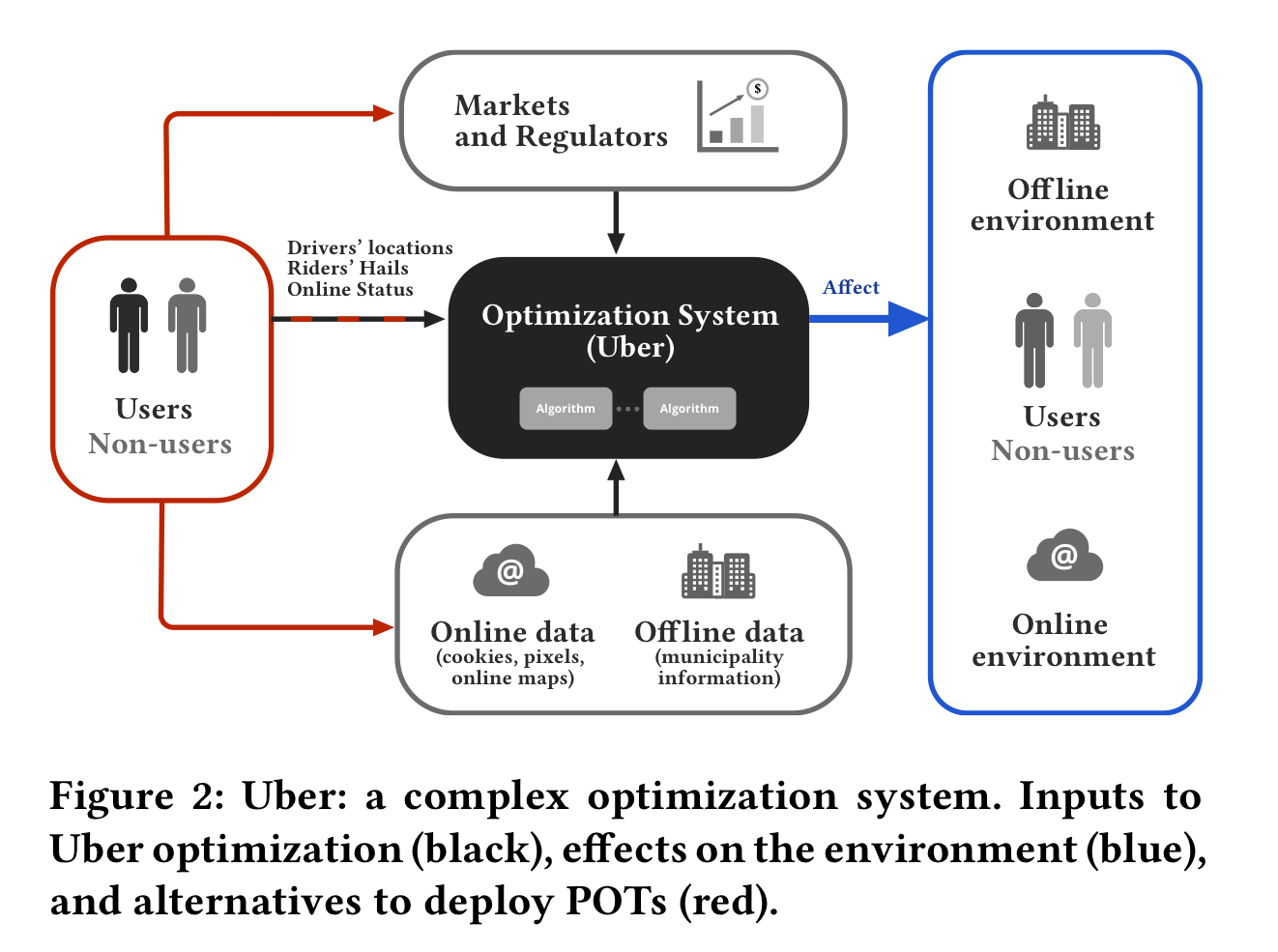

As a well-known example, consider Uber: it combines these inputs using managerial and mathematical optimisation to match riders to drivers and set ride prices. "Reports and studies demonstrate that these outcomes cause externalities: Uber’s activity increases congestion and misuse of bike lanes, increases pollution, and decreases public support for transit." Uber’s requirements $R$ only consider matching drivers to riders with associated dynamic pricing.

Considering the three categories of inputs, the behaviour of Uber’s system can be influenced by, for example, (i) changing user inputs to the system, such as drivers acting in cohort to induce surges, (ii) changing the online or offline signals gathered by Uber (e.g. changing the city's urban planning), or (iii) changing regulations or mandating salary increases.

The goal of a POT is finding actionable – feasible and inexpensive – modifications to the inputs of the optimization system so as to maximize social utility…
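One way to write that goal down, in notation of our own (not taken from the paper): let $x$ be the inputs the affected actors can influence, $\Delta$ the set of feasible modifications, $x \oplus \delta$ the inputs after applying a modification $\delta$, $M$ the provider's optimisation system, $U$ a measure of social utility in the environment, and $B$ a budget on what the actors can afford to spend:

$$\delta^{\star} = \arg\max_{\delta \in \Delta} \; U\big(M(x \oplus \delta)\big) \quad \text{subject to} \quad \mathrm{cost}(\delta) \le B$$

What matters is where the free variable sits: $M$ is treated as fixed and out of reach, and only the inputs move. A fairness framework, by contrast, changes $M$ itself, which only the provider can do.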

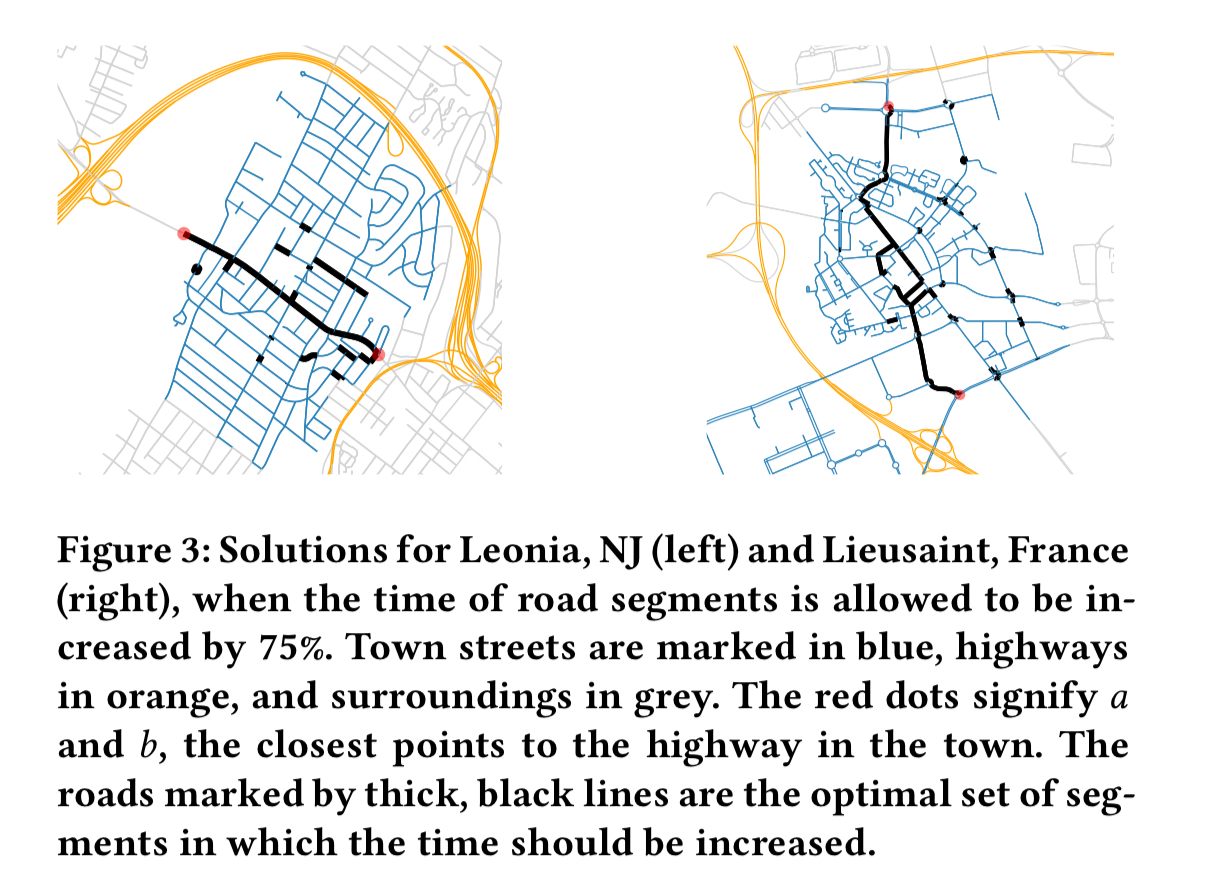

The first example in the paper looks at how to combat negative externalities caused by Waze for residents of towns and neighbourhoods adjacent to busy routes. Three towns reporting such issues are Leonia in New Jersey, Lieusaint in France, and Fremont in California. The authors use route planning software to work out what local road changes (specifically, what slowdown in local traffic) are required to stop Waze from sending traffic through the town.
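A toy version of the town's problem, as a minimal sketch: the road network, the travel times, and which segments count as town roads are all invented for illustration, and plain Dijkstra stands in for the real route planning software the authors used. The hypothetical `min_slowdown` helper raises a uniform per-segment delay on the town roads until the fastest route no longer passes through the town:

```python
import heapq

# Hypothetical toy road network (travel times in minutes, directed edges).
# A -> H -> B is the highway; A -> T1 -> T2 -> B cuts through a town.
BASE_GRAPH = {
    "A": {"H": 10.0, "T1": 5.0},
    "H": {"B": 16.0},
    "T1": {"T2": 6.0},
    "T2": {"B": 8.0},
    "B": {},
}
# Segments the town controls and could slow down (speed bumps, lower
# limits, turn restrictions, ...). An illustrative assumption.
TOWN_EDGES = {("A", "T1"), ("T1", "T2"), ("T2", "B")}


def shortest_path(graph, source, target):
    """Plain Dijkstra; returns (travel_time, list_of_nodes)."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == target:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry, a shorter path was already found
        for nxt, w in graph[node].items():
            nd = d + w
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]


def with_slowdown(graph, edges, delay):
    """Copy of graph with `delay` minutes added to every edge in `edges`."""
    return {
        u: {v: w + (delay if (u, v) in edges else 0.0) for v, w in nbrs.items()}
        for u, nbrs in graph.items()
    }


def uses_town(path):
    """True if any consecutive pair of nodes on the path is a town segment."""
    return any((u, v) in TOWN_EDGES for u, v in zip(path, path[1:]))


def min_slowdown(graph, source, target, max_delay=60):
    """Smallest whole-minute delay per town segment that reroutes traffic."""
    for delay in range(max_delay + 1):
        slowed = with_slowdown(graph, TOWN_EDGES, delay)
        _, path = shortest_path(slowed, source, target)
        if not uses_town(path):
            return delay
    return None  # no delay within the budget moves the route


print(shortest_path(BASE_GRAPH, "A", "B"))  # (19.0, ['A', 'T1', 'T2', 'B'])
print(min_slowdown(BASE_GRAPH, "A", "B"))   # 3
```

With these numbers the through-town route takes 19 minutes against 26 on the highway, and a 3-minute delay on each of the three town segments (28 minutes in total) is the smallest whole-minute change that sends traffic back to the highway. Two caveats carry over to the real case: residents pay the same delay on their own streets, and the traffic does not disappear but moves to the highway, shifting the harm rather than eliminating it.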

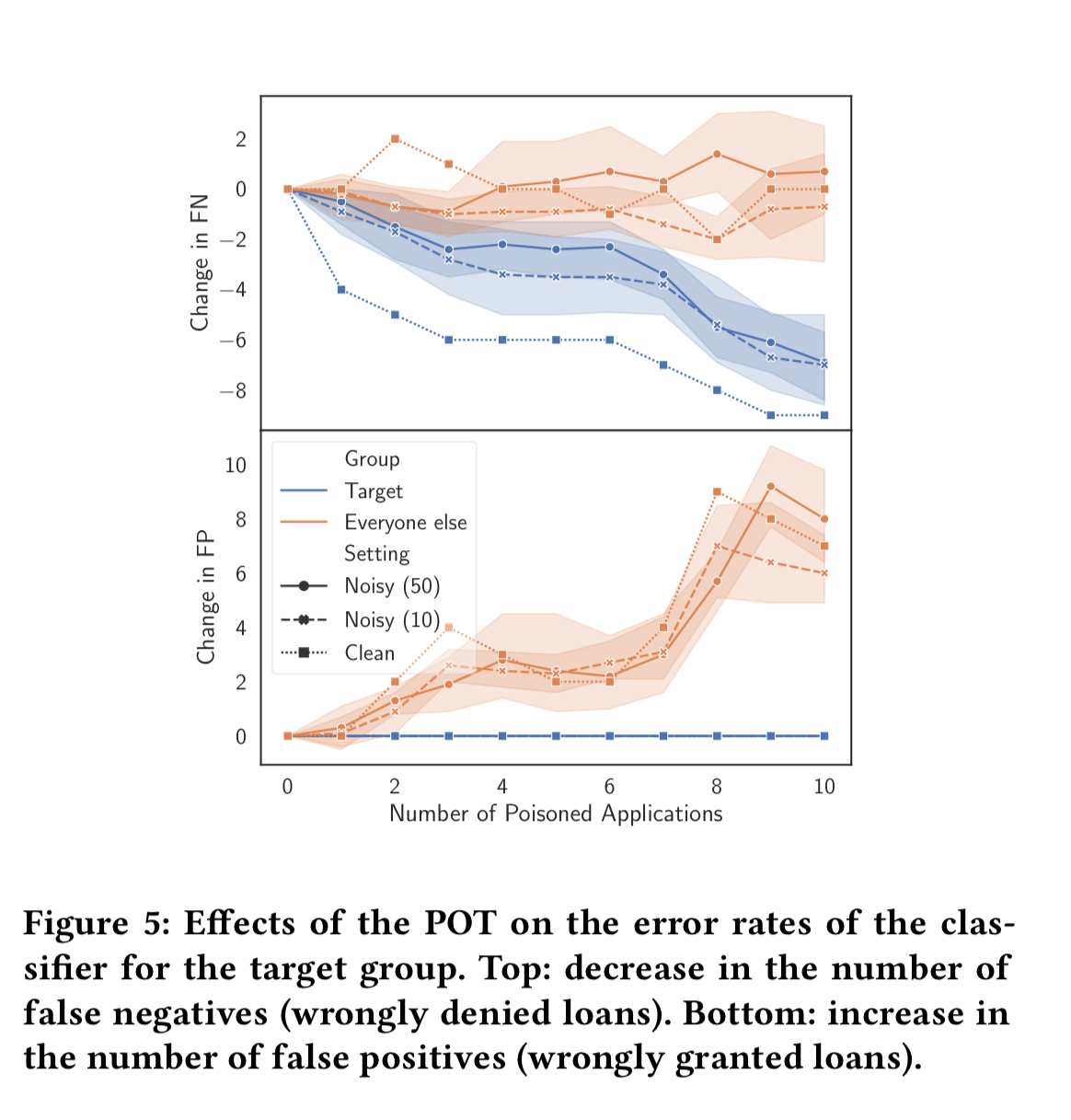

A second example looks at credit scoring. Here a group that perceives itself to be disadvantaged by an algorithm has one option at its disposal: change the inputs to the machine learning system by taking and repaying loans. "The POT must inform the collective about who and for which loan they should apply for and repay, in such a way that they reduce the false-negative rate on the target group after retraining." This is a bit like the concept of poisoning in adversarial machine learning, but used with the intention of decreasing an error for a given target group.
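A minimal poisoning-style sketch of the credit POT, under heavily simplified assumptions that are ours, not the paper's: the bank's model is a single approval cut-off on a one-dimensional score, retrained by minimising training errors; all the data are invented; and repaid loans taken by group members (from any lender) are assumed to reach the bank's training data, for example via a credit bureau. The hypothetical `plan_pot` helper looks for the smallest number of repaid loans that lowers the target group's false-negative rate after retraining:

```python
# Toy credit model: approve an applicant if score >= threshold.
# All data below are invented for illustration.
TRAIN = [(1, 0), (2, 0), (3, 0), (4, 1), (4, 0),
         (5, 0), (6, 1), (7, 1), (8, 1), (9, 1)]  # (score, repaid?)
TARGET = [4, 5, 5]          # scores of target-group members who would repay
DEFAULTERS = [3, 4, 5, 7]   # scores of applicants who would not repay


def train_threshold(data):
    """Pick the cut-off t (approve if score >= t) minimising training errors;
    ties go to the higher, more conservative cut-off."""
    candidates = sorted({s for s, _ in data}) + [float("inf")]

    def errors(t):
        # An error is approving a non-repayer or rejecting a repayer.
        return sum((s >= t) != bool(repaid) for s, repaid in data)

    return min(candidates, key=lambda t: (errors(t), -t))


def fnr(threshold, creditworthy_scores):
    """Share of would-repay target-group applicants who get rejected."""
    return sum(s < threshold for s in creditworthy_scores) / len(creditworthy_scores)


def fpr(threshold, defaulter_scores):
    """Share of would-default applicants who get approved."""
    return sum(s >= threshold for s in defaulter_scores) / len(defaulter_scores)


def plan_pot(train, target_scores, loan_score, max_loans=20):
    """Hypothetical POT: smallest number k of repaid loans, taken by group
    members with score `loan_score`, that lowers the target group's FNR
    once the bank retrains on the augmented data."""
    baseline = fnr(train_threshold(train), target_scores)
    for k in range(1, max_loans + 1):
        poisoned = train + [(loan_score, 1)] * k
        t = train_threshold(poisoned)
        if fnr(t, target_scores) < baseline:
            return k, t
    return None


t0 = train_threshold(TRAIN)
k, t1 = plan_pot(TRAIN, TARGET, loan_score=4)
print(t0, fnr(t0, TARGET), fpr(t0, DEFAULTERS))     # 6 1.0 0.25
print(k, t1, fnr(t1, TARGET), fpr(t1, DEFAULTERS))  # 2 4 0.0 0.75
```

Here two repaid loans at score 4 move the cut-off from 6 to 4, dropping the target group's false-negative rate from 1.0 to 0.0. The same retraining also approves more people who will not repay (the false-positive rate on the invented defaulter pool rises from 0.25 to 0.75), which is the shift of harm onto the bank that the authors warn about.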

Things get complicated pretty quickly though:

Unfortunately, the POT increases the false positives. That is, it shifts the harm to the bank, which would give more loans to people who cannot repay, increasing its risk.

There are also consequences for taking a loan that you can’t repay (e.g. dispossession), which make it unclear whether the POT is even in the interests of the group it was trying to protect.

Is it realistic?

The paper makes a good argument for considering the impacts of a system in the broadest sense, and not merely some measure of utility that is blind to many of the system externalities. But organising at the scale needed to disrupt the workings of a large service provider is not easy, and if it ends up in an arms race then that battle looks hard to win. The problem is real, but I’m not yet convinced that POTs are the answer.

The last word

The fact that a whole infrastructure for optimizing populations and environments is built in a way that minimizes their ability to object to or contest the use of their inputs for optimisation is of great concern to the authors, and should be to anyone who believes in choice, subjectivity, and democratic forms of government.

TeX math formatting seems to have failed in the blog post again. Not a big deal, just a minor annoyance – I think the only math-mode is in the quote “Most fairness frameworks focus on…”.

Interesting paper, though. Usually we see regulation as the answer to externalities: using governmental power to convert externalities into direct costs for the entities responsible for them. In other cases, popular movements can apply social and economic pressure (negative publicity, boycotts) to do the same thing indirectly. POTs are an interesting approach, related to the latter category but not really part of it.