The curious case of the PDF converter that likes Mozart: dissecting and mitigating the privacy risk of personal cloud apps Harkous et al., PoPET ’16

This is the paper that preceded “If you can’t beat them, join them,” which we looked at yesterday, and it’s well worth interrupting our coverage of CODASPY ’17 for. Harkous et al. study third-party apps that work on top of personal cloud services (e.g., Google Drive, Dropbox, Evernote, …). A careful analysis of 100 third-party apps in the Google Drive ecosystem showed that 64% of them request more permissions than they actually need. No surprise there, sadly! The really interesting part of the paper for me is where the authors investigate alternative permission models to discover what works best in helping users make informed privacy choices. Their Far-reaching Insights model (which we’ll get to soon!) is a brilliant invention.

…cloud permissions allow 3rd party apps to get access to any file the user has stored in the cloud… Put simply, the scale and quality of data that can be collected is both a privacy nightmare for unaware users and a goldmine for advertisers.

The rest of this review will proceed as follows:

- A quick summary of the findings from investigating existing apps and the permissions they request

- An analysis of permission models to see what best helps users to make privacy-informed choices

- A brief look at PrivySeal, the privacy-informing app store the authors built for Google Drive

- Suggestions from the authors for how cloud providers can improve their offerings to safeguard users’ privacy

You want access to what?

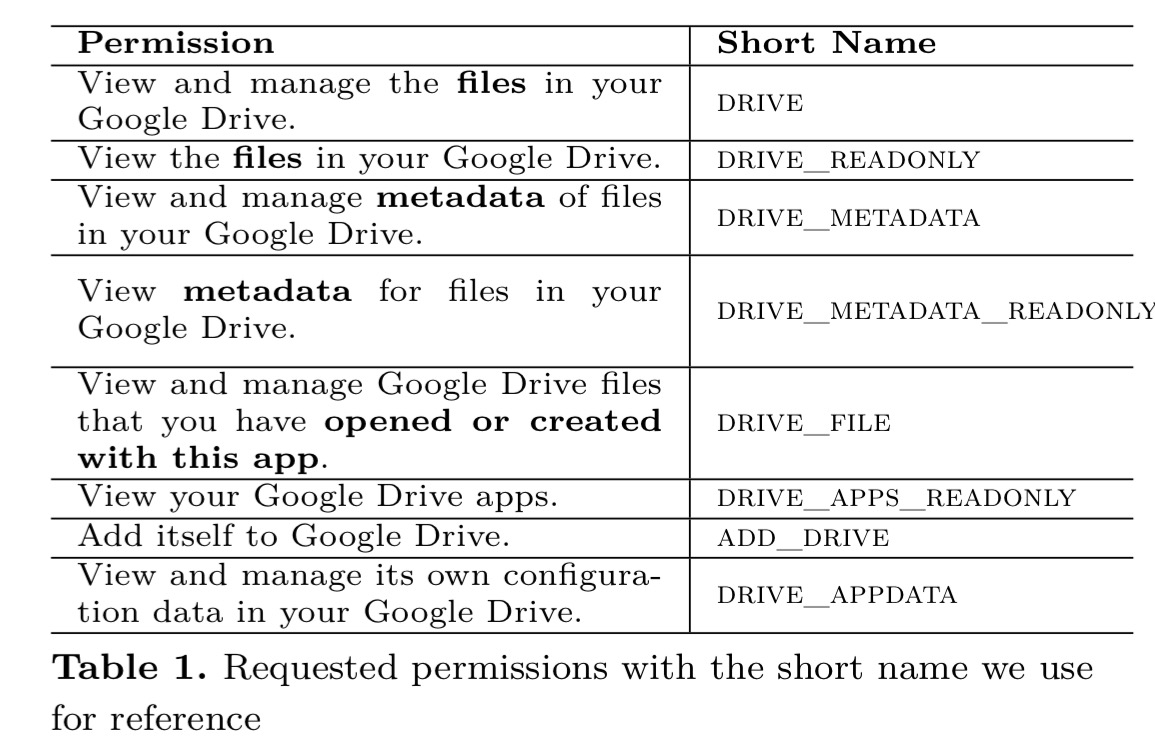

This study concerned Google Drive apps, but I’ve no reason to believe there wouldn’t be similar findings in other ecosystems. Here are the possible permissions that a Google Drive app can request:

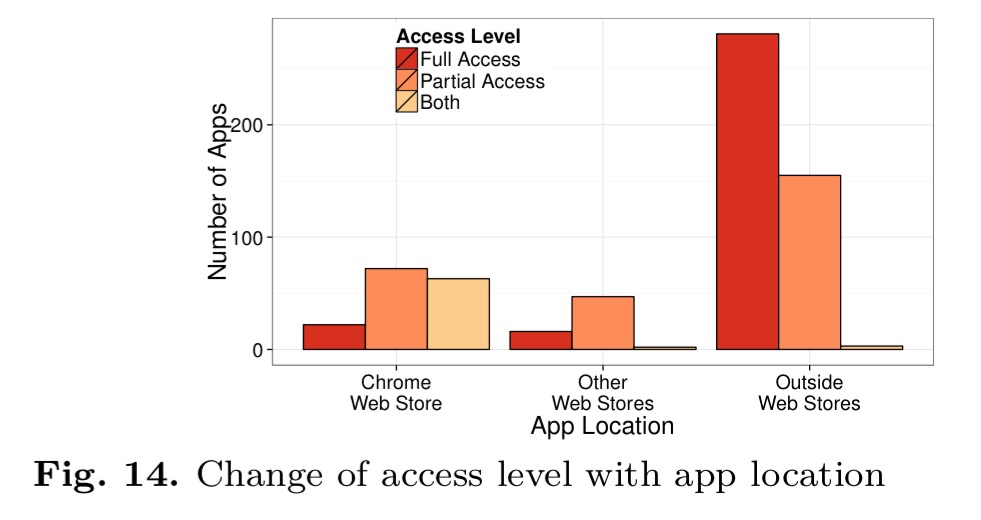

Finding out the permissions an app actually needs isn’t something you can easily automate, so the authors investigated 100 randomly selected apps out of the 420 “works with Google Drive” apps in the Google Chrome Web Store at the time of the study. When we get to PrivySeal, we’ll see that only around 25% of the apps that people install actually come from the official Chrome Web Store, and those that come from elsewhere tend to have lower privacy standards!

Analyzing the [application permissions], we found out that 64 out of 100 apps request unneeded permissions. In other words, the developers could have requested less invasive permissions with the current API provided by Google. In total, 76 out of the 100 apps requested full access to all the files in the user’s Google Drive. Moreover, the 64 over-privileged apps have actually all requested full access. Accordingly, in our sample, around 84% (64/76) of apps requesting full access are over-privileged.
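To make the two percentages in that quote concrete, here is a small sanity check of the arithmetic. The three counts are taken from the quote above; the variable names are my own.

```python
# Counts reported for the sample of 100 Google Drive apps.
sample_size = 100
full_access = 76        # apps requesting full access to all files
over_privileged = 64    # apps requesting more than they need (all request full access)

# Share of the whole sample that is over-privileged.
share_of_sample = over_privileged / sample_size

# Share of full-access apps that did not need full access.
share_of_full_access = over_privileged / full_access

print(f"{share_of_sample:.0%} of sampled apps are over-privileged")
print(f"{share_of_full_access:.0%} of full-access apps are over-privileged")
```

The second figure is the more striking one: in this sample, an app asking for full access is much more likely than not to be asking for too much.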

Far-reaching insights for privacy-informed consent

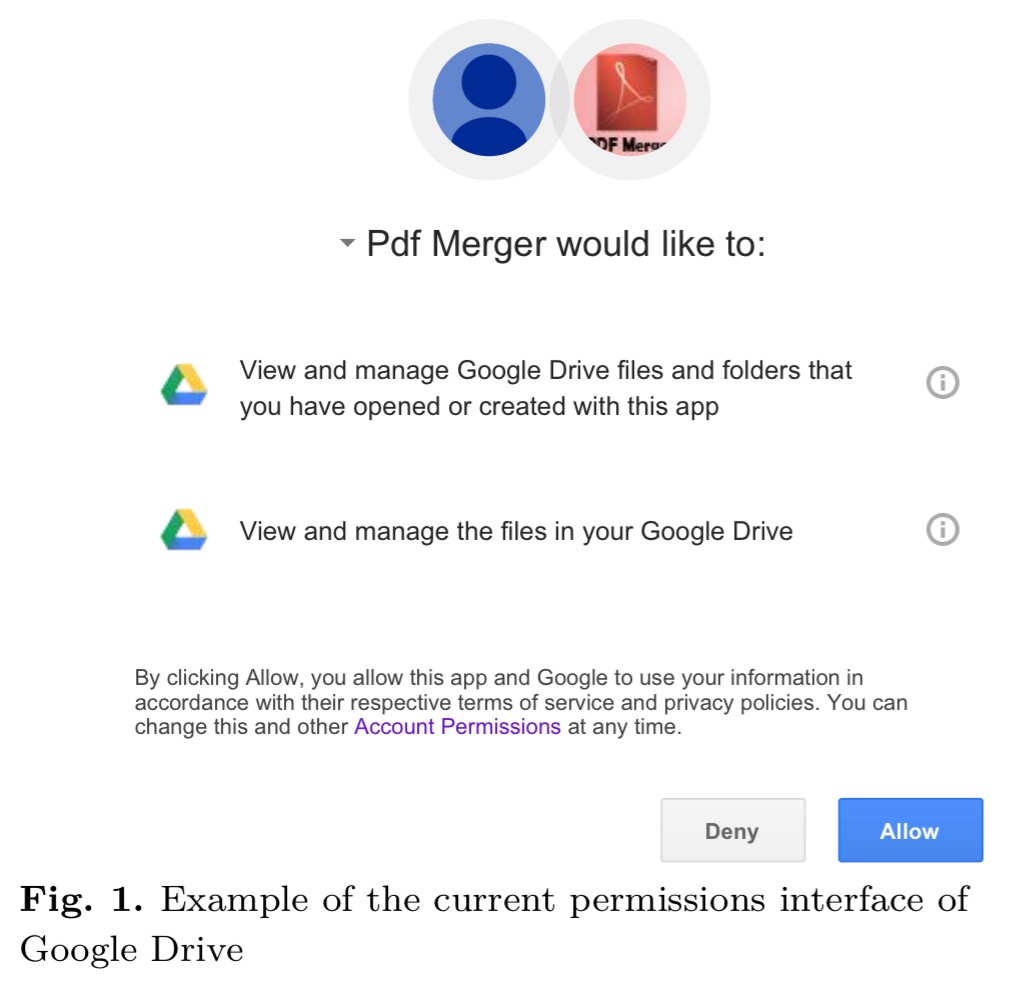

As we saw yesterday, the current permissions interface of Google Drive looks like this:

In the light of the risk that over-privileged apps pose, we propose three alternatives to the existing permission model before evaluating their efficacy.

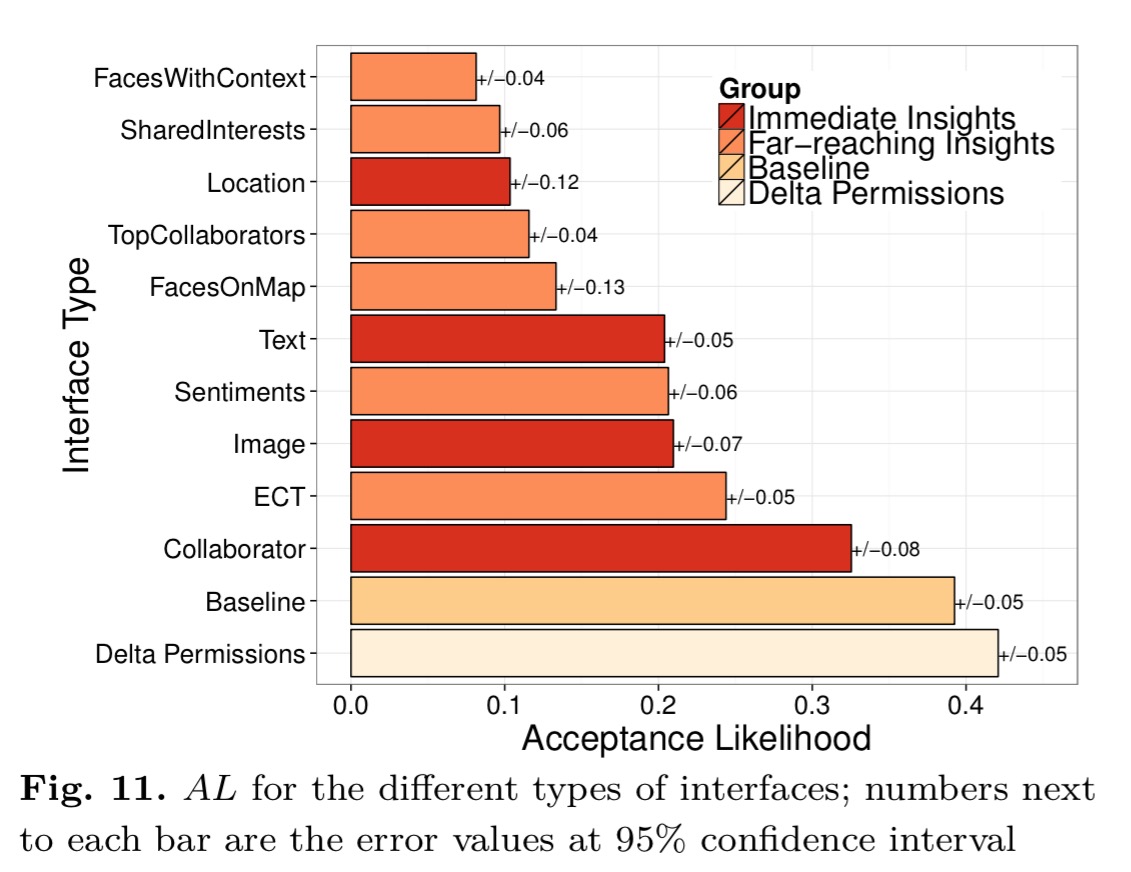

The evaluation was done with 210 users, against a baseline of the current permissions model. The efficacy of each model is measured using an acceptance likelihood (AL) metric: the percentage of times a user chooses to actually install an app after being presented with a permissions dialog for it.
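As a rough illustration of how such a metric could be computed, here is a minimal sketch. The function name, the record layout, and the toy decisions are all assumptions of mine for illustration; they are not the authors' code and not data from the study.

```python
from collections import defaultdict

def acceptance_likelihood(decisions):
    """Return {model: percentage of dialogs where the user chose to install}.

    `decisions` is an iterable of (model_name, installed) pairs, one per
    permission dialog shown to a participant.
    """
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for model, installed in decisions:
        shown[model] += 1
        if installed:
            accepted[model] += 1
    return {model: 100.0 * accepted[model] / shown[model] for model in shown}

# Made-up decisions, purely to exercise the function (NOT results from the paper).
toy_decisions = [
    ("baseline", True), ("baseline", True), ("baseline", False), ("baseline", True),
    ("far-reaching", False), ("far-reaching", True),
    ("far-reaching", False), ("far-reaching", False),
]

al = acceptance_likelihood(toy_decisions)
print(al)
```

A lower AL for an over-privileged app means the dialog did a better job of deterring the install.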

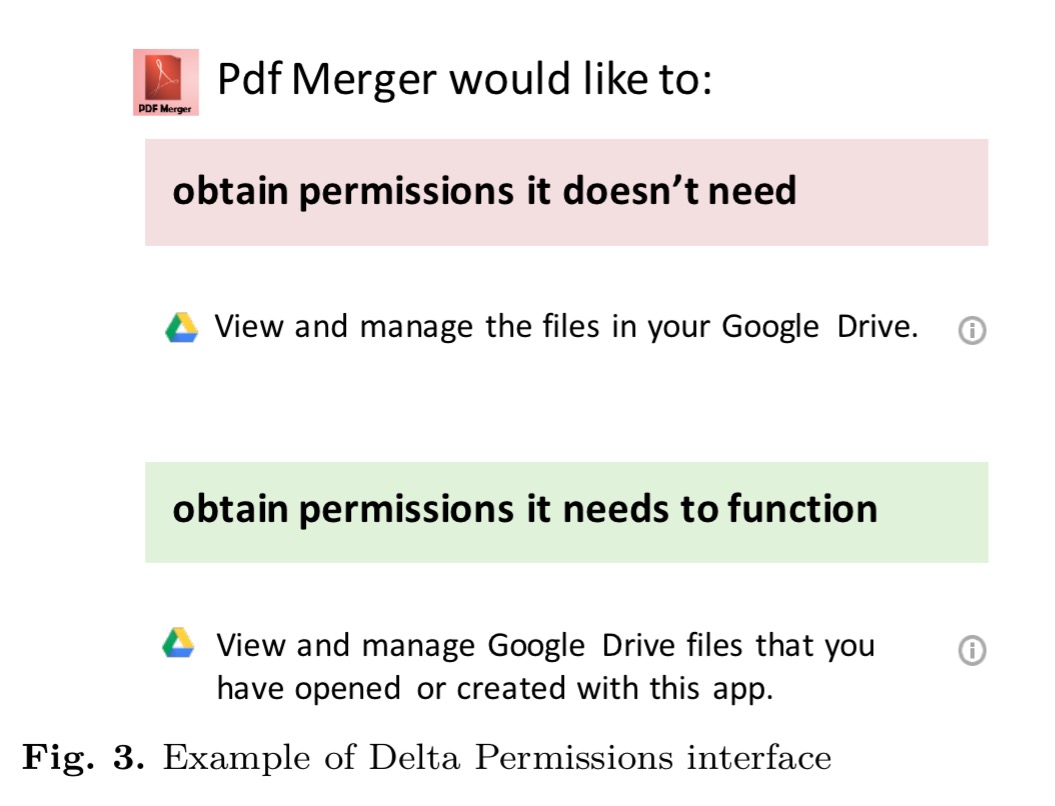

The first model is called Delta Permissions. In this model the permission dialog is modified to explicitly point out when an app requests more permissions than it actually needs, based on the hypothesis that users are less likely to install apps requesting unnecessary permissions.

That looks like it should raise a red flag in the user’s mind… And yet, “we found no evidence of any advantage that delta permissions can introduce, which means that telling our experiment’s participants explicitly about unneeded permissions did not help deter them from installing over-privileged apps.” A great reminder of the value of doing actual studies versus just coming up with a design you think is going to work well and shipping it!
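The core of the delta idea can be sketched as a comparison between what an app requests and what a reviewer judged it to need. This is only a sketch under assumptions: the function names are hypothetical, the scope strings are used as illustrative labels, and the "needed" set is assumed to come from a manual review like the one the authors performed.

```python
def unneeded_permissions(requested, needed):
    """Return the requested scopes that a reviewer judged unnecessary."""
    return set(requested) - set(needed)

def delta_warning(app_name, requested, needed):
    """Build the extra line a delta-style dialog could show, or None if clean."""
    extra = unneeded_permissions(requested, needed)
    if not extra:
        return None
    return f"{app_name} requests permissions it does not need: {', '.join(sorted(extra))}"

# Hypothetical app: asks for full Drive access but only needs per-file access.
print(delta_warning("PDF converter", requested={"drive"}, needed={"drive.file"}))

# Hypothetical well-behaved app: no warning is produced.
print(delta_warning("Note editor", requested={"drive.file"}, needed={"drive.file"}))
```

The study's finding is that surfacing this delta on its own was not enough to change behaviour, which is what motivates the two insight-based models that follow.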

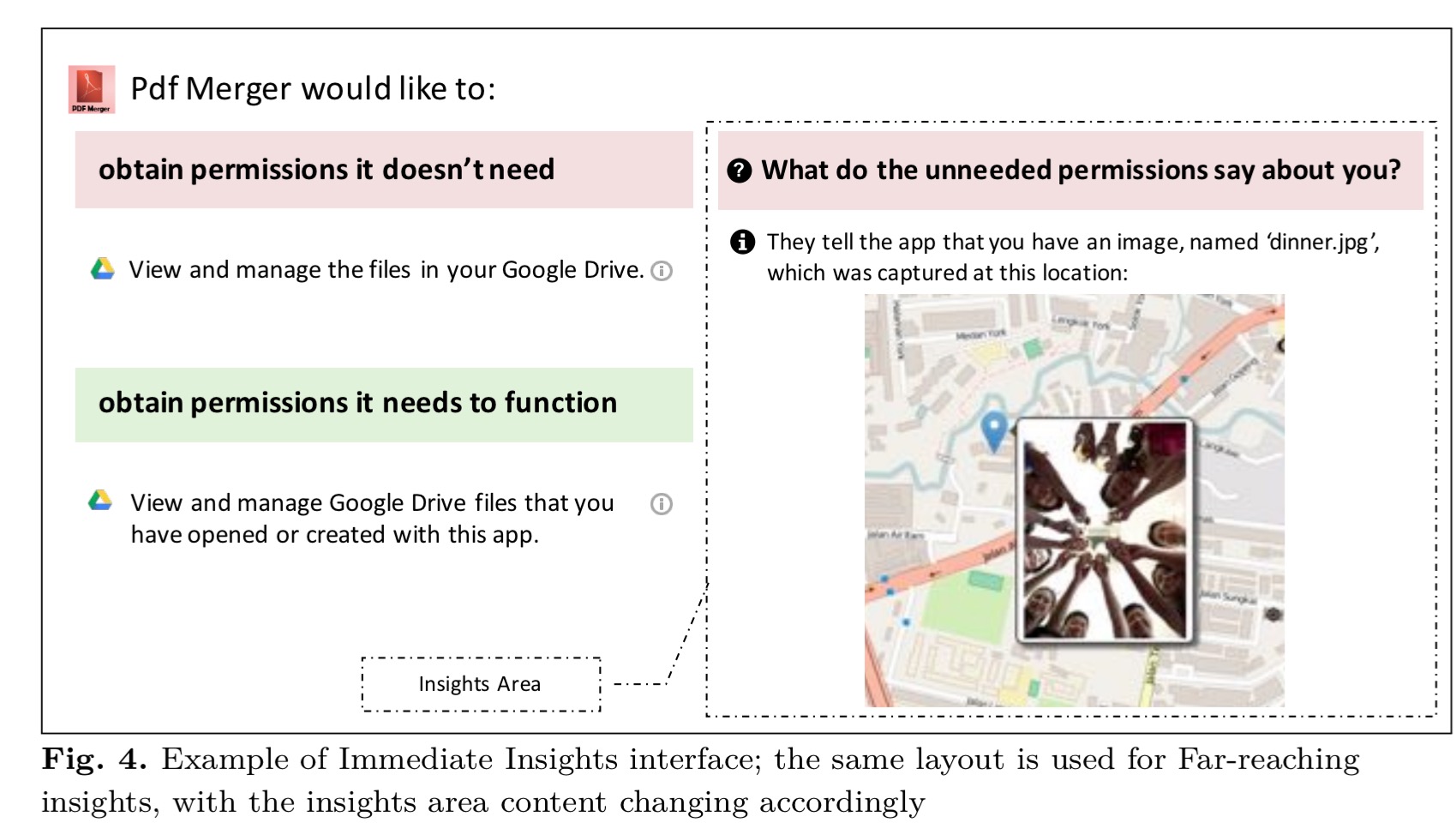

The second model is called Immediate Insights, based on the hypothesis that users shown samples of the data that can be extracted from the unneeded permissions are less likely to install apps requesting them. The delta permissions dialog is expanded with a new panel on the right with the question “what do the unneeded permissions say about you?” and insights that can be:

- an image selected at random from the user’s image files

- a photo from the user’s image files, which includes GPS location information, placed on a map

- an excerpt from the beginning of a randomly chosen text file

- the profile picture and name of a randomly chosen collaborator

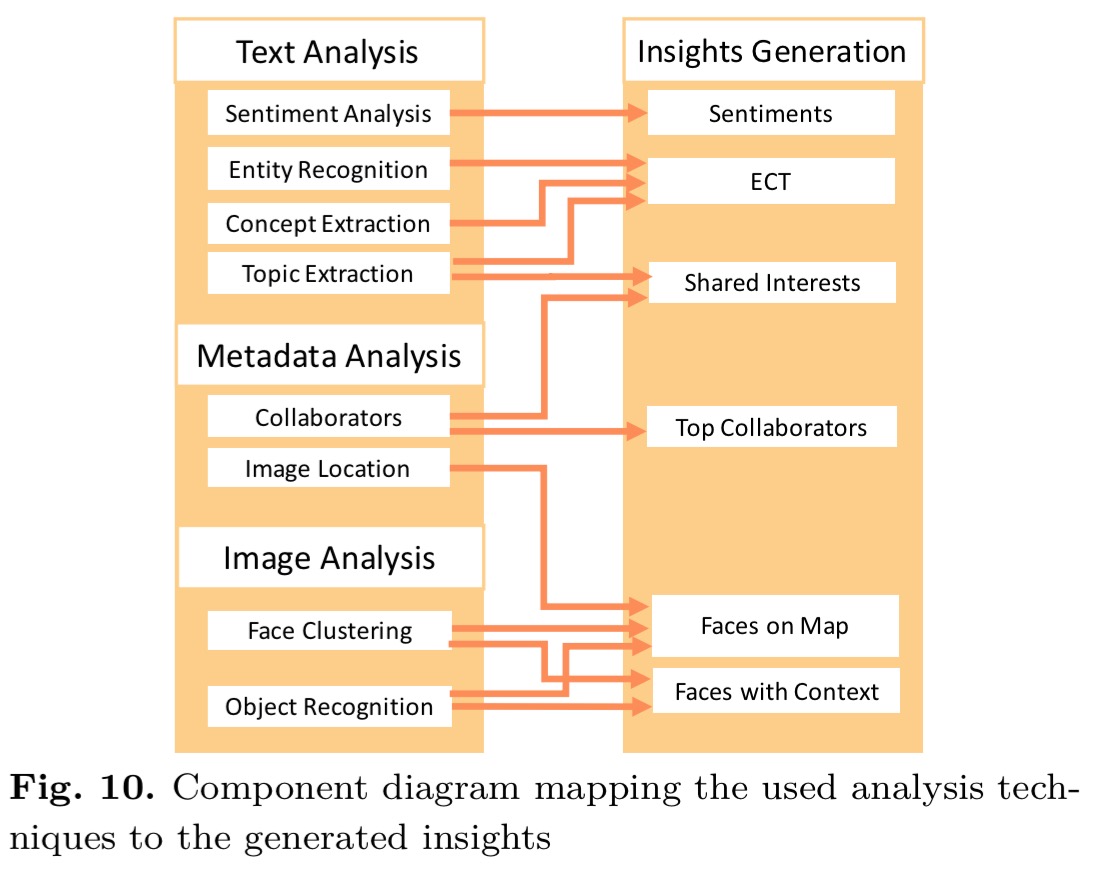

The third model is called Far-reaching Insights, based on the hypothesis that “when the users are shown the far-reaching information that can be inferred from the unneeded permissions granted to apps, they are less likely to authorize these apps.” The appearance is similar to the immediate insights dialog, but the panel is replaced with one that shows deeper inferences from the data in six different categories:

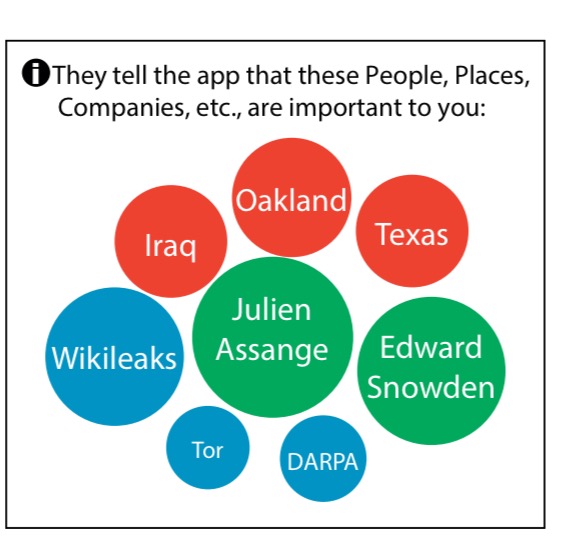

- Entities, concepts, and topics extracted by using NLP techniques on the user’s textual files:

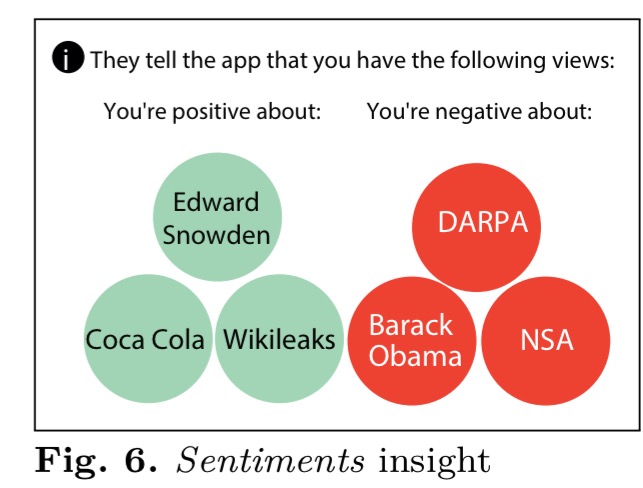

- The user’s sentiment towards entities, showing those with the most positive or negative sentiment:

- The top collaborators a user has, based on the analysed files.

- The user’s shared interests with collaborators:

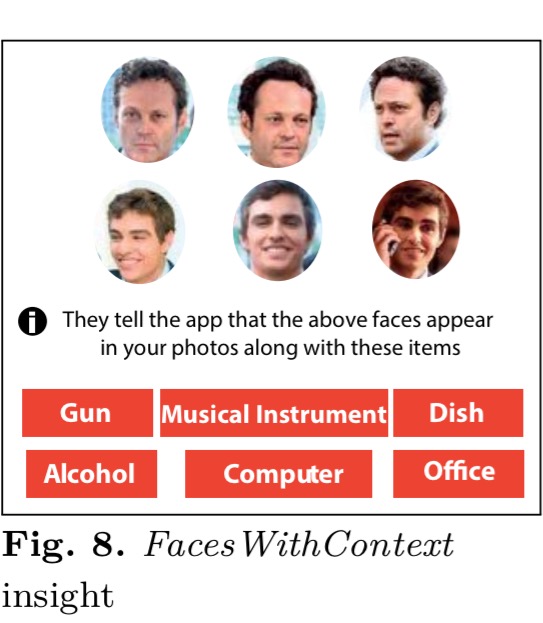

- Faces with context – faces of the most frequent people featuring in the user’s images, together with the concepts that appear in the same images.

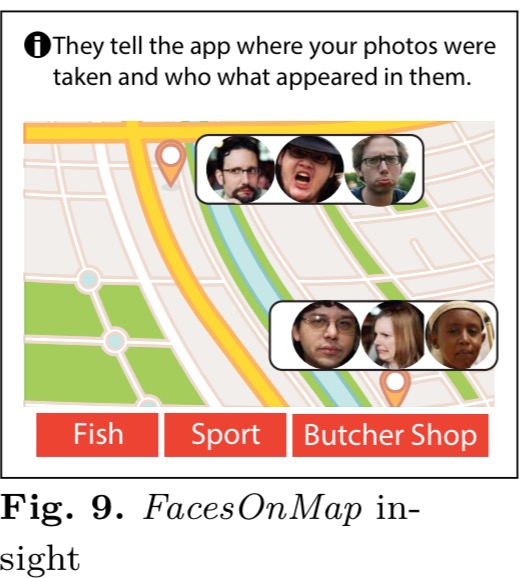

- Faces on a map – shows a user where selected photos were taken, together with the faces and items in those photos:

The techniques used to generate the inferences are shown in the following figure.

The experiment reveals which techniques are most successful in discouraging users from installing apps which request permissions they don’t need:

Overall, immediate insights are roughly twice as good as the baseline at discouraging the acceptance of such apps, but far-reaching insights are up to twice as good again as the immediate insights.

Personal insights, relating to the user himself or herself (image and text from immediate insights; entities-concepts-topics and sentiments from far-reaching insights), are all associated with a significantly higher acceptance likelihood than the far-reaching insights faces-with-context, top-collaborators, and shared-interests.

We denote these as relational insights. From our results, we can conclude that relational insights promote greater privacy awareness in users, as such insights are more likely to dissuade them from installing over-privileged apps.

Also interesting here is that the top-collaborators insight, shown to be something that users care about with respect to their privacy, is something that can be inferred even if an app only has metadata permissions, and not full file access. §7.2 of the paper explores in more detail what can be learned from metadata alone.
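To see why metadata alone is enough for this, consider a minimal sketch that ranks collaborators purely from sharing information, without opening any file. The record layout (a `shared_with` list per file) and the function name are assumptions for illustration, not the Drive API's actual response format or the authors' method.

```python
from collections import Counter

def top_collaborators(file_metadata, n=3):
    """Rank collaborators by how many files they share with the user.

    Each metadata record is assumed to be a dict with a 'shared_with' list;
    no file contents are needed.
    """
    counts = Counter()
    for meta in file_metadata:
        # Use a set so a collaborator is counted at most once per file.
        counts.update(set(meta.get("shared_with", [])))
    return counts.most_common(n)

# Hypothetical metadata records.
files = [
    {"name": "budget.xlsx", "shared_with": ["alice", "bob"]},
    {"name": "trip.doc", "shared_with": ["alice"]},
    {"name": "notes.txt", "shared_with": ["alice", "bob", "carol"]},
    {"name": "private.txt"},
]

print(top_collaborators(files, n=2))
```

Even this crude count reveals who the user works with most, which is exactly the kind of relational insight participants turned out to care about.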

PrivySeal

We could all wait for Google to implement something like the far-reaching insights in their app store, but that might take some time, if it ever happens (and even then, it would only cover a subset of apps).

… we decided not to wait and chose an alternative approach, which is independent of the company’s plans and is ready for user utilization immediately. We built PrivySeal, a privacy-focused store for Google Drive apps, which is readily available at https://privyseal.epfl.ch.

To generate its far-reaching insights, of course, PrivySeal itself requires access to all your files. If such a solution were hosted by the cloud service provider themselves, similar considerations would apply, unless a user-key encryption scheme is in use. Across the 1440 registered users of PrivySeal at the time the paper was compiled, there were 662 unique apps installed in their Google Drive accounts (many of these installed before the users connected to PrivySeal, of course). These came from the Google Chrome Web Store, other Google stores (add-ons and apps marketplace), or outside of Google’s stores altogether. The following summary shows that apps in unofficial stores tend to be in the majority, as well as being much more cavalier with the permissions they request. Improving the Chrome Web Store permissions model may therefore help a bit, but an ideal solution would be independent of the various stores apps can be found in.

How can we do better?

The authors recommend four steps, in addition to PrivySeal, that would help users make informed privacy decisions:

- Providing finer-grained permission models to help apps request only what they truly need

- A privacy-preserving intermediate API layer that sits over the top of existing cloud service APIs, providing finer-grained control, permissions reviews, and transparency dashboards.

- Transparency dashboards – a post-installation technique to deter developers from abusing user data. Transparency dashboards allow the user to see which files have been downloaded by each 3rd party app and when such operations took place. This either needs to be integrated into the platform itself, or built into a privacy-preserving intermediate API that extensions work with.

- Insights based on used data: “unlike external solutions that can only determine what data can be potentially accessed, the cloud platform can provide users with insights based on the data that developers (vendors) have previously downloaded.”
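The transparency dashboard idea in the list above boils down to recording which app fetched which file and when, then grouping that log per app. Here is a minimal in-memory sketch; the class and method names are hypothetical, and a real platform or intermediate API layer would need persistent, tamper-resistant storage.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class AccessEvent:
    app: str
    file_name: str
    when: datetime

class TransparencyLog:
    """Records file downloads by third-party apps (illustrative only)."""

    def __init__(self):
        self._events = []

    def record(self, app, file_name, when=None):
        # Default to the current UTC time when no timestamp is supplied.
        stamp = when or datetime.now(timezone.utc)
        self._events.append(AccessEvent(app, file_name, stamp))

    def by_app(self):
        """Group downloads per app, as a dashboard view might present them."""
        grouped = defaultdict(list)
        for event in self._events:
            grouped[event.app].append((event.file_name, event.when))
        return dict(grouped)

log = TransparencyLog()
log.record("PDF converter", "tax-return.pdf")
log.record("PDF converter", "holiday-photos.zip")
log.record("Note editor", "todo.txt")

for app, downloads in log.by_app().items():
    print(app, [name for name, _ in downloads])
```

The same log would also support the last suggestion: insights computed only over files an app has actually downloaded, rather than over everything it could potentially access.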

See the related work in section 9.1 of the paper for references to studies looking at user consent mechanisms and their efficacy in the context of Facebook apps, Android apps, and Chrome extensions.

The privacy-informing user interfaces in this work were designed in accordance with the principles set out in “A design space for effective privacy notices,” which is also well worth checking out. With new laws coming into effect soon that require informed consent, let’s hope we start to see more of this sort of thing in the wild.