If you can’t beat them, join them: a usability approach to interdependent privacy in cloud apps Harkous & Aberer, CODASPY ’17

I’m quite used to thinking carefully about permissions before installing a Chrome browser extension (they all seem to want permission to see absolutely everything; no thank you!). A similar issue comes up with third-party apps authorised to access documents in cloud storage services such as Google Drive and Dropbox. Many (64%) such third-party apps ask for more permissions than they really need, but Harkous and Aberer’s analysis shows that even if they don’t, you can still suffer significant privacy loss. Especially revealing is the analysis of how privacy loss propagates when you collaborate with others on documents or folders: one of your collaborators installs a third-party app, and that app suddenly gains access to some of your own files without you having any say in the matter.

Based on analyzing a real dataset of 183 Google Drive users and 131 third party apps, we discover that collaborators inflict a privacy loss which is at least 39% higher than what users themselves cause.

Given this state of affairs, the authors consider what practical steps can be taken to make users more aware of potential privacy loss, and perhaps change their decision-making processes, when deciding which third-party apps should have access to files. Their elegant solution involves a privacy indicator (extra information shown to the user when deciding to grant a third-party app permissions), which a user study shows significantly increases the chances of a user making privacy-loss minimising decisions. Extrapolating the results of this study to simulations of a larger Google Drive network and an author collaboration network shows that the indicator can help reduce privacy loss growth by 40% and 70% respectively.

Privacy loss metrics

With every app authorization decision that users make, they are trusting a new vendor with their data and increasing the potential attack surface… An additional intricacy is that when users grant access to a third-party app, they are not only sharing their own data but also others’ data. This is because cloud storage providers are inherently collaborative platforms where users share and cooperate on common files.

The main concept used in the paper to evaluate privacy loss is the notion of vendor file coverage (VFC). For a single vendor, this is simply the fraction of a user’s files that the vendor has access to, i.e., a number in the range 0..1. For V vendors we simply add up their coverage scores, giving a result in the range 0..V.
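To make the definition concrete, here is a minimal sketch of VFC as described above. It assumes files are identified by opaque string IDs and that we already know which of the user’s files each vendor can reach. The function names `vendor_file_coverage` and `total_vfc` are hypothetical, not taken from the paper.

```python
from typing import Dict, Set

def vendor_file_coverage(user_files: Set[str], accessible: Set[str]) -> float:
    """Fraction (0..1) of the user's files that a single vendor can reach."""
    if not user_files:
        return 0.0
    return len(user_files & accessible) / len(user_files)

def total_vfc(user_files: Set[str], vendor_access: Dict[str, Set[str]]) -> float:
    """Sum of per-vendor coverage scores, so the result lies in 0..V for V vendors."""
    return sum(vendor_file_coverage(user_files, reach) for reach in vendor_access.values())

# Hypothetical example: a user with four files and two vendors.
files = {"a", "b", "c", "d"}
access = {"VendorX": {"a", "b", "c", "d"}, "VendorY": {"a"}}
print(total_vfc(files, access))  # 1.25 (1.0 for VendorX plus 0.25 for VendorY)
```

Summing rather than taking a union means that two vendors seeing the same file count as two exposures. This matches the intuition that each additional vendor is another party trusted with the data.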

The set of vendors of interest for a given user u comprises those that u has explicitly authorised (Self-VFC) together with the set of vendors that collaborators of u have authorised (Collaborators-VFC). This combination is the Aggregate-VFC. Whenever the paper mentions privacy loss, it means as measured by this VFC metric.
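The split into Self-, Collaborators- and Aggregate-VFC can be sketched as follows. This is an illustration under a loudly stated assumption, not the paper’s exact procedure. It assumes that an app a user authorises can read every file that user can access, so a vendor authorised by collaborator c reaches exactly the files the user shares with c. All function and variable names are hypothetical.

```python
from typing import Dict, Set, Tuple

def coverage(user_files: Set[str], reachable: Set[str]) -> float:
    """Fraction of the user's files contained in `reachable`."""
    return len(user_files & reachable) / len(user_files) if user_files else 0.0

def vfc_breakdown(
    user_files: Set[str],
    self_vendors: Set[str],                # vendors the user authorised
    shared_with: Dict[str, Set[str]],      # collaborator -> user's files shared with them
    collab_vendors: Dict[str, Set[str]],   # collaborator -> vendors they authorised
) -> Tuple[float, float, float]:
    # ASSUMPTION: an authorised app reaches every file its installer can access.
    via_self = {v: set(user_files) for v in self_vendors}
    via_collab: Dict[str, Set[str]] = {}
    for collaborator, vendors in collab_vendors.items():
        for v in vendors:
            via_collab.setdefault(v, set()).update(shared_with.get(collaborator, set()))
    self_vfc = sum(coverage(user_files, reach) for reach in via_self.values())
    collab_vfc = sum(coverage(user_files, reach) for reach in via_collab.values())
    # Aggregate: each vendor counted once, over the union of both access paths.
    aggregate_vfc = sum(
        coverage(user_files, via_self.get(v, set()) | via_collab.get(v, set()))
        for v in set(via_self) | set(via_collab)
    )
    return self_vfc, collab_vfc, aggregate_vfc

# Hypothetical example: the user authorised X; Alice authorised X and Y; Bob authorised Z.
files = {"a", "b", "c", "d"}
shared = {"alice": {"a", "b"}, "bob": {"c"}}
collab = {"alice": {"X", "Y"}, "bob": {"Z"}}
print(vfc_breakdown(files, {"X"}, shared, collab))  # (1.0, 1.25, 1.75)
```

Note that vendor X contributes to both Self- and Collaborators-VFC here, but only once to Aggregate-VFC. In this sketch that is why the aggregate can be smaller than the sum of the other two.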

This metric choice allows relaying a message that is simple enough for users to grasp, yet powerful enough to capture a significant part of the privacy loss… telling users that a company has 30% of their files is more concrete than a black-box description informing them that the calculated loss is 30%.

The impact of collaboration

The first part of the study looks at a Google Drive based collaboration network collected during PrivySeal research that we will look at tomorrow. The dataset comprises 3,422 users, who between them had installed 131 distinct Google Drive apps from 99 distinct vendors.

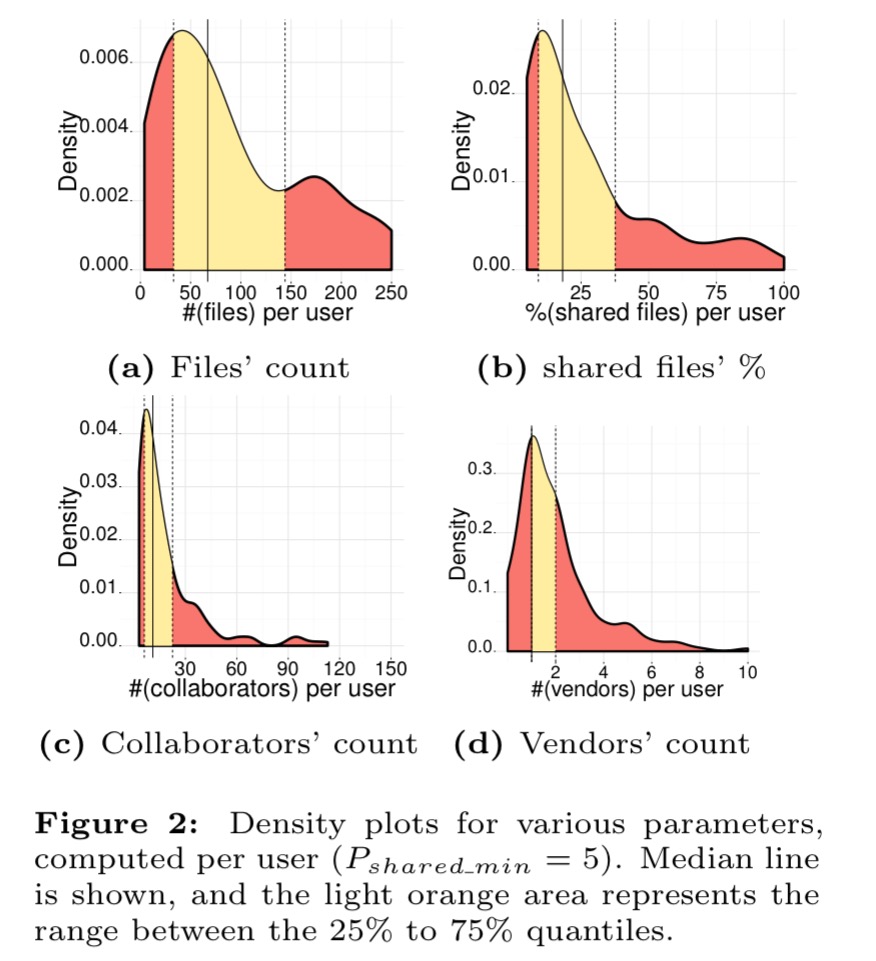

The charts below show the distributions of files, sharing, collaborators, and vendors per user.

We computed the Self-VFC, the Collaborators-VFC, and the Aggregate-VFC for users in the PrivySeal dataset.

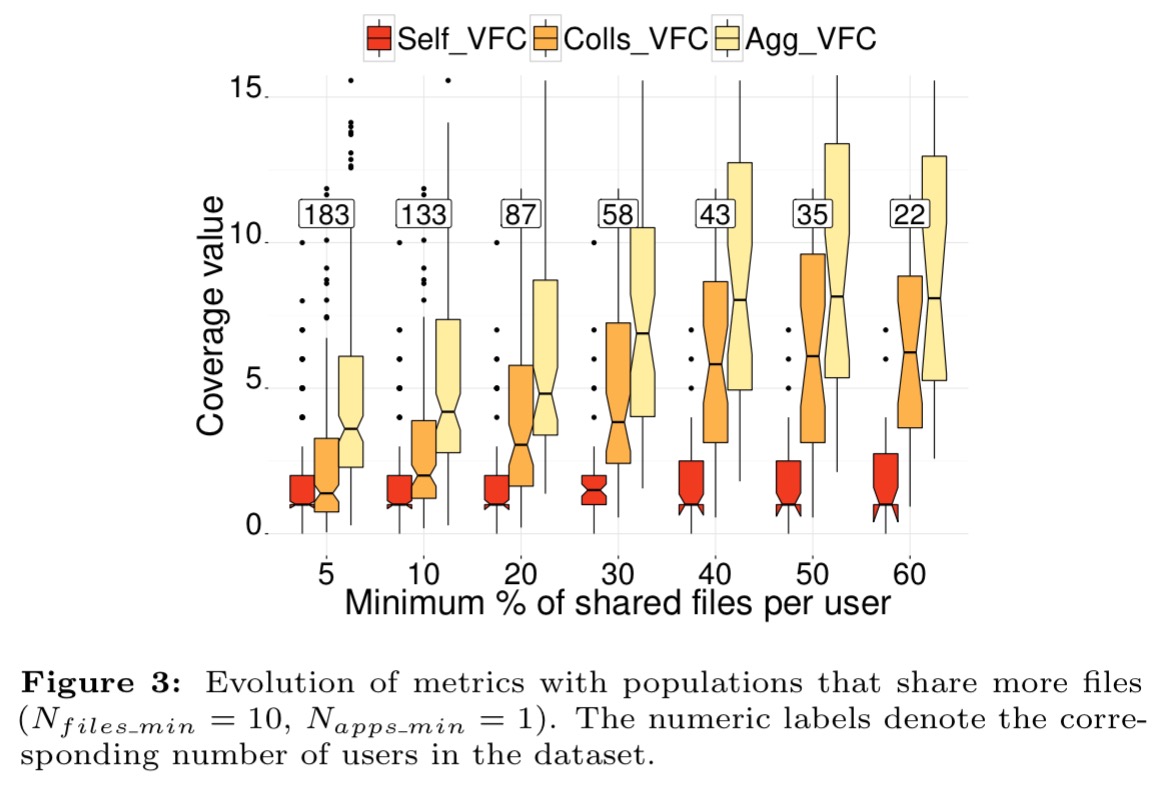

The chart below shows the impact of sharing on privacy loss, broken down into populations that share differing percentages of their files. Even at 5% sharing, the median Collaborators-VFC score is 39% higher than the corresponding Self-VFC score – and at 60% sharing this jumps to a 100% increase in VFC score.

- Collaborators’ app adoption decisions make a significant contribution to a user’s privacy loss.

- The more collaborators, the worse it gets…

Reducing privacy loss through privacy indicators

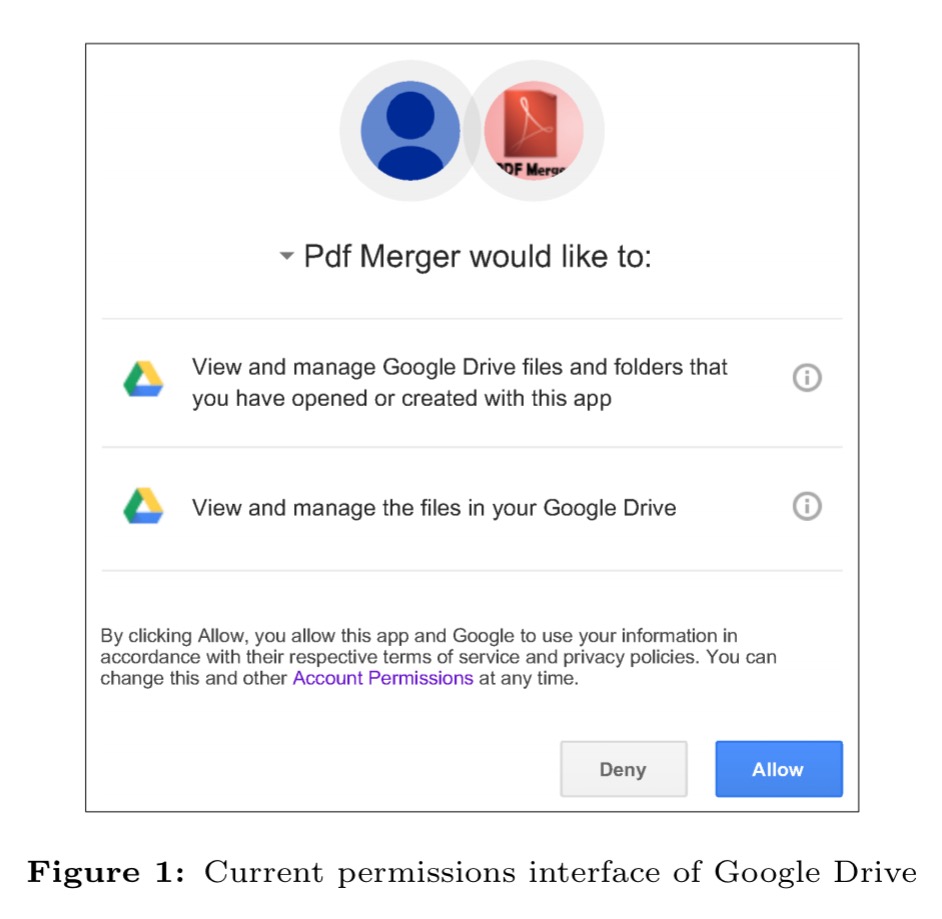

Now the question becomes: how can we help users minimise their privacy loss when selecting third-party applications? The standard Google Drive application permissions dialog looks like this:

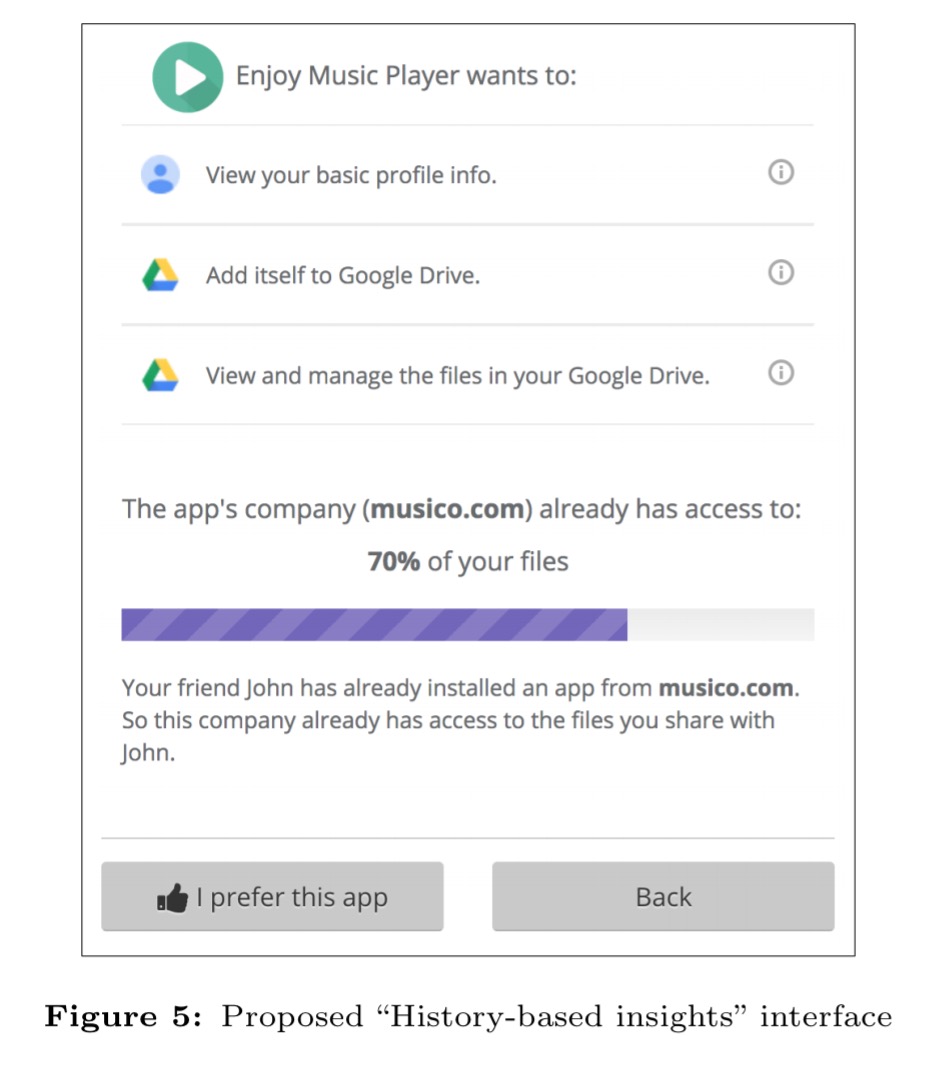

The authors very carefully design an enhanced permissions dialog incorporating privacy indicators that looks like this:

We call our proposed privacy indicators “History-based Insights” as they allow users to account for the previous decisions taken by them or by their collaborators.

Note that the enhanced dialog shows the percentage of files readily accessible by the vendor. The best strategy for the user to minimise privacy loss when considering a new app (if there are several alternatives available) is to select the vendor that already has access to the largest percentage of the user’s files.
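The selection strategy can be written down directly. This is a minimal sketch assuming (our assumption, not something the dialog states) that any newly installed app would gain access to all of the user’s files. Under that assumption, picking the app whose vendor already covers the largest fraction is the same as picking the smallest increase in privacy loss. The names `marginal_loss` and `best_choice` are hypothetical.

```python
from typing import Dict

def marginal_loss(existing_coverage: Dict[str, float], vendor: str) -> float:
    """Extra coverage a vendor gains if its new app reads all of the user's files (assumption)."""
    return 1.0 - existing_coverage.get(vendor, 0.0)

def best_choice(candidates: Dict[str, str], existing_coverage: Dict[str, float]) -> str:
    """candidates maps app name -> vendor; return the app adding the least privacy loss."""
    return min(candidates, key=lambda app: marginal_loss(existing_coverage, candidates[app]))

# Hypothetical: a collaborator's vendor already reaches 70% of the user's files.
apps = {"DiagramApp": "VendorA", "ChartTool": "VendorB"}
coverage_now = {"VendorA": 0.7}
print(best_choice(apps, coverage_now))  # DiagramApp (adds ~30% versus 100%)
```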

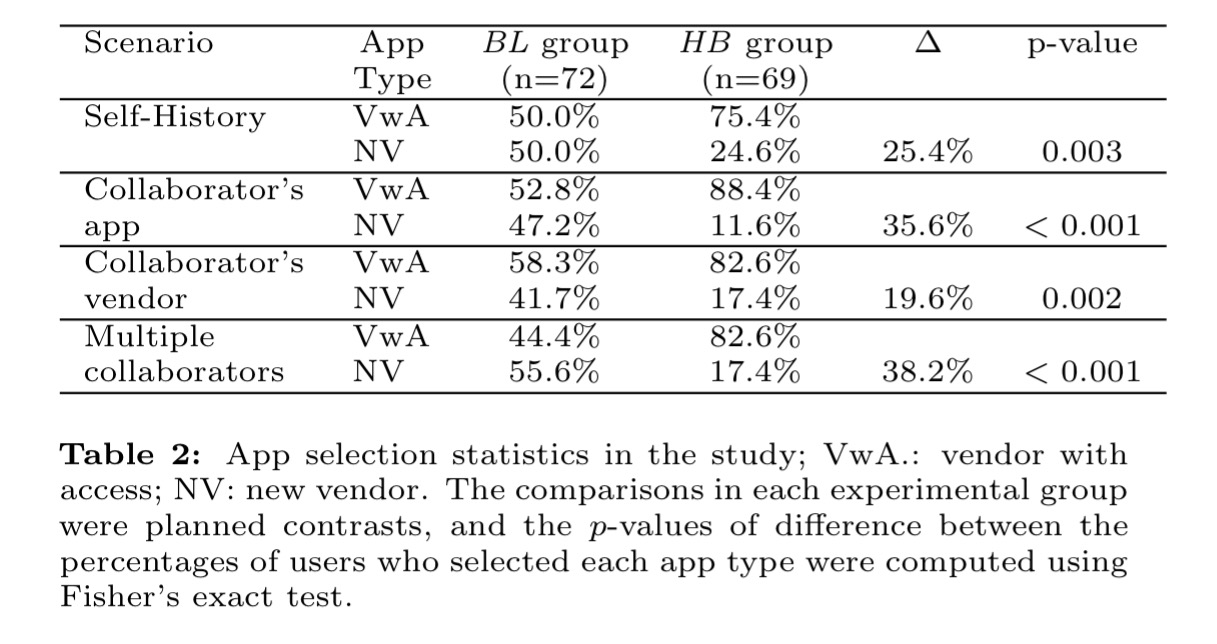

157 study participants were divided into two groups: one group (BL) performed a set of tasks using the standard permissions dialog, and the other group (HB) performed the same tasks but were shown the enhanced history-based insights dialog. The participants were told nothing more than that the study was designed to ‘check how people make decisions when they install third-party apps.’

There are four scenarios:

- Self-history: tests whether a user is more likely to install an app from a vendor they have installed from before. For the baseline (BL) group with the standard dialog, there was no indication of any privacy considerations in the selection of a subsequent app. They chose based on description, logo, name or the perceived trustworthiness of the URL. In the HB group, though, 72% of participants chose the app from the vendor that they had previously granted permission to. Quoting one user: “This company has access to all my files, so I would choose them as I don’t want to have 2 companies with full access to my files.”

The new privacy indicator leads users to more frequently choose apps from vendors they have already authorized.

- Collaborator’s app: tests whether a user is more likely to install the same app that a collaborator has used. The baseline group shows a fairly even split between the two choices presented, whereas in the HB group 88% chose the app their collaborator is using. “Thanks to John, they already have access to 70% of my data. Sharing the last 30% isn’t as bad as sharing 100% of my data with [the other vendor].”

- Collaborator’s vendor: as in the previous test, except that instead of being offered the same app a collaborator uses, participants are offered an app from the same vendor as another app used by a collaborator. Most users didn’t really distinguish between the concept of a vendor and the concept of an app, and behaved very similarly to the previous scenario.

- Multiple collaborators: given two collaborators, one with whom the user shares many files and one with whom they share few, does the user choose to install the app they have in common with the higher-sharing collaborator? In the baseline group just under half chose this app, whereas in the HB group 83% did.

Here’s the summary table for these results:

Overall, we found out that … participants in the HB group were significantly more likely to install the app with less privacy loss than those in the BL group.

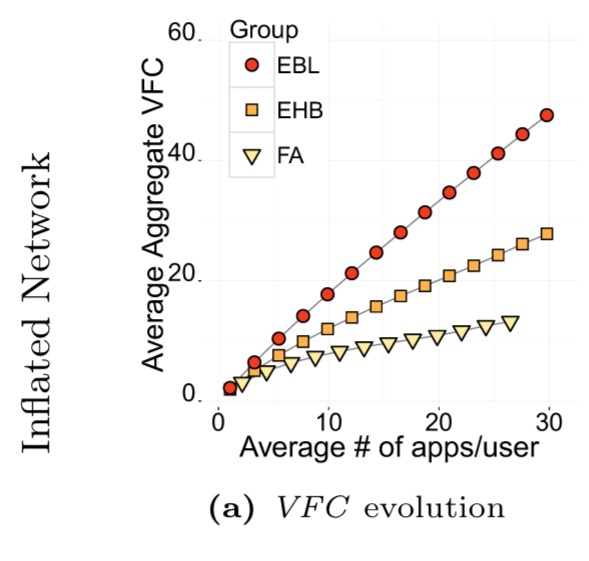

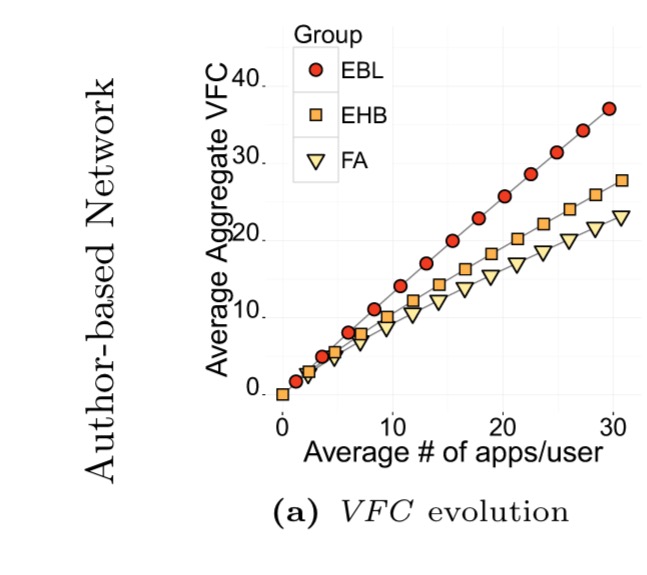

The changes in behaviour from this study were extrapolated to two simulated larger networks. One is a Google Drive network seeded from the PrivySeal dataset and inflated while retaining the input degree distribution. The other is based on author collaborations on papers in the Microsoft Academic Graph.

The charts below show the privacy loss under three scenarios:

- EBL is the experimental baseline, i.e. no bias towards minimising privacy loss

- EHB is the experimental HB model, where the user takes decisions in accordance with the preferences discovered in the preceding user study

- FA is a ‘fully aware’ model in which the user always makes the best privacy loss minimisation decision
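The three decision models can be illustrated with a toy chooser. This is a hypothetical sketch, not the paper’s simulator, whose internals are not described here. Under EBL the choice is uniform. Under EHB the loss-minimising app is chosen with probability `p_best`. The default of 0.8 is an assumed value loosely in the range of the 72% to 88% HB rates reported above. Under FA the loss-minimising app is always chosen.

```python
import random
from typing import Dict, List

def choose(candidates: List[str], loss: Dict[str, float], model: str,
           rng: random.Random, p_best: float = 0.8) -> str:
    """Pick one app under a hypothetical EBL / EHB / FA decision model.

    loss maps each candidate app to the privacy loss (e.g. marginal VFC) it would add.
    """
    if model not in ("EBL", "EHB", "FA"):
        raise ValueError(f"unknown model: {model}")
    best = min(candidates, key=lambda app: loss[app])
    others = [app for app in candidates if app != best]
    if model == "FA" or not others:
        # Fully aware (or no real choice): always the loss-minimising app.
        return best
    if model == "EHB":
        # Biased towards the best app with probability p_best (assumed value).
        return best if rng.random() < p_best else rng.choice(others)
    # EBL: no privacy bias, uniform choice among all candidates.
    return rng.choice(candidates)

# Hypothetical two-app decision with made-up losses.
rng = random.Random(42)
apps = ["DiagramApp", "ChartTool"]
loss = {"DiagramApp": 0.3, "ChartTool": 1.0}
for model in ("EBL", "EHB", "FA"):
    picks = [choose(apps, loss, model, rng) for _ in range(10_000)]
    # Share of decisions picking the loss-minimising app: FA is exactly 1.0,
    # while EBL and EHB land near 0.5 and p_best respectively.
    print(model, picks.count("DiagramApp") / len(picks))
```

A network simulation would repeat decisions like this for every user as apps are adopted. It would then track Aggregate-VFC over time under each model.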

The privacy loss in the EHB group drops by 41% (inflated network) and 28% (authors network) respectively when compared to the baseline.

One of the major outcomes is that a user’s collaborators can be much more detrimental to her privacy than her own decisions. Consequently, accounting for collaborators’ decisions should be a key component of future privacy indicators in 3rd party cloud apps… Finally, due to their usability and effectiveness, we envision History-based Insights as an important technique within the movement from static privacy indicators towards dynamic privacy assistants that lead users to data-driven privacy decisions.
