Scaling symbolic evaluation for automated verification of systems code with Serval Nelson et al., SOSP’19

Serval is a framework for developing automated verifiers of systems software. It makes an interesting juxtaposition to the approach Google took with Snap that we looked at last time out. I’m sure that Google engineers do indeed take extreme care when building the low level networking code that powers Google’s datacenters, but their fundamental design point was to enable frequent releases for fast iteration, feedback on their designs, and yes, early detection of problems.

Formal verification is at the other end of the spectrum. In theory it enables you to eliminate whole classes of problems and vulnerabilities entirely (in practice perfection is still hard to come by), and so it can be especially valuable in security sensitive situations. But it comes with a very high price tag:

Writing proofs requires a time investment that is usually measured in person-years, and the size of the proofs can be several times or even more that an order of magnitude larger than that of implementation code.

That’s both very expensive and an incredibly long wait for feedback. To invest in formally modelling something, you really need to have a very good idea of what the software needs to do. Since so much of our learning comes at the point where our ideas engage with the real world, I think that means you probably want to have built at least a couple of versions of it already, and only then try to build the ‘bullet-proof’ version. Just for ordinary software development I often find myself writing parts of software three times: once in my notebook away from the keyboard, sketching out the ideas, shape, and requirements; a first implementation that works but invariably feels less than satisfying once it is done, and reveals along the way a bunch of things I hadn’t initially understood about the problem; and then the third cleaner implementation that might finally be worthy of a pull request. (And btw., while there could be many justifiable complaints about the software I’ve written over the years, never yet has anyone complained that I’m slow!).

If we could bring down the excessive costs of formal verification, we would be able to use it in more situations. This is the goal of push-button verification : you still have to produce a rigorous specification of how the system is intended to behave, but instead of then laboriously proving that it does so, you just ‘push a button’ and let the machine spit out a proof (or contradiction) for you.

To make push-button verification tractable, you need to accept certain limitations in your implementation – chiefly that operations be finite so that their semantics can be expressed as a set of traces of bounded length. (Just think of it like a stricter version of designing your software in such a way that it is easily testable).

Given the problem of verifying a finite implementation against its specification, a domain-specific automated verifier reduces this problem to a satisfiability query using symbolic evaluation and discharges the query with a solver such as Z3.

Prior work has demonstrated symbolic evaluation for specific systems, but Serval introduces a general purpose approach of reusable and interoperable verifiers.

Through this paper we address the key concerns facing developers looking to apply automated verification: the effort required to write verifiers, the difficulty of diagnosing and fixing performance bottlenecks in these verifiers, and the applicability of this approach to existing systems. Serval enables us, with a reasonable effort, to develop multiple verifiers, apply the verifiers to a range of systems, and find previously unknown bugs.

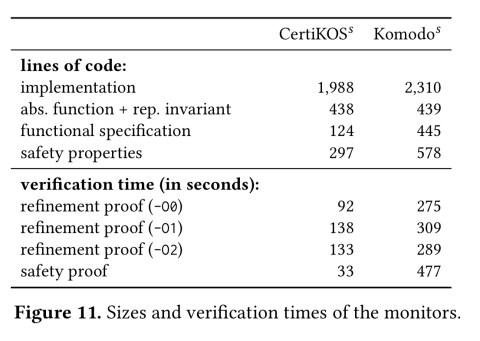

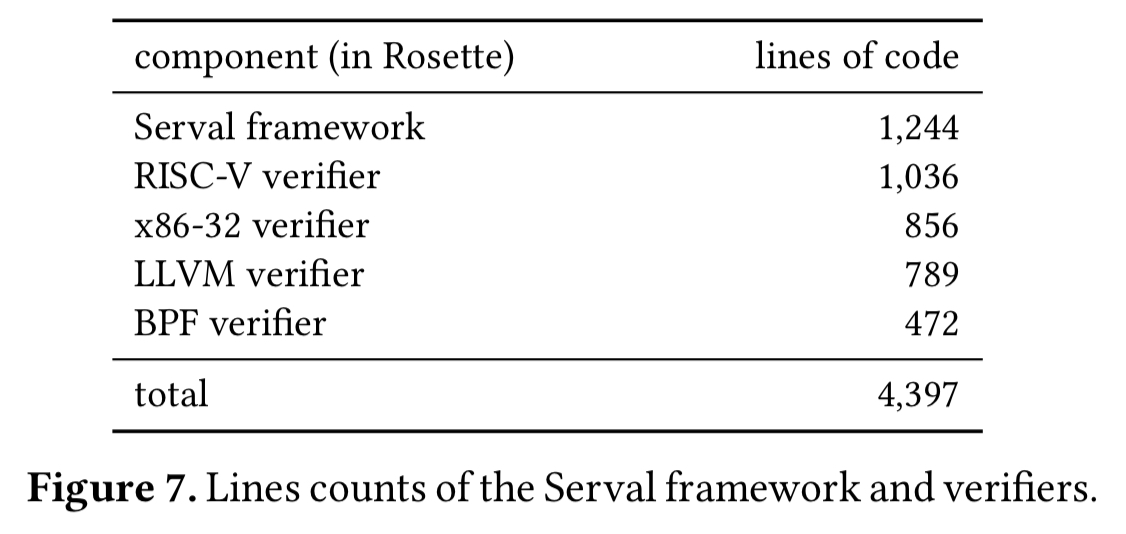

You’re entitled to wonder what ‘reasonable effort’ is here (there’s a lot of room for improvement when the starting point is measured in person-years!). The authors port both CertiKOS (from x86) and Komodo (from ARM) to a unified RISC-V platform and verify their new implementations. The port and verification processes took about four-person weeks each (impressively little time, but remember these are world-experts in verification methods doing the work!). Here are the resulting code and specification sizes, together with the verification runtimes (impressively short!).

Symbolic evaluation primer

A little background on symbolic evaluation is helpful for following along with the paper. The wikipedia entry on symbolic execution is a good place to start. The basic idea is to execute the program, but with symbolic values for inputs instead of actual inputs. Say we start with an integer input assigned the symbol , after executing a portion of the program we might now know that the value is e.g.

. When it comes to branches etc., we have to explore all possible branches that could be activated based on the current constraints (‘forking’ the symbolic execution and pursuing all paths).

When the paper refers to a variable “going symbolic” (and hence causing a state space explosion), what it means is that we no longer have a concrete definitive value for a variable, and hence have to explore many parallel universes for all of the allowed values. For example:

… When the program counter becomes symbolic, evaluation of subsequent instruction fetch and execution wastes time exploring infeasible paths, resulting in complex constraints or divergence.

How Serval works

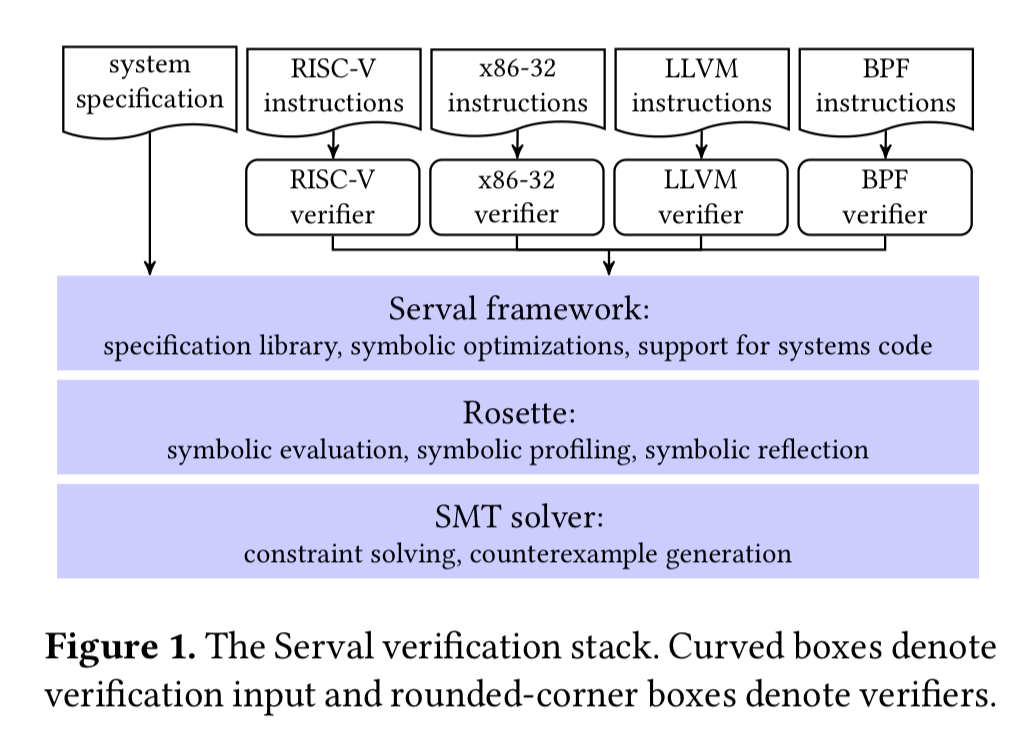

A key part of the Serval difference is that you write your application code in your favourite language, and compile it into a binary using your standard toolchain. So that Serval knows what properties it should verify, you also need to write a specification of the desired system behaviour. You do that using the Rosette language.

The Rosette language provides a decidable fragment of first-order logic: booleans, bitvectors, uninterpreted functions, and quantifiers over finite domains. Serval provides a library to simplify the task of writing specifications, including state-machine refinement and non-interference properties.

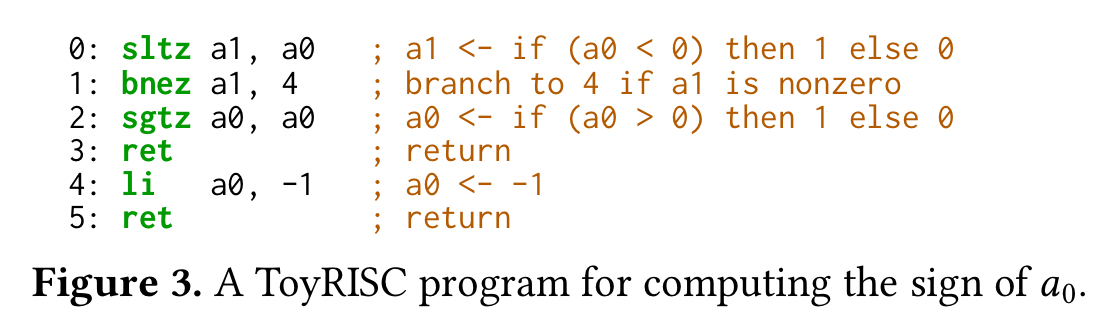

Here’s a program in a toy RISC instruction set which computes the sign of its argument:

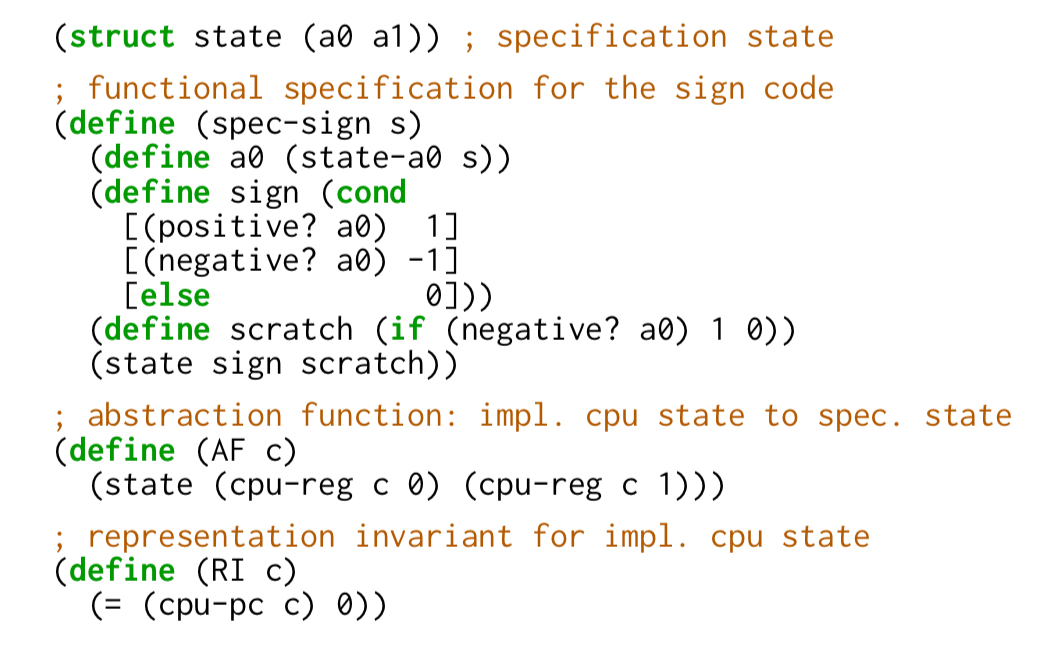

And here’s what a Rosette specification for the desired behaviour of that program looks like:

Hello Lisp (well, s-expressions anyway)!

In this instance the spec is longer than the program, but typically a specification will be at a higher level of abstraction that the program code, and hence considerably shorter.

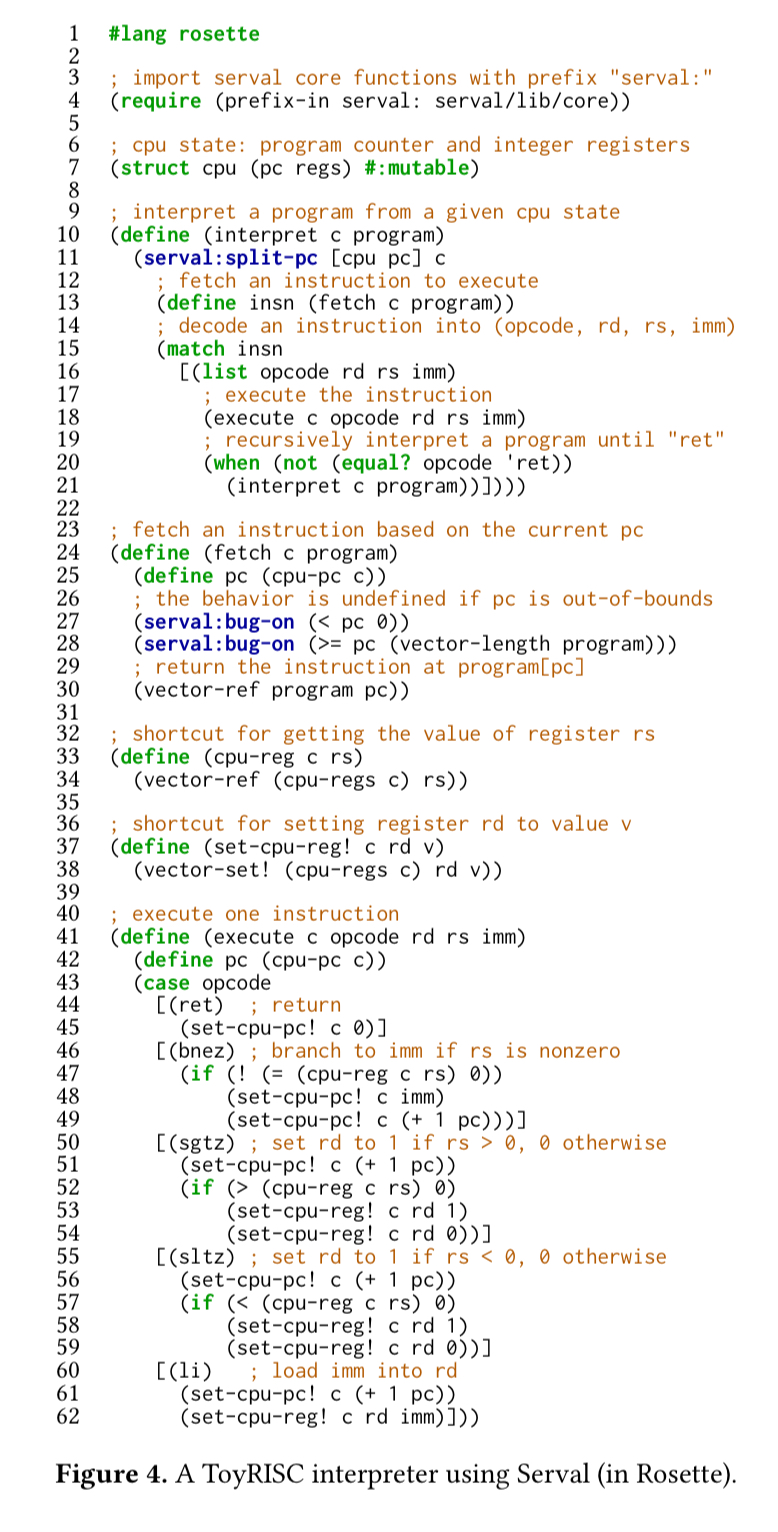

Now it’s just a small matter of proving that the binary faithfully implements the specification (or finding a contradiction of course). If you’ve chosen to compile your program down to a RISC-V, x86-32, LLVM, or BPF (Berkeley Packet Filter) instruction set, then you’re in luck, because the author’s provide Serval interpreters for those instruction sets out of the box. If your targeting something else, then I’m afraid it’s on you to write the interpreter. Here’s an example for the same toy RISC instruction set we saw before:

These interpreters are actually surprisingly small in terms of lines of code: about a Kloc max.

We’ve been talking about interpreters, but the table above shows the code sizes for verifiers. What’s that all about? Since the interpreters are written in Rosette, they can be used to symbolically evaluate program. This is the capability that turns an interpreter into a verifier!

Serval employs Rosette to produce SMT constraints from symbolic values (that encode the meaning of specifications or implementations) and invokes a solver to check the satisfiability of these constraints for verification. If verification fails (e.g., due to insufficient specification, or incorrect implementation), the solver generates a counter-example, which is visualized by Rosette for debugging.

Making verification scalable

On a first try, it’s quite possible that the symbolic evaluation of a program will be too slow or even hang. This generally happens when key variables ‘go symbolic’. At this point you can use the Rosette symbolic profiler to find out where the bottleneck is. Serval provides a set of reusable symbolic optimisations out of the box to get past common log jams.

For example, if the program counter becomes symbolic then we’re going to evaluate a lot of subsequent instruction fetch and executions. The split-pc optimisation helps in this case by splitting execution into a smaller number of concrete values, one for each conditional branch, and later merging them. If the program counter is wholly unconstrained (e.g. a blind jump from an untrusted source with no checking) then this tactic won’t work – but in such a case you usually have other problems anyway!

What kinds of properties can Serval verify?

Serval can be used to verify…

- The absence of undefined behaviour, i.e. the program never wanders into a territory where the behaviour of the instruction set is undefined.

- That an implementation correctly refines an abstract state machine (you provide the specification, the abstraction mapping function, and a representation invariant over the implementation)

- Safety properties such as reference-count consistency and non-interference

- And presumably anything else that you can express in Rosette.

One caveat though is that Serval does not support reasoning about concurrent code.

Serval in action

CertiKOS and Komodo are both formally verified security monitors. CertiKOS was developed using Coq, through an impressive 32 layers of refinement. It runs to about 200K lines of specification and proof. Komodo was developed using Dafny for verification, and Vale for the implementation.

The authors port both CertiKOS and Komodo to C and assembly implementations, and verify them using Serval, in about four-person weeks each!. In both cases we’re looking at approximately 2Kloc of code, and 1Kloc of specification related material.

Serval can also be used to prove partial specifications for existing systems. The authors try out this approach with the Keystone security monitor, and found two undefined behaviour bugs, which have since been fixed by the Keystone developers.

You can try download Serval and try it for yourself via the links on the Serval project page.

2 thoughts on “Scaling symbolic evaluation for automated verification of systems code with Serval”

Comments are closed.