Securing wireless neurostimulators Marin et al., CODASPY’18

There’s a lot of thought-provoking material in this paper. The subject is the security of a class of Implantable Medical Devices (IMDs) called neurostimulators. These devices are implanted under the skin near the clavicle and connected directly to the patient’s brain through several leads. They can help to relieve chronic pain symptoms and movement disorders (e.g., Parkinson’s). The latest generation of such devices comes with remote monitoring and reprogramming capabilities, via an external device programmer. The manufacturers seem to have relied on security through obscurity (when will we ever learn!), with the very predictable result that the interface turns out not to be secure at all. So we end up with a hackable device connected directly to someone’s brain. Some of the possible attacks, and also the secure alternative mechanism that Marin et al. develop, sound like they come straight out of a sci-fi novel.

It’s not at all surprising to learn that you can do a lot of harm to a patient by sending signals to their brain. “For example, adversaries could change the settings of the neurostimulator to increase the voltage of the signals that are continuously delivered to the patient’s brain. This could prevent the patient from speaking or moving, cause irreversible damage to their brain, or even worse, be life-threatening.”

What I hadn’t foreseen were the possible privacy attacks, especially once future devices support sending P-300 wave brain signals (which will allow more precise and effective therapies):

… adversaries could capture and analyze brain signals such as the P-300 wave, a brain response that begins 300ms after a stimulus is presented to a subject. The P-300 wave shows the brain’s ability to recognize familiar information. Martinovic et al. performed a side-channel attack on a Brain Computer Interface (BCI) while connected to several subjects, and showed that the P-300 wave can leak sensitive personal information such as passwords, PINs, whether a person is known to the subject, or even reveal emotions and thoughts.

Implantable medical devices, a sorry story

This isn’t the first time that IMDs have been shown to have poor security. Hei et al. showed a denial of service attack that can deplete an IMD’s resources and reduce battery lifetime from several years to a few weeks (replacement requires surgery). Halperin et al. showed attacks on implantable cardioverter defibrillators, and Marin et al. showed how to do the same using standard hardware without needing to be close to the patient. Li et al. demonstrated attacks against insulin pumps.

IMDs are resource-constrained devices, with tight power and computational budgets and limited battery capacity. They lack input and output interfaces, and can’t be physically accessed once implanted.

IMDs need to satisfy several important requirements for proper functioning, such as reliability, availability, and safety. Adding security mechanisms into IMDs is challenging due to inherent tensions between some of these requirements and the desirable security and privacy goals.

Previous work has looked at out-of-band (OOB) channels for exchanging cryptographic keys to secure communication, including the heart-to-heart (H2H) protocol based on the inter-pulse interval (IPI) of the heartbeat. Unfortunately the IPI is fairly easy to monitor remotely (even using a standard webcam), and the protocol has a large communication and computation cost.

Reverse engineering a neurostimulator

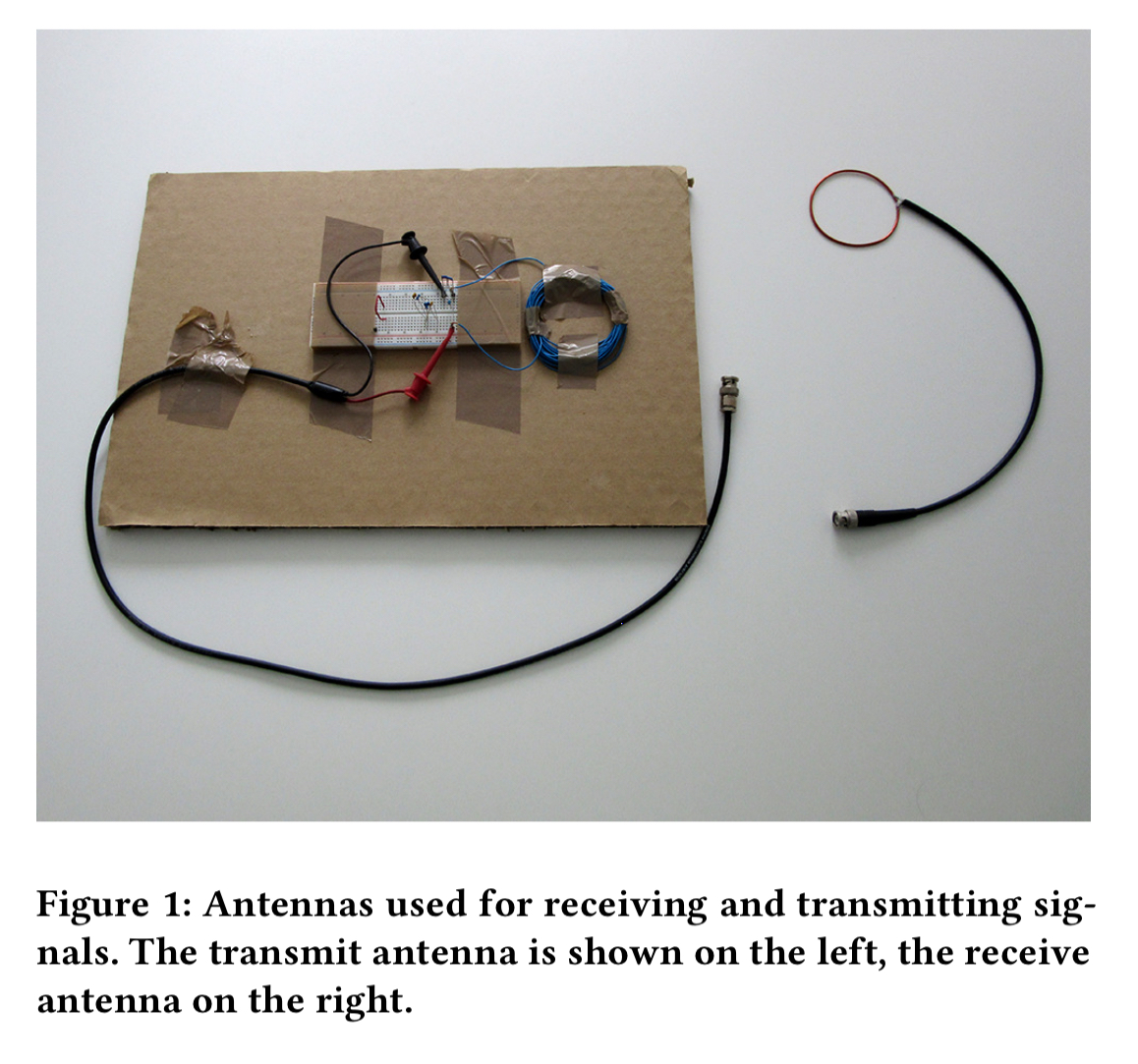

The authors build a couple of simple antennas, change neurostimulator settings using a device programmer, and inspect the format of the transmitted messages.

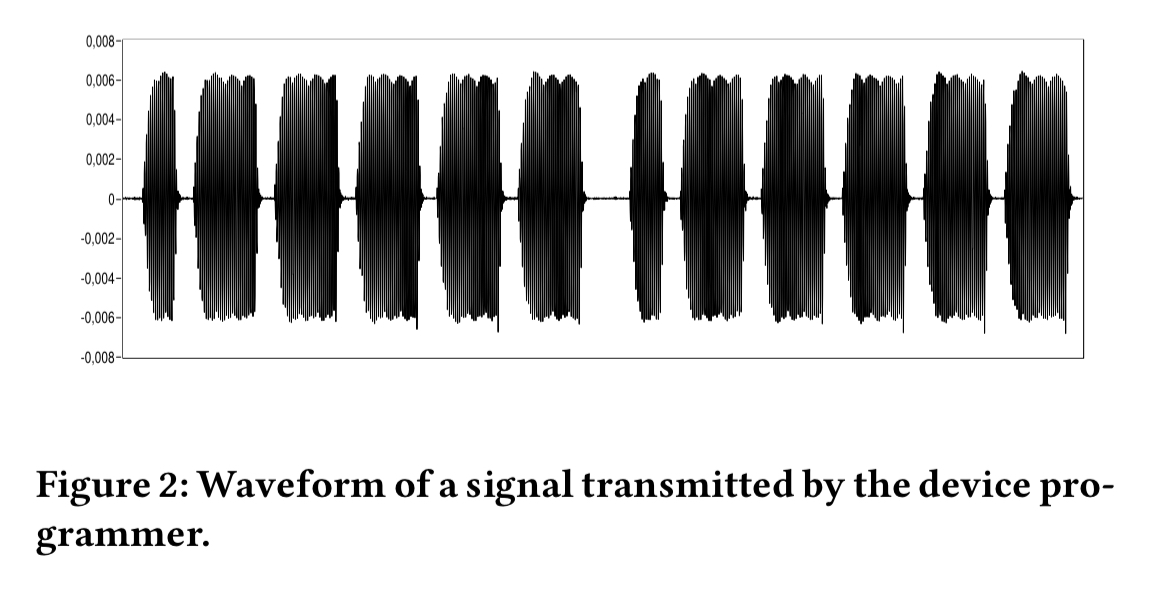

Using this method, it was possible to reverse engineer the protocol. The devices transmit at 175 kHz using an on-off keying (OOK) modulation scheme. An example signal looks like this:

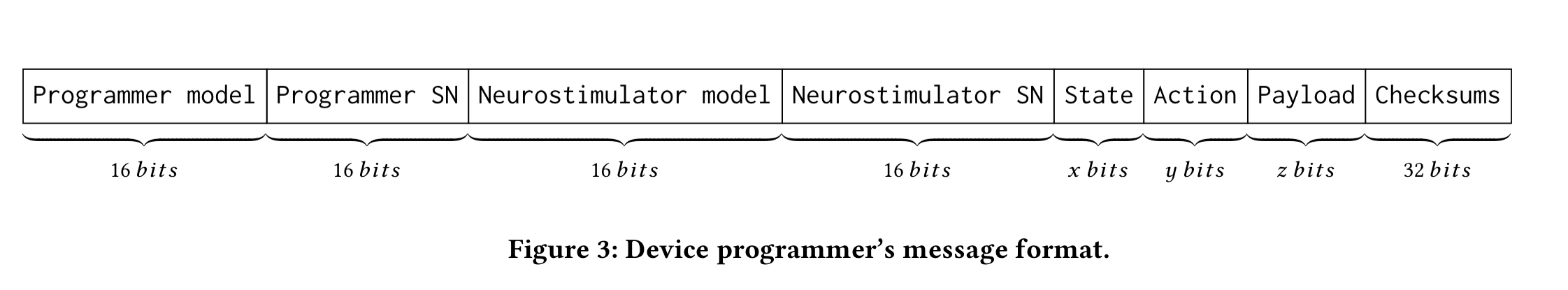
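To make "on-off keying" concrete, here is a minimal simulation: the carrier is switched on for a 1 bit and off for a 0 bit, and a crude envelope detector recovers the bits by averaging the rectified signal over each bit period. Only the 175 kHz carrier comes from the text; the sample rate, samples per bit, and threshold are hypothetical values chosen so the numbers divide evenly. Real captures would also need synchronisation to find where each bit starts.

```python
import math

CARRIER_HZ = 175_000        # carrier frequency reported for the neurostimulator link
SAMPLE_RATE = 1_400_000     # hypothetical capture rate: 8 samples per carrier cycle
SAMPLES_PER_BIT = 56        # hypothetical: 7 whole carrier cycles per bit


def ook_modulate(bits):
    """On-off keying: transmit the carrier for a 1 bit, silence for a 0 bit."""
    samples = []
    n = 0
    for bit in bits:
        for _ in range(SAMPLES_PER_BIT):
            carrier = math.sin(2 * math.pi * CARRIER_HZ * n / SAMPLE_RATE)
            samples.append(carrier if bit else 0.0)
            n += 1
    return samples


def ook_demodulate(samples, threshold=0.25):
    """Crude envelope detector: mean |amplitude| per bit period, then threshold."""
    bits = []
    for i in range(0, len(samples) - SAMPLES_PER_BIT + 1, SAMPLES_PER_BIT):
        window = samples[i:i + SAMPLES_PER_BIT]
        envelope = sum(abs(s) for s in window) / SAMPLES_PER_BIT
        bits.append(1 if envelope > threshold else 0)
    return bits
```

With a full-scale carrier, a "1" period averages about 0.6 after rectification and a "0" period averages 0, so a fixed threshold well between the two is enough in this noiseless sketch.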

After a little bit of detective work, we arrive at this:

Communication between a device programmer and the neurostimulator happens in three phases. In the initialization phase, a handshake exchanges the model and serial numbers of the device programmer and the neurostimulator. After this, in the reprogramming phase, the device programmer can modify the settings as many times as desired within the same protocol session. The termination phase ends a session, allowing the neurostimulator to switch back to a power-saving mode.
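The three phases can be modelled as a tiny state machine. This is a hypothetical sketch of the session logic as described above, not the device's actual firmware; the class, method, and setting names are invented for illustration. Note that nothing in it checks who the programmer is, which is exactly the gap the attacks below exploit.

```python
from enum import Enum, auto


class Phase(Enum):
    POWER_SAVE = auto()    # no session open; device in power-saving mode
    SESSION_OPEN = auto()  # handshake done; reprogramming allowed


class NeurostimulatorSession:
    """Hypothetical model of the three-phase protocol described in the text."""

    def __init__(self, model, serial):
        self.model = model
        self.serial = serial
        self.phase = Phase.POWER_SAVE
        self.peer = None
        self.settings = {}

    def initialize(self, programmer_model, programmer_serial):
        # Initialization: the handshake exchanges model and serial numbers.
        # No authentication of the programmer takes place here.
        if self.phase is not Phase.POWER_SAVE:
            raise RuntimeError("session already open")
        self.peer = (programmer_model, programmer_serial)
        self.phase = Phase.SESSION_OPEN
        return (self.model, self.serial)

    def reprogram(self, name, value):
        # Reprogramming: any number of setting changes within one session.
        if self.phase is not Phase.SESSION_OPEN:
            raise RuntimeError("no open session")
        self.settings[name] = value

    def terminate(self):
        # Termination: close the session and return to power-saving mode.
        self.phase = Phase.POWER_SAVE
        self.peer = None
```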

Demonstrated attacks

- Replay attacks: we were able to modify any of the neurostimulator settings by intercepting and replaying past transmissions sent by legitimate device programmers.

- Spoofing attacks: we were able to create arbitrary messages and send them to the neurostimulator. This should require knowledge of the neurostimulator’s serial number (which can be obtained via interception). However, the team found an easier way: messages were accepted with an empty neurostimulator SN field! “The impact of this attack is quite large, because an adversary could create a valid message, without a SN, and reuse it for all neurostimulators.”

- Privacy attacks:
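The empty-SN behaviour in the spoofing attack is the kind of result you get from a check that only compares the serial number when one is present. The firmware's real logic isn't known; this hypothetical sketch just reproduces the observed behaviour, with an invented message type and serial number. Even the strict version only stops the "one message fits all devices" shortcut: an attacker who intercepts the SN, or simply replays a captured message, still gets through, because nothing is authenticated.

```python
from dataclasses import dataclass

DEVICE_SERIAL = "SN-0001"  # hypothetical serial number of this neurostimulator


@dataclass
class Message:
    serial: str   # target neurostimulator serial number ("" if omitted)
    command: str


def accepts_vulnerable(msg: Message) -> bool:
    # Only compares the serial when one is present, so an empty field
    # matches every device: the behaviour the spoofing attack exploited.
    return not msg.serial or msg.serial == DEVICE_SERIAL


def accepts_strict(msg: Message) -> bool:
    # Requires an exact match, so an empty field is rejected.
    return msg.serial == DEVICE_SERIAL
```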

I generated this encryption key using signals from my brain…

So far we’ve seen that neurostimulators don’t offer any security. How can we do better? We need to establish a secure communication channel between the device programmer and the neurostimulator, which can be accomplished using a shared session key and symmetric encryption. There are two main challenges here: how to generate the key in the first place, and how to securely transmit the generated session key to the other party.
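To see why a shared session key matters, here is one common way such a key can be used against the two attacks above: an HMAC tag defeats spoofing, and a monotonically increasing counter defeats replay. This is a swapped-in illustration using Python's standard `hmac` module, not the paper's construction. The paper calls for symmetric encryption, and confidentiality would additionally need a cipher such as AES, which the standard library doesn't provide. The frame layout (8-byte counter, payload, 32-byte tag) is an assumption.

```python
import hashlib
import hmac

TAG_LEN = 32  # bytes of HMAC-SHA256 output


def seal(key: bytes, counter: int, payload: bytes) -> bytes:
    """Frame = 8-byte big-endian counter || payload || HMAC-SHA256(counter || payload)."""
    header = counter.to_bytes(8, "big")
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header + payload + tag


class Receiver:
    def __init__(self, key: bytes):
        self.key = key
        self.last_counter = -1  # highest counter accepted so far

    def open(self, frame: bytes):
        """Return the payload, or None if the tag is wrong or the counter is stale."""
        if len(frame) < 8 + TAG_LEN:
            return None
        header, payload, tag = frame[:8], frame[8:-TAG_LEN], frame[-TAG_LEN:]
        expected = hmac.new(self.key, header + payload, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None  # forged or corrupted message
        counter = int.from_bytes(header, "big")
        if counter <= self.last_counter:
            return None  # replayed (or reordered) message
        self.last_counter = counter
        return payload
```

Usage: both sides hold the same key; the programmer increments the counter for every message, and the neurostimulator drops anything whose tag fails or whose counter it has already seen.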

Generating the key on the device programmer is appealing in the sense that we have more powerful hardware available to us. Unfortunately, “most OOB channels that allow to transport a key from the device programmer to the IMD, are shown to be vulnerable to security attacks or require to add extra hardware components in the neurostimulator.” So plan B is to generate the key in the neurostimulator itself.

Unfortunately, IMDs typically use microcontroller-based platforms and lack dedicated true random number generators (TRNGs), making it non-trivial to generate random numbers on these devices… Recently, the use of physiological signals extracted from the patient’s body has been proposed for generating cryptographic keys.

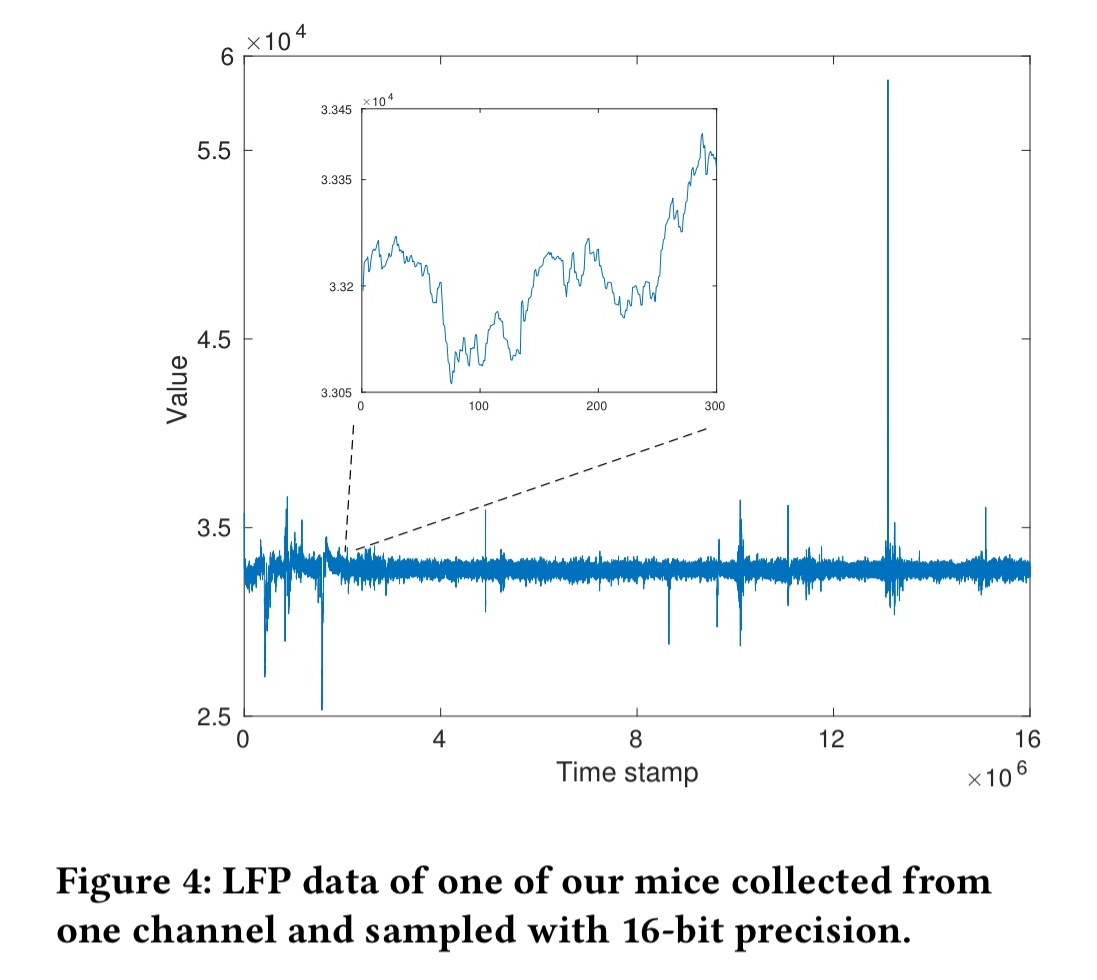

The brain produces a signal called the local field potential (LFP), which refers to the electrical potential in the extracellular space around neurons. Neurostimulators can measure this signal with the existing lead configurations, but it cannot be obtained remotely – you need direct contact with the brain. So that makes it hard to attack! Here’s an example of LFP data obtained from a mouse brain:

The least significant bits of the sampled LFP data turn out to be a good initial source of raw random bits. These are then passed through a two-stage parity filter to increase the entropy density. The resulting bits are relatively uniformly distributed, with an estimated Shannon entropy of around 0.91 bits per bit.
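The pipeline (take LSBs, apply a parity filter twice, estimate entropy) can be sketched as follows. The filter details are an assumption: each stage here XORs non-overlapping pairs of bits, halving the stream, which concentrates entropy if the input bits are only weakly correlated. The entropy estimator is also a stand-in: it computes the empirical Shannon entropy of 8-bit symbols and normalises to bits per bit, and it needs many thousands of bits before the estimate is meaningful. The number of LSBs taken per sample is a hypothetical parameter.

```python
import math
from collections import Counter


def extract_lsbs(samples, num_lsb=2):
    """Take the num_lsb least significant bits of each integer ADC sample."""
    bits = []
    for s in samples:
        for i in range(num_lsb):
            bits.append((s >> i) & 1)
    return bits


def parity_stage(bits, group=2):
    """Assumed filter stage: XOR each non-overlapping group of bits into one bit."""
    out = []
    for i in range(0, len(bits) - group + 1, group):
        p = 0
        for b in bits[i:i + group]:
            p ^= b
        out.append(p)
    return out


def two_stage_parity(bits):
    return parity_stage(parity_stage(bits))


def entropy_per_bit(bits, k=8):
    """Empirical Shannon entropy of k-bit symbols, normalised to bits per bit."""
    symbols = [tuple(bits[i:i + k]) for i in range(0, len(bits) - k + 1, k)]
    n = len(symbols)
    if n == 0:
        return 0.0
    counts = Counter(symbols)
    h = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return h / k
```

Usage: `entropy_per_bit(two_stage_parity(extract_lsbs(lfp_samples)))`, where `lfp_samples` is a hypothetical list of integer LFP readings.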

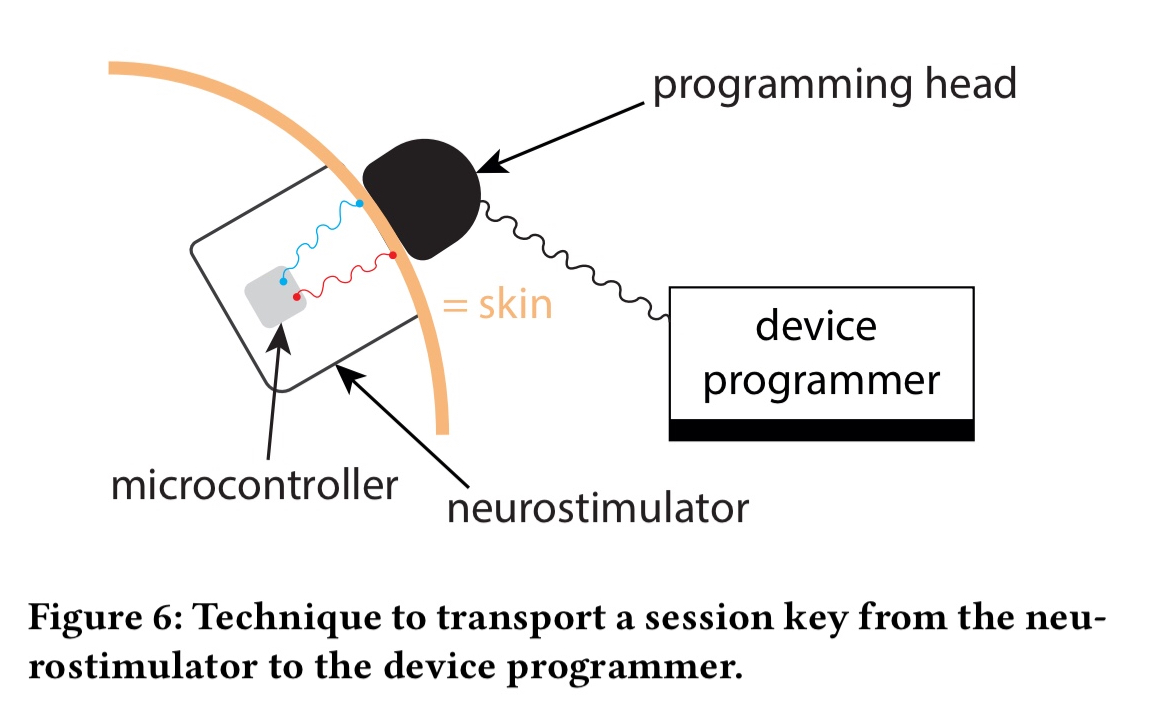

Once we’ve generated a session key from the LFP of the patient’s brain, we need a secure way to transmit it to the programming device:

Our technique for key transportation leverages the fact that both the patient’s skin and the neurostimulator’s case are conductive. We propose to apply an electrical signal (with the key bit embedded in it) to the neurostimulator’s case such that the device programmer can measure this signal by touching the patient’s skin.
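As a toy model of this key transport, the sketch below turns a key into bits, holds the case at a sub-millivolt level for each 1 bit and at 0 mV for each 0 bit, adds Gaussian noise to stand in for the skin path, and has the programmer average each bit period and threshold it. The modulation, the 0.5 mV amplitude, the noise level, and the samples-per-bit figure are all assumptions for illustration; the paper's actual signal design isn't described here.

```python
import random


def key_to_bits(key: bytes):
    """Most significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in key for i in range(8)]


def bits_to_key(bits):
    out = bytearray()
    for i in range(0, len(bits), 8):
        byte = 0
        for b in bits[i:i + 8]:
            byte = (byte << 1) | b
        out.append(byte)
    return bytes(out)


def transmit(bits, amplitude_mv=0.5, samples_per_bit=20):
    """Neurostimulator side (assumed): case at amplitude_mv for a 1, 0 mV for a 0."""
    return [amplitude_mv * b for b in bits for _ in range(samples_per_bit)]


def skin_channel(signal, noise_mv=0.1, seed=0):
    """Toy channel: additive Gaussian noise at the point of skin contact."""
    rng = random.Random(seed)
    return [s + rng.gauss(0.0, noise_mv) for s in signal]


def receive(samples, amplitude_mv=0.5, samples_per_bit=20):
    """Programmer side: average each bit period, threshold at half the amplitude."""
    bits = []
    for i in range(0, len(samples), samples_per_bit):
        window = samples[i:i + samples_per_bit]
        bits.append(1 if sum(window) / len(window) > amplitude_mv / 2 else 0)
    return bits
```

Averaging over a whole bit period is what lets a very weak signal be recovered on contact: it trades data rate for noise tolerance.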

To prevent eavesdropping attacks, a low transmission power can be used. With direct contact, the signal can still be received at an amplitude of less than 1 mV, but even at a distance of a few centimetres, eavesdropping is not possible. This comes at the cost of a lower data rate, but that’s not an issue for this use case. In fact, requiring skin contact for a few seconds (currently about 1.5 seconds) is an advantage, because it’s assumed a patient would notice an adversary contacting their skin in this way. (Attacks such as hacking a smart watch worn by the patient are currently out of scope.)
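A quick back-of-the-envelope check shows why the low data rate doesn't matter. Assuming a 128-bit session key (the key length is an assumption here, not a figure from the text) and that the whole contact time of about 1.5 seconds is spent sending it:

```latex
R \approx \frac{128\ \text{bits}}{1.5\ \text{s}} \approx 85\ \text{bit/s}
```

A rate well under 100 bit/s is tiny compared with the 175 kHz RF link, but it is plenty for a one-off key exchange.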
