My VM is lighter (and safer) than your container Manco et al., SOSP’17

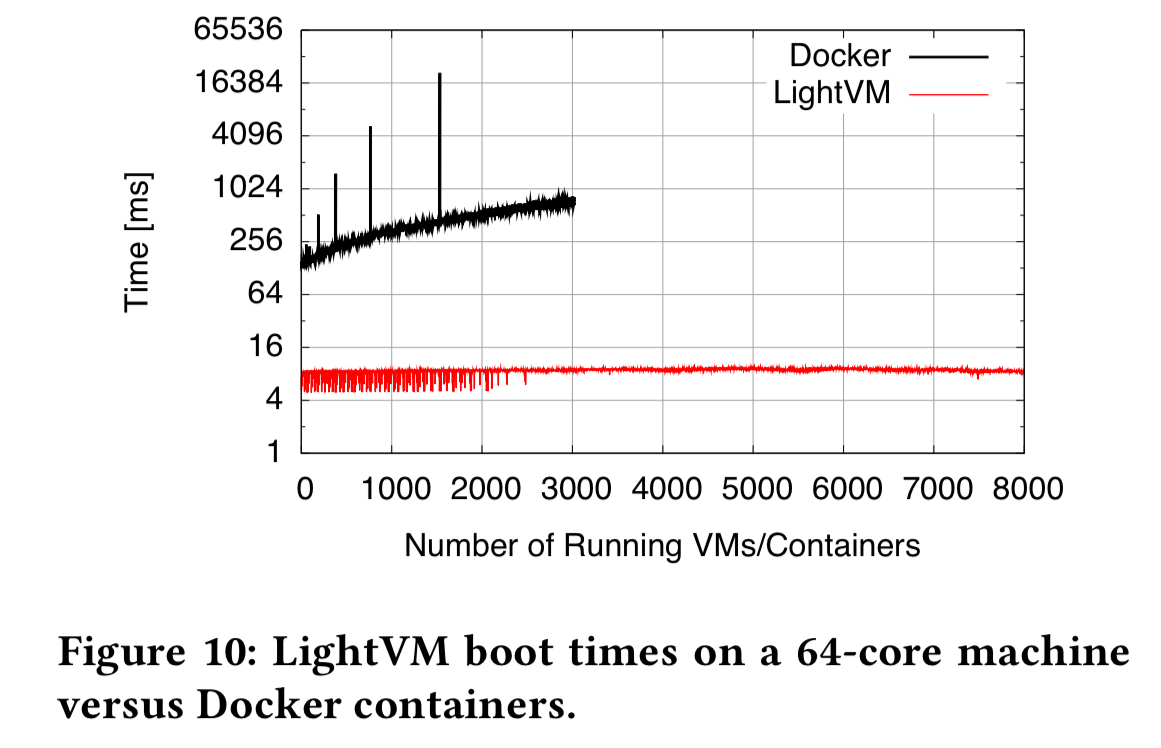

Can we have the improved isolation of VMs, with the efficiency of containers? In today’s paper choice, the authors investigate the boundaries of Xen-based VM performance. They find and eliminate bottlenecks when launching large numbers of lightweight VMs (both unikernels and minimal Linux VMs). The resulting system is called LightVM, and with a minimal unikernel image it’s possible to boot a VM in 4ms. For comparison, fork/exec on Linux takes approximately 1ms. On the same system, Docker containers start in about 150ms.

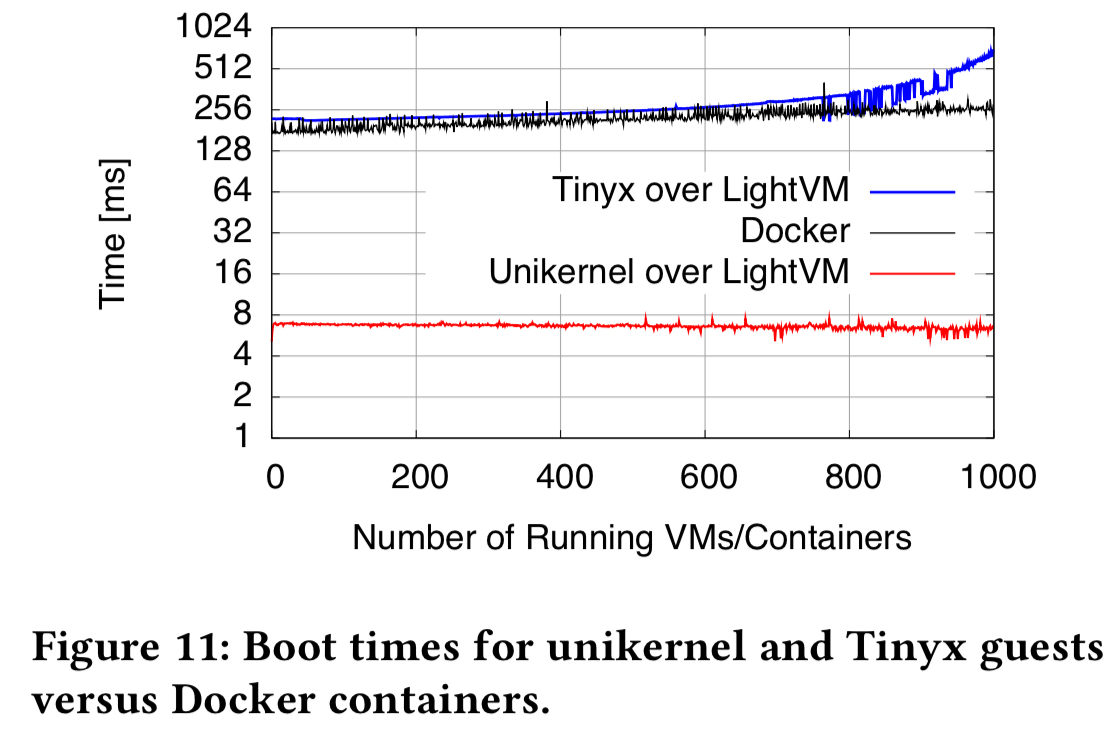

These results are obtained when the LightVM guest is a unikernel. You’re probably only going to create a unikernel in specialised cases. (One interesting such case in the paper is a Micropython-based unikernel that can be used to support serverless function execution.) The authors also create an automated build system called Tinyx for creating minimalistic Linux VM images targeted at running a single application. If we look at boot times for a Tinyx VM as compared to Docker, the performance is very close up to about 250 VMs/containers per core.

Beyond that point, Docker starts to edge it, since even the idle minimal Linux distribution created by Tinyx does run some occasional background tasks.

How does Xen scale with number of VMs, and where are the bottlenecks?

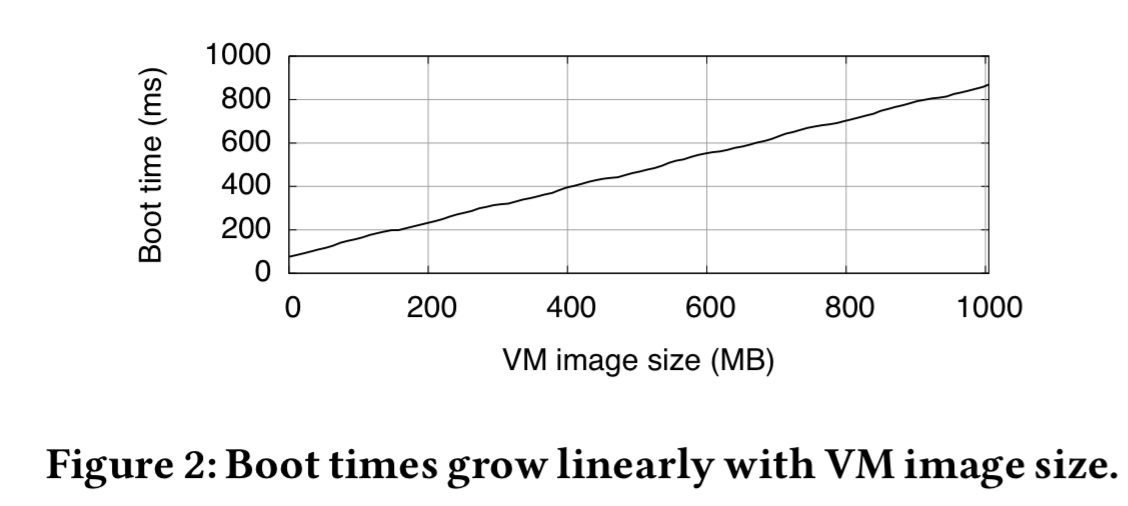

As the following chart shows, the biggest single factor limiting the scalability and performance of virtualisation is the size of the guest VMs. To produce that chart, a unikernel VM was booted from ramdisk, with varying sizes of binary objects injected into the uncompressed image file. So all the effects are due to image size.

So if we want fast booting, we know that image size is going to matter. We’ve looked at unikernels on The Morning Paper before, and they give you the smallest possible guest image. In this paper, the authors use Mini-OS to create a variety of unikernels, including the ‘daytime’ unikernel implementing a TCP service that returns the current time. This is 480KB uncompressed, and runs in 3.6MB of RAM. This unikernel is used to test the lower bound of memory consumption for possible VMs.

Making your own unikernel image based on Mini-OS is probably more work than many people are prepared to do though, so the authors also created Tinyx.

Tinyx is an automated build system that creates minimalistic Linux VM images targeted at running a single application. The tool builds, in essence, a VM consisting of a minimalistic, Linux-based distribution along with an optimized Linux kernel. It provides a middle point between a highly specialized unikernel, which has the best performance but requires porting of applications to a minimalistic OS, and a full-fledged general-purpose OS VM that supports a large number of applications out of the box but incurs performance overheads.

Tinyx creates kernel images that are half the size of typical Debian kernels, and have significantly smaller runtime memory usage (1.6MB for Tinyx vs 8MB for Debian).

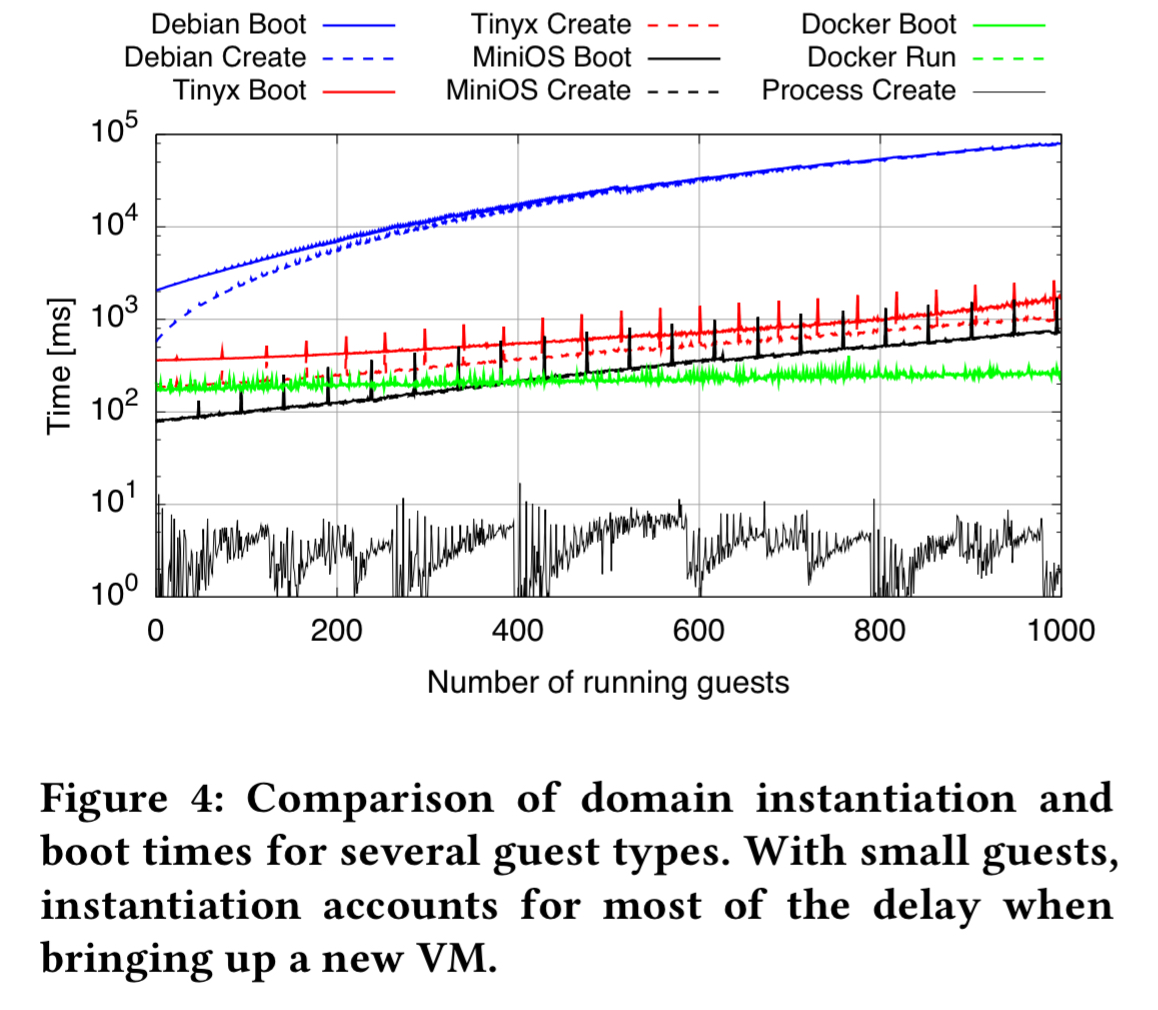

Using the small VM images thus obtained, we can probe the behaviour of Xen itself when launching lots of VMs. When launching 1000 guests, here are the boot and create times for Debian (minimal install), Tinyx, and Mini-OS (unikernel), and for comparison on the same hardware, Docker containers and simple process creation.

As we keep creating VMs, the creation time increases noticeably (note the logarithmic scale): it takes 42s, 10s and 700ms to create the thousandth Debian, Tinyx, and unikernel guest, respectively.

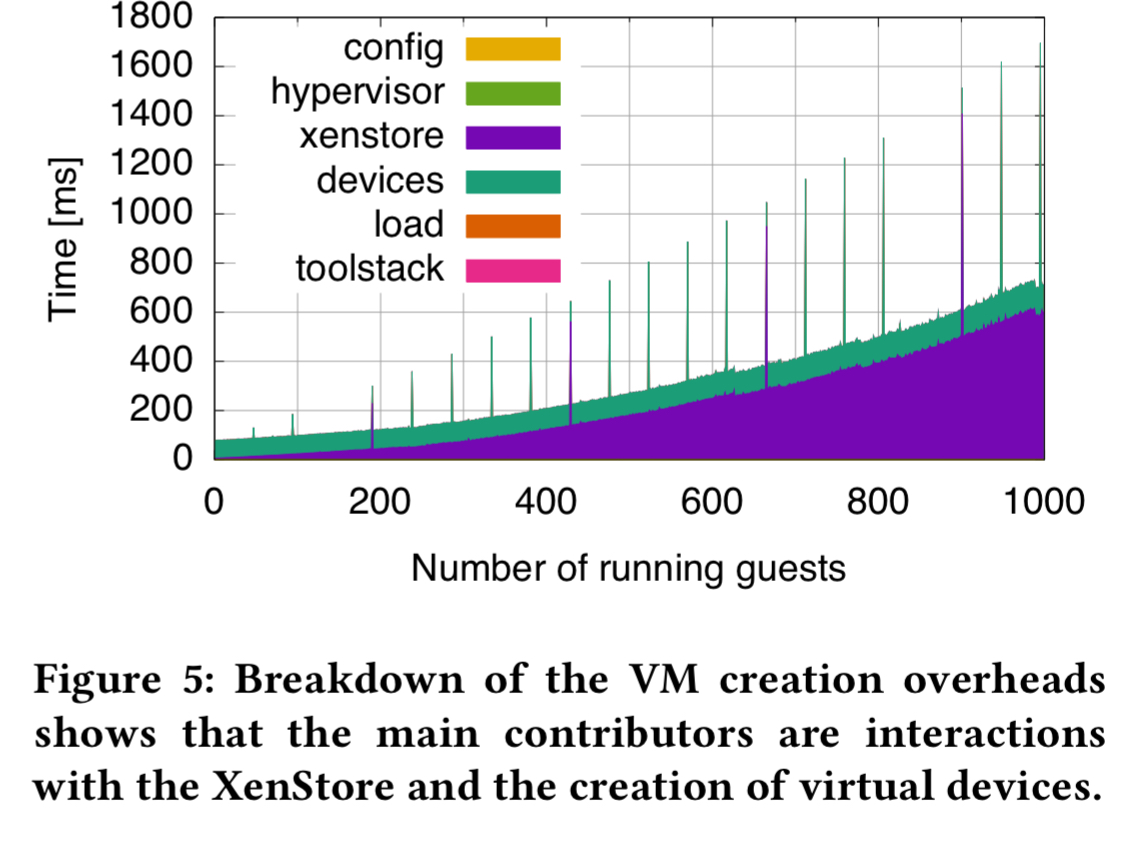

As the size of the VM decreases, the creation time is responsible for ever larger portions of the overall time taken to get to availability. To understand where all the time was going, the team instrumented Xen to reveal this picture:

XenStore interaction and device creation dominate. Of these, the device creation overhead is fairly constant, but the XenStore overhead grows superlinearly.
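To get a feel for why a cost that depends on the number of existing guests hurts so much at scale, here is a toy model in Python. It is not a measurement of Xen: `device_ms` and `store_ms_per_guest` are made-up constants, and the assumption that each creation does store work proportional to the number of guests already running is a deliberate simplification. Even this mild linear dependency makes the total creation time grow quadratically, and the measured XenStore overhead is reported as growing faster than that.

```python
def creation_time_ms(existing_guests, device_ms=5.0, store_ms_per_guest=0.01):
    """Toy cost of creating one more guest.

    device_ms: constant device-creation cost (hypothetical value).
    store_ms_per_guest: store cost that scales with the number of guests
    already running (hypothetical value, illustrative assumption).
    """
    return device_ms + store_ms_per_guest * existing_guests


def total_time_ms(n_guests, **kwargs):
    """Total time to create n_guests one after another."""
    return sum(creation_time_ms(i, **kwargs) for i in range(n_guests))


if __name__ == "__main__":
    # Columns: guest count, cost of the last creation, cost of all creations.
    for n in (10, 100, 1000):
        print(n, round(creation_time_ms(n - 1), 2), round(total_time_ms(n), 1))
```

In this model, doubling the number of guests more than doubles the total time, which is the shape of problem that motivates removing the centralized store from the creation path.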

The design of LightVM

Our target is to achieve VM boot times comparable to process startup times. Xen has not been engineered for this objective, as the results in the previous section show, and the root of these problems is deeper than just inefficient code. For instance, one fundamental problem with the XenStore is its centralized, filesystem-like API which is simply too slow for use during VM creation and boot, requiring tens of interrupts and privilege domain crossings.

I bet it was hard to conceive of anyone launching 1000 guest VMs when that design was first created!

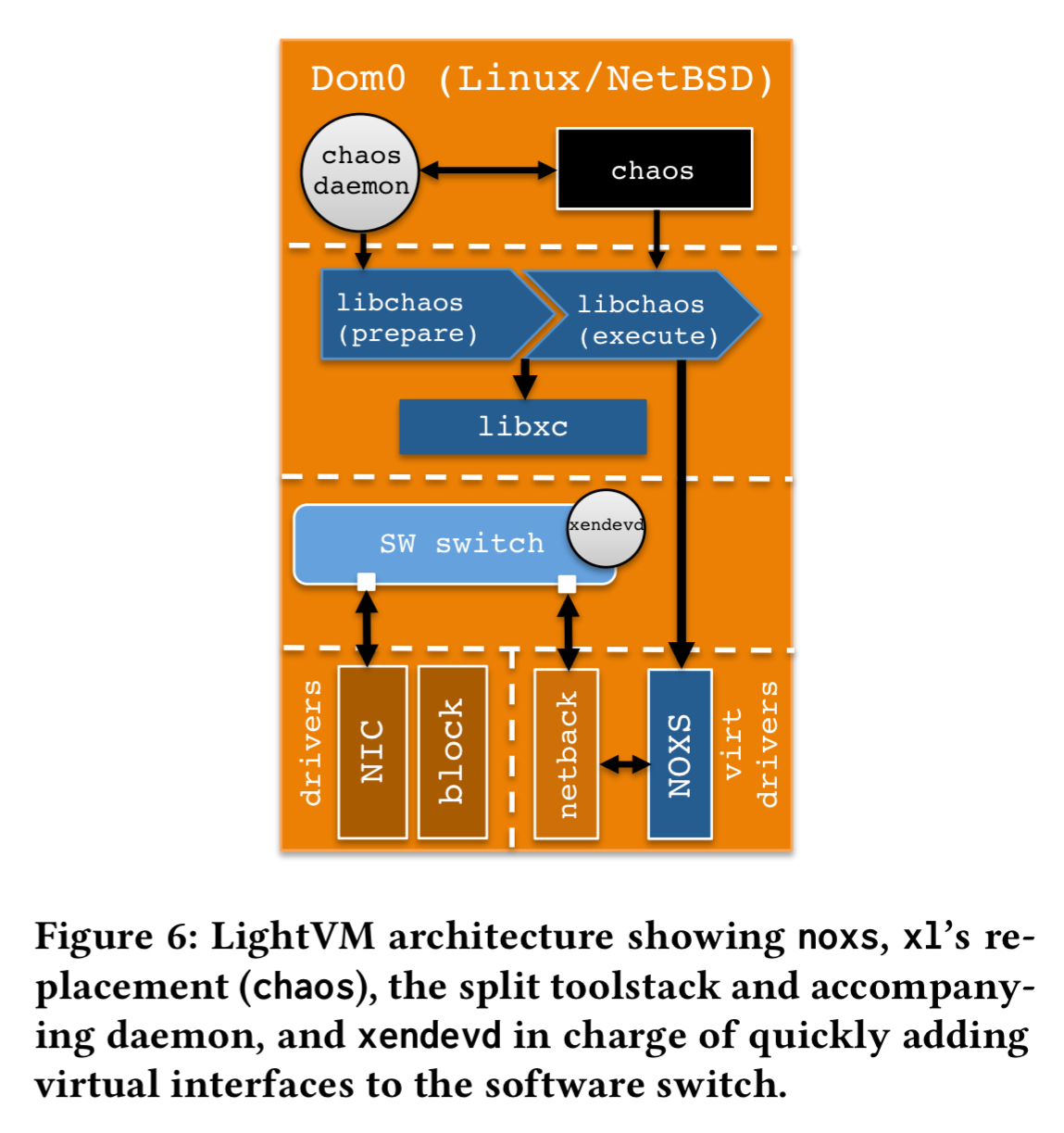

LightVM redesigns the Xen control plane with a lean driver called noxs (for ‘no XenStore’) that replaces the XenStore and allows direct communication between front-end and back-end drivers via shared memory.

LightVM also keeps on hand a pool of pre-prepared VM shells, through which all the processing common to all VMs is done in the background. When a VM creation command is issued, a suitable shell fitting the VM requirements is taken from the pool and only the final initialisation steps such as loading the kernel image into memory and finalising device initialisation need to be done.
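The pooling idea can be sketched in a few lines of Python. This is only an illustration of the pattern, not LightVM's code: the `VMShell` and `ShellPool` names, the choice to key the pool by memory size, and the fallback when the pool is empty are all assumptions made for the example.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class VMShell:
    """A pre-prepared VM that has not yet been specialised (hypothetical)."""
    memory_mb: int
    kernel: Optional[str] = None
    running: bool = False


class ShellPool:
    """Keeps idle shells on hand, grouped by memory size (an assumption)."""

    def __init__(self):
        self._shells = defaultdict(deque)  # memory_mb -> idle shells

    def prepare(self, memory_mb, count=1):
        """Background step: do the work common to all VMs ahead of time."""
        for _ in range(count):
            self._shells[memory_mb].append(VMShell(memory_mb))

    def available(self, memory_mb):
        return len(self._shells[memory_mb])

    def create_vm(self, memory_mb, kernel):
        """On-demand step: take a matching shell and finish initialisation."""
        idle = self._shells[memory_mb]
        # Build a shell on the spot if no suitable one is waiting.
        shell = idle.popleft() if idle else VMShell(memory_mb)
        shell.kernel = kernel   # stands in for loading the kernel image
        shell.running = True    # stands in for finalising device initialisation
        return shell
```

A caller would run `pool.prepare(8, count=100)` in the background and later call `pool.create_vm(8, "daytime")`, so that only the last two steps sit on the critical path of a creation request.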

Device creation in standard Xen ends up calling bash scripts, which is a slow process. LightVM replaces this with a binary daemon that executes a pre-defined setup with no forking or bash scripts.
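To illustrate why avoiding a fork per device matters, the following Python sketch compares launching an external interpreter for each device with calling a function in the same process. Everything here is a stand-in: the child Python interpreter plays the role of a bash script, the device names are invented, and the timings will vary by machine, so treat it as a rough demonstration rather than a benchmark of Xen.

```python
import subprocess
import sys
import time


def setup_device_in_process(name):
    """Stand-in for a daemon running a pre-defined setup with no fork."""
    return f"{name}: configured"


def setup_device_via_script(name):
    """Stand-in for launching an external script for each device.

    A child Python interpreter replaces the bash script here; its only
    purpose is to incur the cost of creating a new process.
    """
    out = subprocess.run(
        [sys.executable, "-c",
         "import sys; print(sys.argv[1] + ': configured')", name],
        capture_output=True, text=True, check=True,
    )
    return out.stdout.strip()


def time_it(fn, names):
    """Return (elapsed seconds, results) for calling fn on every name."""
    start = time.perf_counter()
    results = [fn(n) for n in names]
    return time.perf_counter() - start, results


if __name__ == "__main__":
    names = [f"vif{i}" for i in range(5)]  # hypothetical device names
    t_script, _ = time_it(setup_device_via_script, names)
    t_daemon, _ = time_it(setup_device_in_process, names)
    print(f"external script: {t_script * 1000:.1f} ms")
    print(f"in-process:      {t_daemon * 1000:.3f} ms")
```

Both paths produce the same result, but the external path pays for process creation and interpreter startup on every call, which adds up quickly when each VM needs several devices.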

Performance

We saw the boot times for LightVM with a variety of images at the start of this post. Furthermore, LightVM can save a VM in around 30ms, and restore it in 20ms. Standard Xen needs 128ms and 550ms respectively.

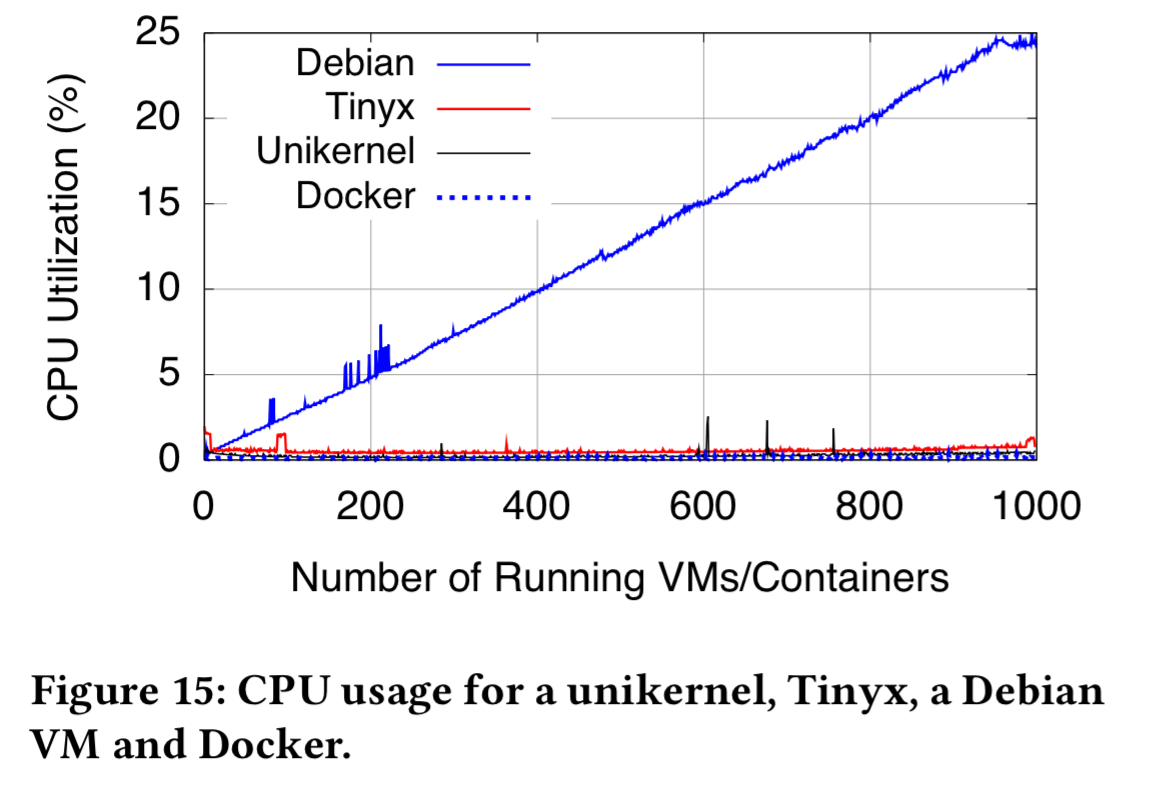

Unikernel memory usage is fairly close to Docker containers. Tinyx needs more, but only 22GB more across 1000 guests. That’s a small fraction of the RAM of current servers.
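A quick back-of-the-envelope check of that claim, written as Python so the arithmetic is explicit. The 22GB and 1000-guest figures come from the text above; the 256GB server size is a hypothetical value chosen for illustration, not a figure from the paper.

```python
def extra_memory_per_guest_mb(extra_total_gb, guests):
    """Average extra memory per guest in MB, taking 1GB = 1024MB."""
    return extra_total_gb * 1024 / guests


def fraction_of_server_ram(extra_total_gb, server_ram_gb):
    """Share of a server's RAM taken up by the extra memory."""
    return extra_total_gb / server_ram_gb


if __name__ == "__main__":
    per_guest = extra_memory_per_guest_mb(22, 1000)
    share = fraction_of_server_ram(22, 256)  # 256GB is a hypothetical server
    print(f"{per_guest:.1f} MB extra per guest")   # prints "22.5 MB extra per guest"
    print(f"{share:.1%} of a 256GB server")        # prints "8.6% of a 256GB server"
```

So the Tinyx overhead works out to roughly 22MB per guest, which is modest next to the isolation gained.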

CPU usage for VMs can also be on a par with containers, so long as the VMs are trimmed to include only the necessary functionality.

Use cases

The authors present four different use cases where LightVM + lightweight VMs can shine.

In all the following scenarios, using containers would help performance but weaken isolation, while using full-blown VMs would provide the same isolation as lightweight VMs, but with poorer performance.

- Personal firewalls per mobile user, running in mobile gateways at or near cellular base stations (mobile edge computing – MEC). Here a ClickOS unikernel image is used, and 8000 firewalls can be run on a 64-core AMD machine with 10ms boot times. A single machine running LightVM at the edge in this way can run personalized firewalls for all users in a cell without becoming a bottleneck.

- Just-in-time service instantiation in mobile edge computing (similar to JITSU).

- High-density TLS termination at CDNs, which requires the long-term secret key of the content provider. Hence strong isolation between different content providers’ proxies is desirable.

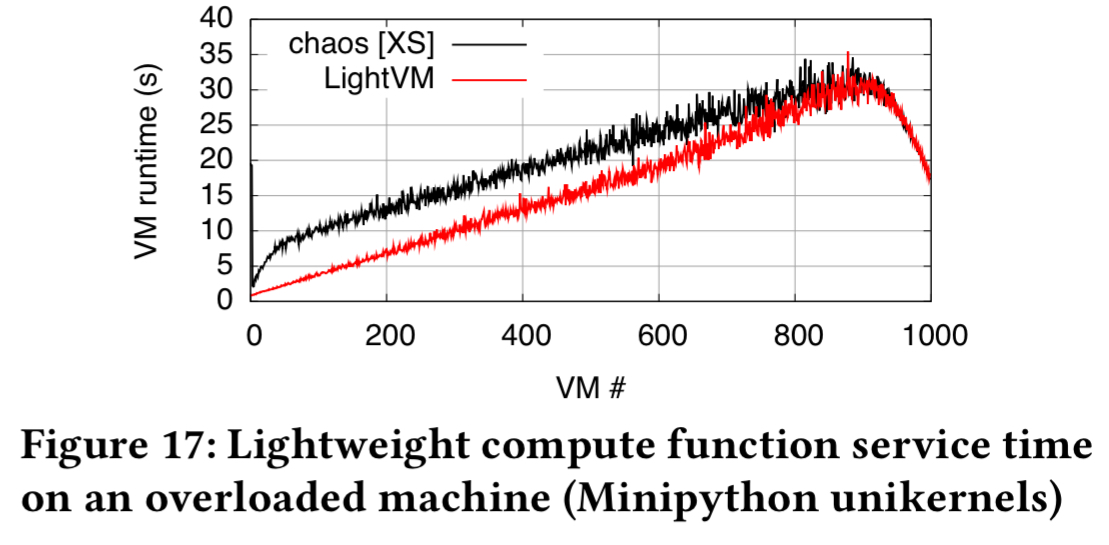

- Creation of a lightweight compute service such as AWS Lambda. For this use case they use a Micropython-based unikernel to run computations written in Python. It takes about 1.3ms to boot and start executing a function. When the system is deliberately stressed with more requests arriving than the test machine can cope with, service time goes up fairly linearly until about 800 VMs.

The use cases we presented show that there is a real need for lightweight virtualization, and that it is possible to simultaneously achieve both good isolation and performance on par or better than containers.

A recent post on the Google Cloud Platform blog, ‘Demystifying container vs VM-based security: security in plaintext’ provides an interesting perspective on container security and isolation, from a company that have been running a container-based infrastructure for a very long time.

Is this open source?

https://github.com/sysml/lightvm

https://github.com/hyperhq/hyperd, https://github.com/hyperhq/runv

It would be nice if we could see Intel Clear Containers added to this comparison.

My FreeBSD jail is lighter (and safer) than your linux container or VM. :)
