Serverless computing: economic and architectural impact Adzic et al., ESEC/FSE’17

Today we have another paper inspired by talks from the GOTO Copenhagen conference, in this case Gojko Adzic’s talk on “Designing for the serverless age.” It’s a case study on how serverless computing changes the shape of the systems that we build, and the (dramatic) impact it can have on the cost of running those systems. There’s also a nice section at the end of the paper summarising some of the current limitations of the serverless approach.

In practice the paper describes experiences building systems with AWS Lambda, but we can still think about serverless more generally. And to do that, we need a definition.

‘Serverless’ refers to a new generation of platform-as-a-service offerings where the infrastructure provider takes responsibility for receiving client requests and responding to them, capacity planning, task scheduling, and operational monitoring. Developers need to worry only about the logic for processing client requests.

Instead of continuously running servers, functions operate as event handlers, and you only pay for CPU time when the functions are actually executing. The economic and architectural implications of serverless are intertwined, each influencing the other.

The serverless impact on system designs

Perhaps the most obvious thing about serverless is the division of the system logic into a collection of independent functions. Since billing is proportional to actual usage, there is no penalty for creating many functions. Contrast this with server-based designs, where you pay per (long-running) instance. In such a world it is hard to justify having a dedicated service instance for infrequent but important tasks (let alone two if you have a primary and fail-over). You have a strong economic incentive to bundle responsibility for many tasks into the same instance.

For serverless architectures, billing is proportional to usage, not reserved capacity. This removes the economic benefit of creating a single service package so different tasks can share the same reserved capacity. The platform itself provides security, fail-over and load-balancing, so all benefits of bundling are obsolete… Without strong economic and operational incentives for bundling, serverless platforms open up an opportunity for application developers to create smaller, better isolated modules, that can more easily be maintained and replaced.

In other words, with serverless we have for the first time a platform and billing model which actually works with the desire to split a system up into independently delivered units, rather than fighting against that trend at some level. And of course, we get all the benefits of fault isolation and independent scaling that we hoped for in the first place before resorting to bundling.
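To make the bundling argument concrete, here is a minimal cost-model sketch. The prices, the 30-day month and the "primary plus fail-over per service" assumption are all hypothetical; the point is only the shape of the two formulas. With dedicated instances, cost grows with the *number* of services; with pay-per-use billing, it depends only on total busy time, so splitting one service into several functions costs nothing extra.

```python
from dataclasses import dataclass

SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month


@dataclass
class Task:
    name: str
    busy_seconds_per_month: float  # compute time the task actually uses


def dedicated_instance_cost(tasks: list[Task], price_per_instance_second: float,
                            instances_per_task: int = 2) -> float:
    """Server-based model (hypothetical): each task gets its own primary + fail-over
    instance, billed around the clock whether or not it is busy."""
    return len(tasks) * instances_per_task * SECONDS_PER_MONTH * price_per_instance_second


def pay_per_use_cost(tasks: list[Task], price_per_busy_second: float) -> float:
    """Serverless model: cost depends only on total busy time, not on how many functions exist."""
    return sum(t.busy_seconds_per_month for t in tasks) * price_per_busy_second


# One bundled service versus the same work split into three hypothetical functions.
bundled = [Task("all-in-one", 3000.0)]
split = [Task("convert", 1000.0), Task("export", 1000.0), Task("notify", 1000.0)]
```

Comparing `pay_per_use_cost(bundled, p)` with `pay_per_use_cost(split, p)` gives the same number for any rate `p`, while `dedicated_instance_cost(split, ...)` is three times `dedicated_instance_cost(bundled, ...)`. That asymmetry is the economic pressure towards bundling that pay-per-use billing removes.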

Authorisation is also different in a serverless world:

Applications based on serverless designs have to apply distributed, request-level authorization. A request to a Lambda function is equally untrusted whether it comes from a client application indirectly or from another Lambda function.
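A minimal sketch of what "equally untrusted" means in code: every handler verifies every request itself, regardless of who the caller is. The HMAC-signed request format, the shared `SIGNING_KEY` and the `caller`/`action`/`signature` fields are hypothetical stand-ins; a real Lambda deployment would lean on the platform's own mechanisms (IAM-signed requests, Cognito identities and so on) rather than a hand-rolled scheme.

```python
import hashlib
import hmac

# Hypothetical shared key, for illustration only. Real systems would use
# platform-managed credentials instead of a hard-coded secret.
SIGNING_KEY = b"demo-key-not-for-production"


def sign(caller: str, action: str) -> str:
    """Produce a signature binding a caller identity to a specific action."""
    message = f"{caller}:{action}".encode()
    return hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()


def is_authorized(request: dict) -> bool:
    """Recompute the signature and compare in constant time."""
    expected = sign(request.get("caller", ""), request.get("action", ""))
    return hmac.compare_digest(expected, request.get("signature", ""))


def handler(event: dict, context=None) -> dict:
    # No gatekeeper in front of us: the check runs on every invocation,
    # whether the event came from a client app or from another function.
    if not is_authorized(event):
        return {"statusCode": 403}
    return {"statusCode": 200, "body": f"{event['action']} done"}
```

For example, a request signed for `("mobile-app", "save-map")` is accepted, but the same signature presented with a different `caller` is rejected, even if that caller is another trusted-looking function.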

With no gatekeeper server process, each request to traditional back-end services (storage, database and so on) needs to be separately authorised. But once you’ve put in place the necessary authorisation, this also opens up new opportunities:

This means that it is perfectly acceptable, even expected, to allow client applications to directly access resources traditionally considered ‘back-end’. AWS provides several distributed authentication and authorization mechanisms to support those connections. This is a major change from the traditional client/server model, where direct access from clients to back-end resources, such as storage, is a major security risk.

(There’s a trade-off here: bear in mind that you’ll also have much less encapsulation of back-end design decisions.)

Here’s an example: instead of capturing end-user analytics in the client and sending them to a server-side application for processing and storage, AWS Cognito can be used to allow end-user devices to connect directly to Amazon Mobile Analytics. Putting a Lambda function in between would do nothing to improve security, but it would add latency and cost!

The platform’s requirement for distributed request-level authorization causes us to remove components from our design, which would traditionally be required to perform the role of gatekeeper, but here only make our system more complex and costly to operate.

Finally, each deployment of a Lambda function is assigned a unique numeric identifier. Since once again we don’t need to have long running instances around for each version, but only pay for what actually executes, there is no penalty for having multiple versions of a service available concurrently. Techniques such as A/B testing and gradual releases of features can be exploited to the full.
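Because old and new versions can run side by side at no extra standing cost, gradual releases reduce to a routing decision. The sketch below is a hypothetical client-side router, not Lambda's own API (Lambda aliases can also split traffic between versions, but this code does not call them). It hashes a user id into a bucket so each user consistently sees the same version, and sends a configurable share of users to the new one.

```python
import hashlib


def pick_version(user_id: str, weights: dict[str, float]) -> str:
    """Deterministically assign a user to a version according to traffic weights.

    `weights` maps version identifiers to traffic shares that should sum to 1.
    """
    digest = hashlib.sha256(user_id.encode()).digest()
    # Use 6 bytes so the division is exact and the bucket stays strictly below 1.0.
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    cumulative = 0.0
    for version, weight in sorted(weights.items()):
        cumulative += weight
        if bucket < cumulative:
            return version
    # Guard against floating-point rounding when the weights sum to just under 1.
    return sorted(weights)[-1]


# Gradual release: roughly 10% of users get version "2", the rest stay on "1".
rollout = {"1": 0.9, "2": 0.1}
```

Hashing rather than random choice keeps the assignment sticky, which matters for A/B tests where a user should not flip between variants on every request.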

How serverless can help to slash your AWS bill

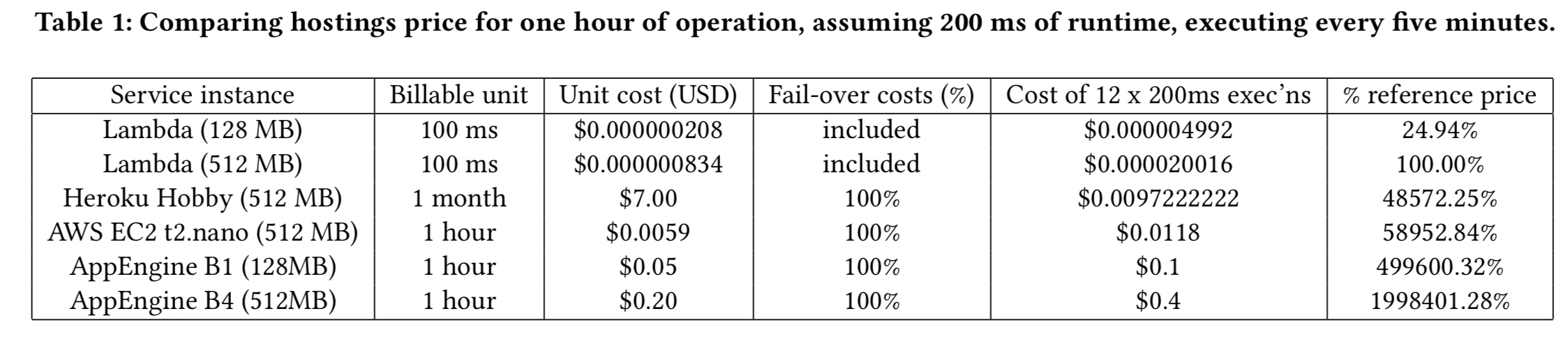

Not paying for idle capacity is one of the most obvious wins. For example, a 200ms service task that needs to run every five minutes would need a dedicated service instance (or two!) in a traditional model, but with Lambda we will only be billed for 200ms out of every five minutes. Depending on the instance types you pick, the potential savings range from 99.8% to 99.95%!
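The arithmetic behind those percentages starts with how little of the wall-clock time is actually billed. This sketch assumes a 30-day month and computes only the billed fraction; it deliberately does not model the per-second prices of specific instance types versus Lambda, which is why the paper's savings figures sit in a range slightly below the raw idle percentage.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month


def billed_fraction(task_seconds: float, interval_seconds: float) -> float:
    """Share of wall-clock time that is billed when paying only for execution."""
    return task_seconds / interval_seconds


def monthly_billed_seconds(task_seconds: float, interval_seconds: float) -> float:
    """Total execution time billed per month for a task run at a fixed interval."""
    return SECONDS_PER_MONTH / interval_seconds * task_seconds


# A 200 ms task triggered every five minutes.
fraction = billed_fraction(0.2, 300)
print(f"billed: {fraction:.4%} of the time, idle: {1 - fraction:.2%}")
print(f"billed seconds per month: {monthly_billed_seconds(0.2, 300):.0f}")
```

An always-on instance is paid for all of those idle seconds; Lambda charges a higher rate per second of execution, but only for about 1,728 seconds a month in this scenario.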

Designs that cut out the middle-man and allow clients direct access to back-end services also remove the need to pay for long-running gatekeeper processes.

The financial benefits of direct access to back-end services go deeper than this though: you can often move compute from back-end Lambdas (where you pay for it) into other AWS Services (where you may not, at least not directly).

Given the fact that different AWS services are billed according to different utilisation metrics, it is possible to significantly optimise costs by letting client applications directly connect to resources… Lambda costs increase in proportion to maximum reserved memory and processing time, so any service that does not charge for processing time is a good candidate for such cost shifting.
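A rough sketch of the Lambda side of that comparison: compute cost scales with reserved memory multiplied by execution time (GB-seconds), plus a per-request fee. The rates are parameters on purpose; plug in current figures from the AWS pricing page rather than trusting any hard-coded value. The sketch also ignores duration rounding and the free tier.

```python
def lambda_monthly_cost(invocations: int, avg_duration_s: float, memory_mb: int,
                        price_per_gb_second: float,
                        price_per_million_requests: float) -> float:
    """Approximate monthly Lambda cost: memory x time, plus a per-request charge.

    Simplified: ignores duration rounding, the free tier and data transfer.
    """
    gb_seconds = invocations * avg_duration_s * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return compute_cost + request_cost
```

Doubling `memory_mb` or `avg_duration_s` doubles the compute term. A Lambda that merely proxies events to another service pays that term on every event, which is exactly what cost shifting avoids: if the downstream service charges per event rather than per unit of processing time, letting clients write to it directly removes the compute term altogether.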

In the Amazon Mobile Analytics example we looked at earlier, the first 100 million events each month are free, and after that the service charges $1 per million recorded events. A Lambda function can be used just to authorize write-only access to the analytics service and then get out of the way. A similar example is allowing clients to upload directly to S3 after authorisation (and using a trigger to process the file once the upload is complete).
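The trigger half of the S3 pattern can be sketched as a Python Lambda handler that reads the bucket and object key from the S3 event notification. Object keys arrive URL-encoded in these events, hence `unquote_plus`. The `process_upload` function is a hypothetical placeholder for whatever work the application does (format conversion, thumbnailing and so on), and the bucket name in the example below is made up.

```python
from urllib.parse import unquote_plus


def process_upload(bucket: str, key: str) -> str:
    # Hypothetical placeholder for the real post-upload work.
    return f"processed s3://{bucket}/{key}"


def lambda_handler(event: dict, context=None) -> list[str]:
    """Invoked after a client has uploaded directly to S3.

    The function never handles the upload bytes in transit; it only
    reacts once the object exists.
    """
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys in S3 event notifications are URL-encoded (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append(process_upload(bucket, key))
    return results
```

Locally, you can exercise it with a hand-built event such as `{"Records": [{"s3": {"bucket": {"name": "uploads"}, "object": {"key": "maps/my+map.mup"}}}]}`, which yields `["processed s3://uploads/maps/my map.mup"]`.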

Case studies at MindMup and Yubl

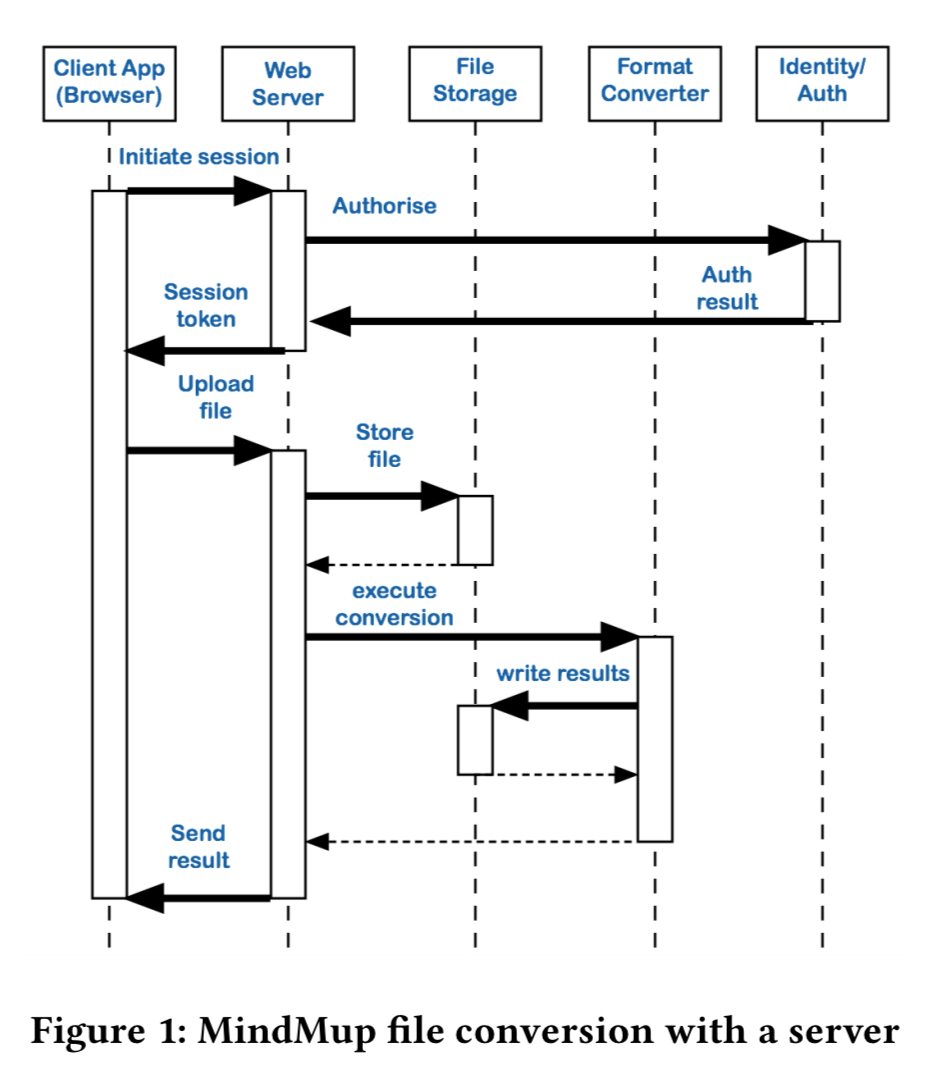

MindMup is a commercial online mind-mapping application. In 2016 it migrated from Heroku to AWS Lambda. The Heroku server-centric design looked like this:

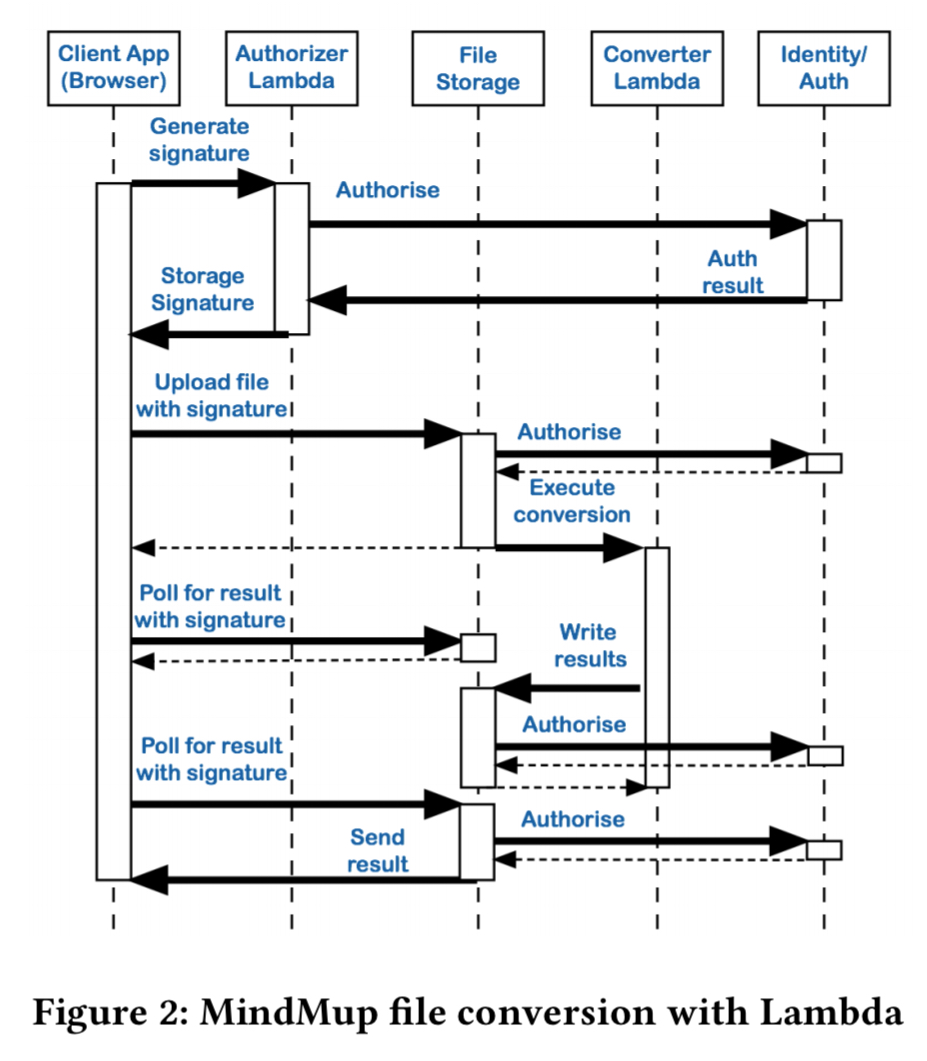

Moving to Lambda enabled the removal of a lot of glue code in the format conversion functionality, and allowed the various services to be unbundled. The after picture looks like this:

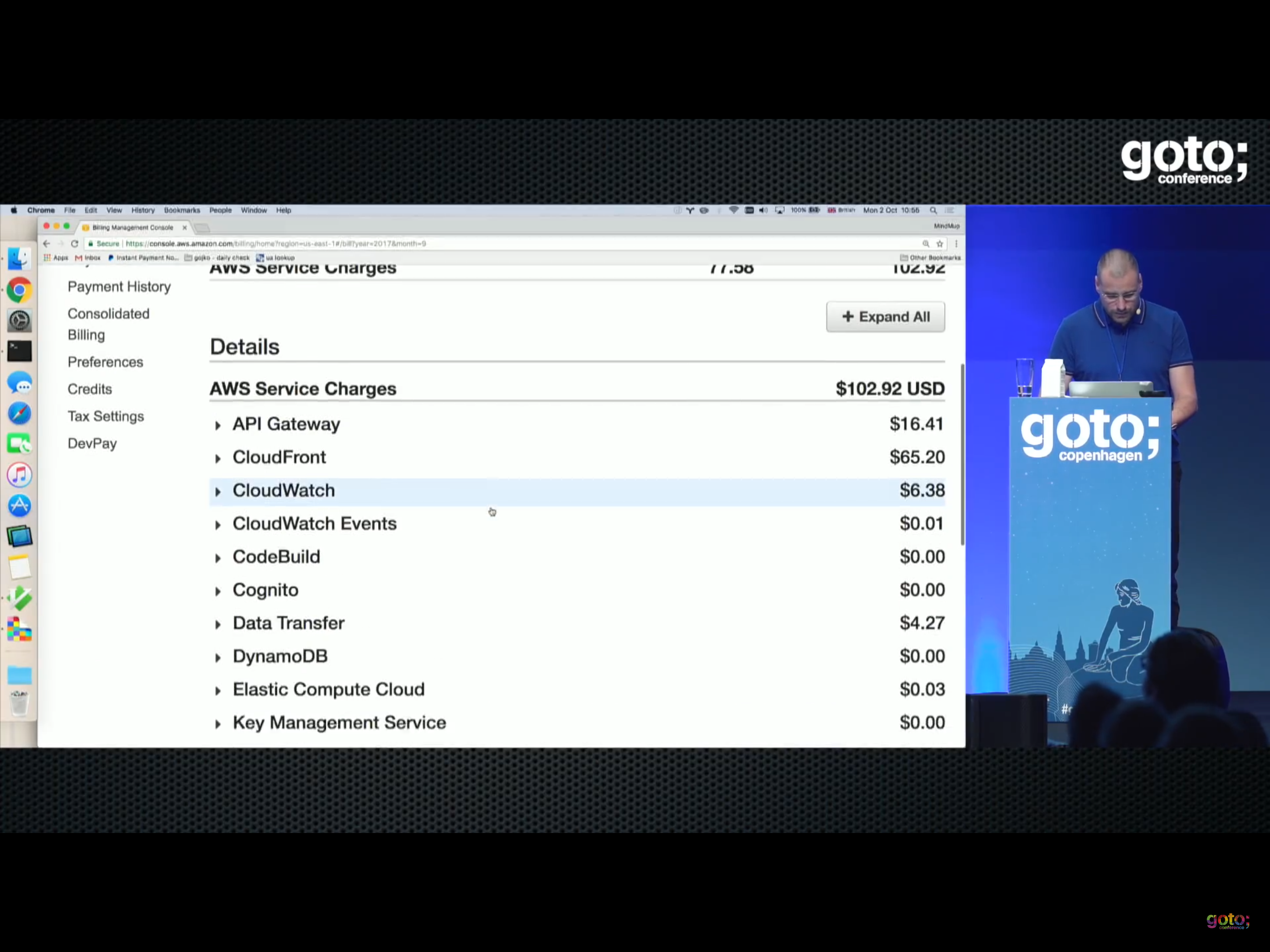

Between February 2016 (running on Heroku) and February 2017 (running on Lambda), the number of active users supported by the platform grew by more than 50%, but the hosting costs dropped by slightly less than 50%. (In his GOTO conference talk, the first author shared details of the operating costs for MindMup, and it is on the order of $100/month to support 400,000 active users. The Lambda part of that is just $0.53!).

Yubl was a London-based social networking company (sadly, it has since gone out of business). Before it closed down, it had migrated large parts of its backend from a collection of Node.js instances on EC2 to Lambda. The old system was costing around $5000/month; the new system, with around 170 Lambda functions, cost less than $200/month.
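To put the two case studies on a common footing, here is the back-of-the-envelope arithmetic. The MindMup inputs are approximate: users grew by "more than 50%" and costs fell by "slightly less than 50%", so modelling them as ×1.5 and ×0.5 gives only a rough estimate of the per-user change. For Yubl, "less than $200" means the real reduction is at least the figure computed here.

```python
def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (negative means a reduction)."""
    return (after - before) / before


# MindMup (approximate): users grew ~1.5x while hosting costs roughly halved.
mindmup_cost_per_user = relative_change(1.0, 0.5 / 1.5)
# MindMup, from the GOTO talk: on the order of $100/month for 400,000 active users.
mindmup_monthly_cost_per_user = 100 / 400_000
# Yubl: ~$5000/month on EC2 versus under $200/month on Lambda.
yubl_change = relative_change(5000, 200)

print(f"MindMup cost per user: {mindmup_cost_per_user:+.0%}")
print(f"MindMup cost per active user: ${mindmup_monthly_cost_per_user:.5f}/month")
print(f"Yubl hosting bill: {yubl_change:+.0%}")
```

Roughly, MindMup's hosting cost per user fell by about two thirds, to around a fortieth of a cent per active user per month, and Yubl's bill shrank by at least 96%.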

When to use serverless and what to be aware of

… serverless platforms today are useful for important (but not five-nines mission critical) tasks, where high-throughput is key, rather than very low latency, and where individual requests can be completed in a relatively short time window. The economics of hosting such tasks in a serverless environment make it a compelling way to reduce hosting costs significantly, and to speed up time to market for delivery of new features.

Here are six things to be aware of before you dive in:

- There are not yet any uptime or operational service agreements for AWS Lambda

- You have no control over the number of active instances of any function, and creating a new Python or JavaScript function instance seems to take on the order of 1 second. Empirical evidence suggests inactive functions may hang around for about three minutes before being destroyed. This is the area where I have the most concern. We saw recently how critical performance is to your key business metrics. Beyond the start-up latency, you also need to consider the latency introduced by multiple hops, and tail-effects as the number of functions involved in processing a request increases. “At least at the time of writing, it is not possible to guarantee low latency for each request to a service deployed on Lambda.”

- AWS Lambda (at the time the paper was written) is not included in any of the Amazon Compliance Services in Scope data sheets, so certain regulated functions cannot execute directly within Lambda instances. Update: Chris Munns from the AWS Serverless team got in touch to let me know that PCI for Lambda was added on June 13, 2017, and HIPAA coverage was announced in Sept. 2017.

- Lambda function execution time is capped at five minutes (at the time of writing).

- You can’t run a fully simulated Lambda environment on your local machine. Update: Since this paper was originally written, AWS have introduced SAM Local to support local testing. Thanks DJ Boz for the pointer!

- Although the Lambda functions that you write may themselves be fairly portable, the reality is that a Lambda-based application is likely to be heavily tied to the AWS platform, due to all the services it consumes (and things like direct client access).
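The latency caveat in the second point above gets worse as requests fan out across more functions. A simple way to see the tail effect: if each hop independently has some small probability of being slow (for instance, landing on a cold instance), the chance that a request hits at least one slow hop is 1 − (1 − p)ⁿ. The independence assumption and the probabilities used here are hypothetical; the ~1 second cold-start default comes from the observation quoted above.

```python
def prob_any_slow(p_slow: float, hops: int) -> float:
    """Probability that a request touching `hops` functions hits at least one
    slow hop, assuming each hop is independently slow with probability `p_slow`."""
    return 1 - (1 - p_slow) ** hops


def expected_added_latency(p_cold: float, hops: int, cold_start_s: float = 1.0) -> float:
    """Expected extra latency from cold starts over sequential hops
    (linearity of expectation; assumes each cold start adds `cold_start_s`)."""
    return hops * p_cold * cold_start_s


# With a hypothetical 1% chance of a cold start per hop:
for hops in (1, 5, 10):
    print(hops, round(prob_any_slow(0.01, hops), 4))
```

Even at a 1% per-hop rate, a request that passes through ten functions has close to a 10% chance of paying at least one cold-start penalty, which is why the number of hops matters as much as the per-function latency.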