Cloak and dagger: from two permissions to complete control of the UI feedback loop Fratantonio et al., IEEE Security and Privacy 2017

If you’re using Android, then ‘cloak and dagger’ is going to make for scary reading. It’s a perfect storm of an almost undetectable attack that can capture passwords and PINs, and ultimately obtain all permissions (aka, a ‘god app’), that can leave behind almost no trace, and that may not require the user to view and (knowingly) accept even a single permissions dialog. Oh, and there’s no easy fix: “we responsibly disclosed our findings to Google; unfortunately, since these problems are related to design issues, these vulnerabilities are still unaddressed.”

At the core of the attack are two permissions: SYSTEM_ALERT_WINDOW and BIND_ACCESSIBILITY_SERVICE. If an app can gain both of these permissions, it’s game on:

… we demonstrate the devastating capabilities the “cloak and dagger” attacks offer an adversary by showing how to obtain almost complete control over the victim’s device. In this paper, we will demonstrate how to quietly mount practical, context-aware clickjacking attacks, perform (unconstrained) keystroke recording, steal user’s credentials, security PINs, and two factor authentication tokens, and silently install a God-mode app with all permissions enabled.

The SYSTEM_ALERT_WINDOW permission lets an app draw overlays on top of other apps, and is widely used. For example, the Facebook, Twitter, LastPass, and Skype apps all use it, as do about 10% of the top apps on Google Play Store. Fortunately for our attacker, the permission is automatically granted to any app in the official Play Store, and by targeting SDK level 23 or higher, the user will not be shown the list of required permissions at installation time (the idea is to defer asking until runtime when the permission is actually required for the first time). So that’s one permission down, one to go – and the user has no way of knowing.

We note that this behaviour seems to appear to be a deliberate decision by Google, and not an oversight. To the best of our understanding, Google’s rationale behind this decision is that an explicit security prompt would interfere too much with the user experience, especially because it is requested by apps used by hundreds of millions of users.

The BIND_ACCESSIBILITY_SERVICE permission grants an app the ability to discover UI widgets displayed on the screen, query the content of those widgets, and interact with them programmatically. It is intended to help make Android devices more accessible to users with disabilities. Because of the security implications, this permission needs to be manually enabled through a dedicated menu in the Settings app. It can be done in just three clicks, but they have to be the right three clicks, of course. If you have the SYSTEM_ALERT_WINDOW permission though, getting the user to make those three clicks is fairly easy.

The example attack app built by the authors presents a simple tutorial with three buttons: “Start Tutorial”, then “Next” and a final “OK” button. When the OK button is clicked, the app shows a short video that lasts about 20 seconds. Except things are not what they seem. The tutorial app UI is actually an on-top opaque overlay and the “Start Tutorial” button click actually clicks on the accessibility service entry, the “Next” button click actually clicks on the ‘on/off’ switch, while the “OK” button click is clicking on the real OK button from the Settings app (the overlay UI has a carefully crafted hole at this exact point). The SYSTEM_ALERT_WINDOW settings are such that if a click is sent through to the underlying window from an overlay, then the overlay is not told the whereabouts of that click. Traditionally that makes click-jacking attacks difficult because you don’t know where the user clicked – the event reports coordinates (0,0) – and hence you don’t know when to update your overlay (fake) UI in response to a click so that the user remains unsuspecting.

Our technique works by creating a full screen opaque overlay that catches all a user’s clicks, except for the clicks in a very specific position on the screen (the point where the attacker wants the user to click). This is achieved by actually creating multiple overlays so as to form a hole: the overlays around the hole capture all the clicks, while the overlay on the hole is configured to let clicks pass through… since there is only one hole, there is only one way for a user’s click to not reach the malicious app.

In other words, if you see an event with coordinates (0,0), you know that the user clicked in the hole!
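The inference in that last step can be illustrated with a toy model. The sketch below is plain Python, not Android code: `Overlay`, `build_overlays`, `reported_coordinates`, and `clicked_in_hole` are hypothetical names, and the screen size and hole position are made up. It assumes, as described above, that a tap forwarded through the pass-through overlay is reported with coordinates (0,0), while a tap on a capturing overlay is reported with its true position.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Overlay:
    """Axis-aligned rectangle drawn by the (hypothetical) overlay app."""
    left: int
    top: int
    right: int
    bottom: int
    pass_through: bool  # True only for the overlay that covers the hole

    def contains(self, x: int, y: int) -> bool:
        return self.left <= x < self.right and self.top <= y < self.bottom


def build_overlays(width: int, height: int,
                   hole: Tuple[int, int, int, int]) -> List[Overlay]:
    """Four capturing overlays around `hole`, plus one pass-through overlay on it."""
    hl, ht, hr, hb = hole
    return [
        Overlay(0, 0, width, ht, False),       # strip above the hole
        Overlay(0, hb, width, height, False),  # strip below the hole
        Overlay(0, ht, hl, hb, False),         # strip to the left
        Overlay(hr, ht, width, hb, False),     # strip to the right
        Overlay(hl, ht, hr, hb, True),         # the hole itself
    ]


def reported_coordinates(overlays: List[Overlay], x: int, y: int) -> Tuple[int, int]:
    """Coordinates the overlay app learns for a tap at (x, y) in this model."""
    for overlay in overlays:
        if overlay.contains(x, y):
            # Assumption from the text: forwarded taps carry no location.
            return (0, 0) if overlay.pass_through else (x, y)
    raise ValueError("tap is outside the modelled screen")


def clicked_in_hole(reported: Tuple[int, int]) -> bool:
    """With a single hole, a (0, 0) report can only mean the hole was tapped.

    (A genuine tap on the exact corner pixel would look the same in this toy
    model; the sketch ignores that edge case.)
    """
    return reported == (0, 0)


overlays = build_overlays(1080, 1920, hole=(400, 900, 680, 1000))
print(clicked_in_hole(reported_coordinates(overlays, 500, 950)))  # True
print(clicked_in_hole(reported_coordinates(overlays, 10, 10)))    # False
```

The point of the model is the asymmetry: because only one region forwards taps, the absence of location information is itself the signal the overlay needs to advance its fake UI.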

There are four fundamental attack primitives which can be combined in many creative ways, once the two permissions are obtained:

- You can modify what the user sees (as in the click-jacking example above)

- You can know what is currently displayed

- You can inject user input

- You can know what the user inputs and when

Here are 12 example attacks:

- Context-aware clickjacking – we just saw this in action to fool the user into granting the BIND_ACCESSIBILITY_SERVICE permission: overlays are used to lure the user into clicking on target parts of the screen.

- Context-hiding – using overlays that cover the full screen apart from carefully designed ‘holes’, so that the user is completely fooled concerning the true context of the button or other UI element that they click on.

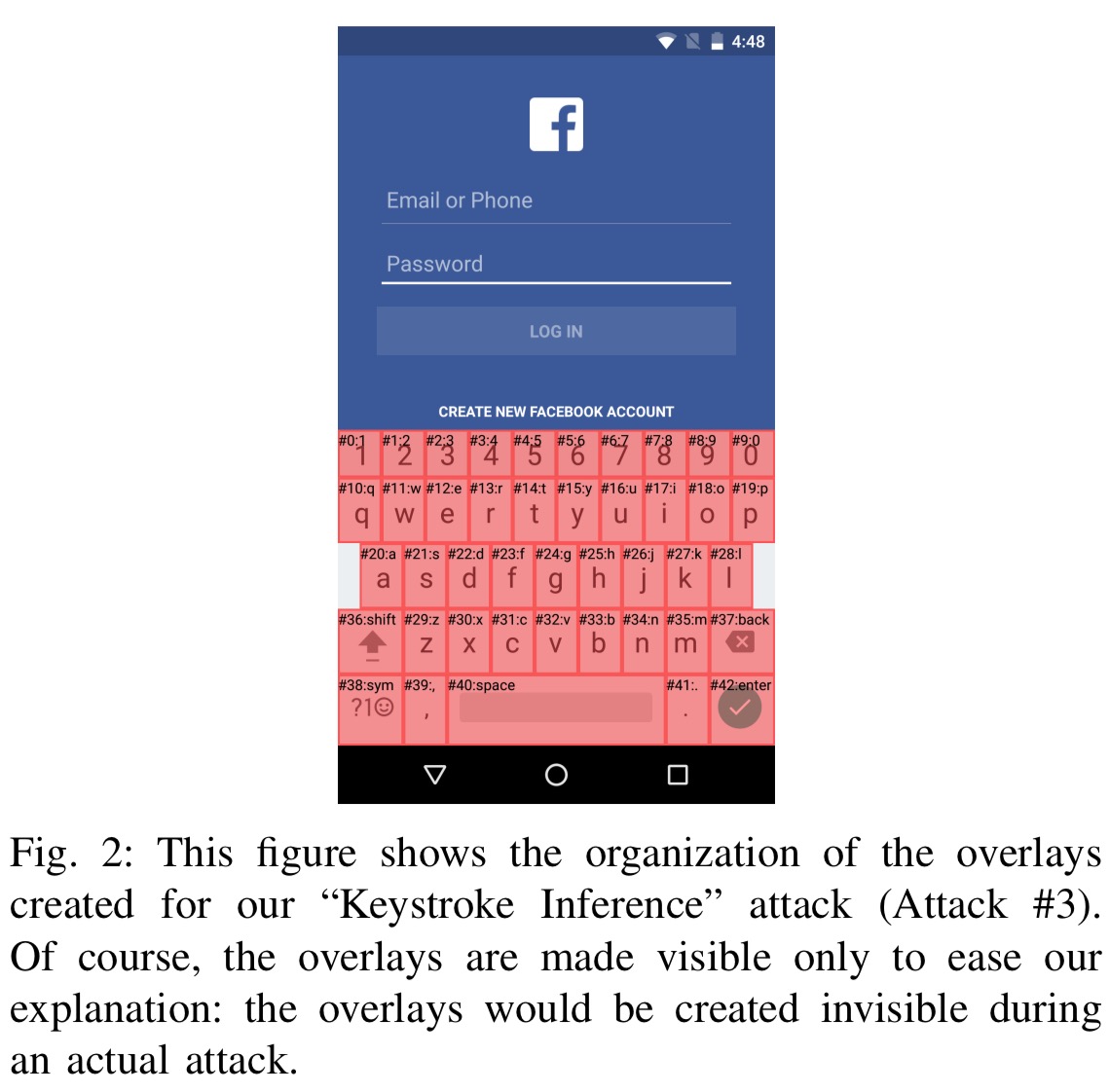

- Keystroke inference – it turns out that even an app that only has the SYSTEM_ALERT_WINDOW permission can learn what keys you touch on the onscreen keyboard, including any private messages, passwords, etc. This works by creating several small transparent overlays, one on top of each key on the keyboard. The overlays don’t intercept any clicks, so these go to the underlying keyboard – however, they do receive MotionEvents for touches outside of their area. The FLAG_WINDOW_IS_OBSCURED flag is set to true if a click event passed through a different overlay before reaching its final destination. Combine this with the MotionEvents, and a careful stacking of the overlays so that each has a different Z-level, and you can tell based on the obscured flag which key was actually pressed.

- Keyboard app hijacking – normally when an app attempts to get the text of a password field, the getText method just returns an empty string, but with the BIND_ACCESSIBILITY_SERVICE permission the keyboard app itself is treated as a normal unprivileged app, and each of the key widgets generates accessibility events through which all keystrokes can be recorded, including passwords.

- Targeted password stealing – use the accessibility service to detect that the user just launched e.g. the Facebook app login activity. Now draw a visible overlay that looks like the username and password EditText widgets, and an overlay on top of the login button. When the user clicks on the login button overlay, record the credentials and then pass them through to the real Facebook app. At the end of the attack, the user is logged into their real Facebook account and is completely unsuspecting.

- Security PIN stealing – if you use a security PIN, the security screen pad generates accessibility events which an accessibility app can see even when the screen is locked.

- Phone screen unlocking – accessibility apps can also inject events while the phone is locked. If a secure lock screen is not used, an accessibility app can inject events to enter the PIN, unlock the phone, and then go on to do whatever it wants. All this can be done while the phone screen remains off. “It is simple to imagine a malware that would unlock the phone during the night, and it would go on the web and click ads…” If you use a swipe pattern though, there is no way for an accessibility app to inject swipes.

- Silent app installation – the initial malicious app with only the two permissions can contain within itself an APK for another app which requests all permissions. It is possible to install this app while stealthily covering the screen with an on-top overlay. Remember the 20 second video that played as part of our tutorial earlier? That’s the overlay which gives the attacking app 20 seconds to go through the god-app installation process behind it, completely unbeknownst to the user. The installed app can be configured to not appear in the launcher, and to have device admin privileges so that its uninstall button is disabled. Disguising it as a legitimate system app (e.g., “Google Parental Control”) should complete the deception. The full power of this is best understood when you see it in action: https://www.youtube.com/watch?v=RYQ1i03OVpI

- Enabling all permissions – with the app installed as in the previous example, it is possible to automatically click and enable all the requested permissions while a full screen overlay is on top.

- 2FA token stealer – “for SMS-based tokens, the accessibility service can be configured to be notified for an new notification, which contains the required token.” What if you’re using an authenticator app though? It turns out a malicious app can just open the activity displaying the tokens and read them off the screen – none of the views containing the tokens are protected in e.g., the Google Authenticator App. Why not combine this with phone screen unlocking (the seventh attack in this list) and grab a bunch of tokens at night with the screen off?

- Ad hijacking – an accessibility app can figure out where and when ads are shown in an app, and draw an invisible on-top overlay that sends clicks to an ad of the attacker’s choice, generating revenue.

- Browse the web – the accessibility service has full access to the browser, so you can make Chrome visit any page, click on ‘likes,’ post content on behalf of the user, and so on. “Moreover, since all HTML elements are nicely exposed, we believe it would be simple to extend the password stealer attacks we described earlier to web-based forms.”
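The decoding step in the keystroke inference attack from the list above is easier to follow with a toy model. The sketch below is plain Python, not Android code, and rests on a loudly stated assumption: the text only says the obscured flag is set when a click passed through a different overlay, so the model assumes that a tap on one key's overlay sets the flag for every overlay stacked below it in Z-order and for none above it. The key row, `outside_events`, and `infer_key` are hypothetical names chosen for illustration.

```python
from typing import Dict

# One hypothetical transparent overlay per key; list index = Z-level (0 is the bottom).
KEYS = list("qwertyuiop")


def outside_events(pressed_key: str) -> Dict[str, bool]:
    """Obscured flag seen by every overlay that gets an 'outside touch' event.

    Modelling assumption: the tap passes through the overlay on `pressed_key`,
    so overlays below it in Z-order see the flag set and overlays above it do
    not. The pressed overlay itself gets no outside event.
    """
    z = KEYS.index(pressed_key)
    return {key: i < z for i, key in enumerate(KEYS) if i != z}


def infer_key(flags: Dict[str, bool]) -> str:
    """Recover the key: its Z-level equals the number of obscured overlays."""
    return KEYS[sum(flags.values())]


observed = outside_events("t")
print(infer_key(observed))  # t
```

Because every overlay sits at a distinct Z-level, each key produces a distinct pattern of flags, which is why the stacking order matters as much as the overlays themselves.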

A user study with 20 users showed that none of the users suspected anything at all while interacting with the tutorial in the app, and after those three clicks their devices were fully compromised and a God-mode app installed.

None of the users was able to even suspect that they were under attack. What is more worrisome is that none of the users actually managed to understand what happened even after we told them the app they played with was malicious and even after we gave complete and free access to the compromised device. We also stress that the subjects could not detect anything even when they had a chance to directly compare what happened against a “benign” baseline: In a real-world scenario, there would not be any baseline to use as reference.

Section IX in the paper outlines some possible defences that Google could implement to prevent such ‘cloak and dagger’ attacks, which centre around a set of system and API modifications. These would clearly take some time to roll out. In the short term, not automatically granting SYSTEM_ALERT_WINDOW permissions to Play Store apps, and more closely scrutinising apps that request both permissions (the authors had such a test app easily accepted) would both help.
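As an illustration of the "scrutinise apps that request both permissions" idea, here is a minimal sketch of a manifest check. It is not a tool from the paper or from Google. It assumes the manifest has already been decoded to plain-text XML, and the function name `requests_both` and the sample manifest are hypothetical. It relies on two facts about how Android manifests declare these permissions: SYSTEM_ALERT_WINDOW appears in a `<uses-permission>` element, while an accessibility service guards its `<service>` element with `android:permission="android.permission.BIND_ACCESSIBILITY_SERVICE"`.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"
NAME = f"{{{ANDROID_NS}}}name"              # android:name
PERMISSION = f"{{{ANDROID_NS}}}permission"  # android:permission

OVERLAY = "android.permission.SYSTEM_ALERT_WINDOW"
ACCESSIBILITY = "android.permission.BIND_ACCESSIBILITY_SERVICE"


def requests_both(manifest_xml: str) -> bool:
    """True if the manifest asks for overlays and declares an accessibility service."""
    root = ET.fromstring(manifest_xml.strip())
    requested = {el.get(NAME) for el in root.iter("uses-permission")}
    guarded = {el.get(PERMISSION) for el in root.iter("service")}
    return OVERLAY in requested and ACCESSIBILITY in guarded


# Hypothetical manifest for illustration only.
SAMPLE = """
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
          package="com.example.tutorial">
  <uses-permission android:name="android.permission.SYSTEM_ALERT_WINDOW"/>
  <application>
    <service android:name=".HelperService"
             android:permission="android.permission.BIND_ACCESSIBILITY_SERVICE"/>
  </application>
</manifest>
"""

print(requests_both(SAMPLE))  # True
```

A hit from a check like this is only a reason for closer review, since plenty of legitimate apps use one or both permissions.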

We responsibly disclosed all our findings to the Android security team, which promptly acknowledged the security issues we reported. When available, the fixes will be distributed through an over-the-air (OTA) update as part of the monthly Android Security Bulletins. Unfortunately, even if [though?] we reported these problems several months ago, these vulnerabilities are still unpatched and potentially exploitable. This is due to the fact that the majority of presented attacks are possible due to inherent design issues outlined in Section V. Thus, it is challenging to develop and deploy security patches as they would require modifications of several core Android components.

If you want to see videos of some of the attacks in action, as well as a table indicating which versions of Android are affected (as of time of writing, the word ‘vulnerable’ appears in every cell!), then check out http://cloak-and-dagger.org/.