The truth, the whole truth, and nothing but the truth: A pragmatic guide to assessing empirical evaluations

Blackburn et al., ACM Transactions on Programming Languages and Systems, 2016

Yesterday we looked at some of the ways analysts may be fooled into thinking they’ve found a statistically significant result when in fact they haven’t. Today’s paper choice looks at what can go wrong with empirical evaluations.

Let’s start with a couple of definitions:

- An evaluation is either an experiment or an observational study, consisting of steps performed and data produced from those steps.

- A claim is an assertion about the significance and meaning of an evaluation; thus, unlike a hypothesis, which precedes an evaluation, a claim comes after the evaluation.

- A sound claim is one where the evaluation provides all the evidence necessary to support the claim, and does not provide any evidence that contradicts the claim.

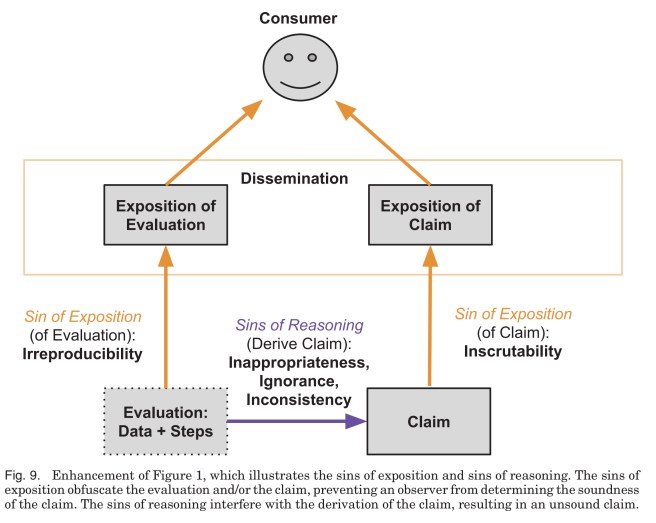

Assuming honest researchers, things can go wrong in one of two basic ways: sins of reasoning are those where the claim is not supported by the evaluation; and sins of exposition are those where the description of either the evaluation or the claim is not sufficient for readers to evaluate and/or reproduce the results.

As the authors show, it’s not easy to avoid these traps even when you are doing your best. We’ll get into the details shortly, but for those authoring (or reviewing) evaluations and claims, here are five questions to help you avoid them:

- Has all of the data from the evaluation been considered, and not just the data that supports the claim?

- Have any assumptions been made in forming the claim that are not justified by the evaluation?

- If the experimental evaluation is compared to prior work, is it an apples-to-oranges comparison?

- Has everything essential for getting the results been described?

- Has the claim been unambiguously and clearly stated?

The two sins of exposition

The sin of inscrutability occurs when poor exposition obscures a claim. That is, we can’t be sure what is really being claimed! The most basic form of inscrutability is simple omission, which leaves readers to work out for themselves what the claims might be. Claims may also be ambiguous: for example, “improves performance by 10%” (throughput or latency?), or “reduces latency by 10%” (at what percentile, and under what load?). Distorted claims read clearly from the reader’s perspective, but don’t actually capture what the author intended.
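To see why “reduces latency by 10%” is ambiguous, here is a small sketch that compares two sets of latency samples at the median, at the 99th percentile, and on the mean. The samples are invented for illustration and do not come from the paper. The percentile uses the nearest-rank definition, which is one of several conventions and an assumption here. The same change produces a 10% improvement, a 20% regression, or a roughly 6% regression, depending on which statistic the claim silently refers to.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def pct_change(old, new):
    """Relative change from old to new, in percent (negative means lower latency)."""
    return (new - old) / old * 100

# Hypothetical latency samples in ms: 90 fast requests plus 10 slow ones.
baseline = [10.0] * 90 + [100.0] * 10
candidate = [9.0] * 90 + [120.0] * 10

p50_change = pct_change(percentile(baseline, 50), percentile(candidate, 50))
p99_change = pct_change(percentile(baseline, 99), percentile(candidate, 99))
mean_change = pct_change(sum(baseline) / len(baseline), sum(candidate) / len(candidate))

print(f"p50:  {p50_change:+.1f}%")
print(f"p99:  {p99_change:+.1f}%")
print(f"mean: {mean_change:+.1f}%")
```

An unambiguous claim names the statistic, and ideally the load at which it was measured.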

The sin of irreproducibility occurs when poor exposition obscures an evaluation: the evaluation steps, the data, or both. An evaluation may be irreproducible because key information is omitted, or because imprecise language and a lack of detail leave it ambiguous. One important kind of omission, and one that is hard to guard against, stems from an incomplete understanding of the factors relevant to the evaluation.
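One cheap defence against accidental omission is to capture a description of the measurement environment automatically and publish it with the results. The sketch below shows the idea. The function name and the particular fields are illustrative assumptions, not a list prescribed by the paper, and a real evaluation would record much more (compiler flags, heap size, input data, and so on). The environment size is computed as the bytes of each `KEY=VALUE` string plus a terminator, which approximates the space the process environment occupies.

```python
import json
import os
import platform
import sys

def environment_manifest():
    """Collect a few facts about the measurement environment worth reporting alongside results.

    The field names are illustrative; extend them with whatever your evaluation depends on.
    """
    # Approximate size of the process environment: each "KEY=VALUE" string plus a NUL terminator.
    env_bytes = sum(
        len(f"{k}={v}".encode("utf-8", "surrogateescape")) + 1 for k, v in os.environ.items()
    )
    return {
        "platform": platform.platform(),
        "machine": platform.machine(),
        "python": sys.version.split()[0],
        "cpu_count": os.cpu_count(),
        "environment_bytes": env_bytes,
    }

print(json.dumps(environment_manifest(), indent=2))
```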

Experience shows that whole communities can ignore important, though non-obvious, aspects of the empirical environment, only to find out their significance much later, throwing into question years of published results. This situation invites two responses: (i) authors should be held to the community’s standard of what is known about the importance of the empirical environment, and (ii) the community should intentionally and actively improve this knowledge, for example, by promoting reproduction studies.

A good example of a significant but overlooked factor turns out to be the size of the environment variables when measuring gcc speed-ups on Linux! The size of the environment affects memory layout, and thus the performance of the program. An evaluation that explores only one (unspecified) environment size commits the sin of irreproducibility. Researchers of course knew that memory layout affects performance, but it wasn’t obvious that environment variables would affect it enough to make a difference… until someone measured it.
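The gcc example suggests a simple discipline: vary the environment size deliberately instead of leaving it at whatever the shell happens to provide, and report the spread. The harness below is a minimal sketch of that idea. The `BENCH_PADDING` variable name is hypothetical, since only its length matters, and the placeholder workload is a Python one-liner standing in for the program actually under evaluation. Timing a whole process launch also measures start-up cost, so treat this as an illustration of the sweep rather than a precise measurement method.

```python
import os
import statistics
import subprocess
import sys
import time

def time_with_env_padding(cmd, pad_bytes, repeats=5):
    """Run cmd `repeats` times with an extra environment variable of pad_bytes characters."""
    env = dict(os.environ)
    env["BENCH_PADDING"] = "x" * pad_bytes  # hypothetical name; only the size matters
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        subprocess.run(cmd, env=env, check=True)
        timings.append(time.perf_counter() - start)
    return timings

def sweep(cmd, sizes, repeats=5):
    """Return {padding size in bytes: median wall-clock seconds} across environment sizes."""
    return {size: statistics.median(time_with_env_padding(cmd, size, repeats)) for size in sizes}

if __name__ == "__main__":
    # Placeholder workload: substitute the binary actually under evaluation.
    results = sweep([sys.executable, "-c", "sum(range(10**6))"], sizes=range(0, 4097, 1024), repeats=3)
    for size, seconds in results.items():
        print(f"{size:5d} bytes of padding: {seconds * 1000:.1f} ms")
```

If the medians move noticeably as the padding changes, a single unreported environment size is not enough to support the claim.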

The three sins of reasoning

The three sins of reasoning are the sin of ignorance, the sin of inappropriateness, and the sin of inconsistency. The sin of ignorance occurs when a claim is made that ignores elements of the evaluation supporting a contradictory alternative claim (i.e., selecting data points to substantiate a claim while ignoring other relevant points).

In our experience, while the sin of ignorance seems obvious and easy to avoid, in reality, it is far from it. Many factors in the evaluation that seem irrelevant to a claim may actually be critical to the soundness of the claim.
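A simple case of selecting data points is summarising speedups over only the benchmarks that improved. The sketch below uses invented per-benchmark speedups (baseline time divided by new time) and the geometric mean, a common way to summarise ratios. Over all four benchmarks the new system is slightly slower. Over the two “winners” it looks about 15% faster.

```python
import math

def geomean(values):
    """Geometric mean of positive ratios, computed via logarithms."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical per-benchmark speedups (baseline time / new time); > 1.0 means faster.
speedups = {"bench_a": 1.20, "bench_b": 1.10, "bench_c": 0.80, "bench_d": 0.90}

all_benchmarks = geomean(speedups.values())
winners_only = geomean(s for s in speedups.values() if s > 1.0)

print(f"all benchmarks: {all_benchmarks:.3f}")
print(f"winners only:   {winners_only:.3f}")
```

Only the first number is a sound basis for a claim about the suite as a whole.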

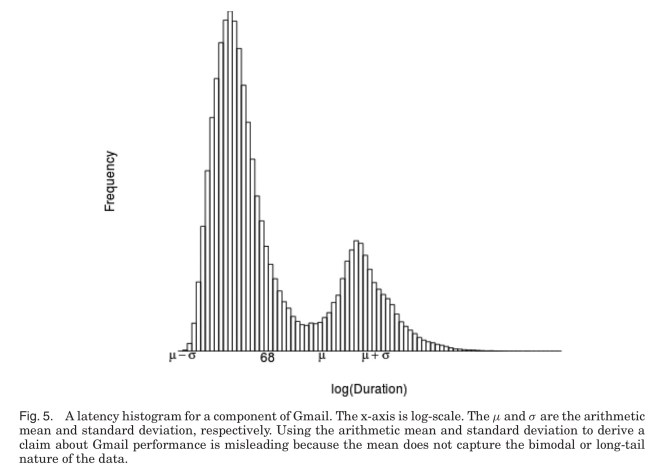

Consider the following latency histogram for a component of Gmail that has both a fast-path and a slow-path through it.

The distribution is bimodal, which means that commonly used statistical techniques that assume normally distributed data do not apply. A claim based on such techniques is unsound, and commits the sin of ignorance. This is easy to see when you have the histogram, but if the experiment only calculated the mean and standard deviation on the fly, it might not be obvious at all!
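The on-the-fly case can be made concrete. The sketch below keeps a streaming mean and standard deviation (Welford’s online algorithm) and, at little extra cost, a coarse fixed-width histogram. The latencies are hypothetical (70 fast-path requests at 1 ms and 30 slow-path requests at 9 ms), not the Gmail data from the figure. The summary statistics alone suggest a typical latency of about 3.4 ms. The histogram shows that no request actually took that long.

```python
import math
from collections import Counter

class StreamingStats:
    """Online mean/variance (Welford's algorithm) plus a coarse fixed-width histogram."""

    def __init__(self, bucket_width):
        self.bucket_width = bucket_width
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean
        self._buckets = Counter()

    def add(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)
        self._buckets[math.floor(x / self.bucket_width)] += 1

    def stddev(self):
        """Population standard deviation of the samples seen so far."""
        return math.sqrt(self._m2 / self.n) if self.n else 0.0

    def histogram(self):
        """Bucket index -> count, in bucket order."""
        return dict(sorted(self._buckets.items()))

# Hypothetical latencies (ms): 70 fast-path requests at 1 ms, 30 slow-path requests at 9 ms.
stats = StreamingStats(bucket_width=1.0)
for latency in [1.0] * 70 + [9.0] * 30:
    stats.add(latency)

mean_bucket = math.floor(stats.mean / stats.bucket_width)
print(f"mean={stats.mean:.2f} ms, stddev={stats.stddev():.2f} ms")
print("histogram (bucket -> count):", stats.histogram())
print("samples in the mean's bucket:", stats.histogram().get(mean_bucket, 0))
```

Keeping even a crude histogram alongside the running moments is enough to reveal that the distribution has two modes.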

The sin of inappropriateness occurs when a claim is made that is predicated on some fact that is absent from the evaluation.

In our experience, while the sin of inappropriateness seems obvious and easy to avoid, in reality, it is far from it. Many factors that may be unaccounted for in evaluation may actually be important to derive a sound claim.

Consider an evaluation trying to measure energy consumption. Execution time is often measured as a proxy for energy, but other factors also affect energy consumption, so execution time alone does not support a claim about energy consumption. That execution time is not always an accurate proxy for energy consumption was not obvious until it was pointed out in a 2001 paper. As another example, before it was widely understood that heap size has a big impact on garbage collector performance, many papers derived a claim from an evaluation at a single heap size, and did not report it.
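The arithmetic behind the energy example is straightforward: energy is average power multiplied by time, so a configuration that finishes sooner can still consume more energy if it draws more power while running. The sketch below uses invented measurements to show the two rankings disagreeing. How the power figures would actually be obtained (an external meter, on-chip energy counters, and so on) is outside its scope.

```python
from dataclasses import dataclass

@dataclass
class Run:
    name: str
    seconds: float    # measured execution time
    avg_watts: float  # measured average power draw over the run

    @property
    def joules(self):
        # Energy = average power x time.
        return self.seconds * self.avg_watts

# Hypothetical measurements: "fast" finishes sooner but draws more power while running.
runs = [Run("baseline", seconds=10.0, avg_watts=50.0), Run("fast", seconds=8.0, avg_watts=70.0)]

fastest = min(runs, key=lambda r: r.seconds)
most_efficient = min(runs, key=lambda r: r.joules)
for r in runs:
    print(f"{r.name:8s} {r.seconds:5.1f} s  {r.joules:6.1f} J")
print("fastest:", fastest.name, "| lowest energy:", most_efficient.name)
```

With these numbers, the 20% faster configuration uses 12% more energy, so a time-only evaluation would support the wrong energy claim.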

The sin of inconsistency is a sin of faulty comparison – two systems are compared, but each is evaluated in a way that is inconsistent with the other.

In our experience, while the sin of inconsistency seems obvious and easy to avoid, in reality, it is far from it. Many artifacts that seem comparable may actually be inconsistent.

Consider the use of hardware performance counters to evaluate performance…

…the performance counters that are provided by different vendors may vary, and even the performance counters provided by the same vendor may vary across different generations of the same architecture. Comparing data from what may seem like similar performance counters within an architecture, across architectures, or between generations of the same architecture may result in the sin of inconsistency, because the hardware performance counters are counting different hardware events.
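One way to make this kind of inconsistency harder to commit by accident is to carry the provenance of each counter reading with its value, and refuse to compare readings whose provenance differs. The sketch below is illustrative only. The record type is hypothetical, and the vendor, generation, and event names in the usage are placeholders rather than real counter names. Requiring exact equality is deliberately conservative. A real tool might instead consult a vetted table of events known to be equivalent across generations.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CounterReading:
    vendor: str
    microarchitecture: str
    event: str  # event name as documented for this specific microarchitecture
    value: int

def counter_ratio(a, b):
    """Ratio a/b, refusing to compare counts unless both come from the same event on the same hardware."""
    key_a = (a.vendor, a.microarchitecture, a.event)
    key_b = (b.vendor, b.microarchitecture, b.event)
    if key_a != key_b:
        raise ValueError(f"inconsistent counters: {key_a} vs {key_b}")
    return a.value / b.value

# Hypothetical readings; the vendor, generation and event names are placeholders.
old = CounterReading("vendor-x", "gen-1", "cache_misses", 1200)
new = CounterReading("vendor-x", "gen-1", "cache_misses", 600)
print(counter_ratio(old, new))  # same event on the same hardware: comparison allowed

try:
    counter_ratio(old, CounterReading("vendor-x", "gen-2", "cache_misses", 900))
except ValueError as err:
    print("refused:", err)
```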

Comparing against inconsistent workloads is another way to fall foul of this sin: for example, testing one algorithm during the first half of a week and the other during the second half, when the workload is not distributed uniformly across the week.
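A standard remedy, swapped in here as an illustration rather than taken from the paper, is blocked randomisation. Within each day, give both algorithms the same number of time slots, in a random order, so that weekday-versus-weekend and time-of-day effects are spread evenly across them. The function name, the slot granularity, and the fixed seed in the sketch below are assumptions.

```python
import random

def balanced_schedule(days, slots_per_day, arms=("A", "B"), seed=0):
    """Assign arms to time slots so every day gets an equal share of each arm, in random order."""
    if slots_per_day % len(arms):
        raise ValueError("slots_per_day must be a multiple of the number of arms")
    rng = random.Random(seed)  # fixed seed so the schedule itself can be reported and reproduced
    schedule = {}
    for day in days:
        slots = list(arms) * (slots_per_day // len(arms))
        rng.shuffle(slots)
        schedule[day] = slots
    return schedule

week = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]
for day, slots in balanced_schedule(week, slots_per_day=4).items():
    print(day, " ".join(slots))
```

Because each day is its own block, a workload that differs between, say, Monday and Saturday affects both algorithms equally.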
