Hotspot Patterns: The formal definition and automatic detection of architecture smells – Mo et al. International Conference on Software Architecture, 2015

Yesterday we looked at Design Rule Spaces (DRSpaces) and how some design rule spaces seem to account for large numbers of the error-prone files within a project. Today’s paper brings us up to date with what the authors learned after examining hundreds of error-prone DRSpaces…

After examining hundreds of error-prone DRSpaces over dozens of open source and commercial projects, we have observed that there are just a few distinct types of architecture issues, and these occur over and over again. We saw these issues in almost every error-prone DRSpace, in both open source and commercial projects. Most of these issues, although they are associated with extremely high error-proneness and/or change-proneness, cannot be characterized by existing notions such as code smells, or anti-patterns, and thus are not automatically detectable using existing tools.

There are five architectural hotspot patterns that are frequently the root causes of high maintenance costs:

- Unstable interface

- Implicit cross-module dependency

- Unhealthy interface inheritance hierarchy

- Cross-module cycle

- Cross-package cycle

Here, ‘modules’ are mutually independent file groups as revealed by a design rule hierarchy. Files involved in the above patterns have significantly higher bug and change rates than the average file in the same project. Moreover, some files are involved in several of these patterns, in which case their bug and change rates increase dramatically.

The authors studied nine open source projects (Avro, Camel, Cassandra, CXF, Hadoop, HBase, Ivy, OpenJPA, and PDFBox) and one commercial project to undertake both a quantitative and a qualitative assessment of the patterns’ predictive qualities. For these projects, the number of instances of each pattern, and the number of files involved in them, can be found in the table below:

Architecture hotspots are indeed ubiquitous. Every project had at least one instance of every type of hotspot, and in some cases there were thousands of instances and thousands of files implicated. Architecture hotspots are real, and they are not rare.

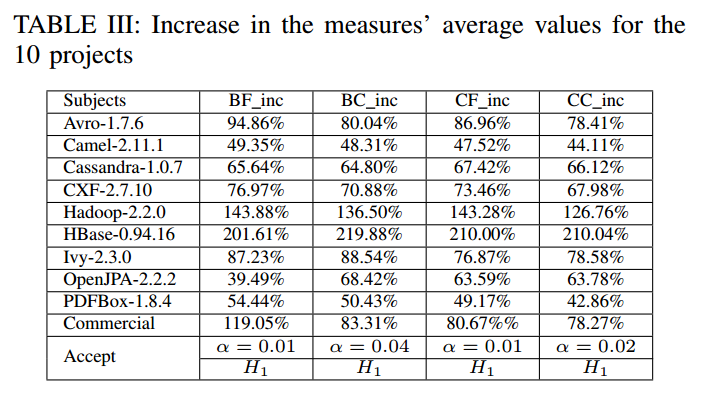

By mining the project history (source code control and issue tracker) the authors obtained bug frequency (BF), bug churn (BC, the number of lines of code committed to fix a bug), change frequency (CF) and change churn (CC, the number of lines of code committed to make a change) metrics. Table III below shows how much these metrics increase compared to the average file in the project, for files involved in a hotspot pattern.
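As a rough illustration of how the four metrics could be tallied per file, here is a minimal Python sketch. The `Commit` record, its fields, and the rule that every commit counts as a change while bug-fix commits additionally count towards BF and BC are all assumptions made for this example; the paper's actual mining pipeline is not described here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Commit:
    """Hypothetical record of one commit mined from version control."""
    is_bug_fix: bool   # assumed to come from linking the commit to a bug ticket
    churn: dict        # file path -> lines of code touched in this commit


def file_metrics(commits):
    """Tally BF, BC, CF and CC for every file touched by the commits."""
    metrics = defaultdict(lambda: {"BF": 0, "BC": 0, "CF": 0, "CC": 0})
    for commit in commits:
        for path, lines in commit.churn.items():
            m = metrics[path]
            m["CF"] += 1          # change frequency: one more change to this file
            m["CC"] += lines      # change churn: lines committed for the change
            if commit.is_bug_fix:
                m["BF"] += 1      # bug frequency: one more bug fix touching this file
                m["BC"] += lines  # bug churn: lines committed to fix the bug
    return dict(metrics)
```

Dividing the average of each metric over hotspot files by the project-wide average would then give the kind of relative increase the table reports.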

The four measures are consistently and significantly greater for the files that exhibit architecture issues. Therefore, we have strong evidence to believe that files exhibiting architecture issues will cause more project maintenance effort.

Of all the hotspot patterns, the greatest impact is attributable to the Unstable Interface and Cross-Module Cycle patterns:

That is to say, while all of the hotspots contribute to bug frequency, change frequency, bug churn, and change churn, Unstable Interface and Cross-Module Cycle contribute the most by far.

For the commercial project (800 files, 56K SLOC, 4 years of development) the team identified 3 groups of files with Unhealthy Inheritance Hierarchies, 1 group with Implicit Cross-Module Dependency (involving 27 files), and 2 groups of files with Unstable Interfaces (with 26 and 52 files respectively).

We communicated these issues, in the form of 6 DRSpaces, back to the chief architect…

- All of the hotspots bar one of the Unhealthy Inheritance Hierarchy groups were confirmed by the architect to be significant high-maintenance architecture issues.

- The architect confirmed they now intended to refactor the five high-maintenance architecture issues.

When one of the Unstable Interface instances was reported, our collaborator realized that the interface was poorly designed; it was overly complex, and had become a God interface. Divide-and-conquer would be the proper strategy to redesign the interface. Based on the analysis results we provided, CompanyS is currently prosecuting a detailed refactoring plan to address these issues one by one.

I suppose I’d better give you the details of the architectural hotspot patterns so you can look out for them!

Unstable interface

Based on design rule theory, the most influential files in the system—usually the design rules—should remain stable. In a DRSpace DSM, the files in the first layer are always the most influential files in the system, that is, they are the leading files of the DRSpace. In most error-prone DRSpaces, we observe that these files frequently change with other files, and thus have large co-change numbers recorded in the DSM. Moreover, such leading files are often among the most error-prone files in the system, and most other files in their DRSpace are also highly error-prone. We formalize this phenomenon into an Unstable Interface pattern… If a highly influential file is changed frequently with other files in the revision history, then we call it an Unstable Interface.
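To make the idea concrete, here is a minimal Python sketch of a check in this spirit. The input shapes (`dependents`, `cochange`), the function name, and all three thresholds are assumptions chosen for illustration, not the paper's formal definition or its parameter values.

```python
def unstable_interfaces(dependents, cochange,
                        min_dependents=10, min_cochange=4, min_hot_dependents=5):
    """Return files that many others depend on AND that often co-change with them.

    dependents: file -> set of files that structurally depend on it
    cochange:   frozenset({file_a, file_b}) -> number of commits touching both
    All thresholds are hypothetical tuning knobs.
    """
    flagged = []
    for candidate, users in dependents.items():
        # Only highly influential files can qualify as an "interface".
        if len(users) < min_dependents:
            continue
        # Dependents that have frequently changed together with the candidate.
        hot = [u for u in users
               if cochange.get(frozenset((candidate, u)), 0) >= min_cochange]
        if len(hot) >= min_hot_dependents:
            flagged.append(candidate)
    return sorted(flagged)
```

Keying the co-change counts by an unordered pair reflects that co-change is symmetric, whereas structural dependency is directional.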

Implicit cross-module dependency

Truly independent modules should be able to change independently from each other. If two structurally independent modules in the DRSpace are shown to change together frequently in the revision history, it means that they are not truly independent from each other. We observe that in many of these cases, the modules have harmful implicit dependencies that should be removed.
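A minimal Python sketch of this check follows. It is simplified to work on pairs of files rather than whole modules, and the input shapes, function name, and `min_cochange` threshold are assumptions for illustration only.

```python
def implicit_cross_module_pairs(module_of, depends, cochange, min_cochange=3):
    """Return file pairs in different modules that co-change without a structural link.

    module_of: file -> module identifier (e.g. taken from a design rule hierarchy)
    depends:   set of (source, target) structural dependencies
    cochange:  frozenset({file_a, file_b}) -> number of commits touching both
    """
    flagged = []
    for pair, count in cochange.items():
        a, b = sorted(pair)  # assumes each key holds two distinct files
        if count < min_cochange:
            continue  # not enough shared history to be suspicious
        if module_of[a] == module_of[b]:
            continue  # same module: changing together is expected
        if (a, b) in depends or (b, a) in depends:
            continue  # an explicit dependency already explains the co-change
        flagged.append((a, b))
    return sorted(flagged)
```

Each flagged pair is a candidate for the kind of hidden coupling the pattern describes, for example shared assumptions about a file format that neither file declares in code.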

Unhealthy interface inheritance hierarchy

We have encountered a surprisingly large number of cases where basic object-oriented design principles are violated in the implementation of an inheritance hierarchy. The two most frequent problems are: (1) a parent class depends on one of its children; (2) a client class of the hierarchy depends on both the base class and all its children. The first case violates the design rule theory since a base class is usually an instance of design rules and thus shouldn’t depend on subordinating classes. Both cases violate Liskov Substitution principle since where the parent class is used won’t be replaceable by its subclasses. In these cases, both the client and the hierarchy are usually very error-prone.
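Both problems can be checked mechanically once inheritance and dependency relations have been extracted. The Python sketch below is one possible formulation under stated assumptions: it looks only at direct children, and the input shapes, function name, and issue labels are hypothetical.

```python
from collections import defaultdict


def unhealthy_hierarchies(parent_of, depends):
    """Report the two problems described above for each parent class.

    parent_of: child class -> its direct parent class
    depends:   set of (source, target) dependencies between classes
    """
    children = defaultdict(set)
    for child, parent in parent_of.items():
        children[parent].add(child)

    issues = []
    for parent, kids in children.items():
        # Problem (1): the parent depends on one of its own children.
        for kid in sorted(kids):
            if (parent, kid) in depends:
                issues.append(("parent_depends_on_child", parent, kid))

        # Problem (2): an outside client depends on the base and all its children.
        hierarchy = kids | {parent}
        clients = {src for src, dst in depends
                   if dst == parent and src not in hierarchy}
        for client in sorted(clients):
            if all((client, kid) in depends for kid in kids):
                issues.append(("client_depends_on_whole_hierarchy", client, parent))
    return issues
```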

Cross-module cycle

We have observed that not all cyclical dependencies among files are equally harmful, and that the cycles between modules—defined in the same way as in the Implicit Cross-module Dependency—in a DRSpace are associated with more defects… If there is a dependency cycle and not all the files belong to the same closest module, we consider this to be a Cross-Module Cycle pattern.
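The final sentence of that definition translates fairly directly into code: find groups of files that are mutually reachable, then keep the groups that span more than one module. The Python sketch below does this with a simple (quadratic) reachability search rather than an optimized strongly-connected-components algorithm; the function names and input shapes are assumptions for illustration.

```python
def reachable(graph, start):
    """Files reachable from start via one or more dependency edges."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def cyclic_groups(graph):
    """Groups of files that can all reach each other (each lies on a cycle)."""
    nodes = set(graph) | {dst for targets in graph.values() for dst in targets}
    reach = {node: reachable(graph, node) for node in nodes}
    groups = set()
    for node in nodes:
        if node in reach[node]:  # the file can get back to itself
            groups.add(frozenset(m for m in reach[node] if node in reach[m]))
    return groups


def cross_module_cycles(graph, module_of):
    """Cyclic groups whose files do not all belong to the same module.

    graph:     file -> set of files it depends on
    module_of: file -> module identifier
    """
    return sorted(sorted(group) for group in cyclic_groups(graph)
                  if len({module_of[f] for f in group}) > 1)
```

A cycle that stays entirely inside one module is deliberately ignored, matching the observation that not all cycles are equally harmful.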

Cross-package cycle

Usually the package structure of a software system should form a hierarchical structure. A cycle among packages is typically considered to be harmful.
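One way to look for such a cycle is to lift file-level dependencies to the package level and attempt a topological sort, which fails exactly when a cycle exists. The Python sketch below uses the standard library's `graphlib` (Python 3.9+). Treating a file's parent directory as its package, and the helper names themselves, are assumptions made for this example.

```python
from graphlib import CycleError, TopologicalSorter
from pathlib import PurePosixPath


def package_of(path):
    """Assume a file's package is simply its parent directory."""
    return str(PurePosixPath(path).parent)


def package_graph(file_deps):
    """Lift (source_file, target_file) dependencies to package level."""
    graph = {}
    for src, dst in file_deps:
        src_pkg, dst_pkg = package_of(src), package_of(dst)
        if src_pkg != dst_pkg:  # dependencies inside one package are fine
            graph.setdefault(src_pkg, set()).add(dst_pkg)
    return graph


def find_package_cycle(file_deps):
    """Return one cycle among packages as a list of names, or None if acyclic."""
    try:
        TopologicalSorter(package_graph(file_deps)).prepare()
    except CycleError as err:
        # graphlib reports the cycle as a node list whose first and last
        # entries are the same package.
        return err.args[1]
    return None
```

This reports only one cycle at a time, so a fuller tool would need to enumerate all of them.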

Hotspot detector tool

The Hotspot Detector automatically detects instances of the hotspot patterns, outputting a summary of all the architecture issues, the files involved in each issue, and the DSMs containing the DRSpaces with these issues. I couldn’t find any reference to a publicly available version you can download and experiment with though.