SoK: Eternal War in Memory – Szekeres et al. 2013

SoK stands for ‘Systematization of Knowledge’ – and in this case Szekeres et al. provide a wonderful conceptual framework and overview of memory corruption attacks and the defenses against them. As you’ll see, defending against these attacks is non-trivial – especially if you are concerned about space and time overheads. The best solution is to use a type-safe language. Failing that, well, it’s complicated…

Memory corruption bugs are one of the oldest problems in computer security. Applications written in low-level languages like C or C++ are prone to these kinds of bugs. The lack of memory safety (or type safety) in such languages enables attackers to exploit memory bugs by maliciously altering the program’s behavior or even taking full control over the control-flow. The most obvious solution would be to avoid these languages and to rewrite vulnerable applications in type-safe languages. Unfortunately, this is unrealistic not only due to the billions of lines of existing C/C++ code, but also due to the low-level features needed for performance critical programs (e.g. operating systems).

Memory corruption bugs are considered one of the top three most dangerous software errors.

A multitude of defense mechanisms have been proposed to overcome one or more of the possible attack vectors. Yet most of them are not used in practice, due to one or more of the following factors: the performance overhead of the approach outweighs the potential protection, the approach is not compatible with all currently used features (e.g., in legacy programs), the approach is not robust and the offered protection is not complete, or the approach depends on changes in the compiler toolchain or in the source-code while the toolchain is not publicly available.

Memory Corruption Attacks

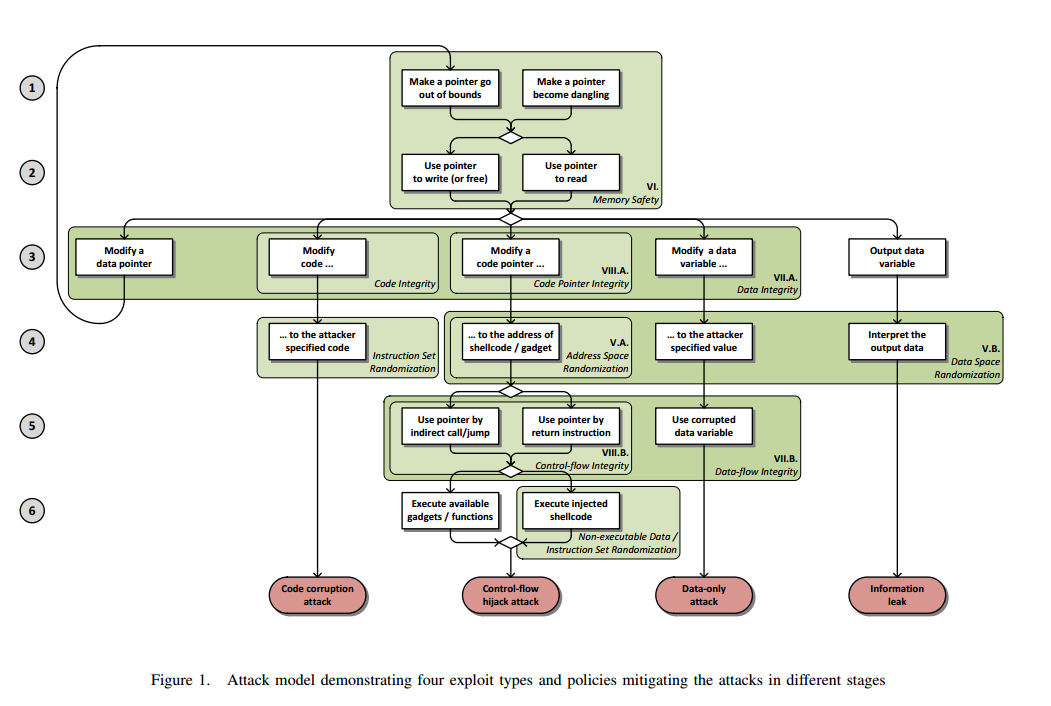

In order to understand the various defenses, we first need to understand what we’re defending against. The paper provides a nice flow-chart summarizing the attack models – handy for a potential attacker! The same flow chart also shows the four basic exploit types, and the primary defenses against them.

The four basic attack vectors are code corruption, control-flow hijacking, data-only attacks, and information leaking. In a code corruption attack the program code is overwritten in memory to perform whatever function the attacker desires.

In a control-flow hijack the attacker overrides a return address or jump address to direct execution to a code segment of their choice. Non-executable data pages made it harder to jump to attacker provide code, but not to worry because there’s normally plenty of suitable code already available!

To bypass the non-executable data policy, attackers can reuse existing code in memory. The reused code can be an existing function (“return-to-libc” attack) or small instruction sequences (gadgets) found anywhere in the code that can be chained together to carry out useful (malicious)

operations. This approach is called Return Oriented Programming (ROP), because the attack chains the execution of functions or gadgets using the ending return instructions. Jump Oriented Programming (JOP) is the generalization of this attack which leverages indirect jumps as well for chaining. There is no policy which can stop the attack at this point, since the execution of valid and already existing code cannot be prevented.

A data-only attack does not modify control flow, but instead manipulates critical data to grant additional permissions, or leak information. “Consider, for instance, the modification of the

isAdmin variable via a buffer overflow after logging into the system with no administrator privileges:”

bool isAdmin = false;

...

if (isAdmin) // do privileged operations

An information leak attack is designed to leak secrets known at runtime, and often to circumvent probabilistic defenses based on randomization – by revealing the actual locations of code and data segments etc.. In this latter case, the information leak is simply a building block on the way to one of the other attacks.

Crafting an attack

There are up to six steps involved in crafting an attack, as show in the previous figure.

Since the root cause of all vulnerabilities discussed in this systematization of knowledge paper is memory corruption, every exploit starts by triggering a memory error. The first two steps of an exploit in Figure 1 cover the initial memory error. The first step makes a pointer invalid, and the second one dereferences the pointer, thereby triggering the error.

A pointer can become invalid by forcing it to go ‘out of bounds’ (e.g. point beyond the end of an array), or by leaving it pointing at a deleted object (a dangling pointer). An ‘out of bounds’ pointer leads to a spatial error, a dangling pointer leads to a temporal error. The second step simply requires the application to use the corrupted pointer – either for a write operation, or for a read. It may initially seem surprising that a read can cause problems (one of the recurring themes in this paper is just how dangerous even the tiniest chink in armour can be). Two examples are given – one in which the data being read is itself interpreted as a pointer (now you can send the application anywhere), and the other example is an information leak, where for example the format string for a printf is controlled by the hacker and can be used to print out memory contents.

Calling free() with an attacker controlled pointer can also be exploited to carry out arbitrary memory writes. Write dereferences can be exploited to leak information as well… Temporal errors, when a dangling pointer is dereferenced in Step 2, can be exploited similarly to spatial errors. A constraint for exploitable temporal errors is that the memory area of the deallocated object (the old object) is reused by another object (new object). The type mismatch between the old and new object can allow the attacker to access unintended memory.

If a dangling pointer is use to call free again (a Double-free) the attacker controlled contents of the freed object will be interpreted as heap metadata, which is also exploitable…

The third step involves exploiting the use of the invalid pointer to modify either code or data to the attackers advantage. For a control-flow attack the attacker also needs to know where execution should be redirected to. With defenses such as address-space randomization this will not be knowable up-front…

Most often, memory corruption exploits try to take control over the program by diverting its control-flow… Suppose the attacker can access and modify a return address due to a buffer overflow. For a successful exploit, the attacker needs to know the correct target value (i.e., the address of the payload) as well. We represent this as a separate fourth step in Figure 1.

Step 5 in control-flow attacks is to cause the program to use the modified pointer, and in data-only attacks, to use the modified data. Finally in step 6 for a control-flow attack, control is transferred to the attacker specified routine.

Defenses against the Dark Arts

The following categories of defense are identified:

- Memory Safety Policies

- Code Integrity Policies

- Code Pointer Integrity Policies

- Data Integrity Policies

- Randomization (of instruction sets, address space, and data space)

- Control-Flow Integrity Policies

- Data-Flow Integrity Policies

- Non-Executable Data

For each category the paper includes an overview of approaches and information about the typical performance overheads and memory usage. As a practical rule of thumb, it seems that any defense which adds more than ~5% overhead does not get used in practice. The most widely used protections: stack smashing protection, data execution protection (DEP), and address-space location randomisation (ASLR) all have low overheads.

Stack smashing protection defends against a common step-3 attack of modifying the saved return-address pointer on the stack as part of a control-flow hijack attack.

Stack smashing protection detects buffer overflows of local stack-based buffers, which overwrite the saved return address. By placing a random value (called cookie or canary) between the return address and the local buffers at function entries, the integrity of the cookie can be checked before the return of the function and thus the overflow can be detected. SafeSEH and SEHOP also validate exception handler pointers on the stack before they are used, which makes them, together with stack cookies, a type of Control-flow Integrity solution. These techniques provide the weakest protection: they place checks only before a small subset of indirect jumps, focusing checking the integrity of only some specific code pointers, namely saved return addresses and exception handler pointers on the stack. Furthermore, the checks can be bypassed. Cookies, for instance, can detect a buffer overflow attack but not a direct overwrite (e.g., exploiting an indexing error).

Data Execution Protection works by marking every page as exclusively for write (a data page) or for execution. Normally there are so many good targets already in executable pages that the attacker does not need to try and execute modified data in a data page as code though.

DEP/W⊕X can protect against code injection attacks, but does not protect against code reuse attacks like ROP. ROP exploits can be generated automatically, and large code bases like the C library usually provide enough gadgets for Turing-completeness.

JIT compilation also means that DEP cannot always be fully enforced…

Practically all modern CPUs support setting non-executable page permissions, so combined with non-writable code, enforcing W⊕X is cheap and practical. However in the case of JIT compilation or self-modifying code, W⊕X cannot be fully enforced. For the sake of completeness, we note that another randomization approach, Instruction Set Randomization (ISR) can also mitigate the execution of injected code or the corruption of existing code by encrypting it. But due to the support for page permissions, the much slower ISR has become less relevant and because of the limited space we will not cover it in more detail in this paper.

Address Space Randomization seeks to make step 4 harder – finding out the appropriate address to transfer control to.

ASLR provides the most comprehensive protection as the most widely deployed Address Space Randomization technique. It can randomize the locations of various memory segments, including data and code, so even if the attacker wants to reuse a gadget its location will be random. While some ASLR implementations have specific weaknesses (e.g., code regions are left in predictable locations, de-randomization attacks are possible due to the low entropy), the fundamental attack against it is information leakage.

Defenses that are not widely deployed

Let us now briefly look at some of the other defenses which have been researched but not widely deployed in practice. The main obstacles to deployment are one or more of performance overheads, memory overheads, source incompatibility (requires modification of the source), and binary incompatibility (cannot mix protected and unprotected binaries in the same executable). (Lack of) support for independent compilation of modules can also be an issue.

Enforcing Memory Safety stops all memory corruption exploits. For complete Memory Safety, both spatial and temporal errors must be prevented without false negatives. Type-safe languages enforce both spatial and temporal safety by checking object bounds at array accesses and using automatic garbage collection (the programmer cannot destroy objects explicitly). Our focus is transforming existing unsafe code to enforce similar policies by embedding low-level reference monitors. The instrumentation may be in the source code, intermediate representation, or binary level.

Complete spatial safety requires keeping track of all pointer bounds. This adds about 67% performance overhead and makes it hard to maintain full compatibility. To restore compatibility, object-bounds tracking stores bounds information with the objects being pointed to rather than with the pointers themselves. One of the fastest known approaches for this, Baggy Bounds Checking, still adds about 60% overhead.

There are several techniques to address temporal safety: special allocators (e.g. the Cling malloc replacement) restrict address space reuse (memory overhead); object-based approaches such as Valgrind’s Memcheck (10x overhead); and pointer-based approaches (incompatibility issues and 116% overhead);

Data Integrity and Data-flow Integrity are weaker policies than Memory Safety. They aim to protect against both control data (hijacking) and non-control data attacks, but not against e.g., information leaks. While the former policy prevents data corruption, the latter detects it… Data Integrity solutions enforce an approximation of spatial memory integrity. These techniques focus on the most common attacks, which start by writing through an out of bounds pointer. They do not enforce temporal safety, and they only protect against invalid memory writes, not reads. Furthermore, they only approximate the spatial integrity enforced by the previously covered bounds checkers in order

to minimize the performance overhead. In all cases the approximation is due to a static pointer analysis carried out prior to the instrumentation.

By identifying ‘unsafe’ pointers that can potentially go out of bounds via static analysis, together with the objects they can point to (points-to sets), extra runtime protection can be added in these places. The overhead is on the order of 50-100%. Write-Integrity-Testing takes this a step further by also restricting each pointer dereference to write only objects in its own points-to-set. The overhead is 5-25%, but there are binary compatibility issues.

Data-Flow Integrity (DFI) as proposed by Castro et al. detects the corruption of any data before it gets used by checking read instructions. DFI restricts reads based on the last instruction that wrote the read location. In program analysis terms, DFI enforces the reaching definition sets. The reaching definition set of an instruction is the set of instructions which might have last written (defined) the value that is used by the given instruction based on the control-flow graph. For instance, the policy ensures that the isAdmin variable was last written by the write instruction that the source code defines and not by some rogue attacker-controlled write.

DFI adds 50-100% overhead.

Code Pointer integrity aims to prevent the corruption of code pointers, while Code Flow integrity detects it.

While the integrity of some code pointers can and should be protected, enforcing Code Pointer Integrity alone is infeasible. Immutable code pointers, such as the ones in the Global Offset Table or in virtual function tables (vtable), can be easily protected by keeping them in read-only memory pages. Most code pointers however, such as programmer defined function pointers or saved return addresses, must remain writable.

Code Flow integrity protection can be dynamic or static. The dynamic approach protects against stack smashing with canaries and is widely deployed as previously discussed.

A secret value (cookie/canary) is placed between the return address and the local variables. If the return address is overwritten by a buffer overflow, the cookie changes as well, what is detected by the check placed before the return instruction. Stack cookies do not protect indirect calls and jumps, and they are vulnerable to direct overwrite attacks and information leaks. However, stack cookies are popular and widely deployed, because the performance overhead is negligible (less than 1%) and no compatibility issues are introduced.

Shadow stacks are a variation in which return addresses are also placed on a shadow stack, and compared against the original return address before being used.

Static control-flow graph integrity mechanisms can protect against all control-flow hijack attempts, including indirect calls and jumps. The Abadi implementation is binary incompatible and has 15-45% overhead.

There are many, many more details in the paper so if this subject interests you it is well worth a read.

~~