Dremel: interactive analysis of web-scale datasets – Melnik et al. (Google), 2010.

Dremel is Google’s interactive ad-hoc query system that can run aggregate queries over trillions of rows in seconds. It scales to thousands of CPUs, and petabytes of data. It was also the inspiration for Apache Drill.

Dremel borrows the idea of serving trees from web search (pushing a query down a tree hierarchy, rewriting it at each level and aggregating the results on the way back up). It uses a SQL-like language for query, and it uses a column-striped storage representation. It also supports a nested data model – Google’s Protocol Buffers

Column stores have been adopted for analyzing relational data [1] but to the best of our knowledge have not been extended to nested data models. The columnar storage format that we present is supported by many data processing tools at Google, including MR, Sawzall, and FlumeJava.

The way in which nested data structures are split into columns, and then re-assembled on demand in response to queries is central to Dremel, so let’s explore how that works. Here’s a sample Protocol Buffer schema for a Document entity:

message Document {

required int64 DocId;

optional group Links {

repeated int64 Backward;

repeated int64 Forward;

}

repeated group Name {

repeated group Language {

required string Code;

optional string Country;

}

optional String Url;

}

}

Notice a few things about this: there are repeated and optional components, and there is nesting. The first part of splitting this into columns is pretty straight-forward: make a column for each field, using the nested path names. So, for the schema above we have columns DocId, Links.Backward, Links.Forward, Name.Language.Code, Name.Language.Country, and Name.Url.

Focusing in on the Name.Language.Code column, we’re going to take the Code entries from multiple ‘rows’ (Documents) and put them all into this column. The first problem we have to solve comes from the fact that ‘Code’ can be repeated several times within the same Document. In the ‘DocId’ column, each entry represents a new Document, but in the Name.Language.Code column we need a way to know whether a given entry is a repeated entry from the current Document, or the start of a new Document. And if it is repeated, where does it belong in the nesting structure? Furthermore, given the fact that some fields are optional (may be missing), we’re going to need a way to take that into account too.

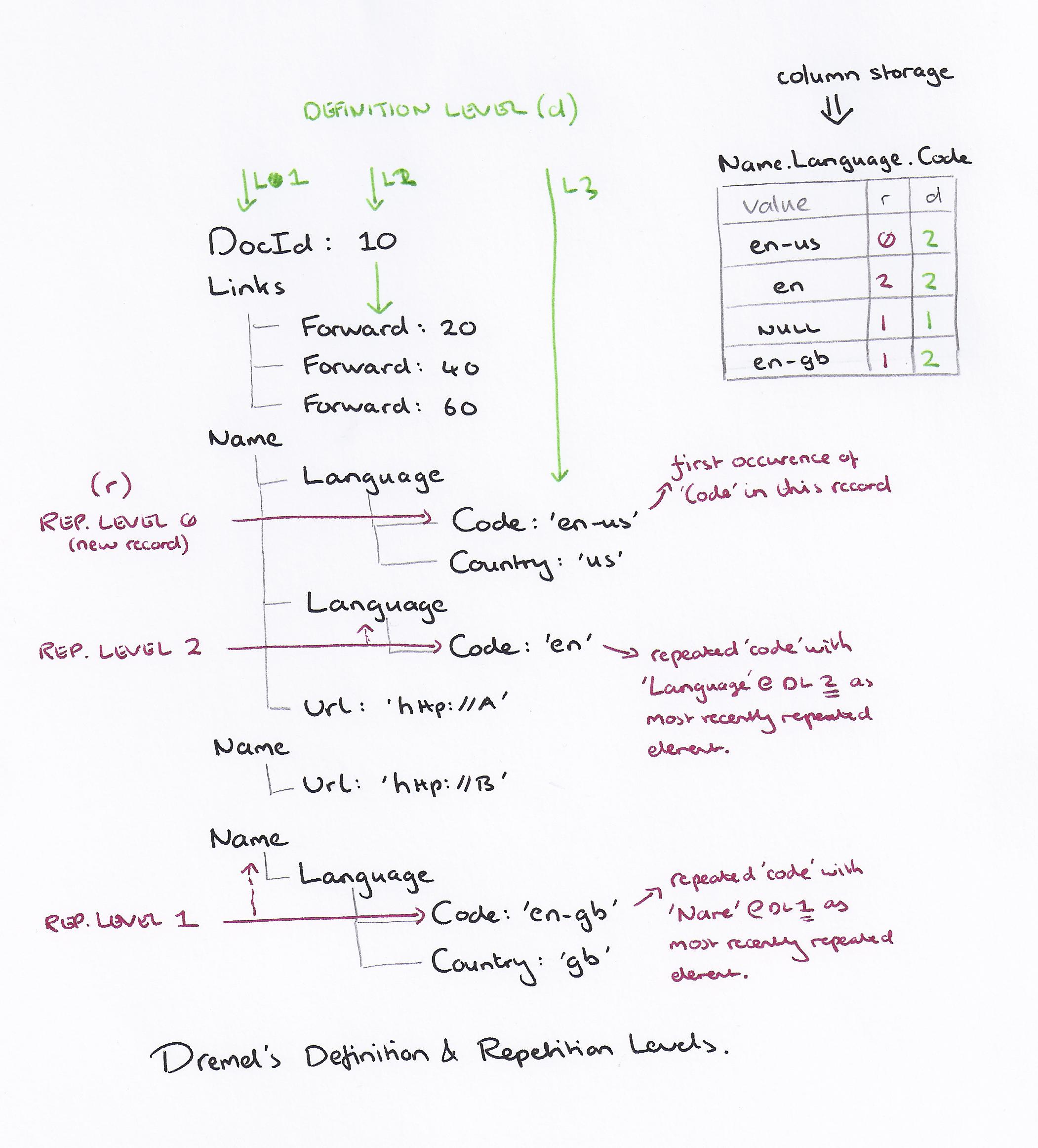

Dremel solves these problems by keeping three pieces of data for every column entry: the value itself, a repetition level, and a definition level. How it works is pretty subtle to wrap your head around, but I’ll try to explain it as clearly as possible. Take a good look at the sketch below from my notebook. It shows a Document record that we want to split into columns, and to the right, the column entries that result within the Name.Language.Code column – where r represents the repetition level, and d the definition level.

The first problem we mentioned was how to tell whether an entry is the start of a new Document, or another entry for the same column within the current Document. That’s an easy one to solve: the repetition level is set to 0 for the first occurence of a field within a record. Hence ‘en-us,’ which is the first Code within the document, is encoded with repetition level 0.

You might intuitively think that we’d simply increment the repitition level for each occurence (and hence the ‘en’ entry would have repetition level 1, and ‘en-gb’ repetitionlevel 2). But that’s not actually how it works. Notice that if we did that, we couldn’t distinguish between a Code that is in a repeated Language element of a given Name (‘en’ in our example), and a Code that is in a new Name element (the ‘en-gb’ case). So for all repeated column entries in a record after the first one, the repetition level value that is stored is instead the ‘level’ at which we’re repeating. For the nesting Name.Language.Code, Name is level 1, Language is level 2, and Code is level 3. Still with me? Good, so, when we come to encode the ‘en’ value in the column, we give it repetition level 2, because it is inside a replicated Language element. And when we come to encode the ‘en-gb’ value, we give it repetition level 1, because Name is the first level at which we’re repeating at this point in the record.

And that NULL value you see in the column? That’s there to record the fact that the second Name element doesn’t include a Language.Code value at all. It’s at repetition level 1 because the ‘Name’ element is the level we’re repeating at.

Now let’s talk about the definition level value, d. Intuitively you might think this is just the nesting level in the schema (so 1 for DocId, 2 for Links.Forward, 3 for Name.Language.Code etc.) – but again that’s not quite what it represents (and in fact, if we had access to the schema, it would be redundant to store that information with every column entry of course). Instead, the definition level indicates how many of the parent fields are actually defined. This is easier to understand by example. For the ‘en-us’ Code entry, it’s within a Language field, within a Name field – so this gets definition level 2. The same is true for the ‘en’ and ‘en-gb’ entries. For the NULL entry though, the enclosing ‘Name’ is present, but there is no ‘Language’ component at all. Therefore this gets definition level 1.

It turns out that by encoding these repitition and definition levels alongside the column value, it is possible to split records into columns, and subsequently re-assemble them efficiently. The algorithms for doing this are given in an appendix to the paper. Record assembly is pretty neat – for the subset of the fields the query is interested in, a Finite State Machine is generated with state transitions triggered by changes in repetition level.

Let’s cut to the chase now and look at what Google learned building and operating Dremel:

- Scan-based queries can be executed at interactive speeds on disk-resident datasets of up to a trillion records.

- Near-linear scalability in the number of columns and servers is achievable for systems containing thousands of nodes.

- MR can benefit from columnar storage just like a DBMS.

- Record assembly and parsing are expensive. Software layers (beyond the query processing layer) need to be optimized to directly consume column-oriented data.

- MR and query processing can be used in a complementary fashion; one layer’s output can feed another’s input.

- In a multi-user environment, a larger system can benefit from economies of scale while offering a qualitatively better user experience. (Splitting the work into more parallel pieces reduced overall response time, without causing more underlying resource, e.g. CPU, consumption)

- If trading speed against accuracy is acceptable, a query can be terminated much earlier and yet see most of the data.

- The bulk of a web-scale dataset can be scanned fast. Getting to the last few percent within tight time bounds is hard.

The last two points relate to the problems we looked at in The Tail at Scale – the outliers are always slower, and at enough scale you’re going to get tripped up by that. Dremel allows you to specify the percentage of data processed at which you’re happy to stop a query and return results. It sounds odd to say you want the results of a query without looking at all of the data – but consider for example a top-k query. Looking at 98% of the data makes it highly likely you’ll get the right answer, and cutting off the slow tail can give a response in under a minute as opposed to several minutes just waiting for that last 2%.