The QUIC transport protocol: design and Internet-scale deployment Langley et al., SIGCOMM’17

QUIC is a transport protocol designed from the ground up by Google to improve the performance of HTTPS traffic. The chances are you’ve already used it – QUIC is deployed in Chrome, in the YouTube mobile app, and in the Google Search app on Android. It’s also used at Google’s front-end servers, handling billions of requests a day. All told, about 7% of global Internet traffic is now flowing over QUIC. There are three interesting aspects to the paper, which we can divide into the Why, the What, and the How. How QUIC was built and evolved over time is an integral part of the story.

Why build a new protocol

Two forces combined to create the pressures that eventually led to QUIC. On the one hand, the use of the web as a platform for applications, and the latency sensitivity of those applications (and their key metrics), create a strong pressure to reduce web latency. On the other hand, the rapid shift towards securing web traffic adds delays. TLS adds two round trips to connection establishment. Both of these forces must contend with the speed of light that sets a lower bound on round-trip times.

Meanwhile, HTTP/2 allows multiplexing of object streams, but is still fundamentally limited by TCP’s bytestream abstraction, which forces all application frames to pay a latency tax while waiting for retransmission of lost TCP segments, whichever stream those segments belong to. Furthermore, rolling out changes to a typical TCP-based stack takes a long time, as it is embedded in the OS kernel (including on end-user-controlled devices). And even if you could roll out a change, it is likely to break due to entrenched middleboxes:

Middleboxes have accidentally become key control points in the Internet’s architecture: firewalls tend to block anything unfamiliar for security reasons and Network Address Translators (NATs) rewrite the transport header, making both incapable of allowing traffic from new transports without adding explicit support for them.

What is QUIC? An overview

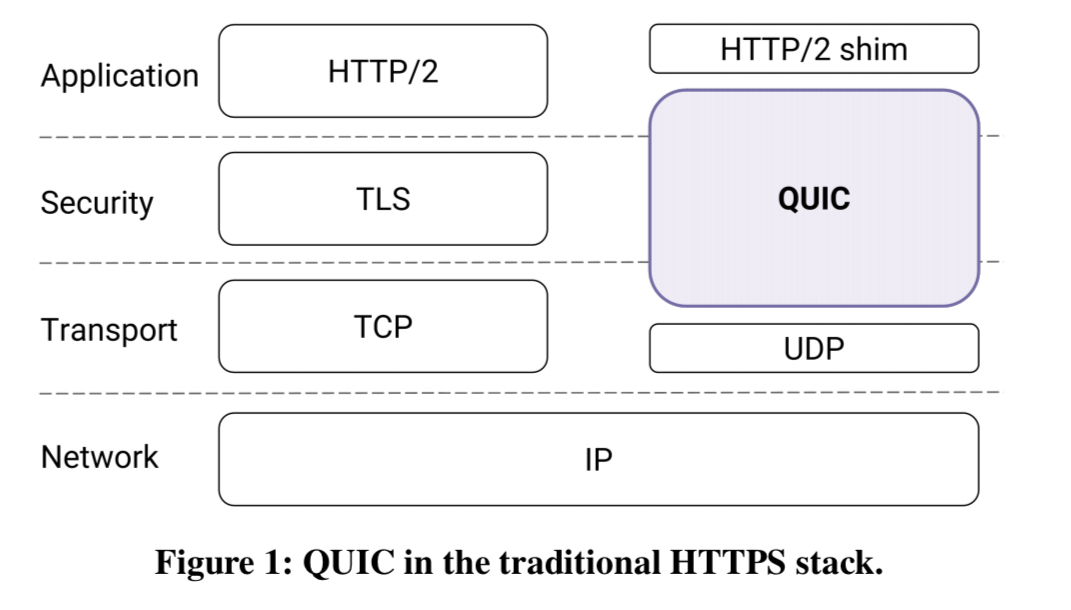

QUIC was designed to be easily deployable and secure, and to reduce handshake and head-of-line blocking delays. Security and deployability are both helped by one of the key QUIC decisions – to build on top of UDP.

QUIC encrypts transport headers and builds transport functions atop UDP, avoiding dependence on vendors and network operators and moving control of transport deployment to the applications that directly benefit from them.

As well as being more secure, encrypting everything also stops those pesky middleboxes from ossifying the protocol by baking in dependencies on specific formats and the like.

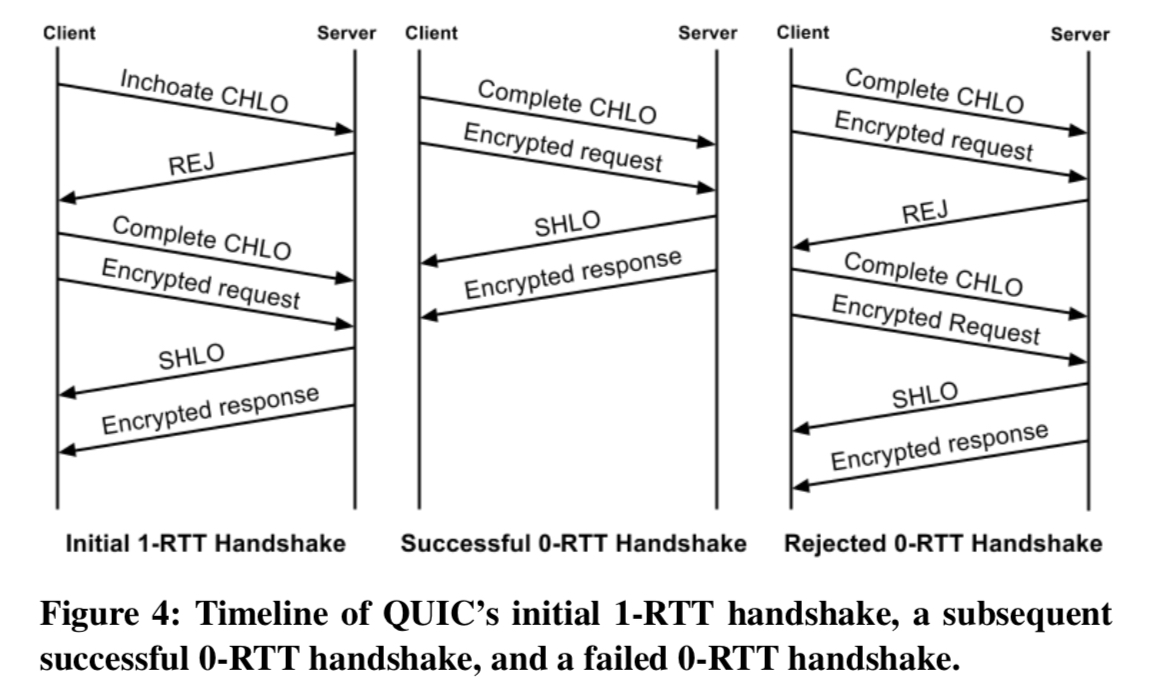

Establishing a connection

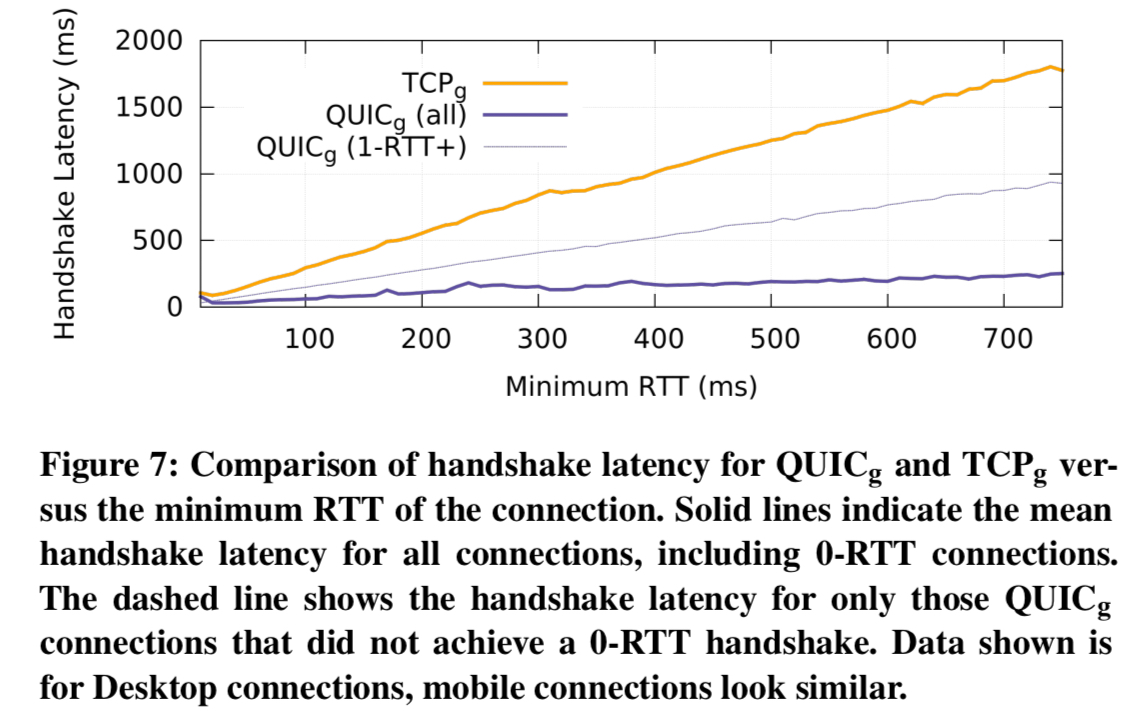

QUIC combines the cryptographic and transport handshake into one round trip when setting up a secure transport connection. If a connection is successfully established, the client can cache information about the origin it connected to. When reconnecting to the same origin, this cached information can be used to establish an encrypted connection with no additional round trips. Data can be sent immediately after sending the client hello, without even needing to wait for the server’s reply (0-RTT). If the client’s cached information becomes out of date, then this 0-RTT handshake falls back to the 1-RTT variant.
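The choice between the 0-RTT and 1-RTT paths can be sketched as a small decision function. This is an illustration only, not QUIC's actual handshake logic: `CachedServerConfig`, `handshake_round_trips`, the `server_accepts_cached` flag, and the one-hour cache lifetime are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class CachedServerConfig:
    """Hypothetical stand-in for what a client remembers about an origin."""
    origin: str
    expires_at: float  # seconds on the caller's clock

# Assumed cache lifetime, purely for illustration.
CACHE_LIFETIME_S = 3600.0


def handshake_round_trips(cache: dict, origin: str, now: float,
                          server_accepts_cached: bool = True) -> int:
    """Return how many round trips pass before application data can be sent."""
    entry = cache.get(origin)
    if entry is None or entry.expires_at <= now:
        # Nothing usable cached: do the 1-RTT handshake and remember the result.
        cache[origin] = CachedServerConfig(origin, now + CACHE_LIFETIME_S)
        return 1
    if not server_accepts_cached:
        # The server considers the cached information out of date:
        # fall back to 1-RTT and refresh the cache.
        cache[origin] = CachedServerConfig(origin, now + CACHE_LIFETIME_S)
        return 1
    # Cached information is still good: data rides along with the client hello.
    return 0


cache = {}
print(handshake_round_trips(cache, "example.com", now=0.0))   # first contact
print(handshake_round_trips(cache, "example.com", now=10.0))  # repeat contact
```

The first call has nothing cached and so pays one round trip; the second reuses the cached entry and pays none.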

Stream multiplexing

To avoid head-of-line blocking due to TCP’s sequential delivery, QUIC supports multiple streams within a connection, ensuring that a lost UDP packet only impacts those streams whose data was carried in the packet.

Streams are lightweight enough that a new stream can reasonably be used for each of a series of small messages.
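To see why a lost packet only stalls the streams it carried, here is a toy receiver model. It is a sketch under simplifying assumptions (each packet is a list of `(stream_id, offset, data)` frames, and a stream can deliver bytes to the application only in offset order); the function name `deliverable` and the data layout are made up for this example and do not reflect QUIC's wire format.

```python
from collections import defaultdict


def deliverable(packets, lost):
    """Return, per stream, the bytes that can be delivered in order.

    packets: list of (packet_number, [(stream_id, offset, data), ...])
    lost:    set of packet numbers that never arrived
    """
    received = defaultdict(dict)  # stream_id -> {offset: data}
    stream_ids = set()
    for packet_number, frames in packets:
        for stream_id, offset, data in frames:
            stream_ids.add(stream_id)
            if packet_number not in lost:
                received[stream_id][offset] = data

    result = {}
    for stream_id in stream_ids:
        delivered = b""
        offset = 0
        # Deliver contiguous data starting at offset 0; stop at the first gap.
        while offset in received[stream_id]:
            chunk = received[stream_id].pop(offset)
            delivered += chunk
            offset += len(chunk)
        result[stream_id] = delivered
    return result


packets = [
    (1, [(1, 0, b"aaaa")]),
    (2, [(3, 0, b"bbbb")]),  # this packet is lost
    (3, [(1, 4, b"cccc")]),
    (4, [(3, 4, b"dddd")]),
]
print(deliverable(packets, lost={2}))
```

With packet 2 lost, stream 1 delivers all eight bytes while stream 3 delivers nothing until the retransmission arrives. Over a single TCP bytestream, the data for stream 1 that was sent after the lost segment would have been held back too.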

Loss recovery

In TCP, retransmitted segments carry the same sequence numbers as the original packet, which complicates ACKs. In QUIC, every packet carries a new sequence number, including those carrying retransmitted data.

Stream offsets in stream frames are used for delivery ordering, separating the two functions [ordering and acknowledgement] that TCP conflates. The packet number represents an explicit time-ordering, which enables simpler and more accurate loss detection than in TCP.

The separation also allows more accurate network RTT estimation, helping delay-sensing congestion controllers such as BBR and PCC.
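A minimal sketch of that separation follows, assuming a sender that tracks in-flight packets by packet number. The `Sender` class and its methods are hypothetical, and real QUIC details such as ACK ranges and acknowledgement delay are left out.

```python
class Sender:
    """Toy sender: packet numbers identify transmissions, offsets identify data."""

    def __init__(self):
        self.next_packet_number = 1
        # packet_number -> (send_time, stream_id, offset, data)
        self.in_flight = {}

    def send(self, stream_id, offset, data, now):
        packet_number = self.next_packet_number
        self.next_packet_number += 1  # never reused, even for retransmissions
        self.in_flight[packet_number] = (now, stream_id, offset, data)
        return packet_number

    def retransmit(self, lost_packet_number, now):
        # Same stream offset and data, but a brand-new packet number.
        _, stream_id, offset, data = self.in_flight.pop(lost_packet_number)
        return self.send(stream_id, offset, data, now)

    def on_ack(self, packet_number, now):
        # The ACK names exactly one transmission, so the RTT sample is unambiguous.
        send_time, _, _, _ = self.in_flight.pop(packet_number)
        return now - send_time


sender = Sender()
first = sender.send(stream_id=5, offset=0, data=b"hello", now=0.0)
second = sender.retransmit(first, now=1.0)
print(first, second, sender.on_ack(second, now=1.25))
```

Because the retransmission has its own packet number, the acknowledgement can only refer to the second transmission, so the RTT sample is measured from the second send time. With TCP's reused sequence numbers the sender cannot tell which transmission is being acknowledged.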

Authentication and encryption

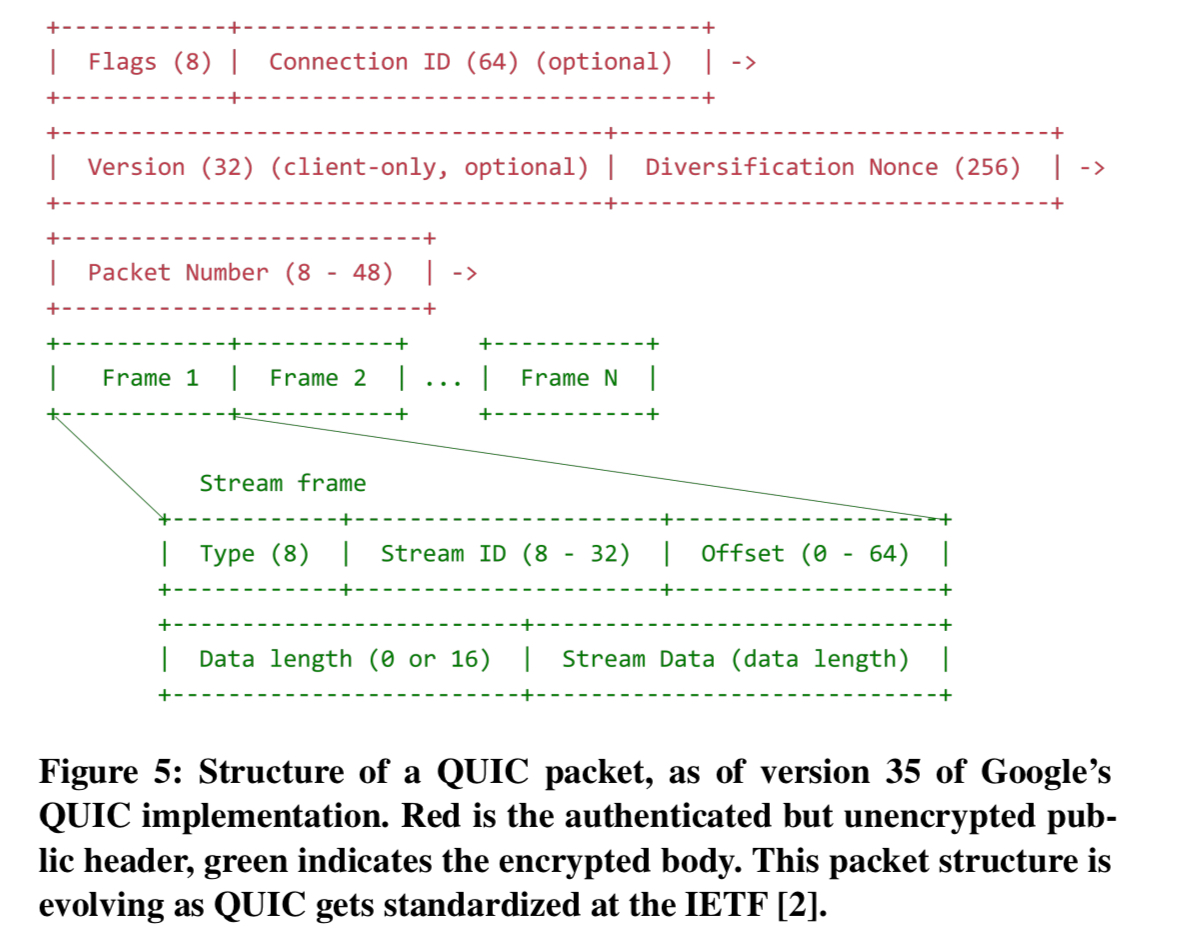

With the exception of the early handshake packets and reset packets, QUIC packets are fully authenticated and mostly encrypted, as shown below:

QUIC’s cryptography provides two levels of secrecy: initial client data is encrypted using initial keys, and subsequent client data and all server data are encrypted using forward-secure keys. All of the information sent in the clear is also included in the derivation of the final connection keys – so any in network tampering will be detected and cause the connection to fail.

Flow control

QUIC uses stream-level flow control, in conjunction with an overall connection flow control mechanism. As in HTTP/2, the flow control mechanism is credit based:

A QUIC receiver advertises the absolute byte offset within each stream up to which the receiver is willing to receive data. As data is sent, received, and delivered on a particular stream, the receiver periodically sends window update frames that increase the advertised offset limit for that stream, allowing the peer to send more data on that stream.
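The credit scheme for a single stream can be sketched as below. The class names, the fixed window size, and the "send an update once half a window has been freed" policy are assumptions for illustration; connection-level flow control, which would cap the sum across streams in the same way, is omitted.

```python
class StreamReceiver:
    """Advertises an absolute byte offset up to which it will accept data."""

    def __init__(self, window):
        self.window = window
        self.consumed = 0          # bytes handed to the application so far
        self.max_offset = window   # currently advertised absolute limit

    def on_app_read(self, n):
        """Application consumed n bytes; maybe return a new limit to advertise."""
        self.consumed += n
        new_limit = self.consumed + self.window
        # Assumed policy: only send an update once half a window has been freed.
        if new_limit - self.max_offset >= self.window // 2:
            self.max_offset = new_limit
            return new_limit
        return None


class StreamSender:
    """Never sends past the peer's advertised absolute offset."""

    def __init__(self, limit):
        self.sent = 0
        self.limit = limit

    def send(self, n):
        allowed = min(n, self.limit - self.sent)
        self.sent += allowed
        return allowed  # bytes actually sent; the rest must wait for credit

    def on_window_update(self, new_limit):
        # Limits are absolute offsets, so a stale update can never shrink credit.
        self.limit = max(self.limit, new_limit)


rx = StreamReceiver(window=100)
tx = StreamSender(limit=rx.max_offset)
print(tx.send(150))          # blocked at the advertised offset
update = rx.on_app_read(60)  # application drains 60 bytes
tx.on_window_update(update)
print(tx.send(100))          # only the newly granted credit goes out
```

Using absolute offsets rather than increments means window updates are idempotent: a duplicated or reordered update cannot grant credit twice.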

Congestion control

Congestion control in QUIC is pluggable. The initial implementation uses Cubic.
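One way to picture "pluggable" is a small interface that the connection calls into, with the algorithm behind it swappable. The interface and the `ToyAimd` controller below are hypothetical and deliberately simplistic; this is not Cubic and not the interface used in Google's implementation.

```python
from abc import ABC, abstractmethod


class CongestionController(ABC):
    """Hypothetical plug-in interface the connection depends on."""

    @abstractmethod
    def on_ack(self, acked_bytes: int) -> None: ...

    @abstractmethod
    def on_loss(self) -> None: ...

    @abstractmethod
    def window(self) -> int: ...


class ToyAimd(CongestionController):
    """Additive-increase, multiplicative-decrease; a stand-in, not Cubic."""

    def __init__(self, mss: int = 1200, initial_packets: int = 10):
        self.mss = mss
        self.cwnd = mss * initial_packets

    def on_ack(self, acked_bytes: int) -> None:
        # Grow by roughly one MSS per full window of acknowledged data.
        self.cwnd += self.mss * acked_bytes // self.cwnd

    def on_loss(self) -> None:
        # Halve the window, but never go below two packets.
        self.cwnd = max(2 * self.mss, self.cwnd // 2)

    def window(self) -> int:
        return self.cwnd


class Connection:
    """Only talks to the interface, so the algorithm can be swapped freely."""

    def __init__(self, controller: CongestionController):
        self.controller = controller
        self.bytes_in_flight = 0

    def can_send(self, n: int) -> bool:
        return self.bytes_in_flight + n <= self.controller.window()


conn = Connection(ToyAimd())
print(conn.can_send(12000), conn.can_send(12001))
```

Swapping in a different algorithm means passing a different `CongestionController` to `Connection`; nothing else in the connection changes.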

How Google built and evolved QUIC

Our development of the QUIC protocol relies heavily on continual Internet-scale experimentation to examine the value of various features and to tune parameters… We drove QUIC experimentation by implementing it in Chrome, which has a strong experimentation and analysis framework that allows new features to be A/B tested and evaluated before full launch.

QUIC was also able to directly link experiments into the analytics of the application services using QUIC connections. This enabled the team to quantify the end-to-end effect on user and application centric performance metrics. QUIC support in the YouTube and the Android Google Search apps was also able to take advantage of their respective experimentation frameworks.

Through small but repeatable improvements and rapid iteration, the QUIC project has been able to establish and sustain an appreciable and steady trajectory of cumulative performance gains.

Is QUIC actually quick?

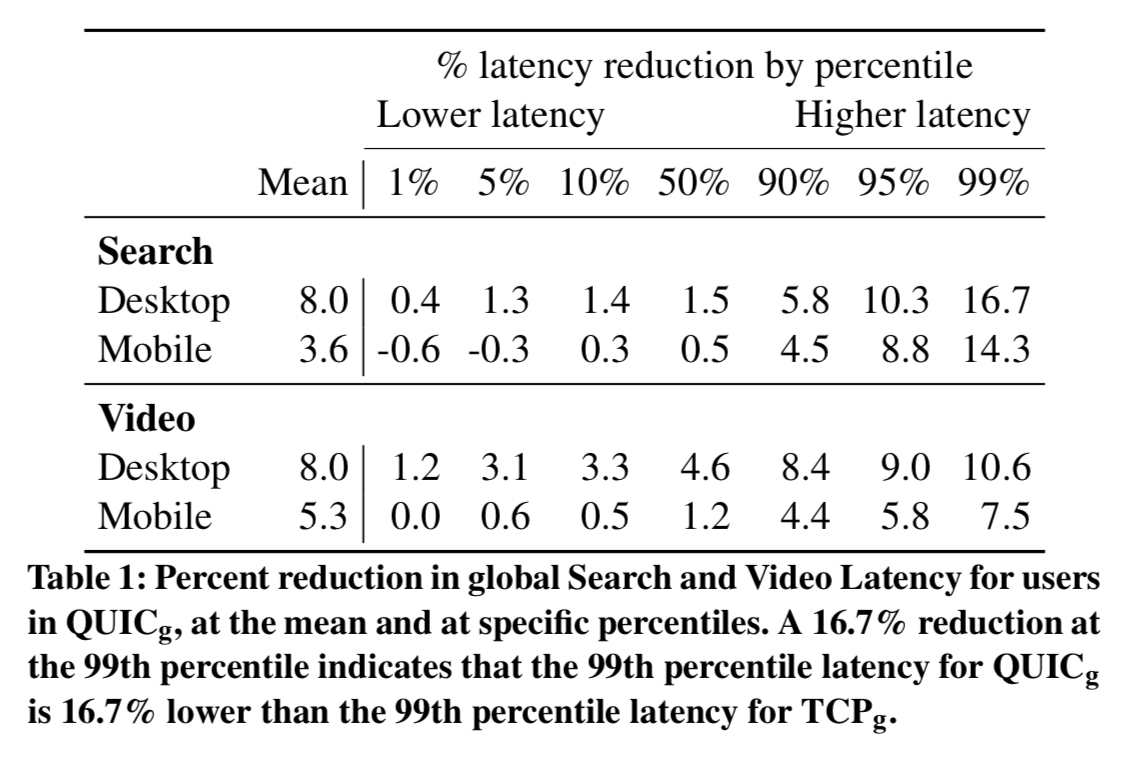

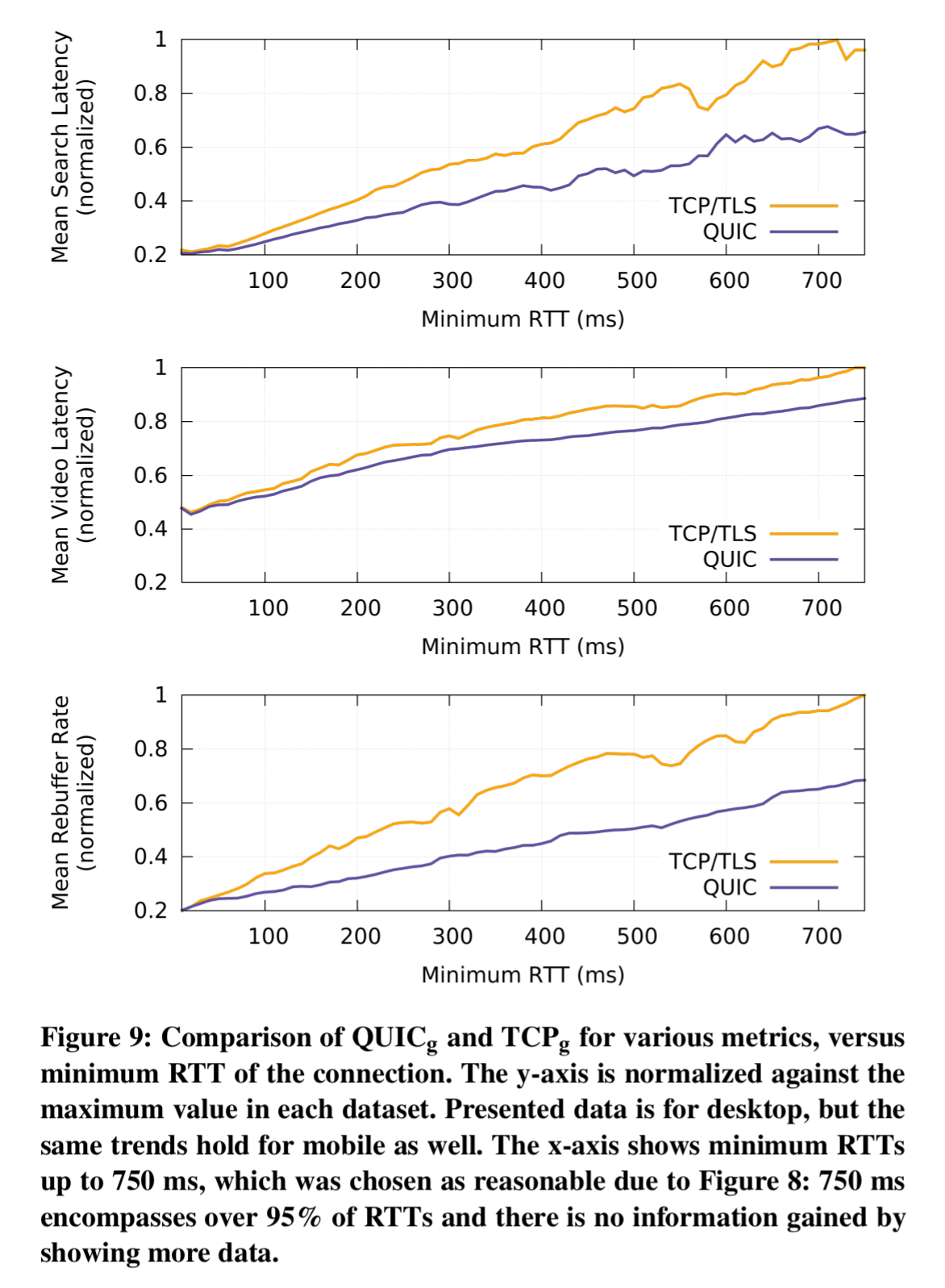

The table below shows the reductions in search and video latency achieved when using the QUIC protocol. The improvement mostly comes from reducing handshake latency.

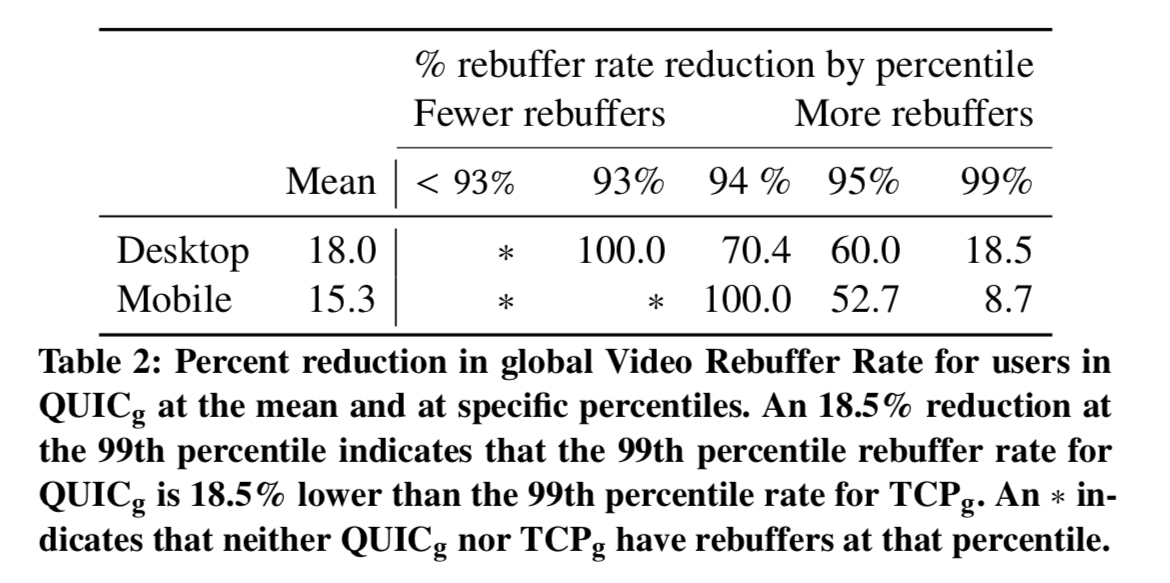

Users of QUIC also saw reduced playback interruptions due to rebuffering. This is due to QUIC’s reduced loss-recovery latency.

And here’s how QUIC stands up against the TCP/TLS alternative:

The downside is that QUIC is currently twice as expensive as TCP/TLS in terms of CPU.

Great writeup as always Adrian!

I should start by saying I’m a big fan of QUIC and look forward to seeing it standardised and used more widely outside of Google.

However, IIRC (and please correct me, it’s been a while since I read the spec and it may have changed), there is one other somewhat significant limitation I think is worth calling out in a summary like this.

The initial request payload in a 0-RTT connection does not have the same security guarantees as subsequent requests. Again IIRC it is vulnerable to replay attacks and so it is only safe to issue idempotent requests as the first request in 0-RTT.

That limitation has two significant impacts:

1. client libraries must directly expose an API that allows the developer to explicitly signal that a request is 0-RTT safe before it can benefit from 0-RTT reconnection.

2. developers must reason about this, and explicitly add code or refactor to allow that to happen

Both mean that you don’t get at least some of the stated 0-RTT performance benefits seen here “for free”, without application changes beyond just switching out the transport layer.

Thanks for pointing out the limitations.

As with everything else in life, there is no magic, only trade-offs.