A cloud-based content gathering network

Bhattacherjee et al., HotCloud’17

We all know what a content distribution network is, but what’s a content gathering network?! CDNs are great, but their benefits are reduced for clients that have a relatively high-latency last-mile connection – especially given that a typical web page request will involve many round trips.

It is worth noting that such large last-mile latencies (e.g., 100ms) are not atypical in many parts of the world. There is, of course, significant ongoing work both on cutting down the number of RTTs a web request takes and on improving last-mile latencies. However, realizing these benefits across the large ecosystem of web service providers and ISPs could still take many years. How do we improve performance for users in these environments today?

The core idea of a CGN is to gather all the information needed for a page load in a place that has a short RTT to the web server, and then transfer it to the client in (ideally) one round trip. At a cost of about $1 per user per month, the authors show that it can reduce the median page load time across 100 popular web sites by up to 53%. That’s definitely something users would love!

CDN vs CGN

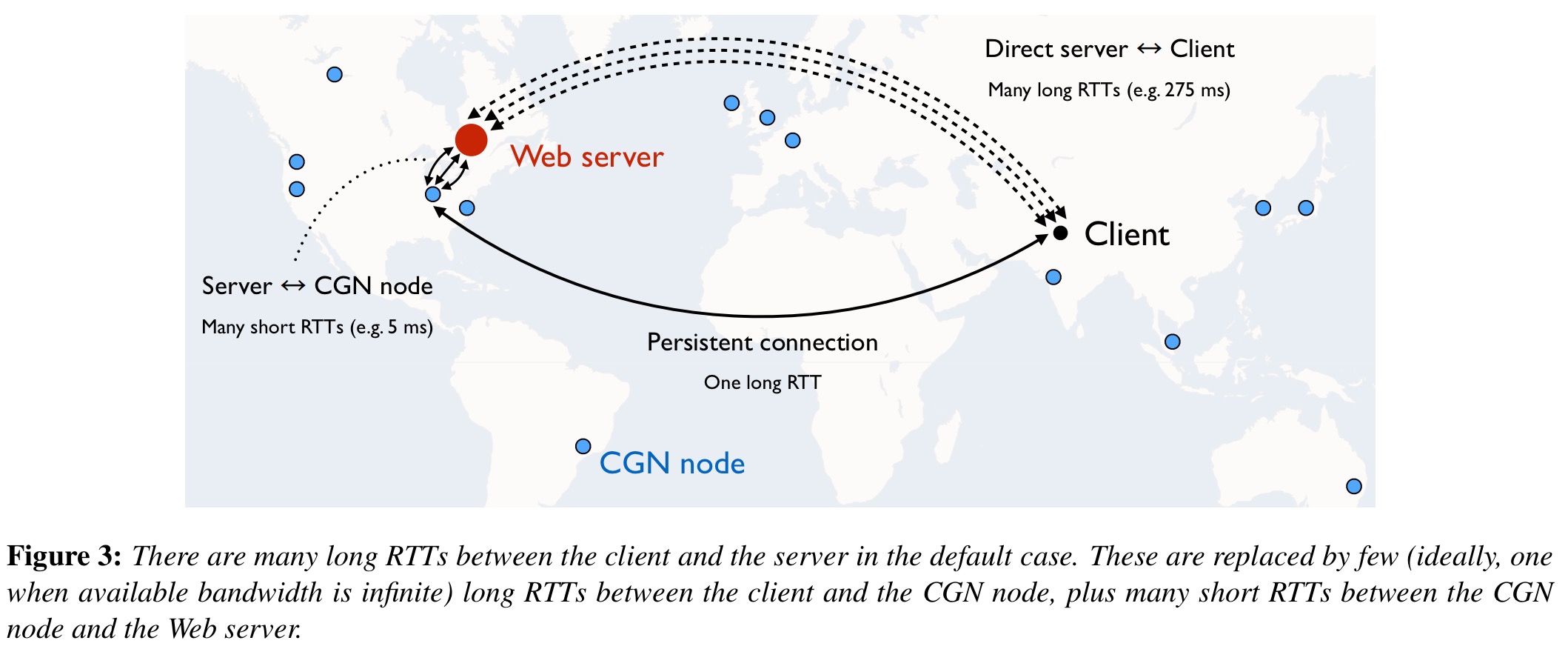

CDNs locate customers’ content as close to the end user as possible, whereas a CGN tries to locate (a proxy for) the end user as close to the content as possible. On the surface that might not seem to make much difference, until you take into account the number of round trips needed to load a web page. The picture above helps to illustrate what’s going on:

Suppose we have a web server serving some site on the east coast of the US. A CGN node in, for example, EC2 US-East will probably have very low-latency access to the site. So it can make the many round trips needed to load a page locally, and then transfer the gathered content to the client.
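To make the round-trip argument concrete, here’s a back-of-the-envelope latency model in Python. It’s my own simplification, not the paper’s: it assumes a page load needs a fixed number of sequential round trips (20 here, a hypothetical figure), ignores bandwidth, server processing time and request parallelism, and assumes the client’s RTT to a CGN node sitting next to the server is roughly the same as its RTT to the server itself. The RTTs plugged in are the median figures for Lahore and EC2 that the authors report.

```python
# A deliberately simplified page-load-time (PLT) model: it counts only
# sequential round trips and ignores bandwidth, server processing time
# and request parallelism.

def plt_direct_ms(round_trips: int, client_rtt_ms: float) -> float:
    """Without a CGN, every round trip crosses the slow last mile."""
    return round_trips * client_rtt_ms

def plt_cgn_ms(round_trips: int, cgn_server_rtt_ms: float,
               client_cgn_rtt_ms: float, client_round_trips: int = 1) -> float:
    """With a CGN, the node makes the round trips close to the server, then
    ships the gathered content to the client in client_round_trips trips."""
    return round_trips * cgn_server_rtt_ms + client_round_trips * client_cgn_rtt_ms

ROUND_TRIPS = 20       # hypothetical number of sequential round trips
LAHORE_RTT_MS = 275.8  # median client RTT from Lahore (reported in the paper)
EC2_RTT_MS = 4.8       # median RTT from the nearest EC2 region (reported)

# Assumption: client-to-CGN RTT ~= client-to-server RTT.
print(f"direct:   {plt_direct_ms(ROUND_TRIPS, LAHORE_RTT_MS):.0f} ms")  # 5516 ms
print(f"with CGN: {plt_cgn_ms(ROUND_TRIPS, EC2_RTT_MS, LAHORE_RTT_MS):.0f} ms")  # 372 ms
```

In this crude model the long-haul RTT is paid once rather than twenty times. A prototype that still needs a few client round trips (as the current implementation does) corresponds to a larger `client_round_trips`.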

The client and the CGN node use aggressive transport protocols, with large initial window sizes, and without the need for an additional handshake (unlike TCP).
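Why does a large initial window matter? Under TCP slow start the sender’s congestion window begins small and roughly doubles each round trip, so a big page needs several round trips just to ramp up. The sketch below counts those round trips under idealized slow start (no losses, no receive-window limit, handshake not counted). The 1460-byte segment size, the 2 MB page and the window sizes compared are illustrative assumptions; the paper’s actual transport parameters aren’t given here.

```python
import math

MSS_BYTES = 1460  # assumed maximum segment size (typical for Ethernet paths)

def rtts_to_deliver(total_bytes: int, initcwnd_segments: int) -> int:
    """Round trips to deliver total_bytes under idealized TCP slow start.

    The congestion window starts at initcwnd_segments and doubles after
    each round trip. Losses, receive-window limits and the connection
    handshake are all ignored.
    """
    segments_left = math.ceil(total_bytes / MSS_BYTES)
    cwnd = initcwnd_segments
    rtts = 0
    while segments_left > 0:
        segments_left -= cwnd  # one window's worth of segments per round trip
        cwnd *= 2
        rtts += 1
    return rtts

PAGE_BYTES = 2_000_000  # hypothetical 2 MB page
print(rtts_to_deliver(PAGE_BYTES, 10))    # common default initial window: prints 8
print(rtts_to_deliver(PAGE_BYTES, 1000))  # hypothetical aggressive window: prints 2
```

A fresh TCP connection would add one more round trip for its handshake on top of these counts, which is exactly the cost the CGN transport avoids.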

Here’s the full picture: the client browser is configured to forward web requests to a local HTTP proxy service, which then forwards the URL to the CGN node nearest to the hosting web server. The CGN node runs a headless browser, which starts downloading the web page. As the content is downloaded, the CGN node forwards it to the client’s local proxy in parallel using a TCP byte stream, and the local proxy serves the content to the browser.
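Since the CGN node streams many resources back over one TCP byte stream, the local proxy needs some way to tell where one resource ends and the next begins. The post doesn’t describe the actual wire format, so what follows is a hypothetical length-prefixed framing in Python: each frame carries a resource URL and body, preceded by their lengths. The names `encode_frame` and `decode_frames` are my own, for illustration only.

```python
import struct
from typing import Iterator

# Hypothetical frame header: URL length and body length, each a 4-byte
# unsigned integer in network (big-endian) byte order.
HEADER = struct.Struct("!II")

def encode_frame(url: str, body: bytes) -> bytes:
    """Wrap one fetched resource as a self-delimiting frame."""
    url_bytes = url.encode("utf-8")
    return HEADER.pack(len(url_bytes), len(body)) + url_bytes + body

def decode_frames(stream: bytes) -> Iterator[tuple[str, bytes]]:
    """Split a complete buffer back into (url, body) pairs, in order."""
    offset = 0
    while offset < len(stream):
        url_len, body_len = HEADER.unpack_from(stream, offset)
        offset += HEADER.size
        end = offset + url_len + body_len
        if end > len(stream):
            raise ValueError("truncated frame")
        url = stream[offset:offset + url_len].decode("utf-8")
        body = stream[offset + url_len:end]
        offset = end
        yield url, body

# Example: two resources multiplexed onto one byte stream.
frames = [("http://example.com/", b"<html></html>"),
          ("http://example.com/app.js", b"console.log(1);")]
stream = b"".join(encode_frame(url, body) for url, body in frames)
for url, body in decode_frames(stream):
    print(url, len(body))
```

A real local proxy would parse frames incrementally as bytes arrive on the socket, rather than from a complete buffer, so the browser can start rendering before the whole page has been transferred.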

How does the proxy service know which CGN node is nearest to the hosting web server? A network of CGN nodes is deployed (e.g., at least one in each public cloud region); each node independently measures latencies to a list of sites and exchanges its results with the other nodes. The resulting mapping is only a few MB, even for a million popular web sites. Clients obtain the mapping from the CGN nodes. Experiments conducted by the authors show that the mappings are stable enough that updates can be infrequent (e.g., once a day).
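The mapping step itself is simple to sketch: given each node’s latency measurements, assign every site to the node that measured the lowest RTT to it. The data layout, node names and RTT values below are hypothetical, chosen only to illustrate the idea.

```python
def build_mapping(measurements: dict[str, dict[str, float]]) -> dict[str, str]:
    """Map each site to the CGN node that measured the lowest RTT to it.

    measurements[node][site] is the RTT in ms that `node` measured to `site`.
    Ties go to whichever node is encountered first.
    """
    best: dict[str, tuple[float, str]] = {}
    for node, rtts in measurements.items():
        for site, rtt in rtts.items():
            if site not in best or rtt < best[site][0]:
                best[site] = (rtt, node)
    return {site: node for site, (_, node) in best.items()}

# Hypothetical measurements from two nodes (values are illustrative only).
measurements = {
    "us-east-1": {"example.com": 3.1, "example.de": 92.0},
    "eu-central-1": {"example.com": 88.5, "example.de": 2.4},
}
print(build_mapping(measurements))
# {'example.com': 'us-east-1', 'example.de': 'eu-central-1'}
```

Because the mapping is small and stable, a client could fetch it once a day and then do a plain dictionary lookup per request.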

The rise of public clouds changes the game

We observe that present public cloud infrastructure provides enough global points of presence to be able to reach within close proximity of most popular Web services, and thus provides a natural platform to build a “content gathering network,” which operates on behalf of users.

On the other side of the coin, with increasing numbers of websites themselves being cloud hosted, the distances between public cloud infrastructure and popular websites can be very small indeed. The authors set out to measure just how low those latencies might be…

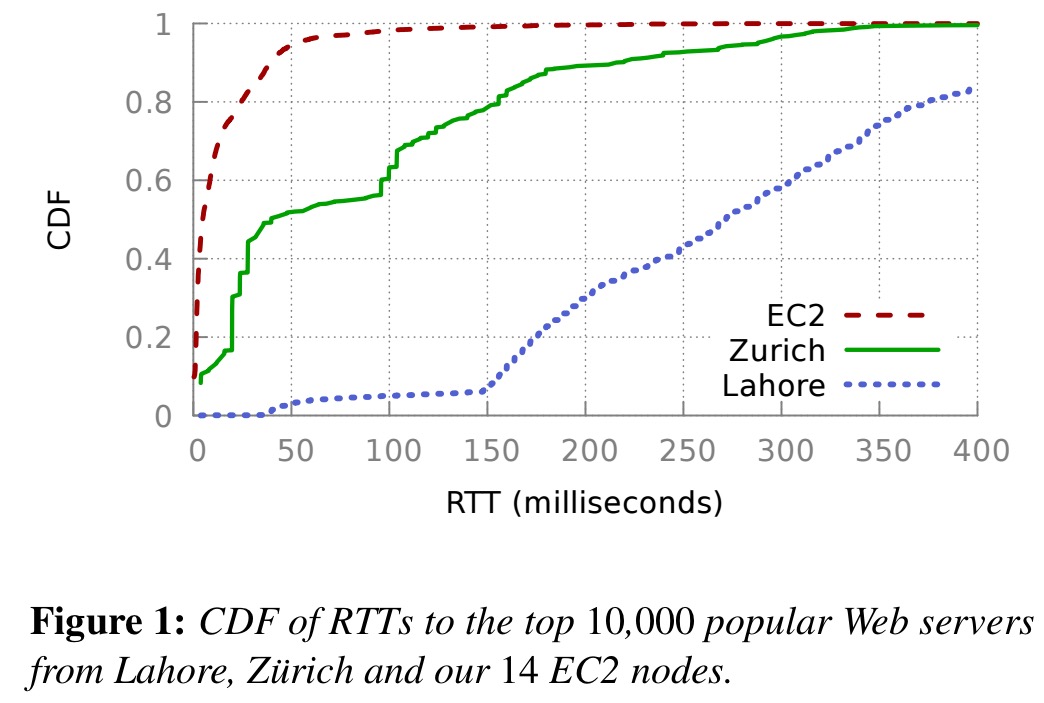

We deployed one node in each of Amazon’s 14 data center regions. From each of these 14 nodes, we measure round trip times to each Web server hosting the top 100,000 web sites in Alexa’s list of popular sites… For a smaller set of Web servers (top 10,000), we also similarly measured RTTs from clients in Lahore, Pakistan and Zurich, Switzerland. Both of these clients are university-hosted and “real” end-user connectivity is likely to be worse.

The results? Taking the median (and 90th percentile), for the top 10,000 domains, the nearest EC2 region is within 4.8ms (39.5ms), Zurich within 39.8ms (215.8ms), and Lahore within 275.8ms (471.8ms).

… median RTT from EC2 is 8x smaller than from Zurich, and 57x smaller than from Lahore.
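As a quick sanity check on those ratios, using the median figures quoted above:

```python
# Median RTTs (ms) to the top 10,000 domains, as reported by the authors.
median_rtt_ms = {"EC2": 4.8, "Zurich": 39.8, "Lahore": 275.8}

for client in ("Zurich", "Lahore"):
    ratio = median_rtt_ms[client] / median_rtt_ms["EC2"]
    print(f"{client}: {ratio:.1f}x the EC2 median")
# Zurich: 8.3x the EC2 median
# Lahore: 57.5x the EC2 median
```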

For the top 100,000 domains, the median RTT from EC2 is still only 7.2ms.

Over a one-week period, the latency from each domain’s mapped EC2 node stayed very stable.

Page load time improvements

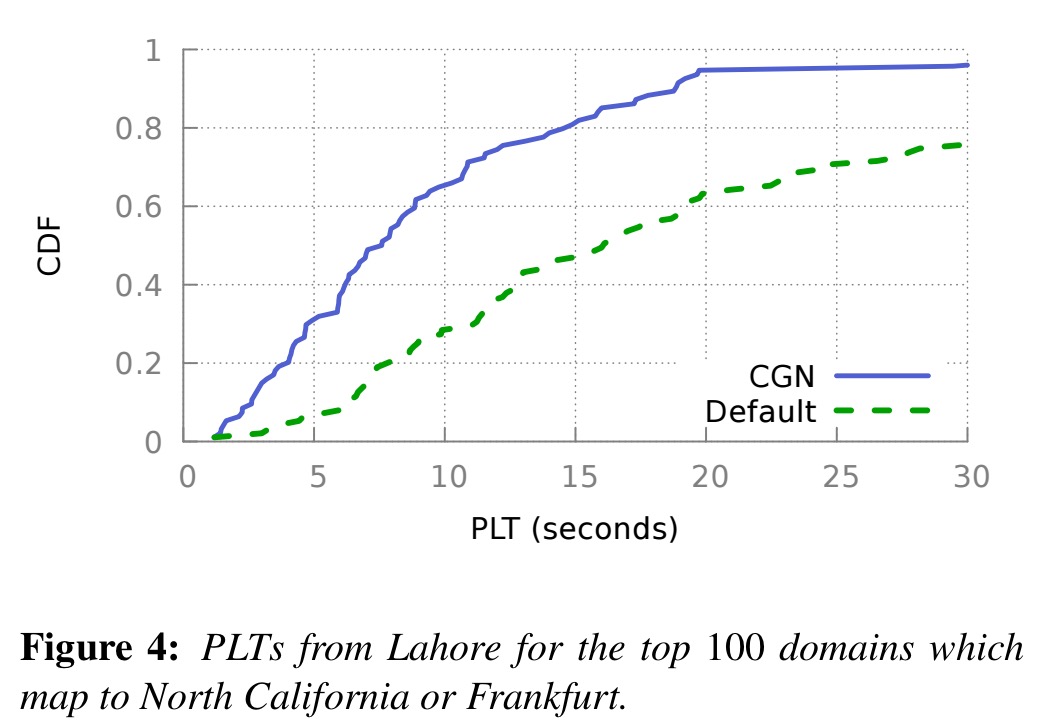

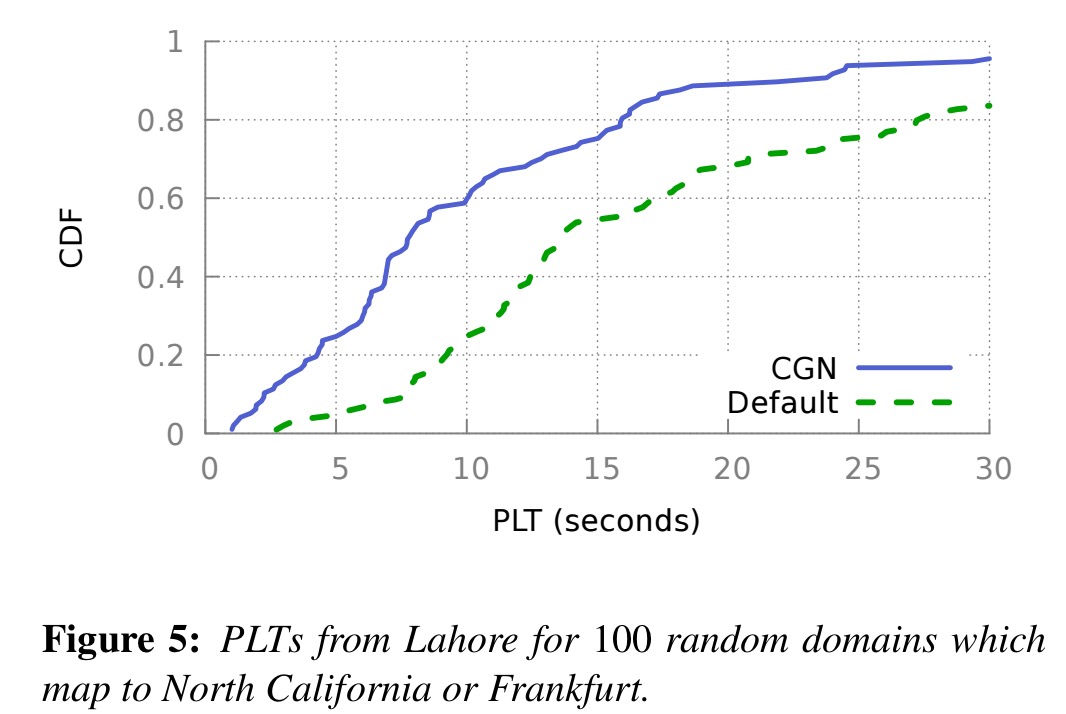

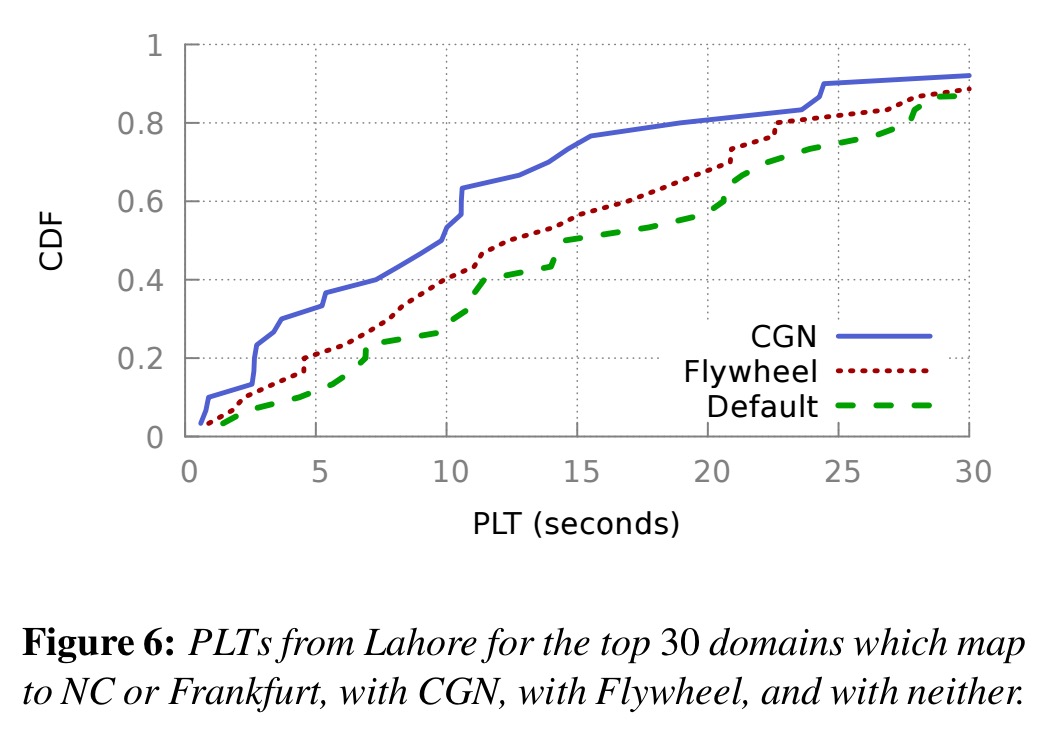

For their evaluation, the team used m4.10xlarge EC2 instances as CGN nodes, and PhantomJS as the headless browser. Two CGN nodes were operated: one in North Virginia, USA, and one in Frankfurt, Germany. Only domains that would map to one of these two locations were tested.

The current implementation does not quite hit the goal of a single round trip between CGN and client, because of differences in behaviour between client-side browsers and headless browsers; as a result, some content is still fetched from traditional CDN nodes. “But we do not believe that the 1-RTT goal is unreachable, and are working towards it.”

Web pages were loaded with and without the CGN from a client in Lahore. Even though requests for CDN-hosted content still went directly to the CDN servers (for now), the results are impressive.

Out of the Alexa top-10K, we evaluate the top 100 and random 100 web pages which map to either of our two CGN nodes. For the top-100 set, the median page load time reduces from (the default) 16.1 seconds to 7.6 seconds with CGN – a reduction of 53%.

For the random 100 set, median page load time is reduced by 43.2%.
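The 53% figure for the top-100 set follows directly from the two reported medians:

```python
def reduction_pct(before_s: float, after_s: float) -> float:
    """Relative reduction in page load time, as a percentage."""
    return (before_s - after_s) / before_s * 100

# Top-100 median page load times from the paper: 16.1 s default, 7.6 s with CGN.
print(f"{reduction_pct(16.1, 7.6):.1f}%")  # 52.8%
```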

We also get a quick comparison with Google’s Flywheel using the “Data Saver” extension for Chrome. Testing against the top 30 domains, CGN still shows significant gains beyond Flywheel’s.

We note that Flywheel is a complex system with numerous optimizations, including compression, caching, and prefetching at the proxies. These techniques are all orthogonal to ours, and could readily be added to our approach for even more reduction in PLTs.

Given how well CGNs work, the authors are moved to ask “can we leverage public cloud infrastructure and the observation that most content is hosted in (or near) this infrastructure to entirely eliminate CDNs from Web site delivery?” (Note the scoping to web sites, characterised by large numbers of relatively small requests. For applications like video streaming, CDNs will always win.)

What about…?

How much might it cost to run a CGN network? Some back-of-the-envelope calculations by the authors suggest that with a network shared by multiple users, the cost to provide the service comes in at about $1 per user per month.
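The post doesn’t reproduce the authors’ calculation, but an estimate of this kind typically amortizes shared compute across users and adds each user’s own data-transfer cost. Here’s one way to structure it in Python; every input value in the example is a made-up placeholder, not a figure from the paper, and real cloud pricing will differ.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)

def monthly_cost_per_user(instance_usd_per_hour: float, instances: int,
                          users: int, egress_gb_per_user: float,
                          egress_usd_per_gb: float) -> float:
    """Shared compute cost split evenly across users, plus per-user egress."""
    compute = instance_usd_per_hour * HOURS_PER_MONTH * instances / users
    egress = egress_gb_per_user * egress_usd_per_gb
    return compute + egress

# Hypothetical placeholder inputs, NOT the paper's numbers.
estimate = monthly_cost_per_user(instance_usd_per_hour=2.0, instances=14,
                                 users=100_000, egress_gb_per_user=5.0,
                                 egress_usd_per_gb=0.09)
print(f"${estimate:.2f} per user per month")
```

The structure makes the sharing argument visible: the compute term shrinks as more users share the same nodes, while the egress term scales with each user’s own traffic.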

HTTPS. This is a big one for me. While supporting HTTPS is technically feasible, the client browser would need to trust the CGN node acting as a TLS/SSL proxy. “There is also scope for using techniques like Intel SGX to hide the content of client-server interactions from the CGN nodes as a privacy enhancement, although CGN nodes will still be able to see which servers a client connects to.” The authors note that CDNs already operate under a similar trust model, but to me there’s a big difference between shared static resources and dynamic content containing sensitive personal information.

Commercial model

Who would host a CGN service? The authors have three suggestions:

- Cloud providers could provide this as a service, to attract more web service providers to their infrastructure

- Browser vendors competing for market share could run the service

- Web service providers themselves may incur the expense

Rather than seeing CGNs as lost business for CDN vendors, though, I see an obvious fourth option: CDN vendors themselves could operate CGN capabilities alongside their existing CDN networks.