No paper today; instead, a short piece to tee up the next mini-series of papers I’ll be covering…

There’s a lot of excitement around chatbots in the startup community. You can divide this into two broad classes:

- Consumer-oriented services that want to reach an audience which increasingly spends most of its time in messaging applications. Here the mantra is ‘go to where the users are’ and make using your service as simple as adding and chatting to a friend. Of course, this still doesn’t solve the discovery problem (how do potential users know you exist in the first place?). Furthermore, the service you’re offering has to be amenable to chat-style interaction or at least initiation: understanding the capabilities of the underlying technologies and where they are heading can help us to get a handle on that. Interactions here are typically 1:1.

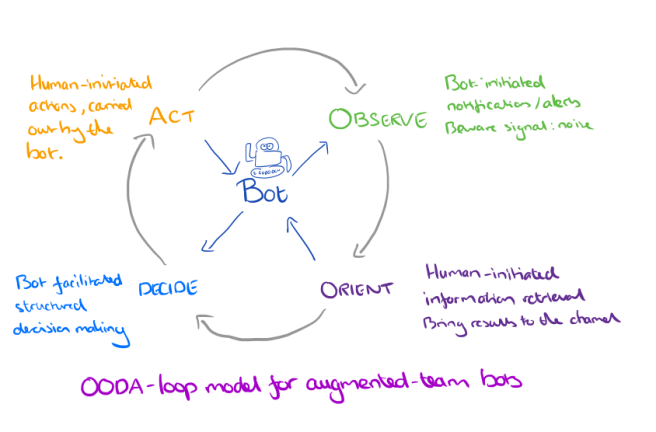

- Team-oriented (typically B2B) services in which the bot becomes like an additional member of the team. Again, the principle is to go to where the team already is – for example, hanging out on a Slack channel (disclaimer, Accel is an investor). Interactions here are 1 (bot) : n. The model I’ve been using for thinking about such bots is based on the good old OODA (Observe, Orient, Decide, Act) loop. Not all bots need to participate in every phase of the loop, of course.

- Observe – the bot can bring relevant and timely information to the team’s attention. Key here is to avoid swamping the channel with noisy low-level alerts or notifications. You can bring increasing levels of sophistication to the way that you tune the information shown to the team over time, ranging from simple enable/disable settings to learning models. Observations are bot-initiated.

- Orient – some new information has come to light (perhaps via a bot message) and now the team needs to orient themselves to find out what is really going on. The team can make requests for information to the bot, which brings the requested information back to the channel. Here the central shift in thinking is to bring the information to where the team is, rather than having the team go out and interrogate a diverse set of tools to find it. Orientation activities are human-initiated and the bot needs to discover intent. It may well be useful to manage context across a series of orientation requests so that mentions of ‘it’, ‘the widget’, and so on can be disambiguated.

- Decide – after a period of investigation and orientation the team may go through a decision process to decide what actions, if any, to take. Think for example of a bot guiding a team through a structured process such as following an operations playbook. (Further orienting activities may take place at each step of the decision process.) At one end of the spectrum the bot is playing the role of facilitator, perhaps guiding the team through a simple predefined checklist or decision tree; at the other end of the spectrum the bot could be using learned models to help diagnose a problem and propose a solution.

- Act – if the team decide that some actions need to be carried out as a result of the decision-making process, then it should be possible to express these actions to the bot as commands / requests and have the bot carry them out on the team’s behalf. The bot will need to be able to extract intent and command parameters, ideally making use of conversational context. It should also track outstanding actions to keep the team informed of progress and results.
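To make the loop a little more concrete, here is a minimal sketch in Python of how the Observe, Orient, and Act phases might hang together (Decide is left out for brevity). Everything in it is an assumption made for illustration: the `TeamBot` class, the severity levels, and the two commands (`status of <thing>` and `restart <thing>`) are hypothetical, and the regex-based intent matching stands in for whatever rules or learned models a real bot would use. It is not a description of how any particular product works.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical severity levels; a real bot would define its own.
SEVERITY = {"debug": 0, "info": 1, "warning": 2, "critical": 3}


@dataclass
class TeamBot:
    min_severity: str = "warning"        # Observe: a simple tuning knob
    last_entity: Optional[str] = None    # conversational context
    outstanding: list = field(default_factory=list)  # Act: tracked actions

    def observe(self, severity: str, text: str) -> Optional[str]:
        """Bot-initiated: only surface alerts at or above the threshold."""
        if SEVERITY[severity] < SEVERITY[self.min_severity]:
            return None  # too noisy for the channel
        return f"[{severity}] {text}"

    def _resolve(self, entity: str) -> Optional[str]:
        """Map 'it' to the most recently mentioned entity, if there is one."""
        if entity.lower() == "it":
            return self.last_entity
        self.last_entity = entity
        return entity

    def handle(self, message: str) -> str:
        """Human-initiated: extract intent and parameter, then orient or act."""
        m = re.fullmatch(r"(status of|restart)\s+(\S+)", message.strip(),
                         re.IGNORECASE)
        if not m:
            return "Sorry, I did not understand that."
        verb = m.group(1).lower()
        entity = self._resolve(m.group(2))
        if entity is None:
            return "What does 'it' refer to?"
        if verb == "status of":
            # Orient: a real bot would query the relevant tool here.
            return f"Looking up status of {entity}"
        # Act: record the action so progress can be reported later.
        self.outstanding.append(("restart", entity))
        return f"Restarting {entity}"
```

With this sketch, a team member could ask `status of billing-api` and then follow up with `restart it`; the bot uses the remembered entity to work out what ‘it’ means and records the restart as an outstanding action. The severity threshold is the ‘simple enable/disable settings’ end of the tuning spectrum; a learned model could replace it without changing the rest of the shape.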

The whole process is about augmenting the capabilities of the team to make them more effective, not about replacing people with AI systems. I’ve been using this model with one of the startups I work with, Atomist, who are using a bot as the primary interface to the system they are building.

What does all this have to do with The Morning Paper? In order to separate the hype from the reality, the practical from the pipe-dream, it’s very useful to have a good handle on the capabilities of the underlying technologies. The WildML blog recently posted a great high-level overview of Deep Learning for ChatBots (and I’m looking forward to the next instalments in the series). Rather than repeat everything written there, I encourage you to go and check it out. The bottom line is that you need a relatively restricted domain (as opposed to a bot answering open-ended questions about life, the universe, and everything) and relatively short conversations with modest contextual requirements.

At the end of the Deep Learning for ChatBots article is a recommended reading list for those who are interested in looking into some of the research. I’ll be drawing from this list for the next series of papers on The Morning Paper. First up tomorrow will be “A survey of available corpora for building data-driven dialogue systems.” Don’t worry, it’s more interesting than it sounds :).
