Optimizing Search Engines using Clickthrough Data – Joachims, 2002

Today’s choice is another KDD ‘test-of-time’ winner.

The paper introduced the problem of ranking documents w.r.t. a query using not explicit user feedback but implicit user feedback in the form of clickthrough data. The author presented the Ranking SVM Algorithm to solve the proposed ranking problem. The paper has stimulated much follow-up research, and the Ranking SVM Algorithm has been widely used for many applications, as evidenced by the very large number of citations. The work has also been included in various textbooks.

It’s hard to believe that it was as recently as 2002 that the idea of using clickthrough data to improve search engine rankings was ground-breaking research! It seems so obvious to us now, but it must have been a very exciting breakthrough at the time.(And of course, there’s the tricky question of exactly how to do it, once you’ve had the insight).

Which WWW page(s) does a user actually want to retrieve when he types some keywords into a search engine? … Unfortunately, experience shows that users are only rarely willing to give explicit feedback. However, this paper argues that sufficient information is already hidden in the logfiles of WWW search engines. Since major search engines receive millions of queries per day, such data is available in abundance. Compared to explicit feedback data, which is typically elicited in laborious user studies, any information that can be extracted from logfiles is virtually free and substantially more timely.

We can model clickthrough data as a triplet (q,r,c) : query, ranked list of results, clicks.

The query q and the returned ranking r can easily be recorded whenever the resulting ranking is displayed to the user. For recording the clicks, a simple proxy system can keep a logfile. Each query is assigned a unique ID which is stored in the query-log along with the query words and the presented ranking. The links on the results-page presented to the user do not lead directly to the suggested document, but point to a proxy server. These links encode the query-ID and the URL of the suggested document. When the user clicks on the link, the proxy-server records the URL and the query-ID in the click-log. The proxy then uses the HTTP Location command to forward the user to the target URL. This process can be made transparent to the user and does not influence system performance.

We still need to be a little bit careful interpreting (q,r,c) triplets – users are naturally biased to click on links towards the top of the search rankings so we can’t treat a click as an absolute indication of search result relevance. But we can interpret it as a relative ranking of the results. Suppose a search returns a set of links link1, link2, … linkn. A user that clicks on link3 but not link1 or link2 must have scanned past links 1 and 2 before clicking on 3.

This strategy is captured by the following algorithm:

For a ranking (link1,link2,link3, …) and a set C containing the ranks of the clicked-on links, extract a preference example linki <r* linkj, for all pairs 1 ≤ j < i, with i ∈ C and j ∉ C.

“Unfortunately, this type of feedback is not suitable for standard machine learning algorithms.”

Consider a search engine with an operational retrieval function f, and let rf(q) be the ranking returned by f for the query q. We want to evaluate how closely its ranking rf(q) approximates the optimal ordering r*. Kendall’s τ is used for this, being the most frequently used measure in statistics for comparing the ordinal correlation of two random variables.

Take two finite strict orderings over some set of documents D, ra and rb. Examine all pairs of documents d1 and d2 in D – if ra and rb agree on the relative ranking call (d1,d2) a concordant pair, otherwise if they disagree they are a discordant pair. Let P be the number of concordant pairs, and Q be the number of discordant pairs. Kendall’s τ is simply the fraction of the total number of pairs that are concordant:

τ(ra,rb) = (P – Q) / (P + Q)

We are now in a position to define the problem of learning a ranking function. For a fixed but unknown distribution Pr(q, r∗) of queries and target rankings on a document collection D with m documents, the goal is to learn a retrieval function f(q) for which the expected Kendall’s τ is maximal.

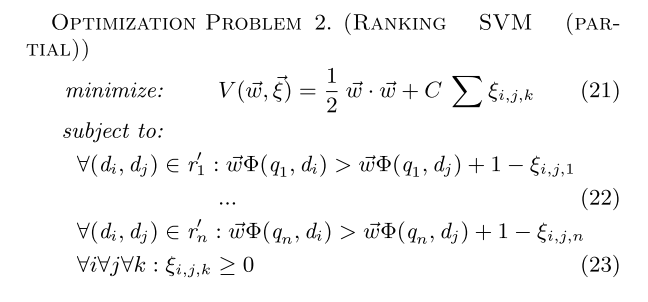

This turns out to be equivalent to minimizing the number of discordant pairs (Q). Unfortunately… this problem is NP-hard, but it is possible to approximate the solution. Joachims shows how to do this with a Support Vector Machine. My SVM-fu isn’t up to giving any meaningful analysis of the way this is done, so I’ll just show you where Joachims ends up:

εi,j,k are the introduced approximation variables that makes the problem practical, and C is a parameter that enables the trading off of margin size against training error.