RAPPOR: Randomized Aggregatable Privacy-Preserving Ordinal Response – Erlingsson et al. 2014

The RAPPOR paper made a brief appearance on HN a few weeks ago, and addresses the question of privacy preservation while collecting data – for example, in order to improve a service offering.

Crowdsourcing data to make better, more informed decisions is becoming increasingly commonplace. For any such crowdsourcing, privacy-preserving mechanisms should be applied to reduce and control the privacy risks introduced by the data collection process, and balance that risk against the beneficial utility of the collected data.

The neat thing about the RAPPOR approach is that it doesn’t rely on a trusted third-party, and once the data leaves the client no-one will ever be able to tell what the client’s true responses were. On the surface that doesn’t sound terribly useful, but by aggregating results from many clients, a true picture of the distribution of the data can be obtained, without compromising privacy of any individual. At the core of RAPPOR is the idea of a randomized response, a surveying technique invented in the 1960’s.

…RAPPOR is a mechanism for collecting statistics from end-user, client-side software, in a manner that provides strong privacy protection using randomized response techniques. RAPPOR is designed to permit collecting, over large numbers of clients, statistics on client-side values and strings, such as their categories, frequencies, histograms, and other set statistics. For any given value reported, RAPPOR gives a strong deniability guarantee for the reporting client, which strictly limits private information disclosed… and holds even for a single client that reports infinitely often on a single value.

RAPPOR makes clever use of Bloom filters – it never ceases to amaze me the uses to which hash functions can be put!

So how does it work?

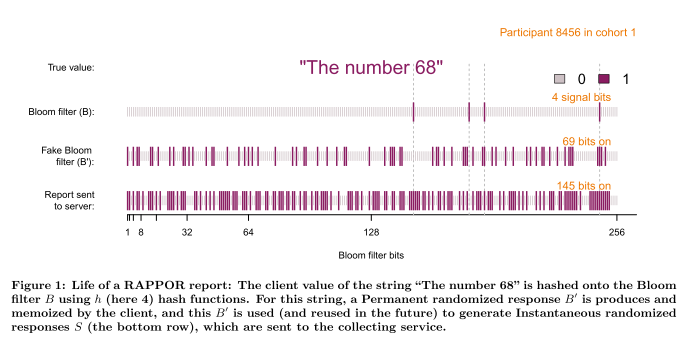

The first time the client responds with value v to a given question, create a permanent randomized response for value v:

- Hash the client’s value v onto a Bloom filter B of size k, using h hash functions

- Create a permanent randomized response B’ for this question using user-tunable parameter f, which controls the level of longitudinal privacy guarantee. B’ is a modifed version of the Bloom filter B, in which each bit is set to:

- 1, with probability f/2

- 0, with probability f/2

- the value of the same bit in B, with probability 1-f

- Memoize B’ for reuse in all reports when responding with value v to this question.

When sending a response back to the server, start with the memoized B’ (the randomized encoding of the true value v), and generate an instantaneous randomized response S (i.e., this step is repeated every time an answer is to be sent). S is a modified version of the already randomized B’. Two tunable parameters p and q govern this second randomization step. For each bit in B’:

- If the bit in B’ is set to 1, the corresponding bit in S is set to 1 with probability q

- If the bit in B’ is set to 0, the corresponding bit in S is set to 1 with probability p

The following figure from the paper illustrates the process.

The paper shows how to control the level of differential privacy by tuning the algorithm parameters (trading off with the accuracy of the statistics that can be extracted from the data).

The drawback to all this is that you need a lot of samples – given a sample of one hundred million responses, you can reliably detect about 1000 unique response strings to a question when tuned for ln(3)-differential privacy. [The practical implication of ln(3)-differential privacy – vs e.g. ln(4) – is a new known-unknown for me].

Approaches such as this which investigate how to obtain useful information from data while preserving privacy seem to me a valuable research direction.