Programs, life cycles, and laws of software evolution, Lehman, Proc. IEEE, 1980

Today’s paper came highly recommended by Kevlin Henney and Nat Pryce in a Twitter thread last week, thank you both!

The footnotes show that the manuscript for this paper was submitted almost exactly 40 years ago – on the 27th February 1980. The problems it describes though (and that the community had already been wrestling with for a couple of decades) seem as fresh and relevant as ever. Is there some kind of Lindy effect for problems as there is for published works? I.e., should we expect to still be grappling with these issues for at least another 40 years? In this particular instance at least, it seems likely.

As computers play an ever larger role in society and the life of the individual, it becomes more and more critical to be able to create and maintain effective, cost-effective, and timely software. For more than two decades, however, the programming fraternity, and through them the computer-user community, has faced serious problems achieving this.

On programming, projects, and products

What does a programmer do? A programmer’s task, according to Lehman, is to "state an algorithm that correctly and unambiguously defines a mechanical procedure for obtaining a solution to a given problem." Although if I had to write a Devil’s Dictionary entry for ‘programmer’ then I’d be tempted to write something more like: "Someone who builds castles in the air, wrangles data in varied and nefarious formats, fiddles with CSS in a never-ending quest for alignment, and navigates labyrinthine mazes." Anyway, the mechanical procedure is expressed in a high-level language, the programmer’s tool of choice.

(Languages) permitted and encouraged concentration on the intellectual tasks which are the real province of the human mind and skill… There are those who believe that the development of programming methodology, high-level languages and associated concepts, is by far the most important step for computer usage. That may well be, but it is by no means sufficient…

The problem is change. All change beyond the first version of the program is grouped by Lehman under the term maintenance. It’s no surprise under that definition that we spend far more time on program maintenance than on initial development. Change can come from needed repairs (bug fixes), but also improvements, adaptations, and extensions.

We shall, in fact, argue that the need for continuous change is intrinsic to the nature of computer usage.

So the thrust of this paper is to shift our thinking from programming / software development / software engineering as the process by which we create a point-in-time program to implement a given procedure, and instead start to focus on a program (software system) as an evolving entity over time. Through that lens we are drawn to think about the life cycle of software systems, the evolutionary forces at play, and how we can guide and manage that evolution.

This is one of those things that sounds so very obvious when we hear it, and yet in practice we often forget and are too focused on a point in time to see the flow over time. The enterprise still tends to suffer from this, a point ably made in Mik Kersten’s recent book "Project to Product" which encourages a shift from thinking about the delivery of projects with a definite end date, to the management of long-living products.

What should we do once we recognise that change is the key thing we need to manage?

The unit cost of change must initially be made as low as possible and its growth, as the system ages, minimized. Programs must be made more alterable, and the alterability maintained throughout their lifetime. The change process itself must be planned and controlled. Assessments of the economic viability of a program must include total lifetime costs and their life cycle distribution, and not be based exclusively on the initial development costs.

The three types of program

Programs exist in a context. Let’s call it ‘the real world.’ Only the real world is overwhelmingly rich in detail, so actually we are forced to think in terms of abstractions. To animate those abstractions we form them into a model with an accompanying theory as to how the various parts interact in a system. When we conceive of a program to interact with this world, we do so by imagining a new model (part) within that system that engages with it to produce the desired outcomes. The actual program that we write, expressed in some programming language, is a model of that model. Or something like that. You can meditate for a long time on what exactly Lehman means when he says:

Any program is a model of a model within a theory of a model of an abstraction of some portion of the world or of some universe of discourse.

Thinking about programs as models existing in a larger (eco)system leads to a classification of three different types of programs, which Lehman calls S-programs, P-programs, and E-programs.

S-programs are the simplest: their functionality is formally defined by and derivable from a specification. These are the kinds of programs that text books are fond of.

Correct solution of the problem as stated, in terms of the programming language being used, becomes the programmer’s sole concern.
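To make the idea concrete, here is a minimal sketch of what an S-program might look like. The choice of problem (lowest common multiple) and the names `lcm` and `satisfies_spec` are illustrative assumptions for this post. The point is only that the specification can be written down completely, so 'correct' has an unambiguous meaning.

```python
from math import gcd


def lcm(a: int, b: int) -> int:
    """Return the lowest common multiple of two positive integers."""
    if a <= 0 or b <= 0:
        raise ValueError("a and b must be positive")
    return a * b // gcd(a, b)


def satisfies_spec(a: int, b: int, result: int) -> bool:
    """Specification: result is the smallest positive integer divisible by both a and b."""
    if result <= 0 or result % a or result % b:
        return False
    # No smaller positive integer may be divisible by both a and b.
    return all(m % a or m % b for m in range(1, result))
```

Nothing outside the specification can make `lcm` wrong: if `satisfies_spec(a, b, lcm(a, b))` holds for all valid inputs, the program is done.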

P-programs are real-world problem solutions. This takes us into the ‘model of a model within a theory…’ territory.

The problem statement can now, in general, no longer be precise. It is a model of an abstraction of a real-world situation, containing uncertainties, unknowns, arbitrary criteria, continuous variables. To some extent it must reflect the personal viewpoint of the analyst. Both the problem statement and its solution approximate the real-world situation.

The critical difference between S-programs and P-programs is that in P-programs concern is centred on how well the solution works in its real-world context. If the real-world outcomes differ from what is desired, the problem hasn’t changed, but the program does have to change. For any program that interacts with the real world in this way there’s one other fact of life that can’t be ignored: the world changes too.

Thus P-programs are very likely to undergo never-ending change or to become steadily less and less effective and cost effective.

E-programs are programs that ‘mechanize a human or societal activity’. This class of program is the most change-prone of all. E-programs (programs embedded in the real world) have real-world feedback loops:

The program has become a part of the world it models, it is embedded in it. Conceptually at least the program as a model contains elements that model itself, the consequences of its execution.

E-programs change because the real world changes, just like P-programs, but E-programs can also be the cause of that change in the real world.

Whereas S-programs can in theory always be proven correct, the definition of ‘correctness’ for E-programs is based on the outcomes they effect in the real world. This tells us something about the limitations of formal techniques: what they can and can’t do for us.

Correctness and proof of correctness of the (E-) program as a whole are, in general, irrelevant in that a program may be formally correct but useless, or incorrect in that it does not satisfy some stated specification, yet quite usable, even satisfactory. Formal techniques of representation and proof have a place in the universe of (P- and E-) programs but their role changes. It is the detailed behavior of the program under operational conditions that is of concern.

Section II.F in the paper contains another interesting assertion you can spend a long time thinking over: "it is always possible to continue the system partitioning process (of an E- or P- program) until all modules are implementable as S-programs." The resulting modules may, for the moment, be treated as complete and correct.

How programs evolve

Following initial construction, change is accommodated through a sequence of releases. Releases can be of varying granularity, including – 40 years ago remember – a pretty good description of continuous deployment:

The [term ‘sequence of releases’] is also appropriate when a concept of continuous release is followed, that is when each change is made, validated, and immediately installed in user instances of the system.

Sequences of releases take place within a generation of the system. At a larger scale we also have successive generations of system sequences.

Keeping the long-term picture of the system life-cycle in mind is essential. Managers (project teams?) tend to concentrate on successful completion of their current assignments, as measured by observable product attributes, quality, cost, timeliness, and so on. That is, they are incentivised to focus on the short-term to the detriment of the long-term.

Management strategy will inevitably be dominated by a desire to achieve maximum local payoff with visible short-term benefit. It will not often take into account long-term penalties, that cannot be precisely predicted and whose cost cannot be assessed.

What often seems to happen today in response to these forces is that developers within the team fight for recognition of the need to address technical debts and to think longer term, and smuggle this kind of work into the plan. Lehman had a different idea of where the problem should be addressed:

Top-level managerial pressure to apply life-cycle evaluation is therefore essential if a development and maintenance process is to be attained that continuously achieves, say, desired overall balance between the short- and long-term objectives of the organization. Neglect will inevitably result in a lifetime expenditure on the system that exceeds many times the assessed development cost on the basis of which the system or project was initially authorized.

There’s a great company-building lesson right there: managers will naturally tend to focus on short-term objectives and measurable outcomes; it’s the job of the executive team to think longer term and strategically and ensure the needed balance. When executives get sucked into short-term thinking as well, the company loses that balance. From a software perspective, and since software is central to so many businesses, that means we need systems and software literate executives.

Is this realistic? How can executives looking down on projects from 50,000 feet really gain an understanding of what is happening? Isn’t it only the developers that really know the truth? A systems and software literate executive can be effective by understanding the dynamics and laws of program evolution:

The resultant evolution of software appears to be driven and controlled by human decision, managerial edict, and programmer judgement. Yet as shown by extended studies, measures of its evolution display patterns, regularity and trends that suggest an underlying dynamics that may be modeled and used for planning, for process control, and for process improvement.

Individual decisions may appear localised and independent, but their aggregation, moderated by many feedback relationships, produces overall system responses that are regular and often normally distributed.

Informed strategy

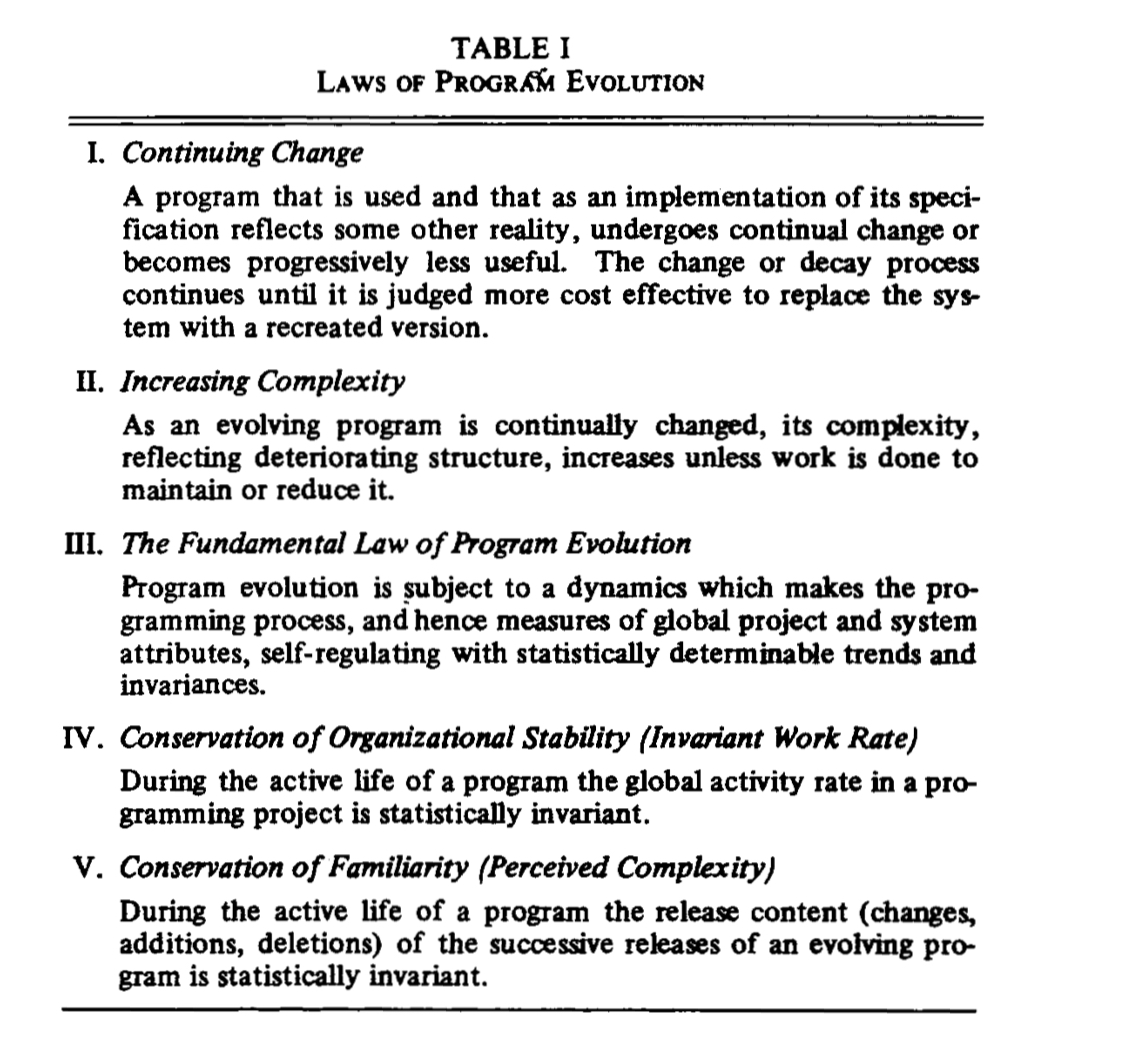

From observing these patterns play out across many projects, Lehman distills a set of five laws: change is continuous, complexity increases, program evolution follows statistically determinable trends and invariances, work rates are invariant, and the complexity in successive releases is statistically invariant.

The Law of Continuing Change says that (P- and E-) programs are never ‘done’, and we should expect constant change. Change is necessary even to stand still – a program that isn’t changing to keep up with changes in the real world is decaying.

The Law of Increasing Complexity is the second law of thermodynamics applied to software systems. Complexity increases and structure deteriorates under change, unless work is done to maintain or reduce it.

The Law of the Conservation of Organisational Stability says that the global activity rate on a programming project tends to be stable over time. This is a function of the organisation itself striving for stability and tending to avoid dramatic change and discontinuities in growth rates. Within this framework, resources do of course ramp up early in the lifetime of a product / project, but the amount of resource that can be usefully applied to a project has a limit. The pressure for success leads to a build-up of resources to the point where this limit is exceeded. Such a project has reached the stage of resource saturation and further changes have no visible effect on real overall output.

The Law of the Conservation of Familiarity says that the perceived complexity in each successive release tends to be stable. "The law arises from the nonlinear relationship between the magnitude of a system change and the intellectual effort and time required to absorb that change".

The consequences of these four laws, and…

… the observed fact that the number of decisions driving the process of evolution, the many feedback paths, the checks and balances of organizations, human interactions in the process, reactions to usage, the rigidity of program code, all combine to yield statistically regular behavior such as that observed and measured in the systems studied.

… lead us to the Fundamental Law of Program Evolution: program evolution is subject to a dynamics which make the process and system self-regulating with statistically determinable trends and invariances.

The final section of the paper (V. Applied Dynamics) is a case study in how knowledge of these laws can be used to guide longer-term planning processes. Lehman analyses the releases of System X (a general purpose batch operating system) by looking at the number of modules added and changed during each release, as a proxy for complexity. By looking at the average number of modules changed per day during a release, it can be seen that releases that pushed the rate higher tended to be ‘extremely’ troublesome and were followed by considerable clean-up. Likewise, releases that exceeded the normal incremental baseline of module growth (number of new modules) also tended to leave a poor quality base and needed to be followed by a clean-up release. Thus it becomes possible to look at the current rates of change and addition, and make an assessment of the likely underlying quality trends and what can be accomplished in the near future.

You could do a similar analysis today on a number of different levels: from microservices added and changed per unit time, to an analysis of git repositories to see the number of files added and changed per unit time.
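As a rough sketch of the git-based variant, the snippet below counts files added and modified per calendar month from the output of `git log --name-status`. The function names (`count_changes`, `repo_history`), the `@` date marker, and the choice of one month as the unit of time are all assumptions made for illustration; none of this comes from the paper, which counted modules per release rather than files per month.

```python
import subprocess
from collections import Counter, defaultdict

# Each commit is emitted as an "@YYYY-MM-DD" line followed by "<status>\t<path>" lines.
GIT_LOG_CMD = ["git", "log", "--name-status", "--date=short", "--pretty=format:@%ad"]


def count_changes(log_text: str) -> dict:
    """Count files added ('A') and modified ('M') per month in git log output."""
    per_month = defaultdict(Counter)
    month = None
    for line in log_text.splitlines():
        if line.startswith("@"):
            month = line[1:8]  # keep "YYYY-MM"
        elif line.strip() and month is not None:
            status = line.split("\t", 1)[0]
            if status == "A":
                per_month[month]["added"] += 1
            elif status == "M":
                per_month[month]["changed"] += 1
            # Deletions, renames and copies are ignored in this sketch.
    return dict(per_month)


def repo_history(repo_path: str = ".") -> dict:
    """Run git in repo_path and summarise its history month by month."""
    result = subprocess.run(
        GIT_LOG_CMD, cwd=repo_path, capture_output=True, text=True, check=True
    )
    return count_changes(result.stdout)
```

Calling `repo_history()` inside a repository gives a month-by-month picture; months where the 'added' or 'changed' counts jump well above the usual baseline would be the ones to examine for the kind of follow-up clean-up Lehman describes.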

The last word

Software planning can and should be based on process and system measures and models, obtained and maintained as a continuing process activity… Software planning must no longer be based solely on apparent business needs and market considerations; on management’s local perspective and intuition.

Lehman’s research was good in its day, but the data used was minimal (so small it would be ignored today), and Lehman stated enough ‘laws’ (and kept rewording them) that something he said is inevitably going to resonate today. More discussion here: http://shape-of-code.coding-guidelines.com/2019/06/16/lehman-laws-of-software-evolution/

Who, other than an academic, would say something as nonsensical as: “Software planning must no longer be based solely on apparent business needs and market considerations”?

Adrian, many thanks for the post (and your overall effort!). If someone is interested in Lehman’s laws, there is a nice survey by Lehman himself and Fernandez-Ramil in: Software Evolution and Feedback: Theory and Practice. Nazim H. Madhavji, Juan Fernandez-Ramil, Dewayne Perry (eds), Wiley, 2006, Chapter 27. It is interesting that both the set of laws and the wording of the laws had changed by 2006, albeit not really critically.

IMHO the entire set of laws can be summarized in a single sentence: well-maintained software systems operate under a feedback-based evolution mechanism, where the positive-feedback force with requests for new functionality and system growth has to be regularly balanced by a negative-feedback force of perfective maintenance, in order to control the system’s complexity and internal quality. But that is just my interpretation…

Thanks again for the very interesting postings.

Panos Vassiliadis

PS. I also thank you for the “Lindy Effect”. I had never heard of the term, but after looking it up, it seems that we have encountered the same phenomenon in the study of relational schema evolution (we call it “gravitation to rigidity”): if tables survive early removal, they are practically never removed from the schema (in my interpretation, due to the effect on the surrounding code that this might have)…

Adrian, yours is one of those blogs that remains as valuable to me as it has been all these years. Some of those blogs stand out immediately, at least from my PoV of the world of software. This is one of them. Maybe the data used by Lehman before framing the laws is not enough to satisfy a statistician, but the points he makes are immediately relatable to what we see every day. When he proposes that ‘From a software perspective, and since software is central to so many businesses, that means we need systems and software literate executives’, I can see very clearly what he is hinting at. In my experience, a very large number of such executives – I refer to them as chief stakeholders – are either dismissive of or unaware of the cost they incur because changes are managed poorly. One sure fallout of this is that a growing army of professional programmers are encouraged to turn a blind eye to the necessity of maintainability of software.