This is the final part of our tour through the papers from the Re-coding Black Mirror workshop exploring future technology scenarios and their social and ethical implications.

- Towards trust-based decentralized ad-hoc social networks – Koidl

- MMM: May I mine your mind? – Sempreboni and Viganò

(If you don’t have ACM Digital Library access, all of the papers in this workshop can be accessed either by following the links above directly from The Morning Paper blog site, or from the WWW 2018 proceedings page.)

Towards trust-based decentralized ad-hoc social networks

Koidl argues that we have a ‘crisis of trust’ in social media, which manifests in filter bubbles, fake news, and echo chambers.

- “Filter bubbles are the result of engagement-based content filtering. The underlying principle is to show the user content that relates to the content the user has previously engaged on. The result is a content stream that lacks diversification of topics and opinions.”

- “Echo chambers are the result of content recommendations that are based on interests of friends and peers. This results in a content feed that is strongly biased towards grouped opinion (e.g. Group Think).”

- “Fake news, and related expressions of the same, such as alternative facts, is related to gaming or misusing the underlying greedy algorithm. The result is an increase in the spread of mostly promoted and highly opinionated content.”
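To make the first two mechanisms concrete, here is a toy sketch, not taken from the paper: the catalogue, topic labels, and the function names `engagement_filter`, `peer_filter`, and `topic_diversity` are all hypothetical. It assumes items carry a single topic and are ranked purely by how often that topic appears in either the user's own engagement history (filter bubble) or their friends' histories (echo chamber).

```python
from collections import Counter

# Hypothetical toy catalogue of (item_id, topic) pairs; not from the paper.
CATALOGUE = [
    ("a1", "football"), ("a2", "football"), ("a3", "politics-left"),
    ("a4", "politics-right"), ("a5", "science"), ("a6", "cooking"),
]


def rank_by_topic_counts(topic_counts, catalogue, k):
    """Return up to k items whose topic has a positive count, highest count first."""
    # sorted() is stable even with reverse=True, so ties keep catalogue order.
    ranked = sorted(catalogue, key=lambda item: topic_counts[item[1]], reverse=True)
    return [item for item in ranked if topic_counts[item[1]] > 0][:k]


def engagement_filter(history, catalogue, k=3):
    """Filter-bubble style: favour topics the user has engaged with before."""
    return rank_by_topic_counts(Counter(t for _, t in history), catalogue, k)


def peer_filter(friend_histories, catalogue, k=3):
    """Echo-chamber style: favour topics popular among the user's friends."""
    counts = Counter(t for h in friend_histories for _, t in h)
    return rank_by_topic_counts(counts, catalogue, k)


def topic_diversity(items):
    """Number of distinct topics in a feed: a crude diversification measure."""
    return len({t for _, t in items})


if __name__ == "__main__":
    my_history = [("x1", "football"), ("x2", "football")]
    friends = [[("p1", "politics-left")], [("p2", "politics-left"), ("p3", "science")]]
    print(engagement_filter(my_history, CATALOGUE))  # only football items
    print(peer_filter(friends, CATALOGUE))
```

A user who has only ever engaged with football sees a feed with a `topic_diversity` of 1, even though the catalogue spans five topics: the lack of diversification described above falls straight out of the ranking rule.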

For the business models of most social media platforms, I think it helps a lot to realise that the objective quality of the content is immaterial. Quality content is useful only to the extent that it engages and retains users. The platforms aren’t selling content, they’re selling your attention. The value of such a business is tied to the number of attention minutes it can command. By packaging people into groups, you can further increase the value of those attention minutes by selling advertisers the attention of the people they most want to reach. Thus the fundamentals are (a) a way of getting you to spend as much time on the platform as possible – hence all the systems tuned for engagement and to drive your reward systems in such a way as to keep you coming back for more, (b) a way of understanding as much about you as possible so that your attention can be packaged up and sold more effectively – hence all of the tracking and profiling that we see, and (c) a means of auctioning or otherwise selling your attention to people who want to bid for it.

Given the powerful incentives of this economic machine, how can we place trust in what we are being shown?

…users are left completely in the dark about what behaviour or engagement has led to content being shown in their content stream. This handover of trust is a critical element for social media applications.

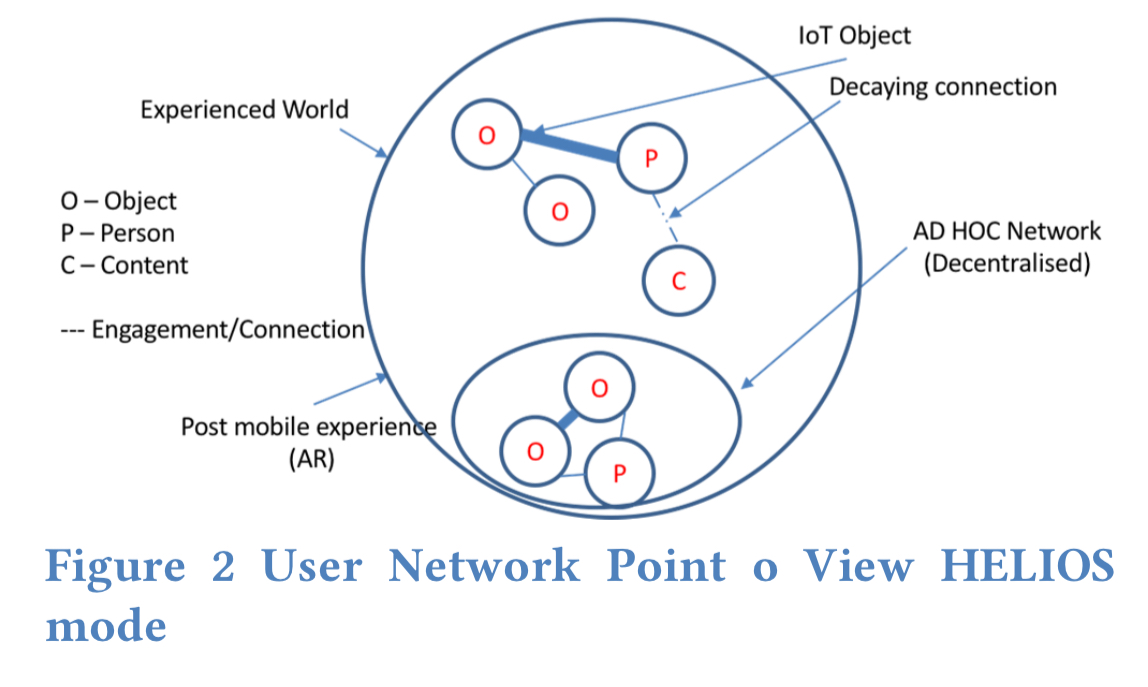

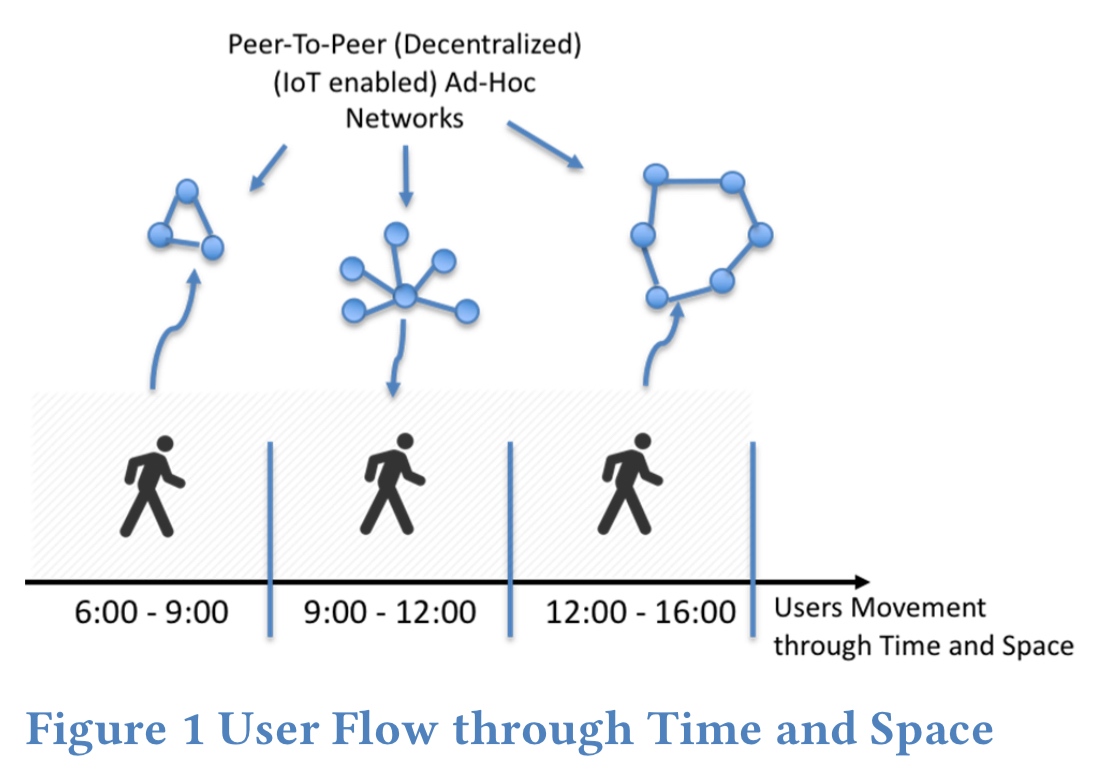

Koidl sets out the case for HELIOS, which aims to shift control and responsibility back to the individual user through the creation of proximity-based, ad-hoc, trust-anchored social networks. As a user moves through time and space, HELIOS is constantly and dynamically learning network groups of people and devices. It’s a decentralized peer-to-peer model that places the user at the centre.

The types of network that get established resemble the concept of Ego Networks, in which the individual is the centre of the social network. This concept fits in with the understanding that each [person] naturally creates social networks within their direct proximity.

The proximity aspect is designed to model our interactions in the real world based on assessing objects and people nearby. Proximity feeds into context (local network) detection. “Only by auto-creating and auto-configuring the networks is large scale adoption and usage possible.” Contexts are implicit and are learned from the behaviour of the user in that context. Repeated interaction strengthens links; if unused, links decay and eventually nodes are removed.
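The strengthen/decay/prune cycle can be sketched as a tiny ego network in which the user is the implicit centre and each contact (a person or a device) carries a link weight. This is an illustrative sketch under stated assumptions, not HELIOS's actual algorithm: the `EgoNetwork` class, the additive reinforcement, the multiplicative per-step decay, and the pruning threshold are all hypothetical choices.

```python
from dataclasses import dataclass, field


@dataclass
class EgoNetwork:
    """Toy ego network: the user is the implicit centre; links map contact -> strength.

    All parameters are hypothetical, chosen only to illustrate the idea.
    """
    reinforce: float = 1.0     # strength added per interaction
    decay: float = 0.5         # multiplicative decay for links unused in a step
    prune_below: float = 0.1   # contacts weaker than this are removed
    links: dict = field(default_factory=dict)

    def step(self, interactions):
        """Advance one time step given the contacts interacted with during it."""
        seen = set(interactions)
        # Repeated interaction strengthens links (once per interaction event).
        for contact in interactions:
            self.links[contact] = self.links.get(contact, 0.0) + self.reinforce
        # Links that were not used in this step decay.
        for contact in list(self.links):
            if contact not in seen:
                self.links[contact] *= self.decay
        # Sufficiently weak nodes drop out of the network entirely.
        self.links = {c: w for c, w in self.links.items() if w >= self.prune_below}


if __name__ == "__main__":
    net = EgoNetwork()
    net.step(["car", "alice"])   # both contacts enter the network
    for _ in range(4):
        net.step(["car"])        # only the car keeps being used
    print(net.links)             # alice has decayed away; the car remains
```

Multiplicative decay means an unused link halves each step under these settings, so a single past encounter disappears after a handful of idle steps while a frequently used contact keeps growing.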

The usage of a trust graph ensures that each social network follows a “Trust by Design” principle. The main approach is to establish computational trust by establishing a trust value towards each new element within a social network.

As an example, a car that is used many times is a car to which we can assign a higher degree of trust.
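One simple way to turn "used many times" into a number is a beta-reputation style estimate, where trust is the expected value of a Beta(positive + 1, negative + 1) distribution over interaction outcomes. The paper does not say HELIOS computes trust this way; this is a swapped-in, well-known technique, and the `TrustGraph` class and its `record`/`trust` methods are hypothetical names used only for illustration.

```python
class TrustGraph:
    """Toy trust graph: per-element counts of good and bad interactions.

    Trust uses a beta-reputation style estimate (an assumption, not HELIOS's method).
    """

    def __init__(self):
        self.outcomes = {}  # element -> [positive_count, negative_count]

    def record(self, element, ok=True):
        """Record one interaction with an element and whether it went well."""
        counts = self.outcomes.setdefault(element, [0, 0])
        counts[0 if ok else 1] += 1

    def trust(self, element):
        """Expected value of Beta(pos + 1, neg + 1); unknown elements score 0.5."""
        pos, neg = self.outcomes.get(element, (0, 0))
        return (pos + 1) / (pos + neg + 2)


if __name__ == "__main__":
    g = TrustGraph()
    for _ in range(9):
        g.record("car")            # the car has been used nine times without issue
    print(g.trust("car"))          # 10/11, well above the neutral prior
    print(g.trust("new_car"))      # 0.5: no history yet
```

A new element starts at a neutral 0.5, and each good interaction moves its trust value towards 1, which matches the intuition that a car used many times earns a higher degree of trust than one we have never used.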

MMM: May I mine your mind?

This is the last of the papers from the workshop, and the most extreme in its imagining of future scenarios. The seed of the idea comes from the rise of cryptojacking, in which websites use embedded JavaScript to mine cryptocurrency when a user visits their site. Cryptojacking can be done stealthily, but you can also imagine scenarios in which donating your computing power for crypto-mining is an explicit contract allowing you to participate in reward systems. (I guess that would be like a kind of casual and very dynamic mining pool…).

Several studies have been published on brain-computer interaction and there are start-ups that are developing interfaces to connect the human brain directly to a computer… Our brain will be connected. Always connected. Our brain will always be able to process data from the network and process it. This means that our brain will have a direct channel to the Internet, so why not imagine traffic going in both ways?

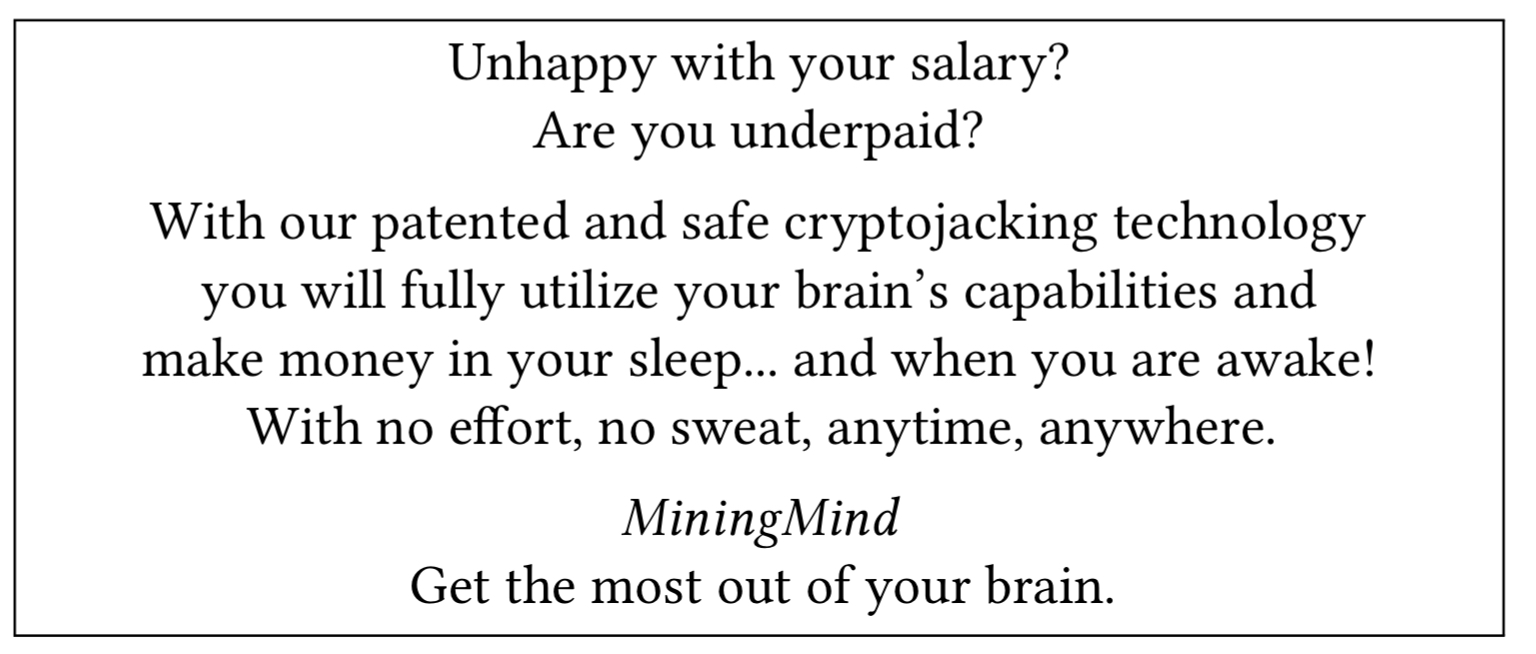

Now consider the following advert from the fictitious company MiningMind:

The premise for this plot is that soon we might have the technology to merge a reward system with a cryptojacking engine that uses the human brain to mine cryptocurrency… or to carry out some other mining activity. Part of our brain will be committed to cryptographic calculations, leaving the remaining part untouched for everyday operations, i.e., for our brain’s normal activity.

Leaving aside the thousand-and-one implementation and feasibility questions for the moment, if such a thing were possible, do you think there’s a vulnerable section of society that might be tempted by the offer???