This is part IV of our tour through the papers from the Re-coding Black Mirror workshop exploring future technology scenarios and their social and ethical implications.

- Is this the era of misinformation yet? Combining social bots and fake news to deceive the masses, Wang et al.

- Gnirut: the trouble with being born human in an autonomous world, Viganò and Sempreboni

(If you don’t have ACM Digital Library access, all of the papers in this workshop can be accessed either by following the links above directly from The Morning Paper blog site, or from the WWW 2018 proceedings page).

Is this the era of misinformation yet? Combining social bots and fake news to deceive the masses

In 2016, the world witnessed the storming of social media by social bots spreading fake news during the US Presidential elections… researchers collected Twitter data over four weeks preceding the final ballot to estimate the magnitude of this phenomenon. Their results showed that social bots were behind 15% of all accounts and produced roughly 19% of all tweets… What would happen if social media were to get so contaminated by fake news that trustworthy information hardly reaches us anymore?

Fake news and hoaxes have been around a long time, but the nature of social media pours fuel on the fire. Any user can create and relay content with little third-party filtering or fact-checking; many adults get their news on social media; and research has shown that people exposed to fake news tend to believe it.

We’re rapidly developing technologies to create very convincing fake news, especially within the attention span of a social media post. The written content could be created by humans or by natural language generation, which works better on short sections of text such as a social media post. Generated text could include emotional awareness, and could even be designed to replicate the style of a given individual, as we saw earlier this week when we looked at ‘Be Right Back.’

We can use speech generation from scratch to imitate a given person’s voice, and also voice transformation, which morphs the voice of a source speaker. In voice conversion, the voice of an actor is converted to sound like a given target.

We can generate realistic looking images and even entire fake people. And we can also fabricate video, for example: lip syncing from audio and video footage to make someone appear to be saying whatever we want; transposition in real-time of facial expressions from an actor onto faces present in videos; even deepfakes which use deep learning techniques to swap faces in videos (here’s a good SFW post on the technology).

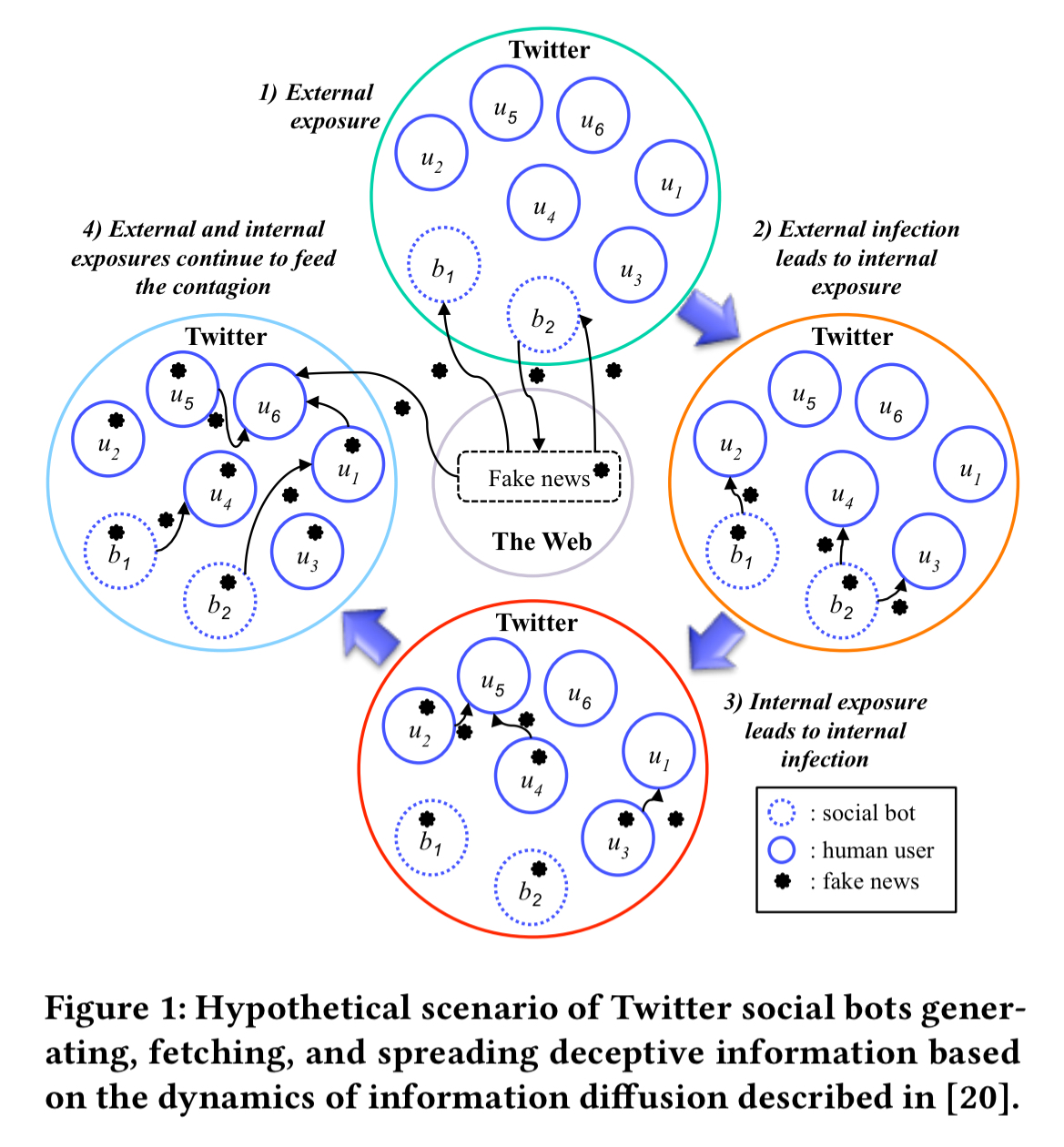

So we can manufacture content. Information diffusion analysis tells us how to get it to spread. Information diffusion typically follows these steps:

- The network is exposed to external information and gets externally infected whenever a node shares this information.

- This external infection causes a burst of internal exposure.

- Internal exposure leads to nodes relaying the information resulting in an internal infection.

- External and internal exposures continue to feed the contagion after the initial burst of infection.

(Or to put it more simply, someone posts a tweet, and other people see it and retweet it… ;) ).
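To make the steps above concrete, here is a minimal sketch of a cascade on a toy follower graph. It is not the model from the paper: the graph, the user names, the `simulate_cascade` function, and the rule that a fixed set of users always relays what they see are all assumptions made for illustration. External infection is modelled by the seed users, and continued external exposure after the initial burst is not modelled at all.

```python
from __future__ import annotations

from collections import deque


def simulate_cascade(
    followers: dict[str, list[str]],
    seeds: list[str],
    will_relay: set[str],
) -> tuple[set[str], set[str]]:
    """Toy cascade (illustrative only).

    followers:  maps a user to the users who see that user's posts.
    seeds:      users who share the external information (external infection).
    will_relay: hypothetical set of users who relay anything they are exposed to.
    Returns (infected, exposed): users who shared the item, and users who saw it.
    """
    infected = set(seeds)
    exposed: set[str] = set()
    queue = deque(seeds)
    while queue:
        user = queue.popleft()
        # Everyone following a sharer is internally exposed.
        for follower in followers.get(user, []):
            exposed.add(follower)
            # Internal infection: an exposed user relays the item once.
            if follower in will_relay and follower not in infected:
                infected.add(follower)
                queue.append(follower)
    return infected, exposed


# Hypothetical six-user network.
followers = {
    "alice": ["bob", "carol"],
    "bob": ["dave"],
    "carol": ["dave", "erin"],
    "dave": ["frank"],
    "erin": [],
    "frank": [],
}
will_relay = {"bob", "dave"}

infected, exposed = simulate_cascade(followers, ["alice"], will_relay)
print(sorted(infected))  # ['alice', 'bob', 'dave']
print(sorted(exposed))   # ['bob', 'carol', 'dave', 'frank']
```

In this toy run erin never sees the item, because carol was exposed but chose not to relay it. Real diffusion models would replace the fixed `will_relay` set with a probability of relaying.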

We can ultimately imagine combining some of these capabilities in an intelligent social bot that given some objective is able to manufacture and propagate content (some reinforcement learning might also come in handy here, using reach as a key part of the reward function). First the bot selects or creates a piece of external information, then it creates initial bursts of internal exposure by relaying it.

In the third step, the bot aims to reach as many people as possible with the content.

For example, social bots could implement opinion mining and sentiment analysis techniques to locate users whose views are in line with the deceptive information being scattered.

Manual fact checking will never be able to keep up with the volume of fake information that can be easily published. So we’ll need automated tools to detect it. ClaimBuster is an example of research in this direction.

Gnirut: the trouble with being born human in an autonomous world

A young child wants to be a pilot when she grows up. Her parents have to explain that this is impossible – only AIs are allowed to fly planes now, humans are too fallible.

Many security-sensitive and/or safety-sensitive tasks are already carried out by machines, for example in military operations, remote surgery, assisted transportation, and underwater work, often with multiple human operators per deployed robot (e.g., a 5-to-1 human-to-robot ratio). We’re moving towards a world in which one human operator can control a whole fleet of robots.

But why stop here? Solutions are currently being sought for full automation of systems by removing the human and transforming the operating space into a highly instrumented and controlled area for robots, as has been common in car manufacturing or even in places like Amazon warehouses…

Since many tasks don’t necessarily need a physical entity, we could also talk more generally about the human-to-AI ratio. What if we go further, and start considering a human supervised by one or more AIs? We can get a glimpse of this by watching young children’s interactions with Alexa. Rachel Botsman observed her three-year-old daughter interacting with Alexa over time:

“With some trepidation, I watched my daughter gaily hand her decisions over, ‘Alexa, what should I do today?’ Grace asked in her singsong voice on Day 3. It wasn’t long before she was trusting her with the big choices. ‘Alexa, what should I wear today? My pink or my sparkly dress?’”

Why stop at multiple AIs for one human? Why not one AI managing multiple humans?

This will happen gradually, with the change of differentials initially too small to be noticed, or without us really realising that the sequence of differentials over a longer period of time has caused a momentous change.

For example, ‘in a laudable attempt to safeguard human safety, we will also likely soon legislate that some jobs should be carried out only by intelligent machines.’ This will extend to areas in which human nature and subjectivity might slow down reaction time or adversely affect the end-result. (As a small example, think of the automatic braking systems when a car detects an oncoming collision).

An intelligent algorithm analysing decisions from the European Court of Human Rights found patterns enabling it to predict outcomes of cases with 79% accuracy. Such an algorithm can be used to assist human decision makers.

But why stop here? Why not hand over the actual legislative power to intelligent machines, which, after all, have the ability to take better and more consistent decisions? “They” might then legislate that the list of professions that should not be accessible to humans ought to be extended to include also all kinds of drivers and pilots, doctors, surgeons, accountants, lawyers, judges, lawmakers, soldiers,…

Coming back to our child who wants to be a pilot, will she have to disguise herself as an AI in order to be allowed to train and fly? Perhaps she will have to pass the Gnirut Test (think about it!) involving an AI judge and a human subject who attempts to appear artificial… The sad thing is, I find it much easier to imagine an AI one day passing the Turing test than a human ever passing the Gnirut test.