ViewMap: Sharing private in-vehicle dashcam videos Kim et al., NSDI’17

In the world of sensor-laden connected cars that we're rushing towards, ViewMap addresses an interesting question: how can we use the information collected by those cars for the common good, without significant invasion of privacy? It also raises deeper questions about the limits of state surveillance and where we think they should be. In short, ViewMap assumes cameras in cars (e.g., dashcams) that are continuously recording their environment. Anonymous fingerprints of short video segments are uploaded to a government-controlled service, which can then request video footage from the scene of a traffic accident or crime. The challenge in all of this is preserving privacy.

Motivating examples and privacy concerns

Dashcams are becoming increasingly popular in many parts of Asia and Europe (over 60% adoption in South Korea, for example). For countries such as South Korea, Russia, and China, the use of dashcams is an integral part of the driving experience. Other countries such as Austria and Switzerland strongly discourage dashcams due to visual privacy concerns, though.

Where they exist, dashcam videos are often useful in accident investigations. Vehicles directly involved in the accident may have their own videos, but nearby vehicles may have wider views offering different angles.

Authorities such as police want to exploit the potential of dashcams because their videos, if collected, can greatly assist in the accumulation of evidence, providing a complete picture of what happened in incidents.

How do you find those videos though, and persuade the drivers of the vehicles in question to share them?

At least in the case of a traffic accident, other drivers should be aware that they’ve witnessed an accident and come forward. For general crimes though, the situation may be different:

While CCTV cameras are installed in public places, there exist a countless number of blind spots. Dashcams are ideal complements to CCTV since pervasive deployment is possible (cars are everywhere). However, the difficulty here is that users are not often aware whether or not they have such video evidence.

It’s easy to see the privacy risk when providing personal location and time-sensitive information – this could easily be used to track individuals. In addition to potential privacy leakage for participating drivers, there is also a privacy risk for other drivers and individuals that are captured in the recorded video. This latter information is of course exactly what the authorities are interested in when investigating incidents.

ViewMap system design

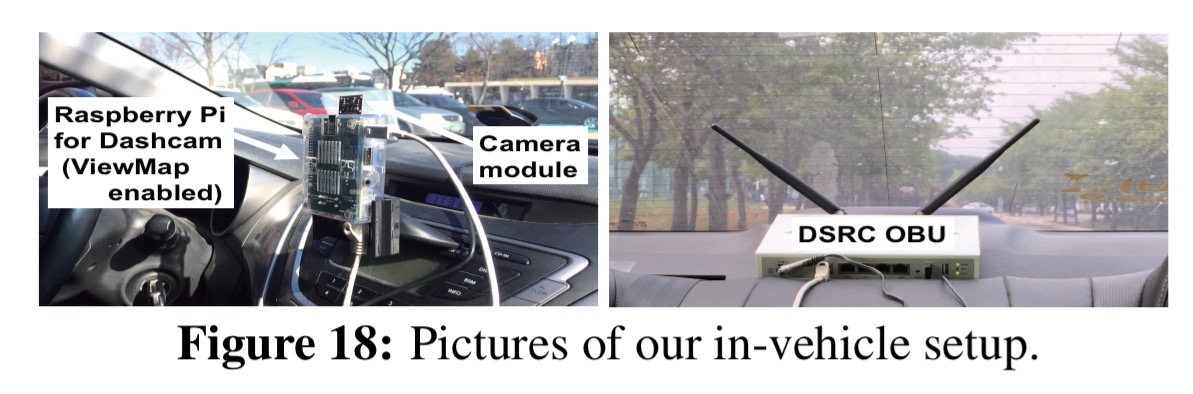

The ViewMap system places a Raspberry Pi-powered dashcam in each vehicle, connected via Bluetooth to a Galaxy S5 phone (for uploading information). A dedicated short-range communications (DSRC) radio is placed in the back of the car for vehicle-to-vehicle communication.

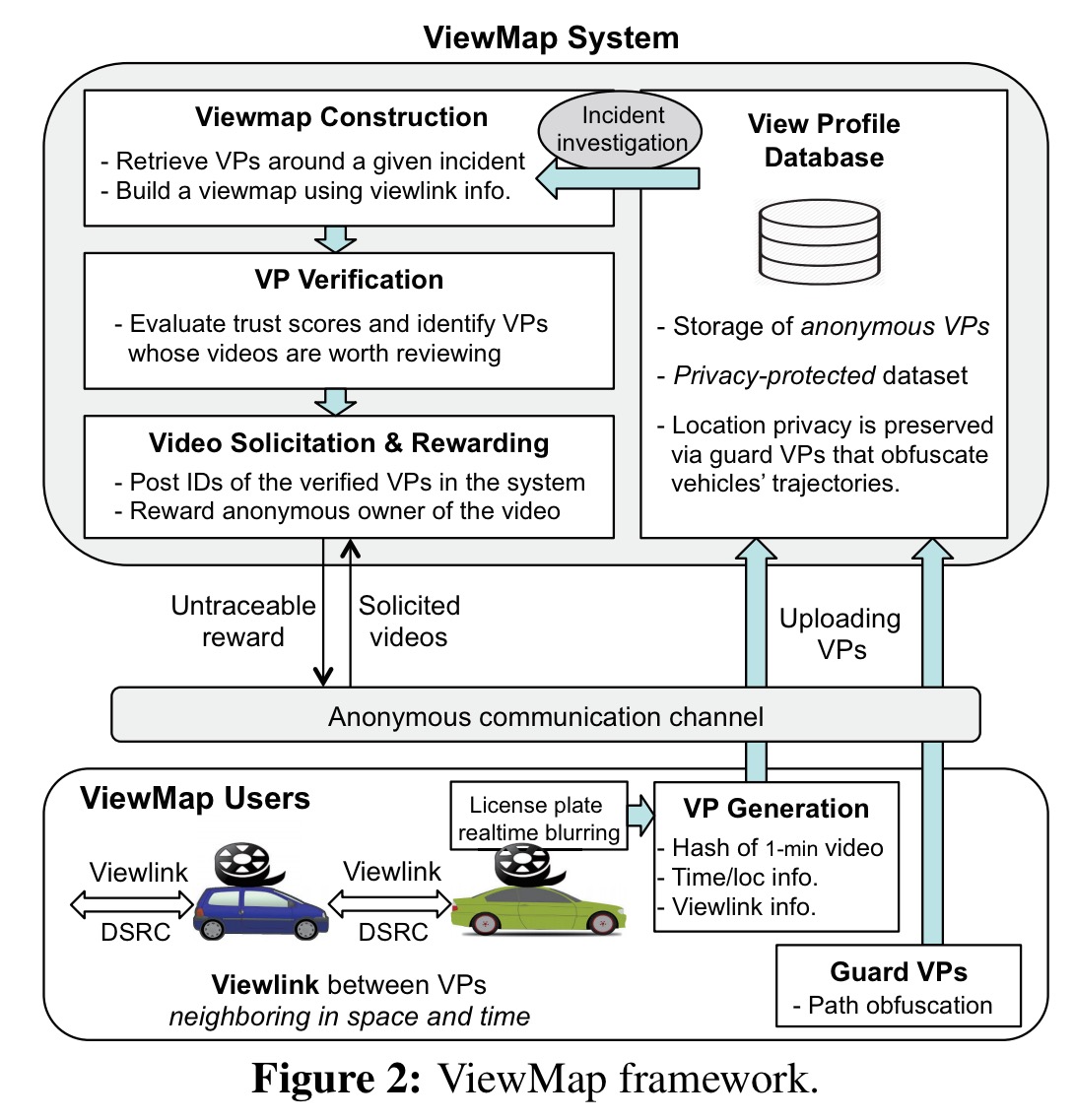

Number plates in the captured video are blurred in real time before being stored. The system does not currently do any face detection or blurring. Every minute of recorded video is represented by a data structure called a View Profile (VP) that summarises the time and location/trajectory, a video fingerprint, and a Bloom filter of the video fingerprints of video taken by neighbouring vehicles (exchanged over the DSRC links). Vehicles anonymously upload their View Profiles using a Tor client on the phone. The anonymised, self-contained VPs are stored in a central VP database by the authorities. The dashcam system itself keeps a rolling window (typically 2-3 weeks' worth of driving time) of the video footage associated with these VPs. Original video footage is not available after this time.

Video fingerprinting

Dashcams are time-synchronized using GPS and start a new video every minute, on the minute. Every second, each vehicle produces and broadcasts a View Digest (VD) of the video segment it is currently recording.

The view digest at time i is a concatenation of:

- Current time

- Current location

- The byte-size of the video so far for this view profile window

- The initial location for the view profile

- The current view profile identifier

- A hash of the view digest from time i-1 together with the last one second of video data
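The per-second hash chain can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: SHA-256 as the hash function, the hypothetical `ViewDigest` class, and the field serialisation in `encode()` are all choices made for the example. The key property is that each digest commits to its predecessor and to the latest second of video, so altering any earlier second changes every later digest.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ViewDigest:
    time: int                # GPS-synchronised timestamp (seconds)
    location: tuple          # current (x, y) position
    video_bytes: int         # bytes recorded so far in this VP window
    initial_location: tuple  # position at the start of the VP window
    vp_id: str               # identifier of the current view profile
    chain_hash: bytes        # H(previous digest || last second of video)

    def encode(self) -> bytes:
        # Hypothetical serialisation: the concatenation of all fields.
        header = (f"{self.time}|{self.location}|{self.video_bytes}|"
                  f"{self.initial_location}|{self.vp_id}|").encode()
        return header + self.chain_hash


def next_digest(prev, t, location, video_bytes, initial_location, vp_id, last_second):
    # The first digest of a window has no predecessor, so an empty prefix is hashed.
    prev_bytes = prev.encode() if prev is not None else b""
    h = hashlib.sha256(prev_bytes + last_second).digest()
    return ViewDigest(t, location, video_bytes, initial_location, vp_id, h)
```

Calling `next_digest` once per second with the newest one-second chunk yields the sixty digests for a one-minute window.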

When a vehicle receives a view digest broadcast it verifies that the time and location are within acceptable ranges before storing it. At most two view digests are kept per neighbour per view profile identifier – the first and the last received in the window.
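A receiver-side sketch of that filtering rule follows. The acceptance thresholds (`MAX_TIME_SKEW_S`, `MAX_DISTANCE_M`), the use of planar coordinates in metres, and the dict-shaped digests are hypothetical; the paper only says the values must fall within acceptable ranges. Only the first and most recent digest per neighbouring VP identifier are retained.

```python
import math

MAX_TIME_SKEW_S = 2     # hypothetical tolerance on the claimed timestamp
MAX_DISTANCE_M = 300.0  # hypothetical bound, roughly a DSRC radio range


class NeighbourDigestStore:
    def __init__(self):
        self.first = {}  # vp_id -> first accepted digest
        self.last = {}   # vp_id -> most recently accepted digest

    def accept(self, digest, now, my_xy):
        """digest: dict with 'time', 'xy' and 'vp_id' keys (an assumed shape)."""
        if abs(digest["time"] - now) > MAX_TIME_SKEW_S:
            return False  # timestamp implausible for a live broadcast
        if math.dist(digest["xy"], my_xy) > MAX_DISTANCE_M:
            return False  # claimed position too far away to have been heard
        vp = digest["vp_id"]
        self.first.setdefault(vp, digest)
        self.last[vp] = digest
        return True

    def kept(self):
        # At most two digests per neighbouring VP: the first and the last received.
        out = []
        for vp, first in self.first.items():
            out.append(first)
            if self.last[vp] is not first:
                out.append(self.last[vp])
        return out
```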

Once the current minute of recording is complete, each vehicle A generates its View Profile, VP, for that minute. The VP contains the sixty View Digests for the minute, plus a Bloom filter of the first and last View Digests received from each neighbouring vehicle as above.
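Assembling a VP could look like the sketch below, which includes a small Bloom filter built on `hashlib`. The filter size (`m_bits=2048`), the number of hash functions (`k=4`), and deriving the k hashes by salting SHA-256 with an index byte are all illustrative assumptions, as is representing digests as raw bytes.

```python
import hashlib


class BloomFilter:
    def __init__(self, m_bits=2048, k=4):
        self.m, self.k = m_bits, k
        self.bits = bytearray((m_bits + 7) // 8)

    def _positions(self, item: bytes):
        # Derive k bit positions by salting SHA-256 with the hash index.
        for i in range(self.k):
            d = hashlib.sha256(i.to_bytes(1, "big") + item).digest()
            yield int.from_bytes(d[:8], "big") % self.m

    def add(self, item: bytes):
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, item: bytes):
        # May report false positives, never false negatives.
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


def build_view_profile(own_digests, neighbour_digests):
    """own_digests: the 60 encoded VDs; neighbour_digests: first/last VDs heard per neighbour."""
    if len(own_digests) != 60:
        raise ValueError("expected one view digest per second of the minute")
    bloom = BloomFilter()
    for d in neighbour_digests:
        bloom.add(d)
    return {"digests": list(own_digests), "neighbours": bloom}
```

A Bloom filter keeps the VP compact and avoids listing neighbours' digests in the clear, while still letting the authorities later ask "did this VP hear that digest?".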

Protecting location privacy

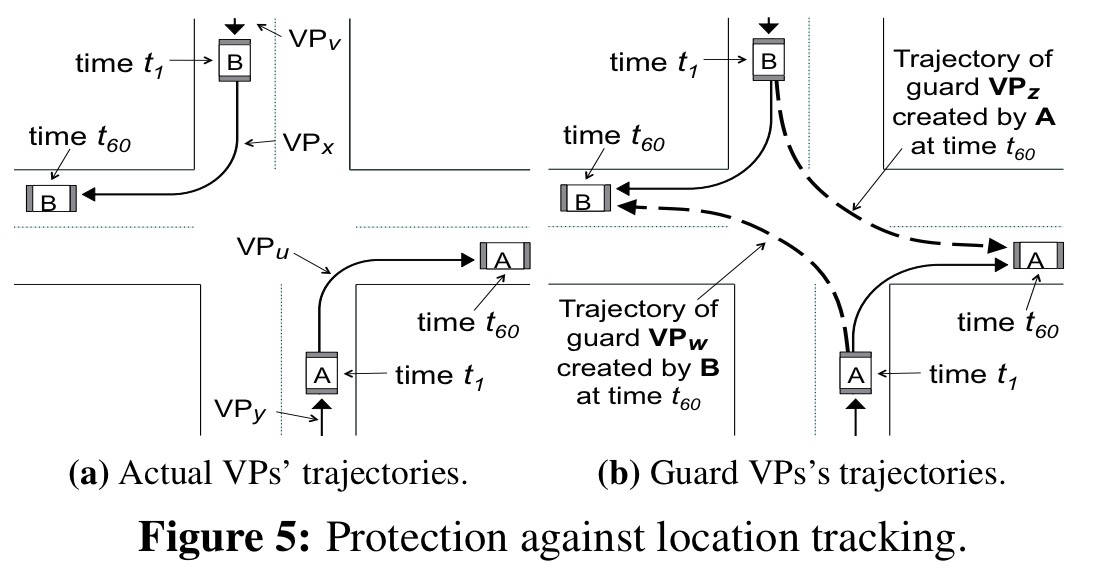

As described so far, it would be possible to follow a user’s path by linking a series of VPs adjacent in space and time. To protect against this, each vehicle also uploads a number of guard (fake) VPs.
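To make the threat concrete, here is a hypothetical greedy tracker that links VPs from consecutive minutes whenever one VP starts close to where the previous one ended. The VP fields (`minute`, `start`, `end`), planar coordinates, and the `eps_m` threshold are assumptions for illustration; it is not the tracker used in the paper's evaluation.

```python
import math


def link_trajectory(vps, start_id, eps_m=50.0):
    """Follow a vehicle by chaining VPs whose start is near the previous VP's end.

    vps: {vp_id: {"minute": int, "start": (x, y), "end": (x, y)}}
    """
    path = [start_id]
    cur = vps[start_id]
    while True:
        candidates = [
            (math.dist(cur["end"], v["start"]), vid)
            for vid, v in vps.items()
            if v["minute"] == cur["minute"] + 1
            and math.dist(cur["end"], v["start"]) <= eps_m
        ]
        if not candidates:
            return path
        _, vid = min(candidates)  # greedily pick the closest continuation
        path.append(vid)
        cur = vps[vid]
```

Without decoys, the closest continuation is usually the true one, which is exactly why raw anonymous VPs leak trajectories.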

Suppose that a vehicle V accepts digests from m other vehicles during its VP window. For a configurable percentage α of these (the study uses α = 0.5), V creates guard VPs with a trajectory starting at the other vehicle's initial location (as recorded in its view digest) and ending at V's own location. This can be seen visually in the following figure.

There are readily available online and offline tools that instantly return a driving route between two points on a road map. In this work, we use the Google Directions API for this purpose. In an effort to make guard VPs indistinguishable from actual VPs, we arrange their VDs variably spaced (within the predefined margin) along the given route.
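Placing a guard VP's digests along a route might be sketched as follows. The route is assumed to be already available as a polyline in planar coordinates (no Directions API call is made here), and the jitter scheme, where each step is an even step perturbed by up to `margin` of its length, is a hypothetical stand-in for the paper's "predefined margin".

```python
import math
import random


def interpolate(route, frac):
    # Point at fraction `frac` (0..1) of the polyline's total arc length.
    seg = [math.dist(a, b) for a, b in zip(route, route[1:])]
    target = frac * sum(seg)
    for (a, b), length in zip(zip(route, route[1:]), seg):
        if length > 0 and target <= length:
            t = target / length
            return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        target -= length
    return route[-1]


def guard_trajectory(route, n=60, margin=0.3, rng=random):
    """Return n positions along `route`, variably spaced but always moving forward.

    margin must be < 1 so every step stays positive.
    """
    steps = [1 + rng.uniform(-margin, margin) for _ in range(n - 1)]
    total = sum(steps)
    fracs = [0.0]
    for s in steps:
        fracs.append(fracs[-1] + s / total)
    fracs[-1] = 1.0  # pin the last digest to the route's end (V's own location)
    return [interpolate(route, f) for f in fracs]
```

Uneven spacing matters because real vehicles speed up, slow down and stop; perfectly uniform spacing would make guard VPs easy to spot.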

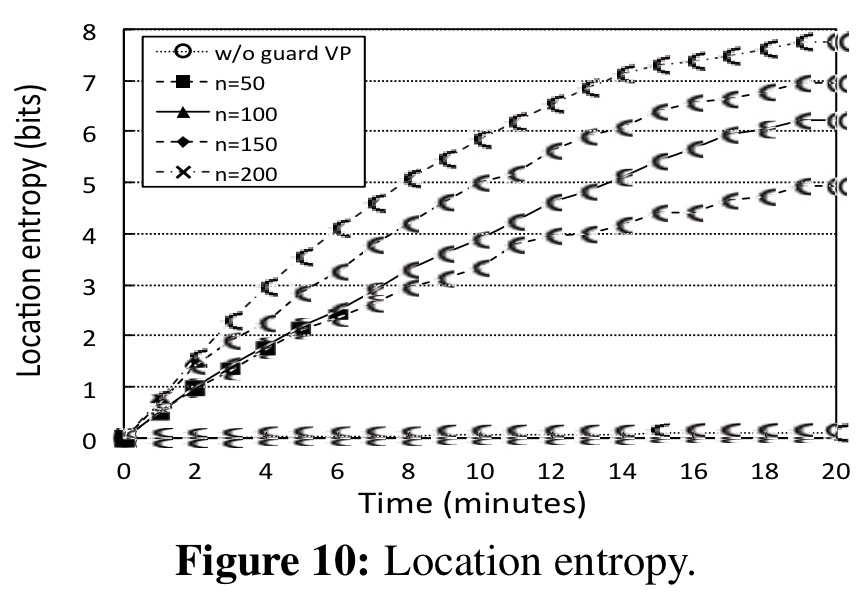

Notice, therefore, that privacy depends on sufficiently wide adoption of the system. Using a simulation with n = 50 to 200 vehicles travelling in a 4 km² area, the authors studied the uncertainty in a vehicle's position over time, measured as location entropy in bits (i.e., 8 bits of entropy corresponds to a 1 in 256 chance of guessing the true location when all candidate locations are equally likely). In the lowest density case of 50 vehicles, 3 bits of entropy (a 1 in 8 chance) is reached within 10 minutes of driving.
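Location entropy is the standard Shannon entropy of the tracker's belief over candidate locations. The sketch below assumes that belief is given as a list of non-negative weights (a hypothetical input format); the bits-to-odds reading in the text holds exactly only when the weights are uniform.

```python
import math


def location_entropy(weights):
    """Shannon entropy, in bits, of a belief over candidate locations."""
    total = sum(weights)
    return -sum((w / total) * math.log2(w / total) for w in weights if w > 0)


print(location_entropy([1] * 256))  # 8.0 bits: a 1-in-256 guess
print(location_entropy([1] * 8))    # 3.0 bits: a 1-in-8 guess
```

A skewed belief, for example one candidate far more likely than the rest, yields fewer bits than log2 of the number of candidates.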

(The markers seem to be messed up in the figure above and the one following, but it’s enough to get the idea…)

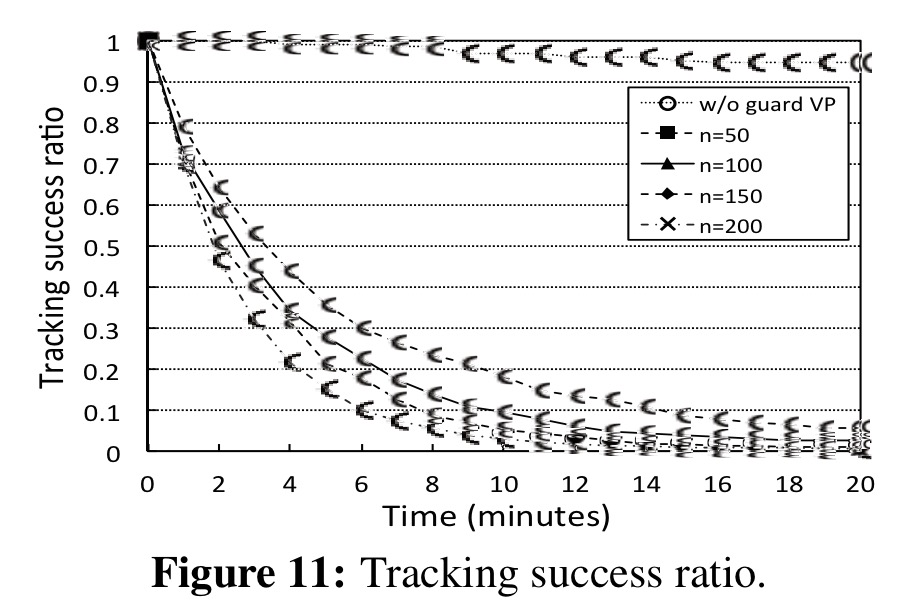

The figure above looks at the success of a tracker in determining the true location over time (as compared to a ground truth made available for the simulation).

We see that, in the sparse case of n = 50, the tracking success ratio decreases to 0.2 before ten minutes and further drops below 0.1 before fifteen minutes. In the case without guard VPs, on the other hand, the tracking success ratio still remains above 0.9 even after twenty minutes. This result shows: (i) the privacy risk from anonymous location data in its raw form; and (ii) the privacy protection via guard VPs in the VP database.

Collecting video evidence

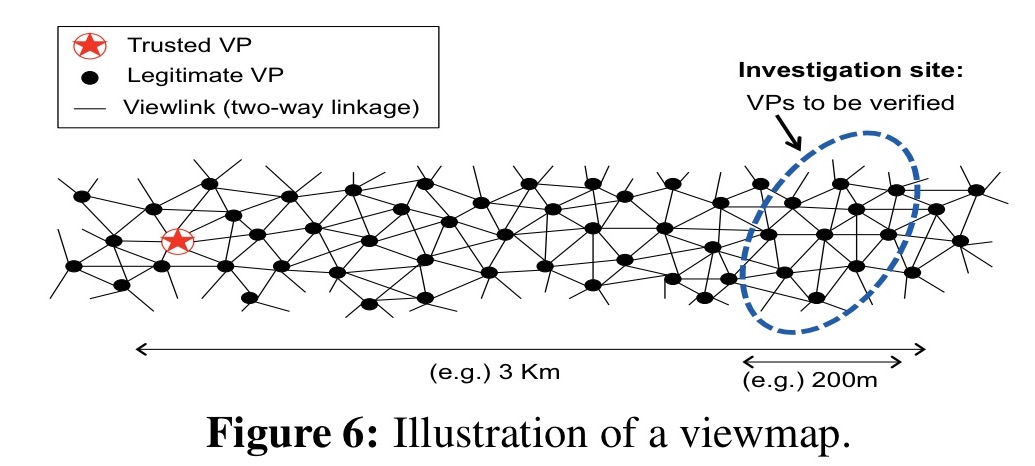

To gather video evidence of an incident, the system builds a series of view maps, one for each one-minute period of interest. The ViewMap system is designed to protect against attackers who game the system by uploading fake VPs for various purposes, so we need to establish trust in the view map. Trust is anchored in special trusted VPs, which come from authorities such as police cars.

Building a view map for a given location starts by finding the nearest trusted VP. We then have to build a chain of trust from that VP to the actual location of interest; each link in the chain is called a viewlink.

To start off with, we gather all VPs whose claimed locations at the time of interest are within the geographical area between the anchor trusted VP and the target location. For each member of this set, we find neighbour candidates with time-aligned locations within the radius of the DSRC radios. These candidates are filtered using Bloom filter membership queries to make sure they really did communicate in that time window. Edges (viewlinks) are then created to the filtered candidates. These edges signify that the two connected VPs are line-of-sight neighbours at some point in their timelines.
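The viewlink step can be sketched as a pairwise check: first, are the two VPs ever within radio range at the same second, and second, does each VP's neighbour filter contain a digest of the other? Several details are assumptions: the VP dict shape, the 300 m `DSRC_RADIUS_M`, requiring membership in both directions, and the use of plain Python sets in place of Bloom filters (a Bloom filter answers the same `in` query but may give false positives).

```python
import math
from itertools import combinations

DSRC_RADIUS_M = 300.0  # hypothetical radio range


def build_viewlinks(vps, radius=DSRC_RADIUS_M):
    """vps: {vp_id: {"track": {t: (x, y)}, "digests": [bytes], "heard": set-like}}"""
    edges = set()
    for a, b in combinations(sorted(vps), 2):
        A, B = vps[a], vps[b]
        # 1. Candidate: time-aligned positions within radio range at some second.
        common = A["track"].keys() & B["track"].keys()
        if not any(math.dist(A["track"][t], B["track"][t]) <= radius for t in common):
            continue
        # 2. Filter: membership queries confirm they actually exchanged digests.
        a_heard_b = any(d in A["heard"] for d in B["digests"])
        b_heard_a = any(d in B["heard"] for d in A["digests"])
        if a_heard_b and b_heard_a:
            edges.add((a, b))  # undirected viewlink, stored with sorted endpoints
    return edges
```

This sketch compares every pair for clarity; restricting the input to VPs inside the region between the trusted anchor and the target, as described above, keeps the quadratic cost manageable.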

Given the graph formed by the above process, we're now in a position to weed out fake VPs using a variation of the TrustRank algorithm. TrustRank is a biased form of PageRank, originally developed to separate reputable web pages from spam: trust flows out from a small seed set of known-good pages along links, much like a random surfer who keeps restarting from those seed pages.

To exploit the linkage structure of viewmaps, we adopt the TrustRank algorithm tailored for our viewmap. In our case, a trusted VP (as a trust seed) has an initial probability (called trust scores) of 1, and distributes its score to neighbor VPs divided equally among all adjacent ‘undirected’ edges (unlike out-bound links in the web model). Iterations of this process propagate the trust scores over all the VPs in a viewmap via its linkage structure.
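A compact sketch of trust propagation over an undirected viewmap follows. The damping factor (0.85), the restart to the trusted seeds, and the fixed iteration count come from the usual PageRank/TrustRank formulation and are assumptions here; the paper's tailored variant may differ in normalisation or stopping rule. The adjacency-dict input is also hypothetical.

```python
def trustrank(adj, seeds, damping=0.85, iters=50):
    """adj: {vp: set(neighbour vps)} for an undirected viewmap; seeds: trusted VPs."""
    nodes = list(adj)
    # Trusted VPs start with the whole trust mass (1.0 for a single seed).
    seed_mass = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    score = dict(seed_mass)
    for _ in range(iters):
        nxt = {n: (1 - damping) * seed_mass[n] for n in nodes}
        for n in nodes:
            if adj[n]:
                # Split this VP's score equally across all its undirected edges.
                share = damping * score[n] / len(adj[n])
                for m in adj[n]:
                    nxt[m] += share
        score = nxt
    return score
```

A cluster of fabricated VPs that never forms viewlinks with the trusted component receives no trust at all, and one attached by only a few edges receives little.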

Once legitimate VPs near a given incident have been identified, the system solicits the videos. This is done by posting ‘request for video’ announcements in a well-known place which users check. If a user has a matching video, they can upload it anonymously. (ViewMap includes an anonymous reward mechanism to encourage this behaviour – see section 5.3 in the paper for details). The uploaded video can be validated via cascading hash operations against the system-owned (anchor of trust) VP, and then reviewed by human investigators.
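Checking an uploaded video against the digests in its VP amounts to replaying the hash chain. This sketch assumes SHA-256, one bytes chunk per second of video, and a VP stored as a list of `(meta, chain_hash)` pairs, where `meta` stands for the serialised time, location, size and identifier fields; the serialisation in `encode()` is hypothetical.

```python
import hashlib


def encode(meta: bytes, chain_hash: bytes) -> bytes:
    # Hypothetical VD serialisation: metadata fields followed by the chain hash.
    return meta + chain_hash


def verify_video(vp_digests, chunks):
    """vp_digests: [(meta, chain_hash)] from the VP; chunks: one bytes object per second."""
    if len(vp_digests) != len(chunks):
        return False
    prev = b""  # the first digest in a window has no predecessor
    for (meta, chain_hash), chunk in zip(vp_digests, chunks):
        if hashlib.sha256(prev + chunk).digest() != chain_hash:
            return False  # this second of video does not match the committed digest
        prev = encode(meta, chain_hash)
    return True
```

Because each link depends on every earlier second, a single edited frame anywhere in the minute breaks the chain from that point onwards.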

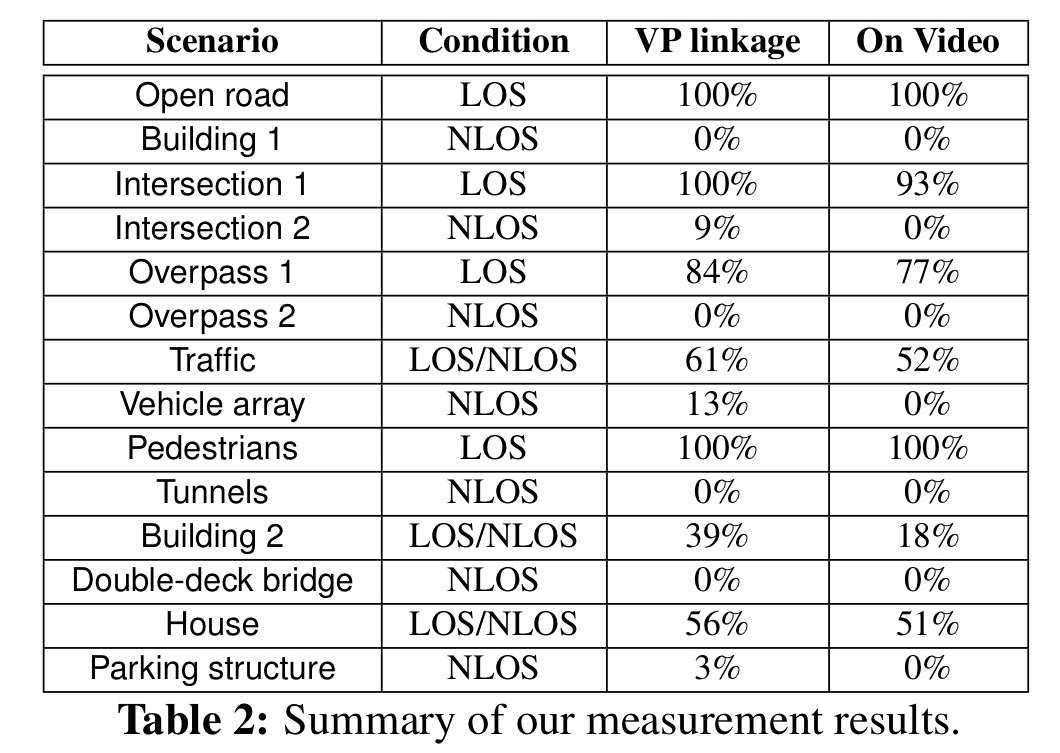

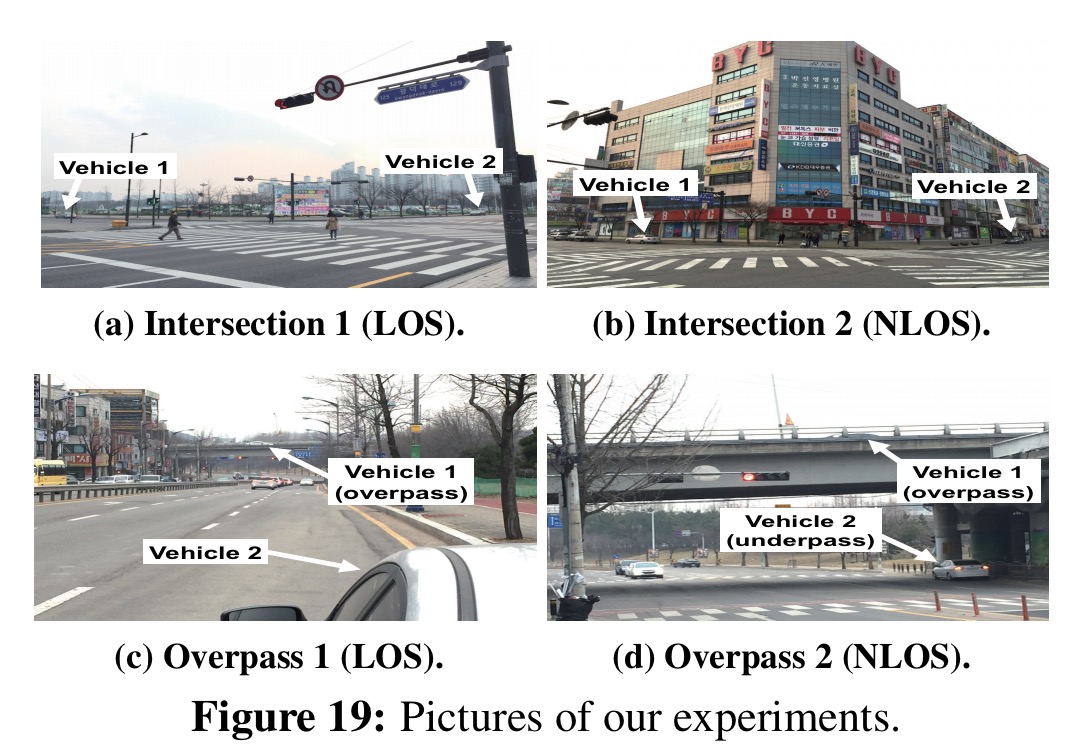

In a controlled experiment, various locations are selected where vehicles are either in line-of-sight (LOS), non-line-of-sight (NLOS), or mixed locations:

It can be seen from the results below that creating viewlink edges in the graph correlates strongly with line-of-sight situations, and with at least one of the two vehicles involved appearing in the other's video.