Strategic Dialogue Management via Deep Reinforcement Learning – Cuayahuitl et al. 2015

If computers learning to play Atari arcade games by themselves isn’t really your thing, perhaps you’re more into board games? How about a Deep Reinforcement Learning system that learns how to trade effectively in Settlers of Catan! Again, we’re not talking about a system that was programmed to play Settlers of Catan (as we’ll see shortly, the learned approach beats those), but a reinforcement agent that is told the rules of the game, given a reward function so that it can evaluate how well it is doing, and left to figure everything else out by itself. It learns to make trade offers, and how best to reply (accept, reject, counter-offer) to offers made by others. I realised what all these reinforcement learning systems remind me of: the way that starting to measure or report a metric, or the introduction of an incentive plan into an organisation can completely change the way that people behave – and you’d better be ready for them to do whatever it takes to do well as judged by that metric. Start measuring developer productivity by the number of bug reports they close out for example, and watch all the trivial typos in the docs get fixed, while the harder issues are avoided like the plague! Reinforcement learning systems are really good at playing this game of maximising the reward.

In the game Settlers of Catan players try to build settlements and cities connected by roads. To do this they need resources. The particular resources that are most beneficial to them at any point in time vary depending on the strategy the player has chosen and the situation on the board. Resources in the game are represented by cards. At each turn, a player can offer to trade resources with other players, and can make use of available resources to build roads, settlements, and cities.

> This game is highly strategic because players often face decisions about when to trade, what resources to request, and what resources to give away—which are influenced by what they need to build. A player can extend build-ups on locations connected to existing pieces, i.e.road, settlement or city, and all settlements and cities must be separated by at least 2 roads. The first player to win 10 victory points wins and all others lose.

An agent learning to play the game must learn both how to offer, and how to reply to offers. In reinforcement learning situations are mapped to actions by maximising a long-term reward signal. A reinforcement learning agent is characterised by:

- A possibly infinite set of states, S

- A possibly infinite set of actions, A

- A stochastic state transition function T :: S → A → S that specifies the next state given the current state and action

- A reward function R :: S → A → S → ℜ that specifies the reward given to the agent for choosing action a when the environment makes a transition from state s to state s’.

- A policy function π :: S → A that defines a mapping from states to actions.

The goal of the agent is to maximise its cumulative discounted reward, by convention represented as the function Q* :: S → A → ℜ, the sum of rewards at each time step, discounted by factor γ at each time step.

In Deep Reinforcement Learning (DRL) we induce a Q :: S → A → Θi → ℜ function that approximates Q* using a multilayer convolutional neural network, where Θiare the parameters (weights) of the neural network at iteration i.

DRL with large action sets can be very expensive. Therefore the authors learn from constrained action sets: a static action set Ar which contains responses to trading negotiations and does not change, and an action set Ao which contains only those trading negotiations valid at a given point in the game (e.g. you can’t offer to trade resources you don’t have).

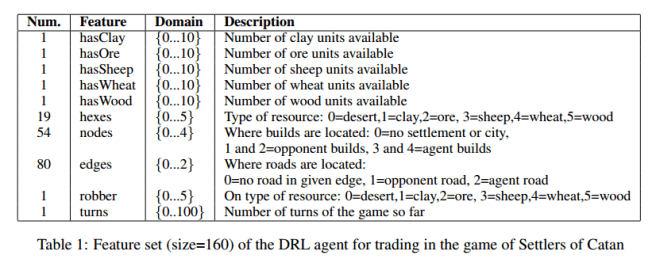

The state space S for the Settlers of Catan agent contains 160 non-binary features describing the game board and available features:

The action space A contains 70 actions for offering trading negotiations, and 3 actions (accept, reject, counteroffer) for replying to offers from opponents.

The state transition function T is simply based on the game itself, taking advantage of the JSettlers framework. The reward function R is also based on the game points provided by the JSettlers framework, with different weightings for the offer and reply-to-offer actions.

The model architecture consists of a fully-connected multilayer neural network with 160 nodes in the input layer, 50 nodes in the first hidden layer, 50 nodes in the second hidden layer, and 73 nodes (action set) in the output layer. The hidden layers use RELU (Rectified Linear Units) activation functions to normalise their weights. Finally, the learning parameters are as follows: experience replay size=30K, discount factor=0.7, minimum epsilon=0.05, learning rate=0.001, and batch size=64.

So of course the central question is, “does the agent learn to play a good game of Settlers of Catan?” Three DRL agents were trained, one against a random opponent (makes offers randomly, replies to offers randomly), one against a heuristic-based opponent using the heuristic bots included in the JSettlers framework, and one against an agent making trading offers based on supervised training using a random forest classifier, and replying to offers using heuristics.

The DRL agents acquire very competitive strategic behaviour in comparison to the other types of agents—they simply win substantially more than their opponents. While random behaviour is easy to beat with over 98% win-rate, the DRL agents achieve over 50% of win-rate against heuristic opponents and over 40% against supervised opponents. These results substantially outperform the heuristic and supervised agents which achieve less than 30% of win-rate.

The DRL agents also outperform the others in terms of average victory points, pieces built, and total trades. Interesting, the DRL agent trained using randomly behaving opponents are almost as good as those trained with stronger opponents. “This suggests that DRL agents for strategic interaction can also be trained without highly skilled opponents, presumably by tracking their rewards over time.”

The fact that the agent with random behaviour hardly wins any games suggests that sequential decision-making in this strategic game is far from trivial.

Let’s recap what has been achieved here:

Our learning agents are able to: (i) discover what trading negotiations to offer, (ii) discover when to accept, reject, or counteroffer; (iii) discover strategic behaviours based on constrained action sets—i.e. action selection from legal actions rather than from all of them; and (iv) learn highly competitive behaviour against different types of opponents. All of this is supported by a comprehensive evaluation of three DRL agents trained against three baselines (random, heuristic and supervised), which are analysed from a cross-evaluation perspective. Our experimental results report that all DRL agents substantially outperform all the baseline agents. Our results are evidence to argue that DRL is a promising framework for training the behaviour of complex strategic interactive agents.

So far in the last couple of weeks we’ve seen algorithms that learn how to play video games, learn how to develop algorithms, and learn how to undertake strategic negotiations. The machines are coming!

One thought on “Strategic Dialogue Management via Deep Reinforcement Learning”

Comments are closed.