A Hitchhiker’s guide to fast and efficient data reconstruction in erasure-coded data centers – Rashmi et al.

So far this week we’ve looked at a programming languages paper and a systems paper, so for today I thought it would be fun to look at an algorithm-based paper.

HDFS enables horizontally scalable low-cost storage for the masses, so it becomes feasible to collect and store much more data. Enter the Jevons paradox and soon you’ve got so much data that the storage costs become a real issue again. For redundancy and read efficiency, HDFS and the Google File System store three copies of all data by default.

Although disk storage seems inexpensive for small data sizes, this assumption is no longer true when storing multiple copies at the massive scales of operation of today’s data centers. As a result, several large-scale distributed storage systems now deploy

erasure codes, that provide higher reliability at significantly lower storage overheads, with the most popular choice being the family of Reed-Solomon (RS) codes.

We saw yesterday how Facebook’s f4 system uses Reed-Solomon coding and XOR to reduce the replication factor for warm blobs. Saving 30%+ in storage costs seems like a great idea, so why isn’t everyone doing it?

Although RS codes improve storage efficiency in data centers, they cause a significant increase in the disk and network traffic. This is due to the heavy download requirement during reconstruction of any missing or otherwise unavailable unit.

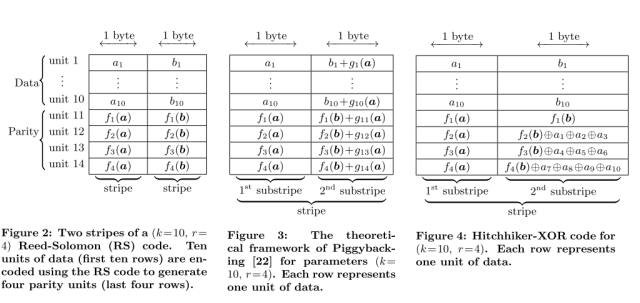

Reed-Solomon (RS) codes are associated with two parameters, often called n and k, but this paper uses k and r so we’ll go with that. You take k units of data (pick your unit size), and compute r parity units, giving you a total of (k+r) units, which is known as a stripe. Given any k of the data units in the stripe, it is possible to reconstruct any of the other units. Keeping a full replica would of course be a 2x storage requirement, but using an RS(10,4) code is only a 1.4x storage requirement. k=10,r=4 is the configuration used by Facebook. RS codes give a minimum storage overhead amongst all erasure codes that can tolerate any r failures in (k+r) units.

You can see from the above though, that to reconstruct a unit requires downloading of k of the remaining units (vs downloading only the missing unit when using simple replication). What does this overhead mean in a production system?

We have performed extensive measurements on Facebook’s data-warehouse cluster in production, which consists of multiple thousands of nodes, and which stores multiple Petabytes of RS-encoded data. These measurements reveal that a median of more than 50 machine-unavailability events occur per day, and a median of 95,500 blocks of RS-encoded data are recovered each day (the typical size of a block is 256 Megabytes (MB)). The reconstruction operations for RS-encoded data consume a large amount of disk and cross-rack bandwidth: a median of more than 180 Terabytes (TB) of data is transferred through the top-of-rack switches every day for this purpose.

This also means that read performance for degraded reads (those that require a reconstruction) suffers, and also that the recovery time of the system increases.

Based on conversations with teams from multiple enterprises that deploy RS codes in their storage systems, we gathered that this in-creased disk and network traffic and its impact on degraded reads and recovery is indeed a major concern, and is one of the bottlenecks to erasure coding becoming more pervasive in large-scale distributed storage systems.

Enter Hitchhiker…

First a couple of insights to help inform the algorithm design:

Firstly, most failures only affect a single block:

We collected measurements of the number of missing blocks per stripe across six months in the data-warehouse cluster in production at Facebook, which stores multiple

Petabytes of RS-coded data. We observed that among all the stripes that had at least one block to be reconstructed, 98.08% of them had exactly one such block missing, 1.87% had two blocks missing, and the number of stripes with three or more such blocks was 0.05%. The measurements thus reveal single block reconstructions to be by far the

most common scenario in the system at hand.

Secondly, encoding is done once, but repair may be done many times:

Hitchhiker trades off a higher encoding time for improvement along other dimensions. Encoding of raw data into erasure-coded data is a one time task, and is often executed as a background job. On the other hand, reconstruction operations are performed repeatedly, and degraded read requests must be served in real time.

So, if we could find an encoding that might be computationally a little more expensive than straight RS, but consumed no more storage, and gave us lower network and disk traffic during reconstruction that would be an interesting trade-off.

Hitchhiker introduces a new encoding and decoding technique layered on top of RS codes that reduces the amount of data download required during reconstruction, and uses a disk-layout technique that saves disk traffic as well.

Hitchhiker reduces both network and disk traffic during reconstruction by 25% to 45% without requiring any additional storage and maintaining the same level of fault-tolerance as RS-based systems.

(Encoding time is increased by 72%).

Here are the results when the team evaluated Hitchhiker at Facebook:

We evaluated Hitchhiker on two clusters in Facebook’s data centers, with the default HDFS parameters of (k = 10, r = 4). We first deployed Hitchhiker on a test cluster comprising 60 machines, and verified that the savings in the amount of download during reconstruction is as guaranteed by theory. We then evaluated various metrics of Hitchhiker on the data-warehouse cluster in production consisting of multiple thousands of machines, in the presence of ongoing real-time traffic and workloads. We observed that Hitchhiker reduces the time required for reading data during reconstruction by 32%, and reduces the computation time during reconstruction by 36%. … Based on our measurements of the amount of data transfer for reconstruction of RS-encoded data in the data-warehouse cluster at Facebook, employing Hitchhiker would save close to 62TB of disk and cross-rack traffic every day while retaining the same storage overhead, reliability, and system parameters.

So how does it work??

Recall that a block of k data units and r parity units is called a stripe. Hitchhiker puts two stripes together, using information from the first stripe when encoding the second one. Thus the encoding of the second stripe ‘piggybacks’ on the first one. A ‘stripe’ in Hitchhiker therefore contains 2(k+r) units, and we call the original RS stripes the substripes. The following figures taken from the paper illustrate how this pairing works (click on the image for a larger view).

The team created three versions of the piggy-backing functions, two based on XOR operations, and one that is slightly more expensive to compute but works in a broader range of circumstances. The trick in designing these functions of course is to be able to exploit their properties to give faster reconstruction with less data.

The proposed code has three versions, two of which re-quire only XOR operations in addition to encoding of the underlying RS code. The XOR-only feature of these erasure codes significantly reduces the computational complexity of decoding, making degraded reads and failure recovery faster. Hitchhiker’s erasure code optimizes only the reconstruction of data units; reconstruction of parity units is performed as in RS codes.

See the full paper for an analysis of how reconstruction and encoding work in detail.

The simple XOR approach is pretty good:

As compared to a (k = 10, r = 4) RS code, Hitchhiker-XOR saves 35% in the amount of data required during the reconstruction of the first six data units and 30% during the

reconstruction of the remaining four data units.

But the extended XOR+ approach is even better:

Hitchhiker-XOR+ further reduces the amount of data required for reconstruction as compared to Hitchhiker-XOR, and employs only additional XOR operations. It however requires the underlying RS code to possess a certain property. This property, which we term the all-XOR-parity property, requires at least one parity function of the RS code to be an XOR of all the data units.

The non-XOR code gives the same benefits as XOR+:

Hitchhiker-nonXOR presented here guarantees the same savings as Hitchhiker-XOR+ even when the underlying RS code does not possess the all-XOR-parity property, but at the cost of additional finite-field arithmetic.

Finite-field arithmetic: very informally in this context, arithmetic operations that give results of the same ‘shape’ (belong to the same field) as their arguments.

In addition to the functions themselves, there is the question of which substripes to couple together into a Hitchhiker stripe. The authors show that the natural strategy of coupling adjacent units from the input data actually leads to inefficient disk reads on recovery, and it is better to ‘hop’ a few units when coupling which enables contiguous reads of data on recovery. In the paper this is described as the ‘hop-and-couple’ strategy.

Reblogged this on sonofbluerobot.