The Log-Structured Merge-Tree (LSM Tree) – O’Neil et al. ’96.

Log-Structured Merge is an important technique used in many modern data stores (for example, BigTable, Cassandra, HBase, Riak, …). Suppose you have a hierarchy of storage options for data – for example, RAM, SSDs, and spinning disks – each with different price/performance characteristics. Furthermore, you have a large dataset experiencing frequent writes, and need to maintain an index on that dataset that can be queried at any time. How can you best exploit the tiers of storage available to you to keep that index up-to-date as efficiently as possible?

For example:

High-performance transaction system applications typically insert rows in a History table to provide an activity trace; at the same time the transaction system generates log records for purposes of system recovery. Both types of generated information can benefit from efficient indexing.

The LSM tree is a data structure designed to provide low-cost indexing for files experiencing a high rate of inserts (and deletes) over an extended period. A good modern example might be incoming streaming data being written to a table. LSM trees cascade data over time from smaller, higher performing (but more expensive) stores to larger less performant (and less expensive) stores.

The LSM-tree uses an algorithm that defers and batches index changes, cascading the changes from a memory-based component through one or more disk components in an efficient manner reminiscent of merge sort. … it is most useful in applications where index inserts are more common than finds that retrieve the entries. This seems to be a common property for History tables and log files, for example.

The only thing that is required for LSM trees to give an advantage is a high update rate compared to the retrieval rate. Although many examples involve time-series data, this is not a necessary feature. The high ratio of updates to retrievals makes the efficiency of maintaining the index paramount. At the same time, finds need to be frequent enough that an index of some kind must be maintained, because a sequential search through all the records would be out of the question.

An LSM-tree is composed of two or more tree-like component data structures. For example, a two component LSM-tree has a smaller component which is entirely memory resident, known as the C0 tree (or C0 component), and a larger component which is resident on disk, known as the C1 tree (or C1 component).

Note that although the C1 tree resides on disk, frequently referenced page nodes will still be held in memory buffers. Data is first inserted into C0, and from there it migrates to C1.

The index entry for [an insert] is inserted into the memory resident C0 tree, after which it will in time migrate out to the C1 tree on disk; any search for an index entry will look first in C0 and then in C1. There is a certain amount of latency (delay) before entries in the C0 tree migrate out to the disk resident C1 tree, implying a need for recovery of index entries that don’t get out to disk prior to a crash.

It’s very cheap to insert an entry into the memory-resident C0 tree, but the cost / capacity of memory compared to disk limits the size of what it makes sense to keep in memory.
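To make the insert and find paths concrete, here is a minimal sketch of a two-component structure. It is not the paper's implementation: the class name `TwoComponentLSM`, the use of a dict for C0, a pair of sorted lists standing in for the disk-resident C1, and the all-at-once `migrate_all` method are all assumptions made for illustration.

```python
from bisect import bisect_left


class TwoComponentLSM:
    """Toy two-component LSM-tree: C0 in memory, C1 standing in for disk."""

    def __init__(self):
        self.c0 = {}        # memory-resident component (assumption: a plain dict)
        self.c1_keys = []   # "disk" component: sorted keys...
        self.c1_vals = []   # ...and their values, kept in step with the keys

    def insert(self, key, value):
        # Inserts only ever touch C0, so they need no disk I/O.
        self.c0[key] = value

    def find(self, key):
        # Look in C0 first, then fall back to C1.
        if key in self.c0:
            return self.c0[key]
        i = bisect_left(self.c1_keys, key)
        if i < len(self.c1_keys) and self.c1_keys[i] == key:
            return self.c1_vals[i]
        return None

    def migrate_all(self):
        # Crude stand-in for migration: move every C0 entry into C1 at once.
        for key in sorted(self.c0):
            i = bisect_left(self.c1_keys, key)
            if i < len(self.c1_keys) and self.c1_keys[i] == key:
                self.c1_vals[i] = self.c0[key]   # newer value replaces older
            else:
                self.c1_keys.insert(i, key)
                self.c1_vals.insert(i, self.c0[key])
        self.c0.clear()
```

Because `find` consults C0 before C1, a key that has been re-inserted since the last migration returns its newer value even though an older one still sits in C1.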

At the heart of the LSM algorithm is a rolling merge process:

We need an efficient way to migrate entries out to the C1 tree that resides on the lower cost disk medium. To achieve this, whenever the C0 tree as a result of an insert reaches a threshold size near the maximum allotted, an ongoing rolling merge process serves to delete some contiguous segment of entries from the C0 tree and merge it into the C1 tree on disk.

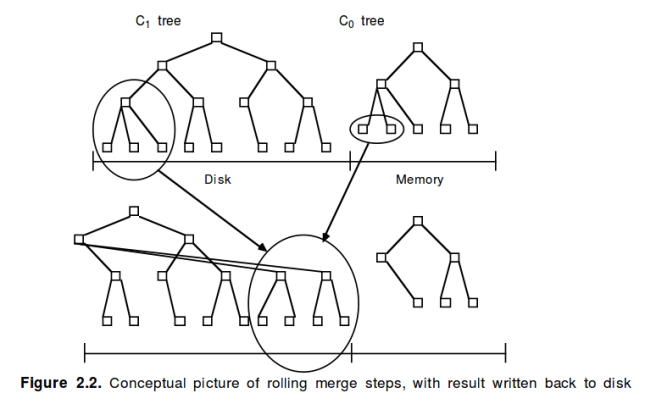

The rolling merge proceeds one block at a time from the downstream tree (C1 in our example). A block is read in and entries from the upstream tree (C0) are merged with it. This reduces the size of the C0 tree, and creates a new merged C1 block that is written out to disk.

The rolling merge acts in a series of merge steps. A read of a multi-page block containing leaf nodes of the C1 tree makes a range of entries in C1 buffer resident. Each merge step then reads a disk page sized leaf node of the C1 tree buffered in this block, merges entries from the leaf node with entries taken from the leaf level of the C0 tree, thus decreasing the size of C0, and creates a newly merged leaf node of the C1 tree.

Newly merged blocks are written to new disk positions, so that the old blocks will not be over-written and will be available for recovery in case of a crash…. We picture the rolling merge process in a two component LSM-tree as having a conceptual cursor which slowly circulates in quantized steps through equal key values of the C0 tree and C1 tree components, drawing indexing data out from the C0 tree to the C1 tree on disk.
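One way to picture a pass of that cursor is the sketch below. It is a simplification under stated assumptions: C0 is a dict, C1 is a list of sorted blocks of `(key, value)` pairs, and the function names `merge_step` and `rolling_merge_pass` are made up for this example. It runs a whole pass in one go and does not re-split merged blocks to a fixed size, both of which a real implementation would need to handle differently.

```python
def merge_step(c0, c1_block, upper):
    """Merge one C1 block with the C0 entries whose keys fall below `upper`.

    c0       -- dict for the memory-resident component (entries are removed)
    c1_block -- sorted list of (key, value) pairs read from "disk"
    upper    -- exclusive upper key bound for this step, or None for no bound
    Returns a new sorted block; the old block is left untouched.
    """
    merged = dict(c1_block)  # copy, so the old block survives for recovery
    due = [k for k in c0 if upper is None or k < upper]
    for key in due:
        merged[key] = c0.pop(key)  # the C0 entry is the newer one
    return sorted(merged.items())


def rolling_merge_pass(c0, c1_blocks):
    """One full pass of the conceptual cursor across C1, block by block."""
    blocks = c1_blocks or [[]]  # an empty C1 still gets one (empty) block
    new_blocks = []
    for i, block in enumerate(blocks):
        # Each step covers keys up to the first key of the next block.
        upper = blocks[i + 1][0][0] if i + 1 < len(blocks) else None
        new_blocks.append(merge_step(c0, block, upper))
    return new_blocks
```

For example, merging `c0 = {1: "a", 5: "new", 9: "z"}` into the blocks `[[(2, "x"), (5, "old")], [(7, "y")]]` empties C0 and returns new blocks in which key 5 carries the value `"new"`, while the original block lists are unchanged.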

The best efficiency gains over B-tree based systems (the prior art) come when reads and writes are performed in multi-page blocks, thus eliminating seek time.

Finds simply need to work through the trees in order:

When an exact-match find or range find requiring immediate response is performed through the LSM-tree index, first the C0 tree and then the C1 tree is searched for the value or values desired.

We can generalize from a two-component LSM tree to one with k components:

…we define a multi component LSM-tree as having components C0, C1, C2, . . ., CK-1 and CK, indexed tree structures of increasing size, where C0 is memory resident and all other components are disk resident. There are asynchronous rolling merge processes in train between all component pairs (Ci-1, Ci) that move entries out from the smaller to the larger component each time the smaller component, Ci-1, exceeds its threshold size.
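The cascade between adjacent components can be sketched as follows. This is only an illustration: the class name `MultiComponentLSM` and its `thresholds` parameter are hypothetical, every component is a dict, and an over-full component is emptied into its neighbour synchronously and in one go, whereas the paper describes asynchronous rolling merges.

```python
class MultiComponentLSM:
    """Toy multi-component LSM: components[0] is C0, the rest stand in for disk."""

    def __init__(self, thresholds):
        # thresholds[i] is the largest entry count Ci may hold before it
        # spills into Ci+1; the last component is unbounded (assumption).
        self.thresholds = thresholds
        self.components = [{} for _ in range(len(thresholds) + 1)]

    def insert(self, key, value):
        self.components[0][key] = value
        self._cascade()

    def _cascade(self):
        # Walk the pairs (Ci, Ci+1) from smallest to largest.
        for i, limit in enumerate(self.thresholds):
            smaller, larger = self.components[i], self.components[i + 1]
            if len(smaller) > limit:
                larger.update(smaller)  # newer entries overwrite older ones
                smaller.clear()

    def find(self, key):
        # Search from C0 outwards so the most recent value wins.
        for component in self.components:
            if key in component:
                return component[key]
        return None
```

With `MultiComponentLSM([2, 4])`, inserting keys 0 through 9 leaves one entry in C0, three in C1 and six in C2, and `find` still returns every value.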

Section 3 of the paper contains an analysis of the cost-performance of LSM trees. This is based on the cost per unit of storage in each component (i.e. memory in C0, and disk in C1), and the cost per unit of I/O in each component. The Five-Minute Rule, which we examined earlier in the series (together with its updates 10 and 20 years later), determines the inflection points where the dominant factors change.

This section also shows how to calculate the optimal threshold sizes for the various components in an LSM tree. The answer turns out to be to arrange things in a geometric progression whereby the ratio of the size of component(i+1) to the size of component(i) is a fixed value r for all adjacent components in the tree. The three variables ultimately affecting overall cost are therefore r, the size of component 0, and the number of components, k.
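The geometric progression is easy to tabulate. The helpers below are a small sketch, taking r as the factor by which each component is larger than the one before it; the function names and the `num_components` parameter (counting C0 as well as the disk components) are assumptions for illustration, and the sizes are in whatever unit you choose for C0.

```python
def component_sizes(s0, r, num_components):
    """Sizes of C0, C1, ... when each component is r times the previous one."""
    return [s0 * r**i for i in range(num_components)]


def ratio_for(s0, largest, num_components):
    """The fixed ratio r that grows size s0 into `largest` across the components."""
    steps = num_components - 1  # number of adjacent pairs
    return (largest / s0) ** (1 / steps)
```

For instance, `component_sizes(1, 10, 4)` gives `[1, 10, 100, 1000]`, and `ratio_for(1, 1000, 4)` comes out at approximately 10.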

…there are costs associated with increasing the number of components: a CPU cost to perform the additional rolling merges and a memory cost to buffer the nodes of those merges (which will actually swamp the memory cost of C0 in common cost regimes). In addition, indexed finds requiring immediate response will sometimes have to perform retrieval from all component trees. These considerations put a strong constraint on the appropriate number of components, and three components are probably the most that will be seen in practice.

There’s plenty more in the paper (which runs to 32 pages) that we haven’t had space to cover here, including an analysis of the concurrency and recovery implications of LSM-trees.
