The tradeoffs of large scale learning Bottou & Bousquet, NIPS’07

Welcome to another year of The Morning Paper. As usual we’ll be looking at a broad cross-section of computer science research (I have over 40 conferences/workshops on my list to keep an eye on as a start!). I’ve no idea yet what papers we’ll stumble across, but if previous years are anything to go by I’m sure there’ll be plenty of great material to keep interest levels high.

To start us off, today’s paper choice is “The tradeoffs of large scale learning,” which won the ‘test of time’ award at NeurIPS last month.

this seminal work investigated the interplay between data and computation in ML, showing that if one is limited by computing power but can make use of a large dataset, it is more efficient to perform a small amount of computation on many individual training examples rather than to perform extensive computation on a subset of the data. [Google AI blog: The NeurIPS 2018 Test of Time Award].

For a given time/computation budget, are we better off performing a computationally cheaper (e.g., approximate) computation over lots of data, or a more accurate computation over less data? In order to get a handle on this question, we need some model to help us think about how good a given solution is.

Evaluating learning systems

Consider an input domain and an output domain

. We have input-output pairs

, and some probability distribution

. We want to learn the conditional distribution

— in other words, to make a prediction of the output,

, given the input

. A loss function

measures the distance between our prediction and the true value. We will approximate the probability distribution with a function

such that

.

The ‘gold standard’ function is the one that minimises the expected risk (loss),

over all output classes. We don’t know the true distribution

though. Instead, we are given a sample of n independently drawn training examples. The best we can do is find

, the function that minimises the empirical risk

across these n data points.

When we build a given learning model, we constrain the set of prediction functions that can be learned to come from some family . Let

be the best possible function within that family in terms of minimising expected risk.

The optimal function may well not belong to the family

. So by constraining learned functions to come from

we introduce an approximation error

that measures the difference in expected risk between

and

.

We also have an estimation error that measures the effect of minimising the empirical risk based on training examples rather than the expected risk across the whole distribution (the expected value of

).

The estimation error is determined by the number of training examples and by the capacity of the family of functions. Large families of functions have smaller approximation errors but lead to higher estimation errors.

Now, given that we’re working from training samples, it should be clear that the the empirical risk is already an approximation of the expected risk

. Finding the

that minimises the empirical risk is often computationally expensive. Since we’re already approximating, maybe a cheaper to compute approximation to

would be good enough? If we allow the minimisation algorithm to return an approximate solution

that is within

of the true minimum, then we introduce a third error term

, measuring the gap between

and

.

So now we have a trade-off involving three variables and two constraints:

The constraints are the maximal number of available training examples and the maximal computation time. The variables are the size of the family of functions

, the optimization accuracy

, and the number of examples

.

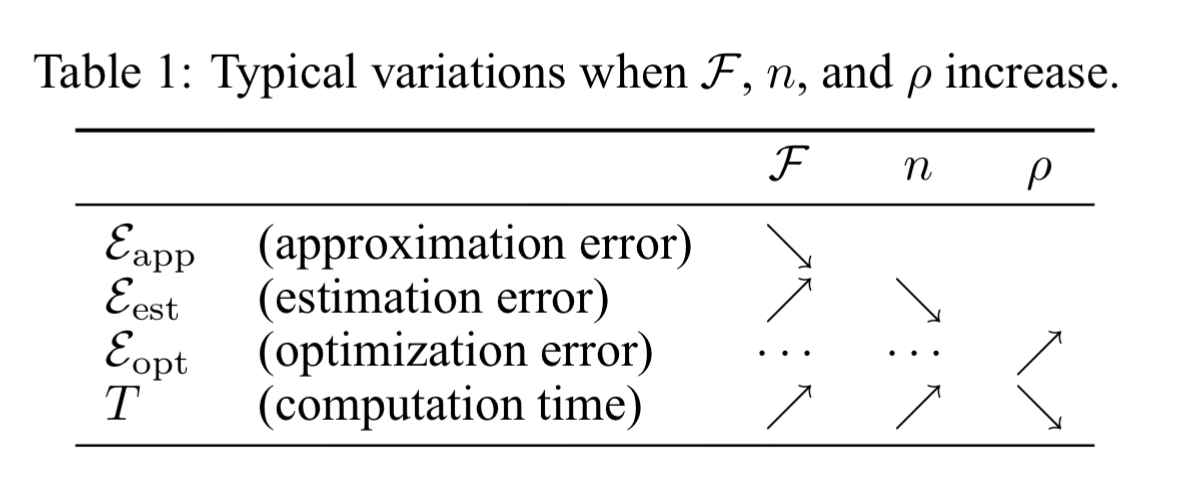

Typically the error components change with respect to the variables as shown in the following table:

When we are constrained by the number of available training examples (and not by computation time) we have a small-scale learning problem. When the constraint is the available computation time, we have a large-scale learning problem. Here approximate optimisation can possibly achieve better generalisation because more training examples can be processed during the allowed time.

Exploring the trade-offs in large-scale learning

Armed with this model of learning systems, we can now explore trade-offs in the case of large-scale learning. I.e., if we are compute bound, should we use a coarser-grained approximation over more data, or vice-versa? Let’s assume that we’re considering a fixed family of functions and hence ignore the

error component. Thus the paper focuses on the choice of optimisation algorithm.

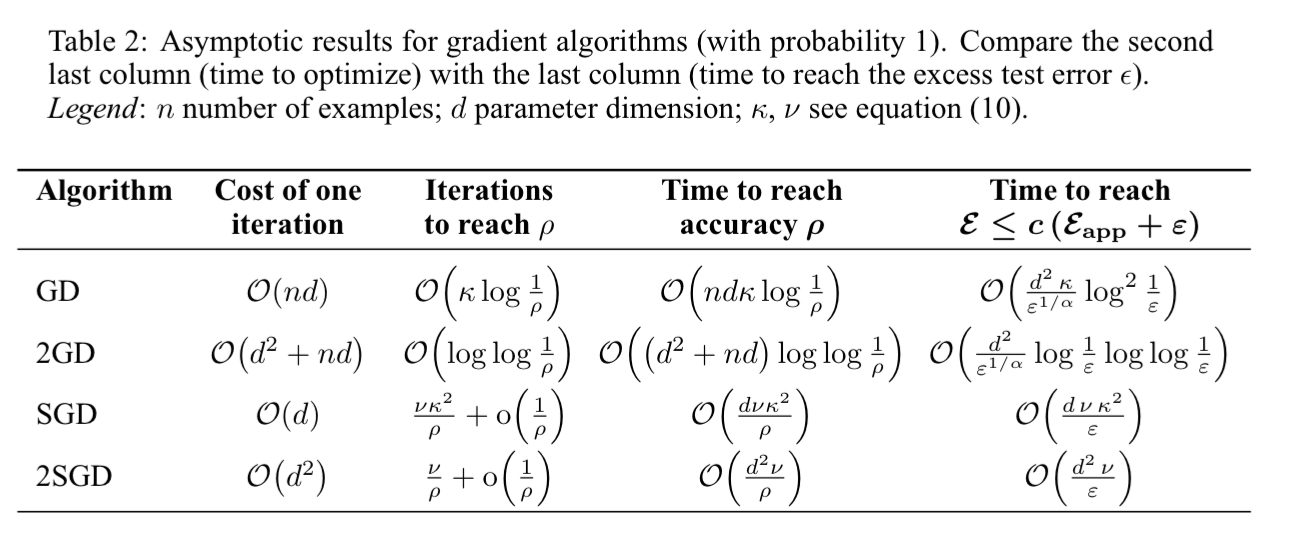

Four gradient descent algorithms are compared:

- Gradient descent, which has linear convergence and requires

iterations to reach accuracy

. Each iteration considers all training examples.

- Second order gradient descent (2GD), which requires

iterations to reach accuracy

. Each iteration considers all training examples.

- Stochastic gradient descent, in which a random training example is chosen at each iteration, and the parameters are updated on the basis of that example only. It reaches accuracy

after less than

iterations on average. Neither the initial value of the parameter vector nor the total number of examples appear in the dominant term of the bound.

- Second order stochastic gradient descent, which doesn’t change the influence of

on the convergence rate, but does improve the constants.

The results are summarised in the following table. The third column, time to reach a given accuracy, is simply the cost per iteration (column one) times the number of iterations needed (column two). The fourth column bounds the time needed to reduce the excess error below some chosen threshold.

Setting the fourth column expression to

and solving for

yields the best excess error achieved by each algorithm within the limited time

. This provides the asymptotic solution of the Estimation-Optimization tradeoff for large-scale problems satisfying our assumptions…

- SGD and 2SGD results do not depend on the estimation rate exponent,

. When the estimate rate is poor, there is less need to optimise accurately and so we can process more examples.

- Second order algorithms bring little asymptotic improvements, but do bring improvements in the constant terms which can matter in practice.

- Stochastic algorithms yield the best generalization performance despite being the worst optimization algorithms.

The perspective proposed by Léon and Olivier in their collaboration 10 years ago provided a significant boost to the development of the algorithm that is nowadays the workhorse of ML systems that benefit our lives daily, and we offer our sincere congratulations to both authors on this well-deserved award. [Google AI blog: The NeurIPS 2018 Test of Time Award].