Spectre attacks: exploiting speculative execution

Kocher et al., 2018

Yesterday we looked at Meltdown and some of the background on how modern CPUs speculatively execute instructions. Today it’s the turn of Spectre of course, which shares some of the same foundations but is a different attack, not mitigated by KAISER. On a technical front, Spectre is as fascinating as it is terrifying, introducing a whole new twist on ROP.

This paper describes practical attacks that combine methodology from side-channel attacks, fault attacks, and return-oriented programming that can read arbitrary memory from the victim’s process… These attacks represent a serious threat to actual systems, since vulnerable speculative execution capabilities are found in microprocessors from Intel, AMD, and ARM that are used in billions of devices.

Spectre is a step-up from the already very bad Meltdown:

- it also applies to AMD and ARM-based processors (so it works on mobile phones, tablets, and so on)

- it only assumes that speculatively executed instructions can read from memory the victim process could access normally (i.e., no page fault or exception is triggered)

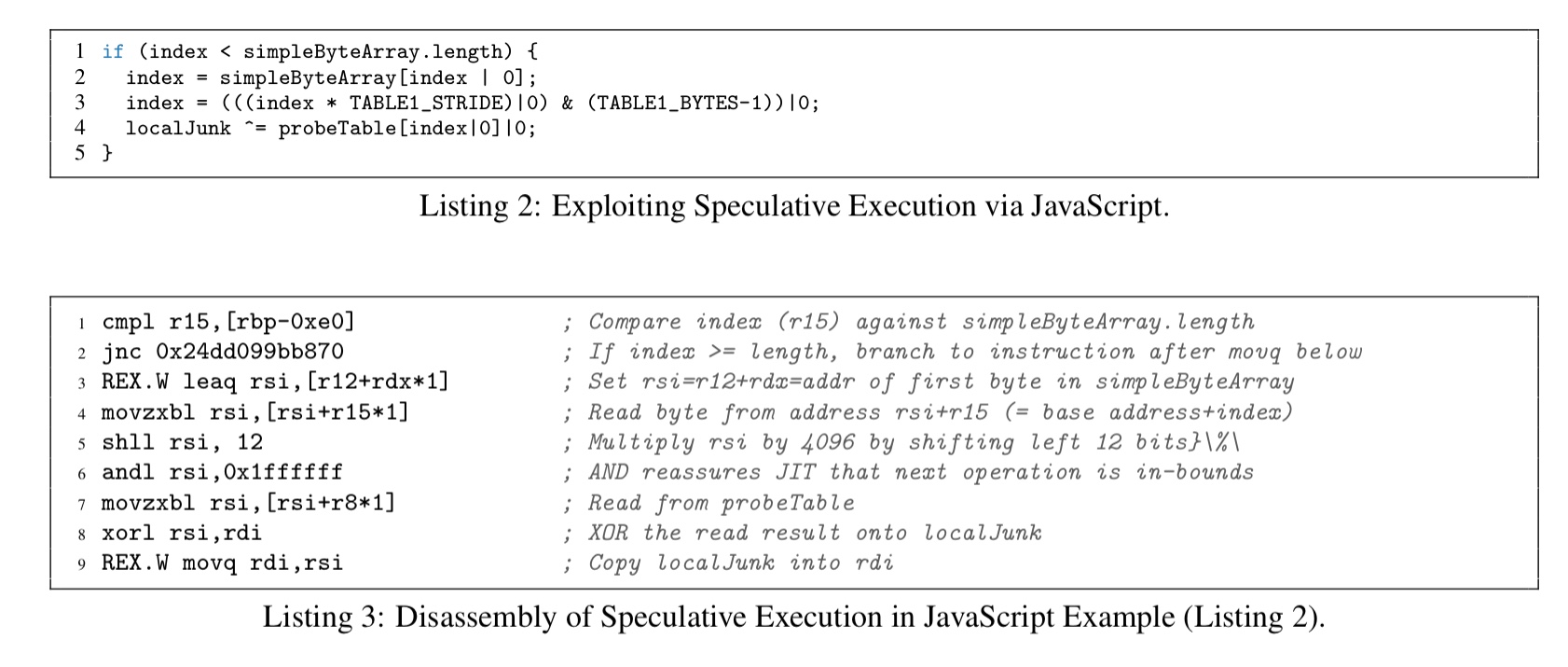

- the attack can be mounted in just a few lines of JavaScript – and a few lines of JavaScript can easily be delivered to your system from, for example, an ad displayed on a site that you would otherwise trust.

… in order to mount a Spectre attack, an attacker starts by locating a sequence of instructions within the process address space which when executed acts as a covert channel transmitter which leaks the victim’s memory or register contents. The attacker then tricks the CPU into speculatively and erroneously executing this instruction sequence…

Let’s play tennis

You’re watching an epic clay-court tennis match with long rallies from the baseline. Every time the ball is received deep in the forehand side of the court, our would-be-champion plays a strong cross-court forehand. Her opponent plays the ball deep into the forehand side of the court once more, and almost reflexively starts moving across the court to get into position for the expected return. But this time her speculative execution is in vain — the ball is played down the line at pace leaving her wrong-footed. By forcing a branch misprediction the now champion tennis player has won the point, game, set and match!

Spectre exploits speculative execution of instructions following a branch. Inside the CPU, a Branch Target Buffer (BTB) keeps a mapping from addresses of recently executed branch instructions to destination addresses. The BTB is used to predict future code addresses (“it’s going to be a cross-court forehand”) even before decoding the branch instructions. Speculative execution of the predicted branch improves performance.

Attack foundations

Consider this simple code fragment:

[code language="c"]
if (x < array1_size)
    y = array2[array1[x] * 256];
[/code]

On line 1 we test to see whether x is in bounds for array1, and if it is, we use the value of x as an index into array1 on line 2. We can execute this code fragment lots of times, always providing a value of x that is in bounds (the cross-court forehand). The branch predictor learns to predict that the condition will evaluate to true, causing speculative execution of line 2. Then, wham, we play the forehand down the line, passing a value of x which is out of bounds. Speculative execution of line 2 goes ahead anyway, before we figure out that actually the condition was false this time.

Suppose we want to know the value of sensitive memory at address $a$. We can set x = a - (base address of array1) and then the lookup of array1[x] on line 2 will resolve to the secret byte at $a$. If we ensure that array1_size and array2 are not present in the processor's cache (e.g., via a clflush) before executing this code, while the secret byte itself is cached, then we can leak the sensitive value.

When the code runs…

- The processor compares the malicious value of x against array1_size. This results in a cache miss.
- While waiting for array1_size to be fetched, speculative execution of line 2 occurs, reading the data, $k$, at the target address.
- We then compute k * 256 and use this as an index into array2. That's not in the cache either, so off we go to fetch it.
- Meanwhile, the value of array1_size arrives from DRAM, the processor realises that the speculative execution was erroneous in this case, and rewinds its register state. But crucially, the access of array2 affects the cache as we saw yesterday with Meltdown.
- The attacker now recovers the secret byte $k$, since accesses to array2[n*256] will be fast for the case when $n = k$, and slow otherwise.

You can even do this from JavaScript; the paper includes an example implementation.

Note that there's no access to the clflush instruction from JavaScript, but reading a series of addresses at 4096-byte intervals out of a large array does the same job. There's also a small challenge in getting the timer accuracy needed to detect the fast access in array2. The Web Workers feature of HTML5 provides the answer – creating a separate thread that repeatedly decrements a value in a shared memory location yields a timer that provides sufficient resolution.

For a C code example, see Appendix A in the paper. Unoptimised, this code can read about 10KB/second on an i7 Surface Pro 3.

The art of misdirection

With indirect branches we can do something even more special. An indirect branch is one that jumps to an address contained in a register, memory location, or on the stack.

If the determination of the destination address is delayed due to a cache miss and the branch predictor has been mistrained with malicious destinations, speculative execution may continue at a location chosen by the adversary.

This means we can make the processor speculatively execute instructions from any memory location of our choosing. So all we have to do, as in Return-Oriented Programming (ROP), is find some usable gadgets in the victim binary.

… exploitation is similar to return-oriented programming, except that correctly-written software is vulnerable, gadgets are limited in their duration, but need not terminate cleanly (since the CPU will eventually recognize the speculative error), and gadgets must exfiltrate data via side channels rather than explicitly.

In other words, this sets an even lower bar than regular ROP gadget chaining. Code executing in one hyper-thread of an x86 processor can mistrain the branch predictor for code running on the same CPU core in a different hyper-thread. Furthermore, the branch predictor appears to use only the low bits of the virtual address: "as a result, an adversary does not need to be able to even execute code at any of the memory addresses containing the victim's branch instruction."

A Windows example

A proof-of-concept program was written that generates a random key, then goes into an infinite loop calling Sleep(0), loading the first bytes of a file, calling Windows crypto functions to compute the SHA-1 hash of the key and file header, and printing out the hash whenever the header changes. When compiled with optimisation flags, the call to Sleep(0) is made with file data in registers ebx and edi (normal behaviour; nothing special was done to cause this).

In ntdll.dll we find the byte sequence 13 BC 13 BD 13 BE 13 12 17, which when executed corresponds to:

adc edi, dword ptr [ebx+edx+13BE13BDh]

adc dl, byte ptr [edi]

If we control ebx and edi (which we do, via the file header), then we can control which address will be read by this code fragment.

Now the first instruction of the Sleep function is jmp dword ptr ds:[76AE0078h], which we can target for branch mistraining. (The actual destination changes per reboot due to ASLR.)

- Simple pointer operations were used to locate the indirect jump at the entry point for Sleep, and the memory location holding the destination for the jump.
- The memory page containing the destination for the jump was made writable using copy-on-write, and modified to change the jump destination to the gadget address. Using the same method, a ret 4 instruction was written at the location of the gadget. (These changes are only visible to the attacker, not the victim.)
- A set of threads is launched to mistrain the branch predictor. This is a bit fiddly (see the details in section 5.2), but once an effective mimic jump sequence is found the attacker is able to read through the victim's address space.

Variations and mitigations

Spectre is not so much an individual attack as a whole new class of attacks: speculative execution may affect the state of other microarchitectural components, and virtually any observable effect of speculative execution can lead to leaks of sensitive information. Timing effects from memory bus contention, DRAM row address selection status, availability of virtual registers, ALU activity, and the state of the branch predictor itself need to be considered.

The conditional branch vulnerability can be mitigated if speculative execution can be halted on potentially-sensitive execution paths… Indirect branch poisoning is even more challenging to mitigate in software. It might be possible to disable hyperthreading and flush branch prediction state during context switches, although there does not appear to be any architecturally-defined method for doing this.

Anything we can do in software or microcode, though, should at best be seen as a stop-gap countermeasure pending further research.

The last word

The vulnerabilities in this paper, as well as many others, arise from a longstanding focus in the technology industry on maximizing performance. As a result, processors, compilers, device drivers, operating systems, and numerous other critical components have evolved compounding layers of complex optimizations that introduce security risks. As the costs of insecurity rise, these design choices need to be revisited, and in many cases alternate implementations optimized for security will be required.