Simplifying and Isolating Failure-Inducing Input – Zeller et al. 2002

The most common question I get asked about The Morning Paper is ‘how do you find time to read so many papers?’ The second most common question is ‘how do you find interesting papers?’ Sometimes it goes like this: Colin Scott writes a great blog post on Fuzzing Raft for Fun and Publication, which references his paper on ‘Minimizing Faulty Executions of Distributed Systems’ – which we’ll look at later this week. In that paper the authors make use of a technique called ‘Delta Debugging…’ sounds intriguing, and here we are!

Delta Debugging, it turns out, is pretty neat (how come it’s taken me 13 years since publication to hear about it?). It’s an automated binary-search-on-steroids approach for taking input that produces a failure (for example, an HTML page that crashes a browser, or a program that breaks a compiler) and stripping out all of the irrelevant parts to leave just what is essential to reproduce the bug.

Delta debugging was able to take a C program that crashed the gcc compiler, and turn it into a minimal test case that reproduces the bug, without understanding anything about the syntax or semantics of C. If you’ll forgive me for reproducing the original failing program in full, it makes the achievement much easier to comprehend:

    #define SIZE 20

    double mult(double z[], int n)
    {
        int i, j ;

        i = 0;
        for (j = 0; j < n; j++) {
            i = i + j + 1;
            z[i] = z[i] * (z[0] + 1.0);
        }

        return z[n];
    }

    void copy(double to[], double from[], int count)
    {
        int n = (count + 7) / 8;

        switch (count % 8) do {
            case 0: *to++ = *from++;
            case 7: *to++ = *from++;
            case 6: *to++ = *from++;
            case 5: *to++ = *from++;
            case 4: *to++ = *from++;
            case 3: *to++ = *from++;
            case 2: *to++ = *from++;
            case 1: *to++ = *from++;
        } while(--n > 0);

        return mult(to,2);
    }

    int main(int argc, char *argv[])
    {
        double x[SIZE], y[SIZE];
        double *px = x;

        while (px < x + SIZE)
            *px++ = (px - x) * (SIZE + 1.0);

        return copy(y,x,SIZE);
    }

In 34 seconds (on 2002 hardware!), the test case was automatically minimized to:

t(double z[],int n){int i,j;for(;;){i=i+j+1;z[i]=z[i]*z[0]+0;}return z[n];}

(Note that all the redundant spaces are gone too. There is no pretty formatting because, remember, the algorithm has no knowledge of C.)

An 894-line HTML document that crashed Mozilla on printing was reduced down to just “<SELECT>” using the same techniques.

So how does delta debugging work its magic? The paper explains two variants – one called ddmin that seeks to find a minimal input (simplification), and an extension called simply dd that seeks to isolate the one change that makes the difference between a passing and failing test case.

The Minimizing Delta Debugging Algorithm (ddmin)

Starting with an input that causes a failure (the failing test case), the goal is to find a 1-minimal approximation to the smallest possible test case that still reproduces the failure. The test case is 1-minimal if removing any single change would cause the failure to disappear.

Here ‘change’ refers to the unit of change for the input that the ddmin algorithm is working with. For the C program and HTML examples, the unit of change is a character. But ddmin could also be run with the unit of change being a line, or anything else that makes sense as an atomic unit given the nature of the input.
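To make the definition concrete, here is a minimal sketch of a 1-minimality check, with characters as the unit of change. The names `is_one_minimal` and `crashes`, and the toy failure condition, are assumptions made for illustration; they do not come from the paper.

```python
from typing import Callable, Sequence


def is_one_minimal(changes: Sequence, fails: Callable[[list], bool]) -> bool:
    """True if `changes` fails and removing any single unit stops the failure."""
    units = list(changes)
    if not fails(units):
        return False
    return all(not fails(units[:i] + units[i + 1:]) for i in range(len(units)))


# Hypothetical failure condition: the program "crashes" whenever
# the input contains both '<' and '>'.
def crashes(units: list) -> bool:
    return "<" in units and ">" in units


# Unit of change = one character.
print(is_one_minimal("<>", crashes))   # True: dropping either character stops the crash
print(is_one_minimal("<b>", crashes))  # False: 'b' can be dropped and it still crashes

# The same text split into coarser units (lines) gives far fewer changes to try.
text = "<html>\n<SELECT>\n</html>\n"
print(len(list(text)), len(text.splitlines(keepends=True)))  # 24 3
```

Note that 1-minimal is a local property: it says nothing about whether removing two or more units at once would still reproduce the failure.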

If the failing test case only contains 1 unit of change, then it is minimal by definition. Otherwise we can begin with a binary search…

- Partition the failing input (sequence of changes) into two subsets of similar size; let’s call them Δ1 and Δ2 (reducing with n=2 subsets)

- Test Δ1 and Δ2

- If Δ1 reproduces the failure, we can reduce to Δ1 and continue the search in this subset. We can do likewise with Δ2 if that reproduces the failure.

But what if neither Δ1 nor Δ2 reproduces the failure? Perhaps both tests pass, or perhaps they result in input that is not well-formed from the perspective of the program under test and cause the test to be ‘unresolved.’ We have two possible strategies open to us:

- We can test larger subsets of the failing input – this will increase the chances that the test fails, but gives us a slower progression to the 1-minimal solution.

- We can test smaller subsets of the failing input – this has a lower chance of producing a failing test, but gives us faster progression if it does.

The ddmin algorithm cleverly combines both of these and proceeds as follows:

- Partition the failing input into a larger number of subsets (twice as many as on the previous attempt). Test each small subset Δi, as well as its (large) complement: failing input – Δi. From testing each Δi and its complement we get four possible outcomes:

- If the test of any Δi reproduces the failure, then we can reduce to this subset and continue with this as our new input (reducing with n=2 subsets).

- If the test of any Δi complement reproduces the failure, then continue from this complement and reduce it with n-1 subsets.

- If no test reproduces the failure, and any subset contained more than one change, then try again with finer-grained subsets. Step up to 2n if possible, and if 2n > the number of changes in the input, then just divide into subsets of one change each.

- If no test reproduces the failure, and all subsets contain only one change, we are done – we have a 1-minimal test input.
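The steps above can be sketched in Python roughly as follows. This is one reading of the rules as described here, not the paper's own code: the names `split`, `ddmin`, and `oracle`, the three outcome constants, and the toy failure condition are all assumptions, and the sketch does no caching of repeated tests.

```python
from typing import Callable, List, Sequence

PASS, FAIL, UNRESOLVED = "pass", "fail", "unresolved"


def split(items: list, n: int) -> List[list]:
    """Split items into n contiguous, non-empty chunks of near-equal size (n <= len)."""
    chunks, start = [], 0
    for i in range(n):
        end = start + (len(items) - start) // (n - i)
        chunks.append(items[start:end])
        start = end
    return chunks


def ddmin(failing: Sequence, test: Callable[[list], str]) -> list:
    """Shrink `failing` to a 1-minimal input for which `test` still returns FAIL."""
    current = list(failing)
    assert test(current) == FAIL, "the starting input must reproduce the failure"
    n = 2
    while len(current) >= 2:
        subsets = split(current, n)
        reduced = False
        # Rule 1: some small subset alone reproduces the failure.
        for subset in subsets:
            if test(subset) == FAIL:
                current, n, reduced = subset, 2, True
                break
        # Rule 2: some complement (input minus one subset) reproduces it.
        if not reduced:
            for i in range(len(subsets)):
                complement = [x for j, s in enumerate(subsets) if j != i for x in s]
                if test(complement) == FAIL:
                    current, n, reduced = complement, max(n - 1, 2), True
                    break
        if not reduced:
            if n >= len(current):
                break  # Rule 4: single-change subsets and nothing fails, so done.
            n = min(2 * n, len(current))  # Rule 3: finer-grained subsets.
    return current


# Hypothetical oracle: "crash" when the text contains <SELECT>;
# treat unbalanced angle brackets as malformed input (unresolved).
def oracle(chars: list) -> str:
    text = "".join(chars)
    if "<SELECT>" in text:
        return FAIL
    if text.count("<") != text.count(">"):
        return UNRESOLVED
    return PASS


minimal = ddmin("<html><body><SELECT></body></html>", oracle)
print("".join(minimal))  # <SELECT>
```

Only a FAIL outcome lets the sketch shrink the input; PASS and UNRESOLVED are treated alike, which is why malformed intermediate inputs slow the search down without breaking it.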

ddmin guarantees to eventually return a 1-minimal test case. If there are c changes in the original failing test case then in the worst case ddmin takes c² + 3c tests (it will most likely take far fewer than this, due to the divide-and-conquer nature of the algorithm). If there is only one failure-inducing change somewhere in the input, and all test cases that include it reproduce the failure, then the number of tests needed to find that change will be ≤ 2 log₂ c.
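To get a feel for the gap between those two bounds, here is a small arithmetic sketch. It assumes the worst-case count is c² + 3c and the best-case bound is 2·log₂(c), as stated above; the function names are made up for illustration.

```python
import math


def worst_case_tests(c: int) -> int:
    """Worst-case number of ddmin tests for c changes: c^2 + 3c."""
    return c * c + 3 * c


def best_case_bound(c: int) -> float:
    """Best-case upper bound with a single failure-inducing change: 2 * log2(c)."""
    return 2 * math.log2(c)


for c in (8, 1024):
    print(c, worst_case_tests(c), best_case_bound(c))
# 8 88 6.0
# 1024 1051648 20.0
```

For an input of 1,024 characters that is the difference between about a million test runs and twenty.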

Whether this “best case” efficiency applies depends on our ability to break down the input into smaller chunks that result in determined (or better: failing) test outcomes. Consequently, the more knowledge about the structure of the input we have, the better we can identify possibly failure-inducing subsets, and the better is the overall performance of ddmin. The surprising thing, however, is that even with no knowledge about the input structure at all, the ddmin algorithm has sufficient performance — at least in the case studies we have examined.

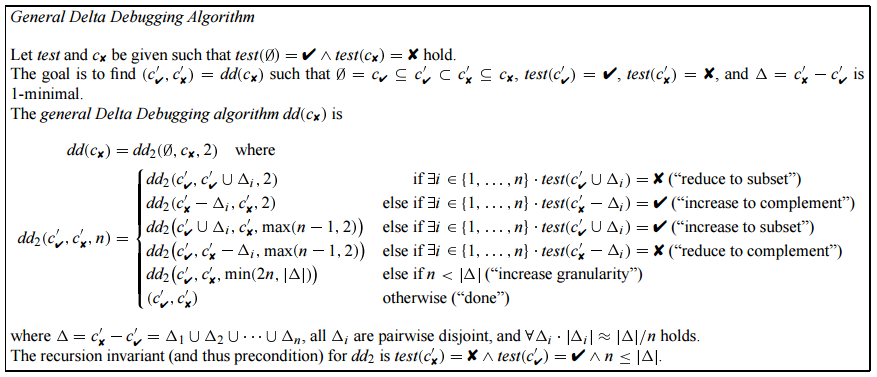

The General Delta Debugging Algorithm (dd)

The ddmin algorithm only moves in one direction – it always attempts to cut away from a failing input to make it smaller. Another option would be to take a passing test (that is a subset of the original input) and add to it to see if the addition reproduces the failure. In this way we can more quickly isolate the cause of failure.

To get the best efficiency, one can combine both approaches and narrow down the set of differences whenever a test passes or fails… It turns out that the original ddmin algorithm can easily be extended to compute a 1-minimal difference rather than a minimal test case. Besides reducing the failing test case whenever a test fails, we now also increase the passing test case whenever a test passes. At all times, the failing and passing test cases act as lower and upper bound of the search space, which is systematically narrowed—like in a branch-and-bound algorithm, except that there is no backtracking.

ddmin is extended to work on two sets at a time: a passing test case (start out with the empty set of ‘changes’) and the failing test case (start out with the full input that is to be reduced).

When computing subsets, instead of computing subsets simply of the failing test case, compute subsets Δ of the difference between the passing set and the failing set. Now we add two new rules:

- Test the passing set ⋃ Δi for each Δi. If we find a combination that passes then we have a larger passing test case and we can continue to the next iteration (reducing the difference between the new passing test case and the failing case).

- If none of these pass then test the failing set – Δi for each Δi. If we find a combination that passes then once again we have a larger passing test case…

“As a consequence of the additional rules, the ‘increase granularity’ rule only applies if all previous tests turn out unresolved.”
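A rough Python sketch of this two-sided search is shown below. It is an interpretation of the rules described above, not a transcription of the paper's definition: the names `split`, `dd`, and `oracle` are assumptions, changes are tracked as positions in the failing input, the empty input is assumed to pass, and every candidate is tested eagerly with no caching.

```python
from typing import Callable, List, Sequence, Tuple

PASS, FAIL, UNRESOLVED = "pass", "fail", "unresolved"


def split(items: list, n: int) -> List[list]:
    """Split items into n contiguous, non-empty chunks of near-equal size (n <= len)."""
    chunks, start = [], 0
    for i in range(n):
        end = start + (len(items) - start) // (n - i)
        chunks.append(items[start:end])
        start = end
    return chunks


def dd(failing: Sequence, test: Callable[[list], str]) -> Tuple[list, list, list]:
    """Return (passing input, failing input, difference between the two)."""
    items = list(failing)

    def run(indices: set) -> str:
        return test([items[i] for i in sorted(indices)])

    passing_set: set = set()               # assumption: the empty input passes
    failing_set = set(range(len(items)))   # the full input fails
    assert run(passing_set) == PASS and run(failing_set) == FAIL
    n = 2
    while True:
        delta = sorted(failing_set - passing_set)
        if len(delta) <= 1:
            break
        n = min(n, len(delta))
        subsets = [set(s) for s in split(delta, n)]
        added = [run(passing_set | s) for s in subsets]    # passing set plus a subset
        removed = [run(failing_set - s) for s in subsets]  # failing set minus a subset
        if FAIL in added:        # smaller failing test case
            failing_set, n = passing_set | subsets[added.index(FAIL)], 2
        elif PASS in removed:    # larger passing test case
            passing_set, n = failing_set - subsets[removed.index(PASS)], 2
        elif PASS in added:      # larger passing test case
            passing_set, n = passing_set | subsets[added.index(PASS)], max(n - 1, 2)
        elif FAIL in removed:    # smaller failing test case
            failing_set, n = failing_set - subsets[removed.index(FAIL)], max(n - 1, 2)
        elif n < len(delta):     # everything unresolved: increase granularity
            n = min(2 * n, len(delta))
        else:
            break
    difference = [items[i] for i in sorted(failing_set - passing_set)]
    return ([items[i] for i in sorted(passing_set)],
            [items[i] for i in sorted(failing_set)],
            difference)


# Hypothetical oracle: the program "crashes" when the text contains <SELECT>.
def oracle(chars: list) -> str:
    return FAIL if "<SELECT>" in "".join(chars) else PASS


passing, failing_case, difference = dd("<p><SELECT></p>", oracle)
print("".join(passing), "|", "".join(failing_case), "|", difference)
```

Every rule that fires strictly shrinks the difference between the two sets, so the loop terminates. With an oracle that never returns unresolved, as in this toy example, it ends with a passing and a failing input that differ by exactly one change.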

Here’s the full dd algorithm from the paper:

Delta Debugging vs Greedy Search

The Delta Debugging algorithm begins to sound like a greedy search… John Regehr made this observation in 2011 in his blog post ‘Generalizing and Criticizing Delta Debugging’.

Tomorrow we’ll look at an extension to Delta Debugging that both speeds it up and also increases its output quality on structured inputs…