Eraser: A dynamic data race detector for multi-threaded programs – Savage et al. 1997

Debugging a multithreaded program can be difficult. Simple errors in synchronization can produce timing-dependent data races that can take weeks or months to track down.

Eraser dynamically detects data races in multi-threaded programs. There are two basic approaches to doing this, one based on ‘happens-before’ relationships, and one based on ensuring consistent locking discipline. Eraser uses the latter strategy.

We have aimed Eraser specifically at the lock-based synchronization used in modern multithreaded programs. Eraser simply checks that all shared-memory accesses follow a consistent locking discipline. A locking discipline is a programming policy that ensures the absence of data races. For example, a simple locking discipline is to require that every variable shared between threads is protected by a mutual exclusion lock. We will argue that for many programs Eraser’s approach of enforcing a locking discipline is simpler, more efficient, and more thorough at catching races than the approach based on happens-before.

Let’s get some definitions out of the way, briefly look at happens-before (the prior art), and then take a look at the core lockset algorithm that Eraser uses.

Definitions

Lock:

A lock is a simple synchronization object used for mutual exclusion; it is either available, or owned by a thread. The operations on a lock mu are lock(mu) and unlock(mu). Thus it is essentially a binary semaphore used for mutual exclusion, but differs from a semaphore in that only the owner of a lock is allowed to release it.

Data Race:

A data race occurs when two concurrent threads access a shared variable and when at least one access is a write and the threads use no explicit mechanism to prevent the accesses from being simultaneous.

Happens-before

Happens-before is a partial order of all events of all threads in a concurrent execution. For any single thread, events are ordered in the order in which they occur.

Between threads, events are ordered according to the properties of the synchronization objects they access. If one thread accesses a synchronization object, and the next access to the object is by a different thread, then the first access is defined to happen before the second if the semantics of the synchronization object forbid a schedule in which these two interactions are exchanged in time.

For example, if one thread must release a lock before another thread can acquire it, then we have a happens-before relationship. Without some synchronization object to order the accesses, we are at the mercy of data races.

If two threads both access a shared variable, and the accesses are not ordered by the happens-before relation, then in another execution of the program in which the slower thread ran faster and/or the faster thread ran slower, the two accesses could have happened simultaneously; that is, a data race could have occurred, whether or not it actually did occur. All previous dynamic race detection tools that we know of are based on this observation.

The authors of the paper point out that whether a happens-before detector catches a race depends on the interleaving the scheduler happens to produce (see the easy-to-follow example in the paper). The Lockset algorithm used by Eraser does not have this limitation.
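To make the scheduling sensitivity concrete, here is a minimal sketch of a happens-before checker. It uses vector clocks, which is my choice for illustration and not something taken from the paper; the HBDetector class, its method names, and the replay helper are all hypothetical. The two schedules replay a small program in the spirit of the paper's example: both threads update v while holding mu, and both touch y outside the lock.

```python
from collections import defaultdict


def happens_before(a, b):
    """True if vector clock a is component-wise <= vector clock b."""
    return all(count <= b.get(thread, 0) for thread, count in a.items())


class HBDetector:
    """Toy happens-before race checker (hypothetical interface)."""

    def __init__(self):
        self.clock = defaultdict(lambda: defaultdict(int))  # thread -> vector clock
        self.lock_clock = defaultdict(dict)  # lock -> clock at its last release
        self.accesses = defaultdict(list)    # var -> [(thread, clock, is_write)]
        self.races = []

    def acquire(self, t, lock):
        # Acquiring a lock orders this thread after the previous release.
        for u, count in self.lock_clock[lock].items():
            self.clock[t][u] = max(self.clock[t][u], count)
        self.clock[t][t] += 1

    def release(self, t, lock):
        self.clock[t][t] += 1
        self.lock_clock[lock] = dict(self.clock[t])

    def access(self, t, var, is_write):
        self.clock[t][t] += 1
        now = dict(self.clock[t])
        for u, earlier, was_write in self.accesses[var]:
            # A race needs two threads, at least one write, and no ordering.
            if u != t and (is_write or was_write) and not happens_before(earlier, now):
                self.races.append(var)
        self.accesses[var].append((t, now, is_write))


def replay(schedule):
    detector = HBDetector()
    for t, op, arg in schedule:
        if op == "lock":
            detector.acquire(t, arg)
        elif op == "unlock":
            detector.release(t, arg)
        else:
            detector.access(t, arg, is_write=True)
    return detector.races


t1 = [("t1", "write", "y"), ("t1", "lock", "mu"), ("t1", "write", "v"), ("t1", "unlock", "mu")]
t2 = [("t2", "lock", "mu"), ("t2", "write", "v"), ("t2", "unlock", "mu"), ("t2", "write", "y")]

print(replay(t1 + t2))  # [] : the lock hand-off happens to order both writes to y
print(replay(t2 + t1))  # ['y'] : same program, other schedule, race on y is visible
```

The unprotected writes to y are a race in both runs, but the checker only sees it when thread 2 happens to run first.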

The Lockset Algorithm

The basic version of the algorithm seeks to ensure that every access to a shared variable is protected by some lock. Whatever that lock is (since we don’t know this up front), we want the lock to be held by any thread that accesses the variable. Eraser infers the protection relationship between lock and variable during program execution.

For each shared variable v, Eraser maintains the set C(v) of candidate locks for v. This set contains those locks that have protected v for the computation so far. That is, a lock l is in C(v) if, in the computation up to that point, every thread that has accessed v was holding l at the moment of the access. When a new variable v is initialized, its candidate set C(v) is considered to hold all possible locks. When the variable is accessed, Eraser updates C(v) with the intersection of C(v) and the set of locks held by the current thread. This process, called lockset refinement, ensures that any lock that consistently protects v is contained in C(v). If some lock l consistently protects v, it will remain in C(v) as C(v) is refined. If C(v) becomes empty this indicates that there is no lock that consistently protects v.

If locks_held(t) is the set of locks held by thread t, then the algorithm can be succinctly expressed as follows:

For each v, initialize C(v) to the set of all locks.

On each access to v by thread t,

set C(v) := the intersection of C(v) and locks_held(t);

if C(v) = { }, then issue a warning.

Which is pretty neat for something so simple!

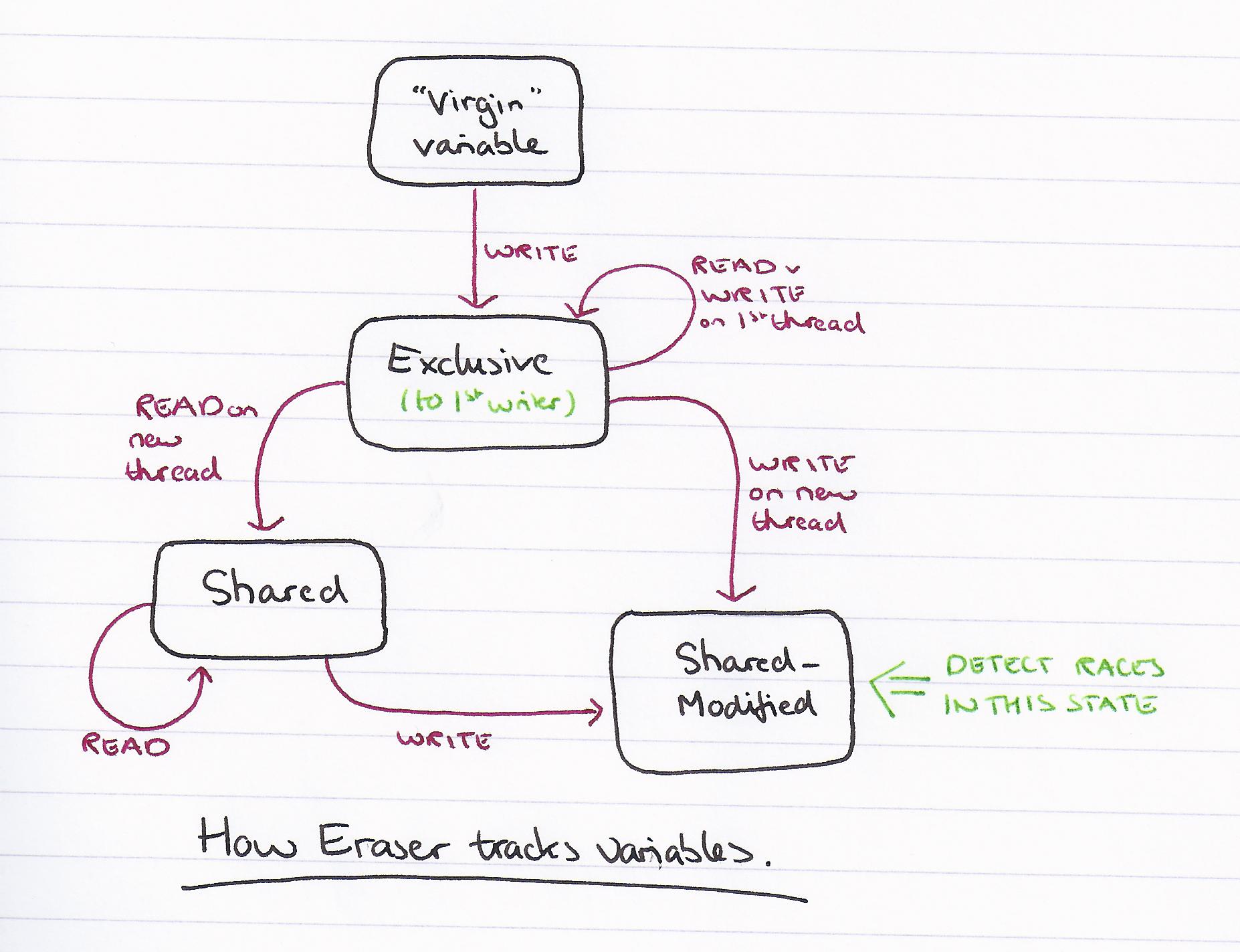
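The basic algorithm is small enough to sketch in a few lines of Python. This is an illustration only, not Eraser's implementation (which instruments binaries); the Lockset class and its method names are made up for the example, and "the set of all locks" is represented by simply not having an entry for v yet.

```python
from collections import defaultdict


class Lockset:
    """Toy version of the basic lockset algorithm (hypothetical interface)."""

    def __init__(self):
        self.locks_held = defaultdict(set)  # thread -> locks it currently holds
        self.candidates = {}                # v -> C(v); missing means "all locks"
        self.warnings = []

    def lock(self, t, mu):
        self.locks_held[t].add(mu)

    def unlock(self, t, mu):
        self.locks_held[t].discard(mu)

    def access(self, t, v):
        held = self.locks_held[t]
        if v not in self.candidates:
            # Intersecting "all locks" with held gives exactly held.
            self.candidates[v] = set(held)
        else:
            self.candidates[v] &= held
        if not self.candidates[v]:
            self.warnings.append(v)


# v is protected by mu1 on one access and by mu2 on the next:
# no single lock covers both, so C(v) becomes empty.
d = Lockset()
d.lock("t1", "mu1")
d.access("t1", "v")      # C(v) = {mu1}
d.unlock("t1", "mu1")
d.lock("t1", "mu2")
d.access("t1", "v")      # C(v) = {mu1} & {mu2} = {} -> warning
d.unlock("t1", "mu2")
print(d.warnings)        # ['v']
```

Note that the warning does not depend on which thread ran when: the inconsistent locking is visible in any schedule that executes both accesses.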

There are some refinements necessary to handle the case of initialization (which may often occur without locking), variables that are read-only after initialization (final variables) and can be safely read without locks, and read-write locks that allow multiple simultaneous readers but write exclusivity. These are all fairly easily dealt with:

- For initialization, reporting of warnings is deferred until after initialization. The end of initialization is taken as the point at which a second thread first accesses the variable.

- To support final variables, races are only reported after a variable has become write-shared by more than one thread.

- To deal with multiple reader, single writer variables, locks held purely in read mode are removed from the candidate set when a write occurs, as these locks do not protect against a race between the writer and some other reader thread:

Many programs use single-writer, multiple-reader locks as well as simple locks. To accommodate this style we introduce our last refinement of the locking discipline: we require that for each variable v, some lock m protects v, meaning m is held in write mode for every write of v, and m is held in some mode (read or write) for every read of v.
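The three refinements can be combined into one sketch. The four state names follow the state diagram in the paper as I read it; everything else (the RefinedLockset class, the write= flag on lock, the warnings list) is a hypothetical interface chosen for brevity, so treat this as an approximation rather than Eraser's actual bookkeeping.

```python
from collections import defaultdict

VIRGIN, EXCLUSIVE, SHARED, SHARED_MODIFIED = range(4)


class Var:
    def __init__(self):
        self.state = VIRGIN
        self.owner = None        # the only thread to have touched it so far
        self.candidates = None   # None stands for "all locks"


class RefinedLockset:
    """Toy lockset checker with initialization, read-sharing and rw-locks."""

    def __init__(self):
        self.read_held = defaultdict(set)   # thread -> locks held in read mode
        self.write_held = defaultdict(set)  # thread -> locks held in write mode
        self.vars = defaultdict(Var)
        self.warnings = []

    def lock(self, t, mu, write=True):
        (self.write_held if write else self.read_held)[t].add(mu)

    def unlock(self, t, mu):
        self.write_held[t].discard(mu)
        self.read_held[t].discard(mu)

    def access(self, t, name, is_write):
        var = self.vars[name]
        if var.state == VIRGIN:
            var.state, var.owner = EXCLUSIVE, t
            return
        if var.state == EXCLUSIVE:
            if t == var.owner:
                return  # still initializing: no refinement, no warnings
            var.state = SHARED_MODIFIED if is_write else SHARED
        elif var.state == SHARED and is_write:
            var.state = SHARED_MODIFIED
        # A write is only protected by locks held in write mode.
        if is_write:
            held = self.write_held[t]
        else:
            held = self.write_held[t] | self.read_held[t]
        var.candidates = set(held) if var.candidates is None else var.candidates & held
        # Read-only sharing is refined but never reported.
        if var.state == SHARED_MODIFIED and not var.candidates:
            self.warnings.append(name)


d = RefinedLockset()
d.access("t1", "config", is_write=True)    # unlocked initialization
d.access("t2", "config", is_write=False)   # read-only sharing, no lock
d.access("t3", "config", is_write=False)
print(d.warnings)                          # []

d.access("t1", "count", is_write=True)
d.lock("t2", "rw", write=False)
d.access("t2", "count", is_write=False)    # C(count) = {rw}
d.lock("t3", "rw", write=False)
d.access("t3", "count", is_write=True)     # write under a read-mode lock
print(d.warnings)                          # ['count']
```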

One issue with Eraser is that it can produce false alarms. These fall into three broad categories:

- Memory reuse: if a program allocates its own memory blocks and later on privately recycles them, Eraser has no way of knowing that the ‘new’ memory is now protected by a new set of locks.

- Private locks: a program may use a locking mechanism other than pthreads, which the Eraser implementation described in the paper does not instrument and so cannot see.

- Benign races: genuine races that do not affect the result of the program (some of these may be intentional).

Eraser introduced an annotation model to suppress unwanted warnings.

Not implemented in Eraser, but a useful extension, is the ability to detect deadlocks. The paper gives us a sketch of how this can work:

A simple discipline that avoids deadlock is to choose a partial order among all locks and to program each thread so that whenever it holds more than one lock, it acquires them in ascending order. This discipline is similar to the locking discipline for avoiding data races: it is suitable for checking by dynamic monitoring, and it is easier to produce a test case that exposes a breach of the discipline than it is to produce a test case that actually causes a deadlock.
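A checker for this discipline could look something like the sketch below. It assumes the chosen order is supplied up front as a rank per lock, which gives a total order and is therefore a simplification of the partial order the paper mentions; the LockOrderChecker class and the rank mapping are hypothetical.

```python
from collections import defaultdict


class LockOrderChecker:
    """Flags lock acquisitions that break a fixed ascending order."""

    def __init__(self, rank):
        self.rank = rank                 # lock name -> position in the order
        self.held = defaultdict(list)    # thread -> locks currently held
        self.violations = []

    def lock(self, t, mu):
        for h in self.held[t]:
            # Every lock already held must rank strictly below the new one.
            if self.rank[h] >= self.rank[mu]:
                self.violations.append((t, h, mu))
        self.held[t].append(mu)

    def unlock(self, t, mu):
        self.held[t].remove(mu)


checker = LockOrderChecker({"a": 1, "b": 2})

checker.lock("t1", "a")
checker.lock("t1", "b")     # ascending: fine
checker.unlock("t1", "b")
checker.unlock("t1", "a")

checker.lock("t2", "b")
checker.lock("t2", "a")     # descending: breach of the discipline
print(checker.violations)   # [('t2', 'b', 'a')]
```

The two threads never overlap here, so no deadlock could actually occur in this run, yet the breach is still reported. That is the point made in the quote: exposing a violation of the discipline is easier than provoking the deadlock itself.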

The results of checking several programs with Eraser are reported and, as expected, it does a good job of flushing out bugs!

A note on Valgrind

Today the most commonly used tool is arguably Valgrind, which provides data-race detection through its DRD tool. This is what the Valgrind manual has to say on the topic:

There exist two different approaches for verifying the correctness of multithreaded programs at runtime. The approach of the so-called Eraser algorithm is to verify whether all shared memory accesses follow a consistent locking strategy. And the happens-before data race detectors verify directly whether all interthread memory accesses are ordered by synchronization operations. While the last approach is more complex to implement, and while it is more sensitive to OS scheduling, it is a general approach that works for all classes of multithreaded programs. An important advantage of happens-before data race detectors is that these do not report any false positives.