The MADlib Analytics Library – MAD Skills, the SQL – Hellerstein et al. 2012

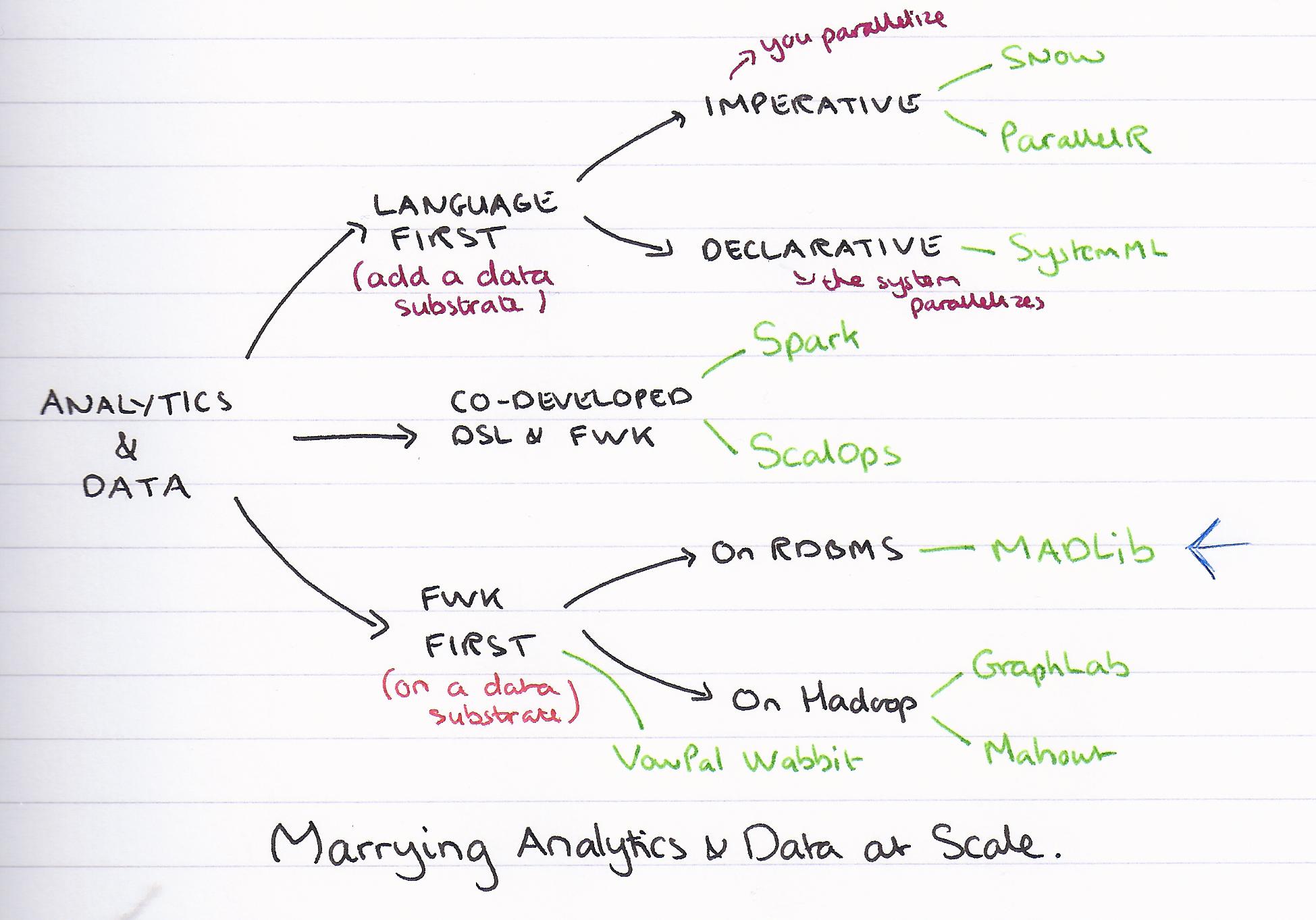

The way that we use large databases has evolved from being primarily in support of accounting and financial record-keeping, to primarily in support of predictive analytics over a wide range of potentially noisy data. Analytics at scale requires the marriage of scalable data platforms with analytic libraries. The section on related work contains a nice overview of the main approaches taken, together with representative systems in each category. I’ve sketched out this ‘family tree’ below. This was a 2012 paper – what new systems should we also include for a 2015 update?

The analytics use case looks different to the system-of-record use case:

Data scientists make use of database engines in a very different way than traditional data warehousing professionals. Rather than carefully designing global schemas and “repelling” data until it is integrated, they load data into private schemas in whatever form is convenient. Rather than focusing on simple OLAP-style drill-down reports, they implement rich statistical models and algorithms in the database, using extensible SQL as a language for orchestrating data movement between disk, memory, and multiple parallel machines. In short, for data scientists a DBMS is a scalable analytics runtime — one that is conveniently compatible with the database systems widely used for transactions and accounting.

From these observations came the MAD acronynm to describe the characteristics of this new platform (Magnetic, Agile, and Deep):

- Magnetic indicates that the platform attracts all kinds of data to it, rather than repelling data that doesn’t strictly conform to pre-conceived ideals

- Agile to represent the fact that data scientists use iterative, interactive processess for modeling, loading, and iterating on data, and

- Deep to reflect the statisticals models and algorithms being used.

MADlib is a library of analytic methods that can be installed and executed within a relational database engine that supports extensible SQL.

It is also an open source project with a website at MADlib.net. MADlib has continued to see healthy growth and development since the publication of this paper, with the 1.7 release coming out less than a month ago (Dec. 31st 2014).

In a first for The Morning Paper, you can even watch a video of Joe Hellerstein and Chris Ré explaining the background of the project and research:

One standard methodology in the domain of statistical analytics is called SEMMA, which stands for Sample, Explore, Modify, Model, Assess.

The ‘EMMA’ portion of this cycle identifies a set of fundamental tasks that an analyst needs to perform, but the first, “S” step makes less and less sense in many settings today…. Winning requires extracting advantages in the long tail of “special interests”.

While the Hadoop system has been evolving, we’ve also seen a resurgence of interest (and acknowledgement) of the important role that SQL has to play. “For these cases, it would be helpful to push statistical methods into the DBMS. And as we will see, massively parallel databases form a surprisingly useful platform for sophisticated analytics.”

Ideally, we would like MADlib methods to be written entirely in a straightforward and portable SQL. Unfortunately, the portable core of “vanilla” SQL is often not quite enough to express the kinds of algorithms needed for advanced analytics.

You need to be able to intelligently partition large matrices, and to quickly invoke well-tuned linear algebra methods. MADlib exploits user-defined aggregates (UDAs), user-defined functions (UDFs), and a sparse matrix C library to provide efficient representations on disk and in memory.

The most basic building block in the macro-programming of MADlib is the use of user-defined aggregates (UDAs). In general, aggregates—and the related window functions—are the natural way in SQL to implement mathematical functions that take as input the values of an arbitrary number of rows (tuples). DBMSs typically implement aggregates as data-parallel streaming algorithms. And there is a large body of recent work on online learning algorithms and model-averaging techniques that fit the computational model of aggregates well.

Many statistical methods are iterative – i.e. they make many passes over a data set. Several SQL workarounds are described (virtual tables, window aggregates, recursive queries) but none quite met the needs of MADlib. So a driver UDF was written in Python to control iteration in such a way that all large data movement is done within the database engine and its buffer pool.

What does all this look like to the end user?

Here’s a linear regression example:

psql# SELECT (linregr(y, x)).* FROM data; -[ RECORD 1

]+--------------------------------------------

coef | {1.7307,2.2428}

r2 | 0.9475

std_err | {0.3258,0.0533}

t_stats | {5.3127,42.0640}

p_values | {6.7681e-07,4.4409e-16} condition_no | 169.5093

The paper contains examples showing how linear regression, logistic regression, and k-Means are implemented in MADlib, as well as a discussion of contributions for convex optimisation and statistical text analytics.

The authors conclude:

Scalable analytics are a clear priority for the research and industrial communities. MADlib was designed to fill a vacuum for scalable analytics in SQL DBMSs, and connect database research to market needs. In our experience, a parallel DBMS provides a very efficient and flexible dataflow substrate for implementing statistical and analytic methods at scale.

If you want to see what MADlib can do now three years on, take a look at the list of modules in the MADlib documentation. You can even install MADlib in PostgreSQL and have a play!