Unveiling and quantifying Facebook exploitation of sensitive personal data for advertising purposes Cabañas et al., USENIX Security 2018

Earlier this week we saw how the determined can still bypass most browser and tracker-blocking extension protections to track users around the web. Today’s paper is a great example of why you should care about that. Cabañas et al. examine the extent to which the profile Facebook builds on its users includes sensitive personal data made available to advertisers for targeting. The work was done just before the GDPR came into force, which makes for very interesting timing from a legal perspective. The headline result is that Facebook appears to hold sensitive data on about 40% of the EU population, and that this data can be used by third parties to target individuals in sensitive demographics, and even to identify them, at a cost of as little as €0.015 per user.

What kinds of sensitive data are we talking about?

The GDPR definition of sensitive personal data is “data revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership, and the processing of genetic data, biometric data for the purpose of uniquely identifying a natural person, data concerning health or data concerning a natural person’s sex life or sexual orientation.”

As we’ve seen in previous editions of The Morning Paper, Facebook build profiles of their users based on their activities on Facebook and on third-party sites with Facebook trackers. Using the Facebook Ads Manager, you can target users based on their interests (aka ‘ad preferences’). The authors collected a total of 126,192 unique ad preferences which Facebook had assigned to real users. In amongst these 126K preferences are some that pertain to sensitive personal data. To find out how many, the authors selected five of the relevant GDPR categories and set out to match ad preferences against these categories.

- Data revealing racial or ethnic origin

- Data revealing political opinions

- Data revealing religious or philosophical beliefs

- Data concerning health

- Data concerning sex life and sexual orientation

One reason for believing that Facebook is tracking such information is that it was fined in Spain in 2017 for violating the Spanish implementation of the EU Data Protection Directive 95/46/EC (a GDPR predecessor). The Spanish Data Protection Agency claimed that Facebook ‘collects, stores and processes sensitive personal data for advertising purposes without obtaining consent from users.’ The amount of the fine? A paltry €1.2M, compared with Facebook’s 2017 revenues of $40.6B!

The Data Valuation Tool for Facebook Users (FDVT)

Data for the research comes from (consenting) users of the Data Valuation Tool for Facebook Users (FDVT). This is a browser extension that shows users how much money Facebook is earning from their activities on the Facebook site. The video on the linked page makes for very interesting viewing. We all know that advertising powers much of the internet, but it really brings it home to see the revenue you’re generating for Facebook ticking up in real time as you use the site. The FDVT also collects the ad preferences that Facebook has assigned to its users. The 126K unique ad preferences were obtained from 4,577 FDVT users between October 2016 and October 2017; the median user had been assigned 474 preferences.

Assessing the extent of potentially sensitive ad preferences

Starting with the set of 126K ad preferences, the team applied a two-stage filter to find those that reveal sensitive personal data. The first stage uses natural language processing (NLP) techniques to find interests whose name or disambiguation category (provided by Facebook) suggests they might be sensitive. The authors built a dictionary of 264 sensitive keywords and then used the spaCy Python package to compute the semantic similarity between each keyword and both the interest’s name and its disambiguation category. 4,452 ad preferences scored highly enough to pass this first filter.
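To make the first-stage filter concrete, here’s a minimal sketch. The paper used spaCy’s word-vector similarity (e.g. `Doc.similarity` with a vectors-bearing English model); to keep the sketch self-contained I’ve swapped in a much cruder stand-in, cosine similarity over character trigrams, which only captures spelling overlap, not meaning. The keyword list, example preferences, and the 0.5 threshold are all hypothetical.

```python
from collections import Counter
from math import sqrt


def trigrams(text: str) -> Counter:
    """Character trigrams of a lower-cased, space-padded string."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two trigram count vectors (stand-in for spaCy)."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = sqrt(sum(v * v for v in a.values()) * sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def first_stage_filter(preferences, keywords, threshold=0.5):
    """Keep (name, category) pairs whose best match against any keyword,
    using either the interest name or its disambiguation category,
    reaches the (hypothetical) threshold."""
    kept = []
    for name, category in preferences:
        score = max(
            max(cosine(trigrams(name), trigrams(kw)),
                cosine(trigrams(category), trigrams(kw)))
            for kw in keywords
        )
        if score >= threshold:
            kept.append((name, category, round(score, 2)))
    return kept


# Hypothetical ad preferences as (interest name, disambiguation category).
prefs = [("Homeopathy", "Medicine"), ("Basketball", "Sport"),
         ("Socialism", "Political ideology")]
print(first_stage_filter(prefs, ["homeopathy", "socialism", "politics"]))
```

A word-vector model would also catch semantically related names that share no spelling with any keyword, which is exactly why the authors used one; the trigram version only illustrates the threshold-and-keep structure of the stage.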

A panel of twelve researchers with some knowledge of privacy then manually classified the 4,452 remaining ad preferences. Each panelist assigned every ad preference to one of five sensitive categories (Politics, Health, Ethnicity, Religion, Sexuality) or to ‘Other’. Based on majority voting, 2,092 ad preferences were finally labeled as sensitive.
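The post doesn’t spell out the exact voting rule, so the sketch below assumes one plausible reading: an ad preference counts as sensitive if a strict majority of panelists put it in one of the five sensitive categories, and its label is the most-voted of those categories. The function names, the rule, and the example votes are my assumptions, not the paper’s code.

```python
from collections import Counter

# The five sensitive categories used by the panel; anything else is "Other".
SENSITIVE_CATEGORIES = {"Politics", "Health", "Ethnicity", "Religion", "Sexuality"}


def majority_label(votes: list[str]) -> str:
    """Label one ad preference from its panelists' votes (assumed rule).

    Sensitive only if a strict majority of panelists chose *some* sensitive
    category; the label is then the most-voted sensitive category.
    """
    sensitive_votes = [v for v in votes if v in SENSITIVE_CATEGORIES]
    if 2 * len(sensitive_votes) <= len(votes):
        return "Other"
    return Counter(sensitive_votes).most_common(1)[0][0]


def sensitive_preferences(panel_votes: dict[str, list[str]]) -> dict[str, str]:
    """Keep only the ad preferences whose majority label is sensitive."""
    labels = {pref: majority_label(votes) for pref, votes in panel_votes.items()}
    return {pref: label for pref, label in labels.items() if label != "Other"}


# Hypothetical votes from a twelve-person panel.
votes = {
    "Homeopathy": ["Health"] * 9 + ["Other"] * 3,
    "Basketball": ["Other"] * 12,
    "Socialist Party": ["Politics"] * 6 + ["Other"] * 6,  # a 6-6 tie is not a majority
}
print(sensitive_preferences(votes))  # {'Homeopathy': 'Health'}
```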

58.3% of the sensitive preferences relate to politics, 20.8% to religion, 18.2% to health, 1.5% to sexuality, and 1.1% to ethnicity.

The Facebook Ads Manager API was then used to quantify how many Facebook users in each EU country have been assigned at least one of the potentially sensitive ad preferences.
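One subtlety in “at least one of” is that audiences overlap, so you can’t simply add up the audience size of each preference; what’s needed is the size of the union. The toy sketch below models each preference’s audience as a set of hypothetical user IDs (the real Ads Manager only reports estimated audience sizes, never user IDs) and computes the fraction of Facebook users and the fraction of the population covered.

```python
def audience_fractions(pref_audiences, top_n_prefs, fb_users, population):
    """Return (fraction of FB users, fraction of population) tagged with at
    least one of `top_n_prefs`.

    `pref_audiences` maps each ad preference to a set of (hypothetical) user
    IDs. Taking the union counts each user once, however many of the
    preferences they carry.
    """
    reached = set().union(*(pref_audiences[p] for p in top_n_prefs))
    return len(reached) / fb_users, len(reached) / population


# Toy country with 10 FB users and a population of 20 (hypothetical numbers).
audiences = {"Pref A": {1, 2, 3}, "Pref B": {3, 4}, "Pref C": {5}}
naive_sum = sum(len(audiences[p]) for p in ["Pref A", "Pref B"])  # user 3 counted twice
print(naive_sum, audience_fractions(audiences, ["Pref A", "Pref B"], 10, 20))  # 5 (0.4, 0.2)
```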

The Results

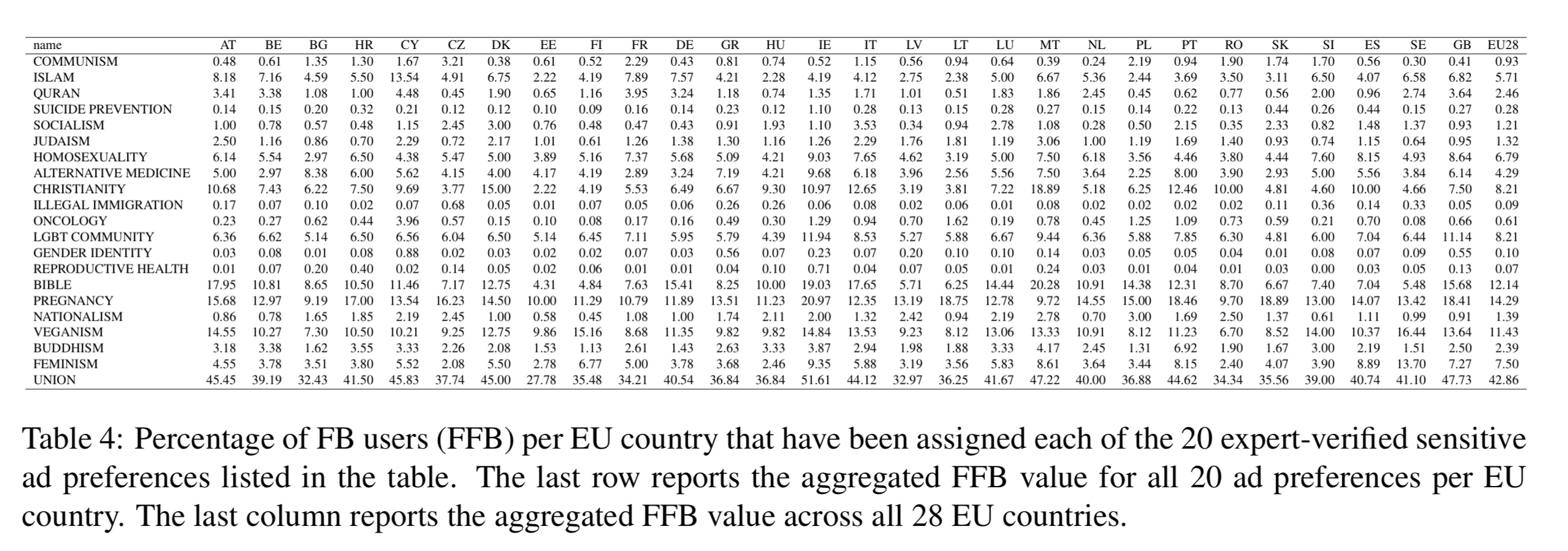

Results are reported both as a percentage of Facebook users, per country and for the EU overall (FFB(C, N) is the percentage of FB users in country C assigned at least one of the top N potentially sensitive ad preferences), and as a percentage of each country’s overall population (FC(C, N)). Here’s the data for every EU country with N=500.

We observe that 73% of EU FB users, which corresponds to 40% of EU citizens, are tagged with some of the top 500 potentially sensitive ad preferences in our dataset. If we focus on individual countries, FC(C,N=500) reveals that in 7 of them more than half of their citizens are tagged with at least one of the top 500 potentially sensitive ad preferences… These results suggest that a very significant part of the EU population can be targeted by ad campaigns based on potentially sensitive personal data.
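The two metrics are linked by each country’s Facebook penetration. Writing $U_C(N)$ for the set of Facebook users in country $C$ tagged with at least one of the top $N$ sensitive ad preferences, $FB_C$ for the number of Facebook users in $C$, and $P_C$ for its population (this notation is mine, not the paper’s), the definitions and the EU-wide figures quoted above give:

```latex
\begin{aligned}
FFB(C,N) &= \frac{|U_C(N)|}{FB_C}, \qquad
FC(C,N) = \frac{|U_C(N)|}{P_C} = FFB(C,N)\cdot\frac{FB_C}{P_C} \\[4pt]
0.40 &\approx 0.73 \cdot \frac{FB_{EU}}{P_{EU}}
\quad\Longrightarrow\quad
\frac{FB_{EU}}{P_{EU}} \approx \frac{0.40}{0.73} \approx 0.55
\end{aligned}
```

In other words, the two headline numbers together imply that roughly 55% of EU citizens are Facebook users, give or take the rounding in the published percentages.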

Expert verification

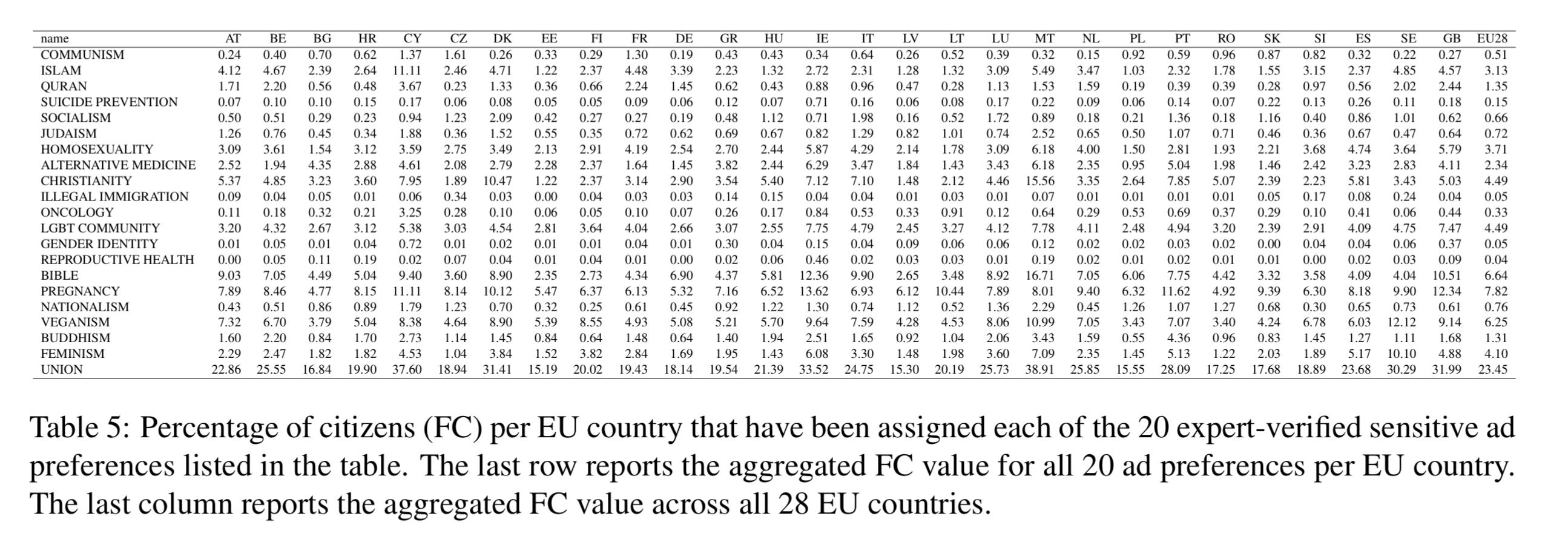

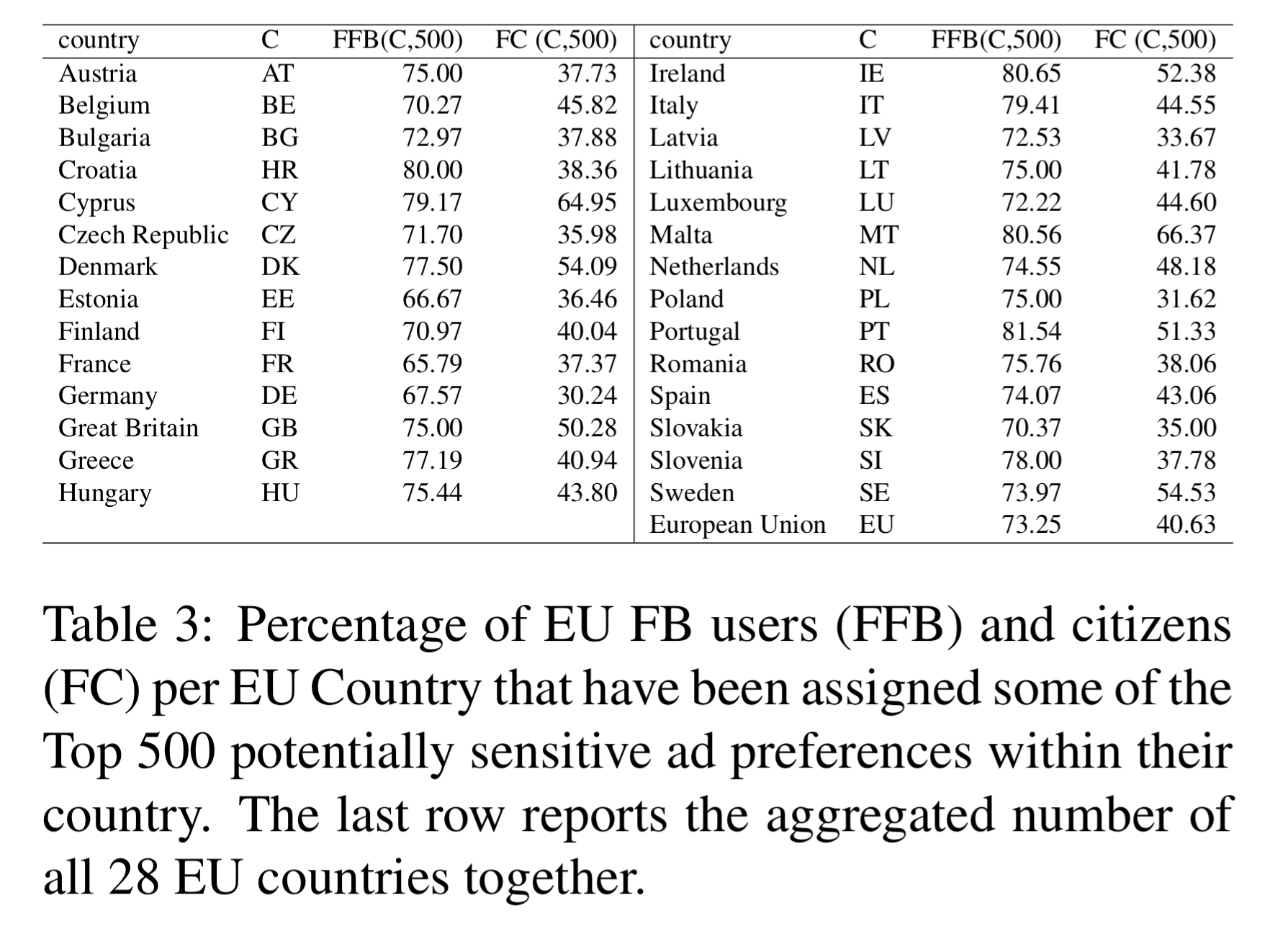

To further verify that the set of potentially sensitive ad preferences contained those likely to be relevant under the GDPR, an expert from the Spanish DPA reviewed a subset of 20 ad preferences that all panelists classified as sensitive. The expert confirmed the sensitive nature of the attribute in all cases. The following tables show the percentage of Facebook users, and overall citizens, tagged with at least one of these expert-verified sensitive attributes.

Exploitation

So far we know that Facebook holds sensitive data, but it doesn’t allow third parties to identify individual users directly based on it. You can, of course, use it to run advertising campaigns (that’s the whole point from Facebook’s perspective).

The authors give two examples of how the advertising mechanism could be abused. Firstly, an attacker could create hate campaigns targeting specific sensitive social groups. Campaigns can reach thousands of users at a very low cost (e.g., the authors reached more than 26K FB users with a spend of only €35). Such a campaign would of course violate Facebook’s advertising policies, and you would hope that the review process would catch such campaigns before they go live. At least in the context of dark ads, though, some undesirable adverts do seem to slip through the net.

The second example is an advertiser running a legitimate-looking campaign targeting a sensitive group that is actually a phishing-like attack. When users click on an advert from the campaign, they are taken to a phishing page that persuades them to give away some information revealing their identity. A recent study cited by the authors saw phishing attack success rates as high as 9%. At that rate, it would have cost the authors around €0.015 per user to identify an arbitrary member of a group.
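The €0.015 figure falls out of the numbers above with a little arithmetic. The sketch below assumes that the cost per reached user from the hate-campaign experiment (€35 for roughly 26K users) carries over to a phishing campaign, and that the 9% success rate applies to every reached user; those are my simplifying assumptions, and the paper’s exact cost model may differ.

```python
def cost_per_identified_user(spend_eur: float, users_reached: int,
                             success_rate: float) -> float:
    """Expected spend (EUR) to identify one user via ad-based phishing.

    Assumes (hypothetically) that every reached user is exposed to the
    phishing page and that a fixed fraction `success_rate` of them reveal
    their identity.
    """
    cost_per_reached_user = spend_eur / users_reached
    return cost_per_reached_user / success_rate


if __name__ == "__main__":
    # Figures from the post: ~26K users reached for EUR 35, 9% phishing success.
    per_reach = 35 / 26_000
    per_identified = cost_per_identified_user(35, 26_000, 0.09)
    print(f"cost per reached user:    EUR {per_reach:.5f}")       # EUR 0.00135
    print(f"cost per identified user: EUR {per_identified:.3f}")  # EUR 0.015
```

Since the authors reached *more than* 26K users, the true per-user cost would be slightly lower than this estimate.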

In summary, although Facebook does not allow third parties to identify individual users directly, ad preferences can be used as a very powerful proxy to perform identification attacks based on potentially sensitive personal data at low cost. Note that we have simply described this ad-based phishing attack but have not implemented it due to the ethical implications.

FDVT and sensitive data

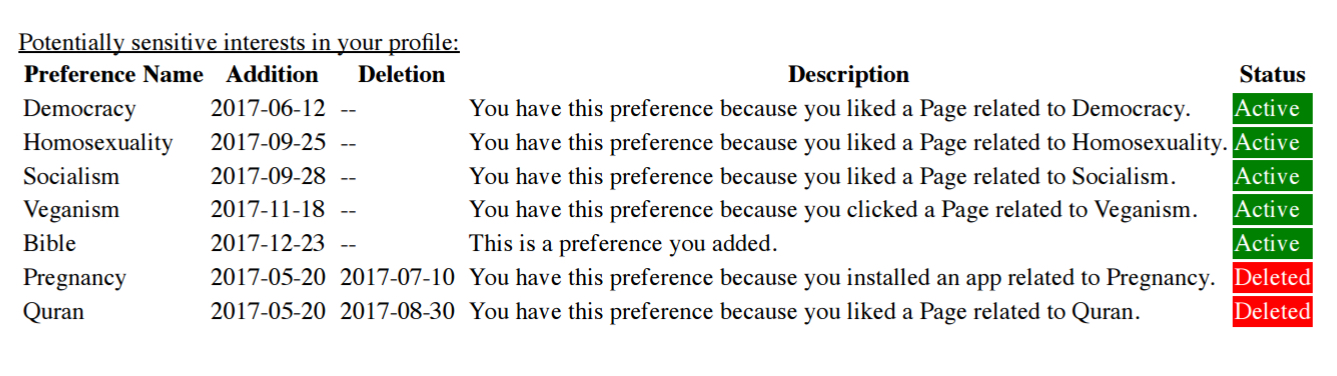

The FDVT has been extended to let users see the sensitive personal data attributes Facebook has associated with them, along with the reason Facebook gives.

The results of our paper urge a quick reaction from Facebook to eliminate all ad preferences that can be used to infer the political orientation, sexual orientation, health conditions, religious beliefs or ethnic origin of a user…