Zab: High-performance broadcast for primary-backup systems – Junqueira et al. 2011

This is part six of a ten-part series on consensus and replication.

This paper describes the atomic broadcast protocol that underpins Zookeeper. Rather than replicate operations as we’ve seen so far for Paxos and VR, Zab replicates state changes.

Critical to the design of Zab is the observation that each state change is incremental with respect to the previous state, so there is an implicit dependence on the order of the state changes. State changes consequently cannot be applied in any arbitrary order, and it is critical to guarantee that a prefix of the state changes generated by a given primary are delivered and applied to the service state.

Zab is also designed for high performance, which leads to a desire to have multiple outstanding transactions simultaneously. The argument made is that the combination of multiple outstanding ttransactions plus the incremental state property cannot be addressed by Paxos. The situation does not seem quite as black and white to me as the paper makes out though:

It is important in our setting that we enable multiple outstanding ZooKeeper operations and that a prefix of operations submitted concurrently by a ZooKeeper client are committed according to FIFO order. Traditional protocols to implement replicated state machines, like Paxos, do not enable such a feature directly, however. If primaries propose transactions individually, then the order of learned transactions might not satisfy the order dependencies and consequently the sequence of learned transactions cannot be used unmodified. One known solution to this problem is batching multiple transactions into a single Paxos proposal and having at most one outstanding proposal at a time. Such a design affects either throughput or latency adversely depending on the choice of the batch size.

There follows an example in which three different proposers make proposals for three consecutive sequence numbers. The latter proposals are accepted, but the first fails to achieve a quorum and is later superceded by an alternate proposal. Due to the FIFO sequencing requirement (incremental state dependencies), this violates the Zookeeper requirement.

Now, Lamport himself in Paxos Made Simple states that a single distinguished proposer is required (so we don’t ordinarily have multiple proposers making proposals), and we know that this distinguished proposer (or a VR primary in VR) can sequence client transactions. Furthermore, Lamport also describes multiple rounds of Paxos executing simultaneously. If learners don’t apply an operation from their logs until all preceding operations have also been applied, this would seem on the surface to give us what Zab requires. And under normal operation I believe it would. So why then is Zab worried about “If primaries propose transactions individually…?” Not because they want to enable this for performance reasons, since Zab itself also uses a primary-backup model. The gap left by Paxos as I see it is therefore in the situation when the distinguished proposer fails with outstanding but unaccepted proposals. This can lead to gaps in the sequence, which a new distinguished proposer will fill with no-op operations.

The Zab ordering requirement is called primary ordering.

…we need to satisfy one more property to enable multiple changes in progress from a given primary. Since each state change is based on a previous state if the change for that previous state is skipped, the dependent changes must also be skipped. We call this property primary order, and we split it into two parts: local primary order and global primary order.

- Local primary order says that if a primary broadcasts m1 before m2, then any process that delivers m2 has to have delivered m1 first.

- Global primary order says that if a primary broadcasts m1, and then the primary changes and the new primary broadcasts m2, then any process that delivers both m1 and m2 has to deliver m1 first. Note this rule permits delivery of m2 but not m1 in this circumstance.

… transactions sent by different primaries are not necessarily considered as causally related even if they are actually sent by the same process.

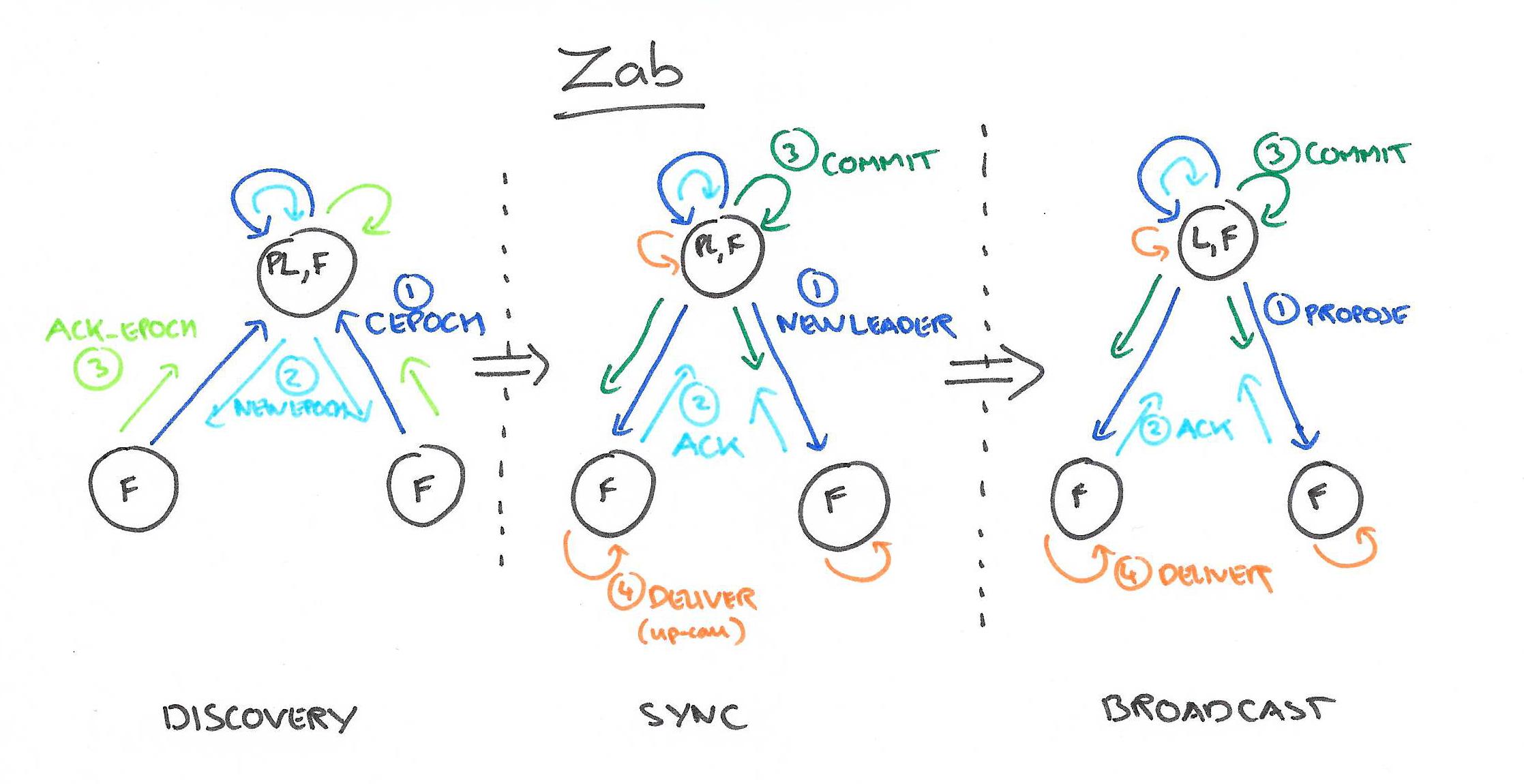

Now we’ve got the motivation out of the way, let’s take a look at how the core Zab protocol works. There are three phases: discovery, synchronization, and broadcast (normal operation). It’s the synchronization phase that makes Zab different:

To guarantee primary order despite primary crashes, Zab implements three phases. One particular phase critical to guarantee that the property is satisfied is synchronization. Upon a change of primary, a quorum of processes has to execute a synchronization phase before the new primary broadcasts new transactions. Executing this phase guarantees that all transactions broadcast in previous epochs that have been or will be chosen are in the initial history of transactions of the new epoch.

The paper contains an analysis of the correctness of the algorithm, and an evaluation of its implementation. Here I’m going to focus on the core protocol itself.

There are two roles a Zab process can perform according to the protocol: leader and follower. A leader concurrently executes the primary role and proposes transactions according to the order of broadcast calls of the primary. Followers accept transactions according to the steps of the protocol. A leader also executes the steps of a follower.

In addition, each process implements a leader oracle to determine which other processes it should follow (see for example the round-robin scheme of Viewstamped Replication Revisited for one way this could work). If the leader oracle of a process determines it is the leader, than that process executes the leader steps of the protocol – this is not enough to establish its leadership though, phase 2 (synchronization) does that.

A Zab process can be looking for a leader (ELECTION state), following (FOLLOWING state), or leading (LEADING state). When a process starts, it enters the ELECTION state. While in this state the process tries to elect a new leader or become a leader. If the process finds an elected leader, it moves to the FOLLOWING state and begins to follow the leader. Processes in the FOLLOWING state are followers. If the process is elected leader, it moves to the LEADING state and becomes the leader. Given that a process that leads also follows, states LEADING and FOLLOWING are not exclusive. A follower transitions to ELECTION if it detects that the leader has failed or relinquished leadership, while a leader transitions to ELECTION once it observes that it no longer has a quorum of followers supporting its leadership.

An iteration of the algorithm proceeds through all three phases. Note that Zab re-establishes TCP connections between processes with every iteration, thus ensuring messages from older iterations will no longer be delivered.

Discovery

- Followers send a current epoch message (CEPOCH) to the prospective leader (as chosen by the oracle), which includes the epoch number of the last new epoch (NEWEPOCH) messasge they acknowledged if any.

- Once the prospective leader has received CEPOCH messages from a quorum of followers it assigns a new epoch number which is greater than any sent by the followers in those messages, and sends a NEWEPOCH message to each member of the quorum including this new epoch number.

- When a follower receives this NEWEPOCH message, it acknowledges it if the epoch number is greater than that of the last epoch it previously acknowledged. The epoch acknowledgement message sent by the following includes the current epoch of the follower, as well as the follower’s transaction history.

An optimisation is recommended:

Given that the history of a follower can be arbitrarily long, it is not efficient to send the entire history in a ACK-E. The last zxid of a follower is sufficient for the prospective leader to determine if it needs to copy transactions from any given follower, and only copies missing transactions.

- When the proposed leader has received an epoch acknowledgement from each member of the quorum, it chooses the most up to date history from amongst all those sent in the acknowledgements to become the initial history of the new epoch. The most up to date history is the one with the highest epoch number, and within that epoch, the highest transaction id.

Synchronization

A new epoch has now begun, with a new proposed leader. The synchronization step confirms this leadership.

An elected leader does not become established for a given epoch e until it completes Phase 2, in which it successfully achieves consensus on the proposal history and on itself as the leader of e.

- The proposed leader sends a new leader (NEWLEADER) message to each member of the quorum containing the epoch number of the newly launched epoch, together with the initial history for that epoch.

- When a follower receives a NEWLEADER message, it checks the epoch number against the epoch number it last acknowledged. If these are different, it starts a new iteration of the protocol. In the expected case of course they will be the same, and the follower proceeds by accepting all transactions in the initial history and setting its own history to match. It then acknowledges the new leader proposal.

- Once the proposed leader has received a quorum of acknowledgements it is established as the new leader, and sends a commit message to all followers.

- When a follower receives this commit message, it delivers (cf. VR’s service up-call) each transaction in the initial history in order.

Broadcast

This is the normal phase that the system stays in until a problem is suspected:

To mutually detect crashes in a fine-grained and convenient manner, avoiding operating system reconfiguration, leader and followers exchange periodic heartbeats. If the leader does not receive heartbeats from a quorum of followers within a timeout interval, the leader renounces leadership of the epoch, and transitions to the ELECTION state. Once it elects a leader, it starts a new iteration of the algorithm, and starts a new iteration of the protocol proceeding to Phase 1… A follower only follows one leader at a time and stays connected to a leader as long as it receives heartbeats within a timeout interval.

The basics of the broadcast phase should seem familiar by now: the leader proposes transactions in increasing order of transaction id. For each proposal the leader waits until a quorum of acknowledgements have been received, and then sends a commit message for that transaction. Note that Zab’s communication medium guarantees in-order message delivery.

Followers accept transactions in the order that they receive them (transaction id order), and deliver those transactions once they receive the commit message and have delivered all previously accepted transactions with lower ids.

During this phase the leader may receive a CEPOCH message from a follower (for example, from a process that is recovering from failure). If so, it proposes back NEWEPOCH with the current epoch number, and NEWLEADER with the current epoch history. If it receives an acknowledgement of this new leader proposal, then the leader sends back a commit message.

Note that a follower and a leader follow the recovery protocol both when a new leader is emerging and when a follower connects to an established leader. If the leader is already established, the NEWLEADER proposal has already been committed so any acknowledgements for the NEWLEADER proposal are ignored.

This last part of the protocol seems under-specified to me in this version of the paper (of course, it’s very possible the details are there and I just can’t spot them!). The whole purpose of phase 2 (synchronization) is to get the whole group on the same page in the face of multiple outstanding transactions. So in phase 3, when the CEPOCH exchange occurs with an existing leader we need to preserve this property. Does the leader not propose any new transactions after sending back NEWLEADER with the current history until an acknowledgement is received (which would of course prevent progress until a timeout in the worst case)? Or is there an implied catch-up mechanism so that any deltas to the history between NEWLEADER and the subsequent commit can be received by the new follower? I suspect the latter. Such a copy mechanism is described informally for the leader to catch-up during synchronization:

The last zxid of a follower is sufficient for the prospective leader to determine if it needs to copy transactions from any given follower, and only copies missing transactions.